Date: 2019-12-26 18:30:06 CET, cola version: 1.3.2


First the variable is renamed to res_list.

res_list = rl

All available functions which can be applied to this res_list object:

res_list

#> A 'ConsensusPartitionList' object with 24 methods.

#> On a matrix with 4116 rows and 72 columns.

#> Top rows are extracted by 'SD, CV, MAD, ATC' methods.

#> Subgroups are detected by 'hclust, kmeans, skmeans, pam, mclust, NMF' method.

#> Number of partitions are tried for k = 2, 3, 4, 5, 6.

#> Performed in total 30000 partitions by row resampling.

#>

#> Following methods can be applied to this 'ConsensusPartitionList' object:

#> [1] "cola_report" "collect_classes" "collect_plots" "collect_stats"

#> [5] "colnames" "functional_enrichment" "get_anno_col" "get_anno"

#> [9] "get_classes" "get_matrix" "get_membership" "get_stats"

#> [13] "is_best_k" "is_stable_k" "ncol" "nrow"

#> [17] "rownames" "show" "suggest_best_k" "test_to_known_factors"

#> [21] "top_rows_heatmap" "top_rows_overlap"

#>

#> You can get result for a single method by, e.g. object["SD", "hclust"] or object["SD:hclust"]

#> or a subset of methods by object[c("SD", "CV")], c("hclust", "kmeans")]

The call of run_all_consensus_partition_methods() was:

#> run_all_consensus_partition_methods(data = m, mc.cores = 4, anno = anno, anno_col = anno_col)

Dimension of the input matrix:

mat = get_matrix(res_list)

dim(mat)

#> [1] 4116 72

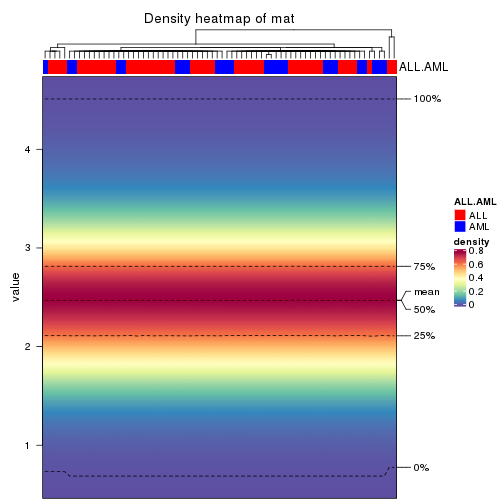

The density distribution of each sample is visualized as one column in the following heatmap. Columns are clustered using the Kolmogorov-Smirnov statistic between two distributions as the distance.

library(ComplexHeatmap)

densityHeatmap(mat,
    top_annotation = HeatmapAnnotation(df = get_anno(res_list), col = get_anno_col(res_list)),
    ylab = "value", cluster_columns = TRUE, show_column_names = FALSE,
    mc.cores = 4)
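The Kolmogorov-Smirnov distance mentioned above can be illustrated in base R. This is only a sketch of the idea on simulated data, not the internal code of `densityHeatmap()`; the matrix `toy`, its dimensions and the choice of two groups are assumptions made for the example.

```r
# Toy stand-in for the real matrix: 200 rows, 6 samples (assumed data).
set.seed(1)
toy <- cbind(matrix(rnorm(200 * 3), ncol = 3),
             matrix(rnorm(200 * 3, mean = 2), ncol = 3))
colnames(toy) <- paste0("s", 1:6)

# Pairwise Kolmogorov-Smirnov statistic between the sample distributions.
n <- ncol(toy)
ks_dist <- matrix(0, n, n, dimnames = list(colnames(toy), colnames(toy)))
for (i in seq_len(n - 1)) {
  for (j in (i + 1):n) {
    d <- suppressWarnings(ks.test(toy[, i], toy[, j])$statistic)
    ks_dist[i, j] <- ks_dist[j, i] <- d
  }
}

# Hierarchical clustering on that distance, cut into two groups.
hc <- hclust(as.dist(ks_dist))
groups <- cutree(hc, k = 2)
print(groups)
```

Samples whose value distributions have a similar shape and location get a small distance and end up next to each other in the clustered heatmap.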

The following table shows the best k (number of partitions) for each combination of top-value methods and partition methods. Clicking on the method name in the table goes to the section for a single combination of methods.

The cola vignette explains the definition of the metrics used for determining the best number of partitions.

suggest_best_k(res_list)

| Method | The best k | 1-PAC | Mean silhouette | Concordance | | Optional k |
|---|---|---|---|---|---|---|
| ATC:kmeans | 2 | 1.000 | 0.982 | 0.992 | ** | |
| ATC:NMF | 2 | 0.972 | 0.927 | 0.973 | ** | |
| ATC:skmeans | 3 | 0.961 | 0.932 | 0.973 | ** | 2 |
| MAD:skmeans | 3 | 0.866 | 0.872 | 0.946 | | |
| CV:skmeans | 3 | 0.837 | 0.857 | 0.938 | | |
| MAD:mclust | 2 | 0.824 | 0.927 | 0.932 | | |
| SD:skmeans | 3 | 0.745 | 0.836 | 0.918 | | |
| CV:kmeans | 3 | 0.739 | 0.864 | 0.901 | | |
| MAD:NMF | 2 | 0.680 | 0.811 | 0.925 | | |
| SD:kmeans | 2 | 0.662 | 0.836 | 0.911 | | |
| MAD:pam | 2 | 0.651 | 0.824 | 0.925 | | |
| SD:mclust | 5 | 0.634 | 0.721 | 0.790 | | |
| SD:NMF | 2 | 0.633 | 0.823 | 0.928 | | |
| CV:NMF | 2 | 0.633 | 0.787 | 0.916 | | |
| MAD:kmeans | 2 | 0.623 | 0.805 | 0.904 | | |
| ATC:pam | 2 | 0.597 | 0.808 | 0.918 | | |
| ATC:hclust | 2 | 0.574 | 0.813 | 0.911 | | |
| SD:pam | 2 | 0.548 | 0.686 | 0.876 | | |
| CV:pam | 2 | 0.546 | 0.784 | 0.904 | | |
| CV:mclust | 2 | 0.489 | 0.908 | 0.895 | | |
| ATC:mclust | 2 | 0.360 | 0.711 | 0.828 | | |
| SD:hclust | 2 | 0.236 | 0.777 | 0.851 | | |
| MAD:hclust | 2 | 0.227 | 0.737 | 0.838 | | |
| CV:hclust | 2 | 0.214 | 0.570 | 0.805 | | |

**: 1-PAC > 0.95, *: 1-PAC > 0.9
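The legend can be read as a simple thresholding rule on the 1-PAC column. The sketch below applies it to three values copied from the table; `stability_mark()` is a hypothetical helper written for this illustration, not a cola function.

```r
# 1-PAC values copied from three rows of the table above.
one_minus_pac <- c("ATC:kmeans" = 1.000, "ATC:skmeans" = 0.961, "MAD:skmeans" = 0.866)

# Hypothetical helper: map a 1-PAC value to the mark used in the legend.
stability_mark <- function(x) {
  ifelse(x > 0.95, "**", ifelse(x > 0.9, "*", ""))
}

marks <- stability_mark(one_minus_pac)
print(marks)
```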

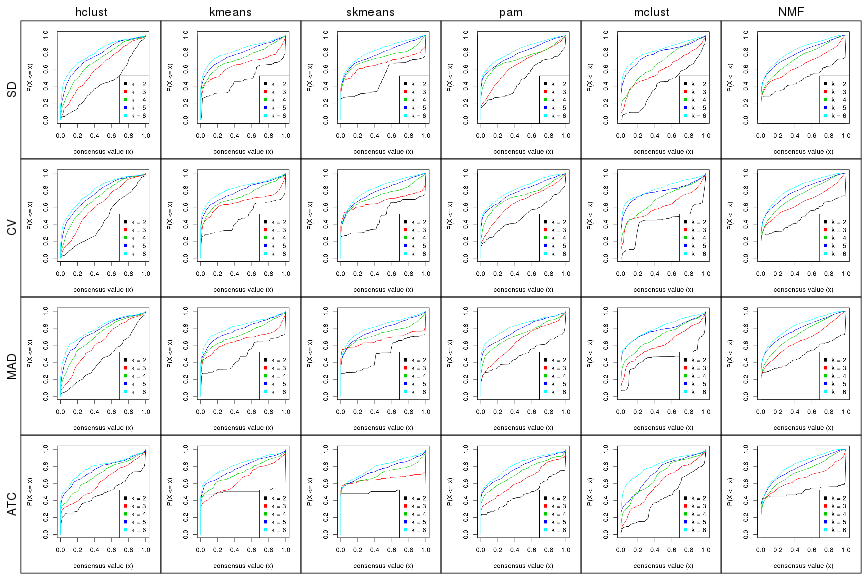

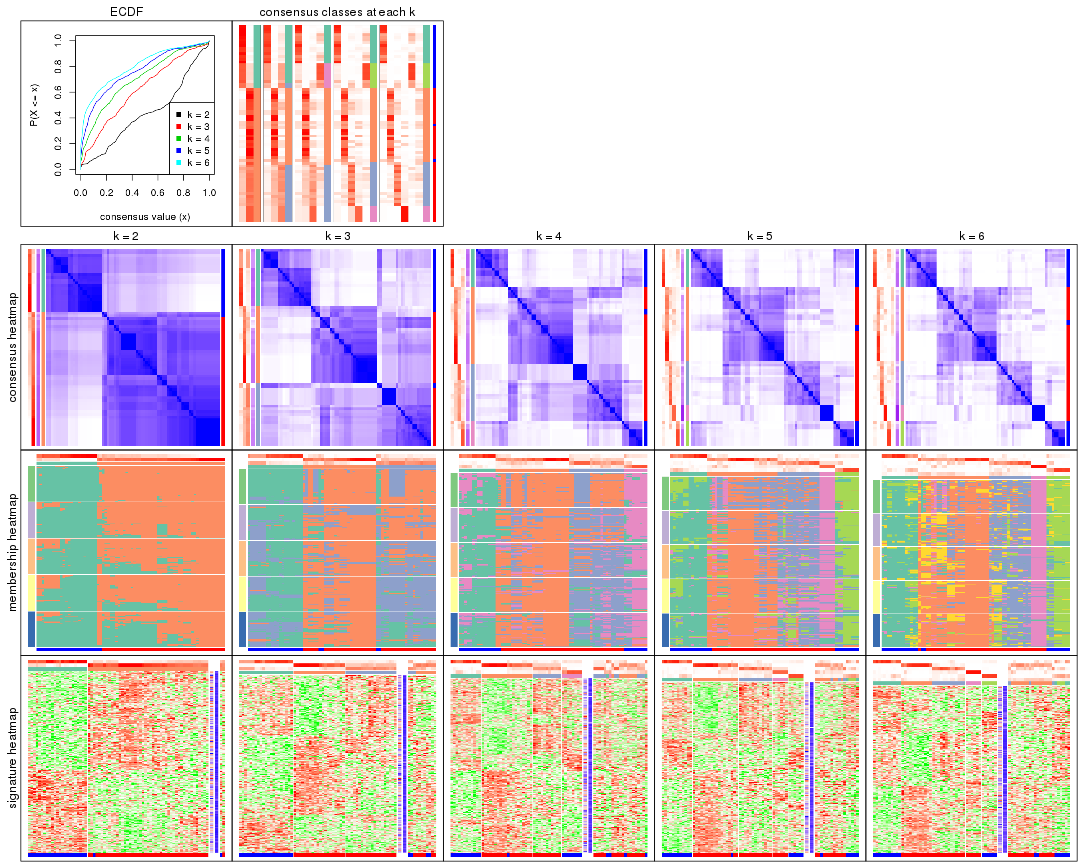

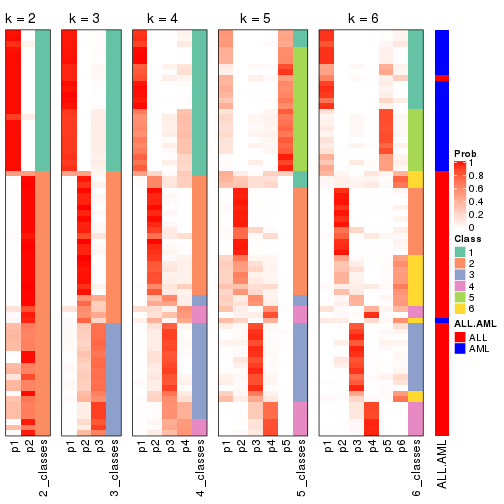

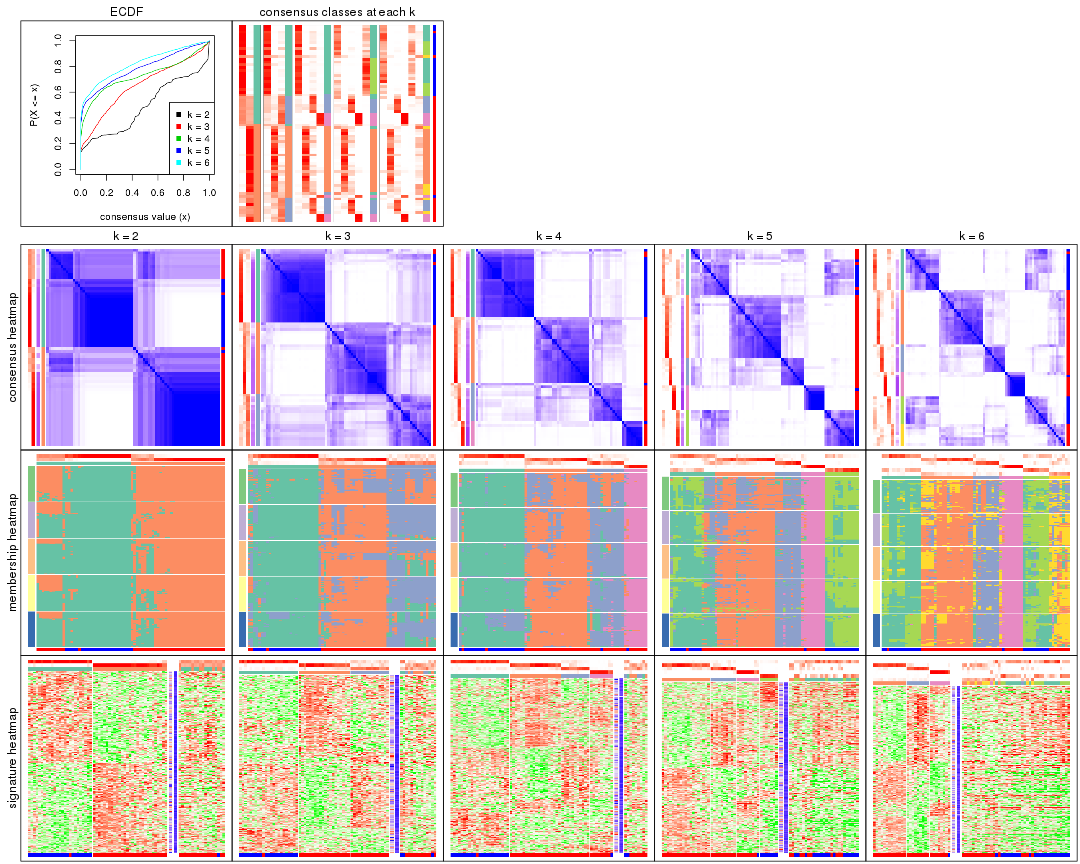

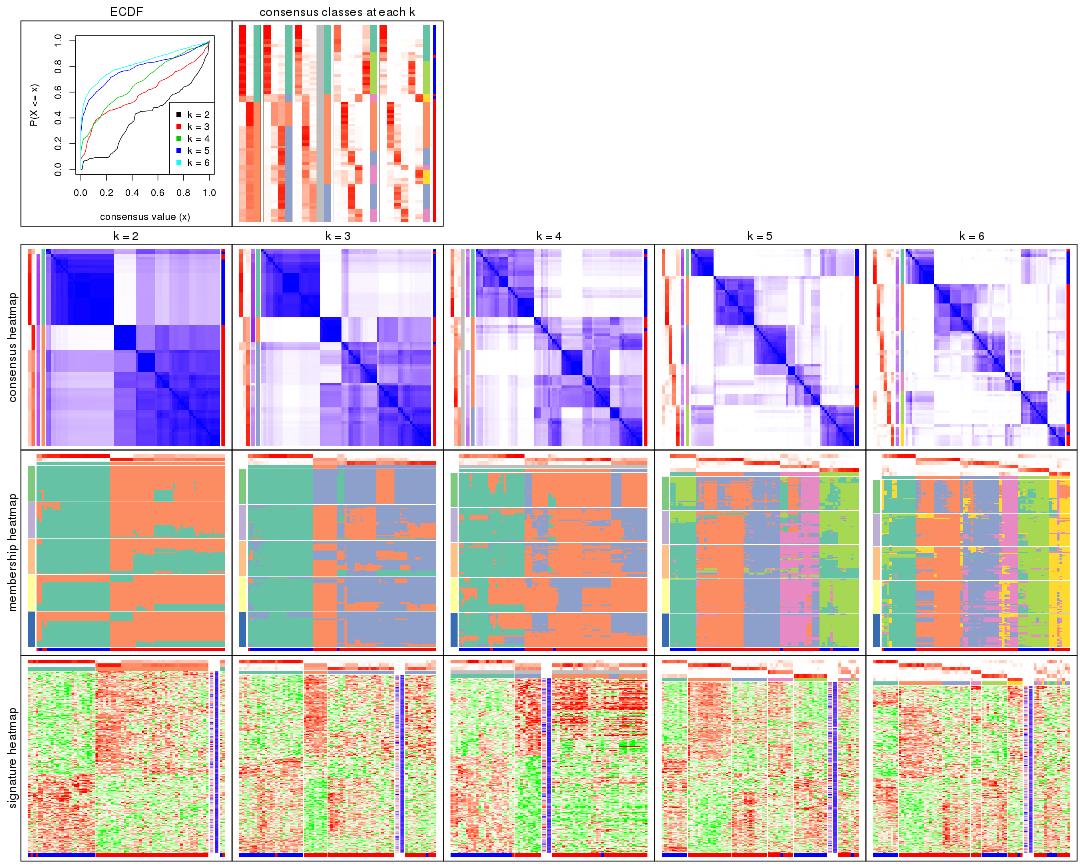

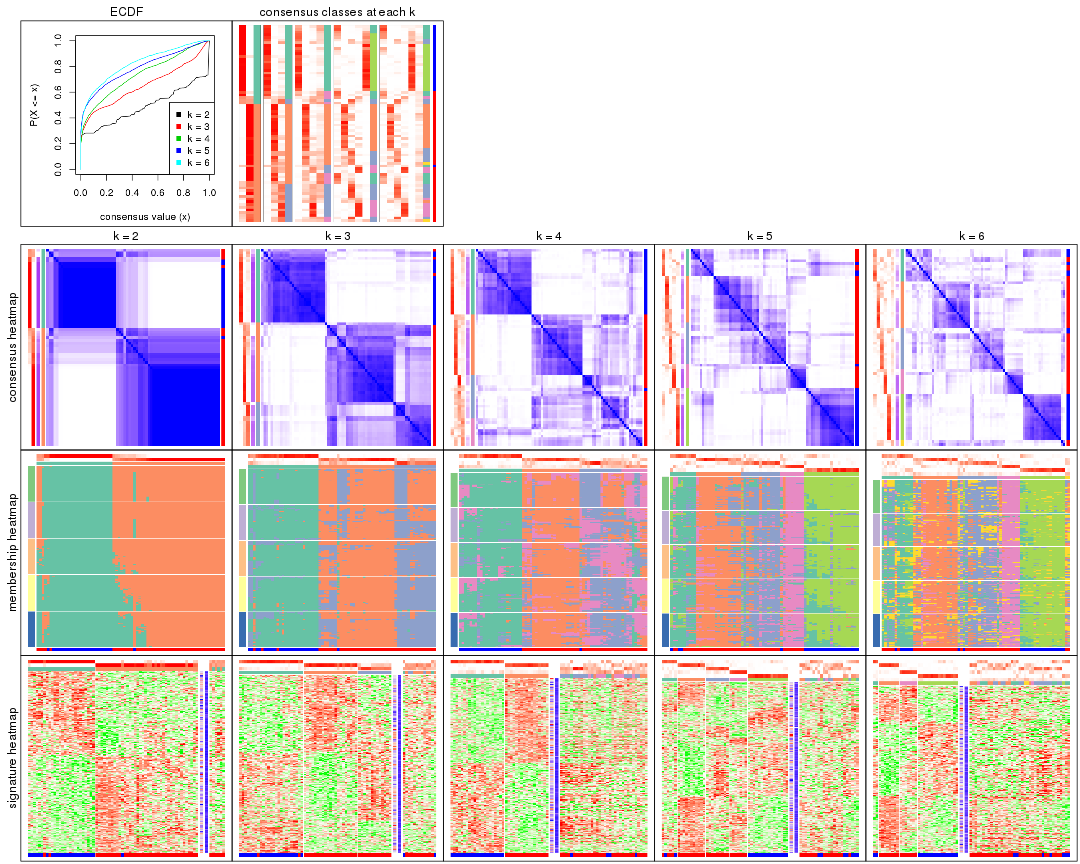

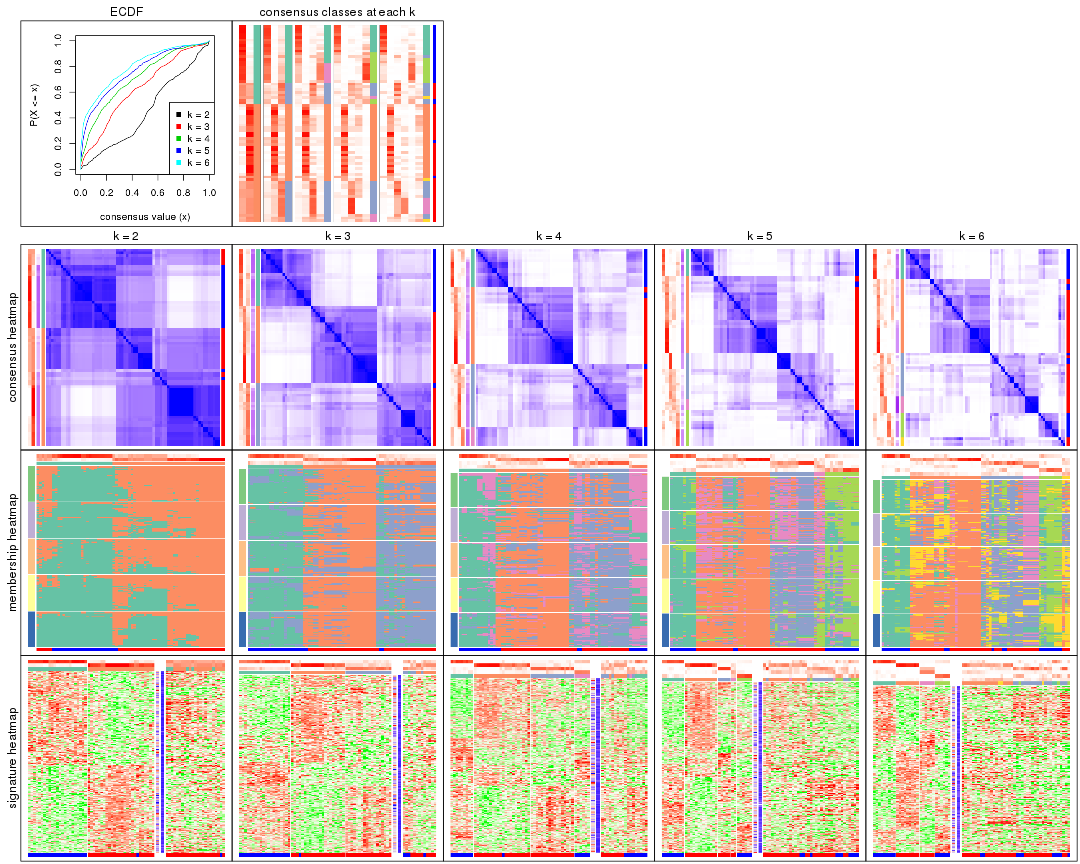

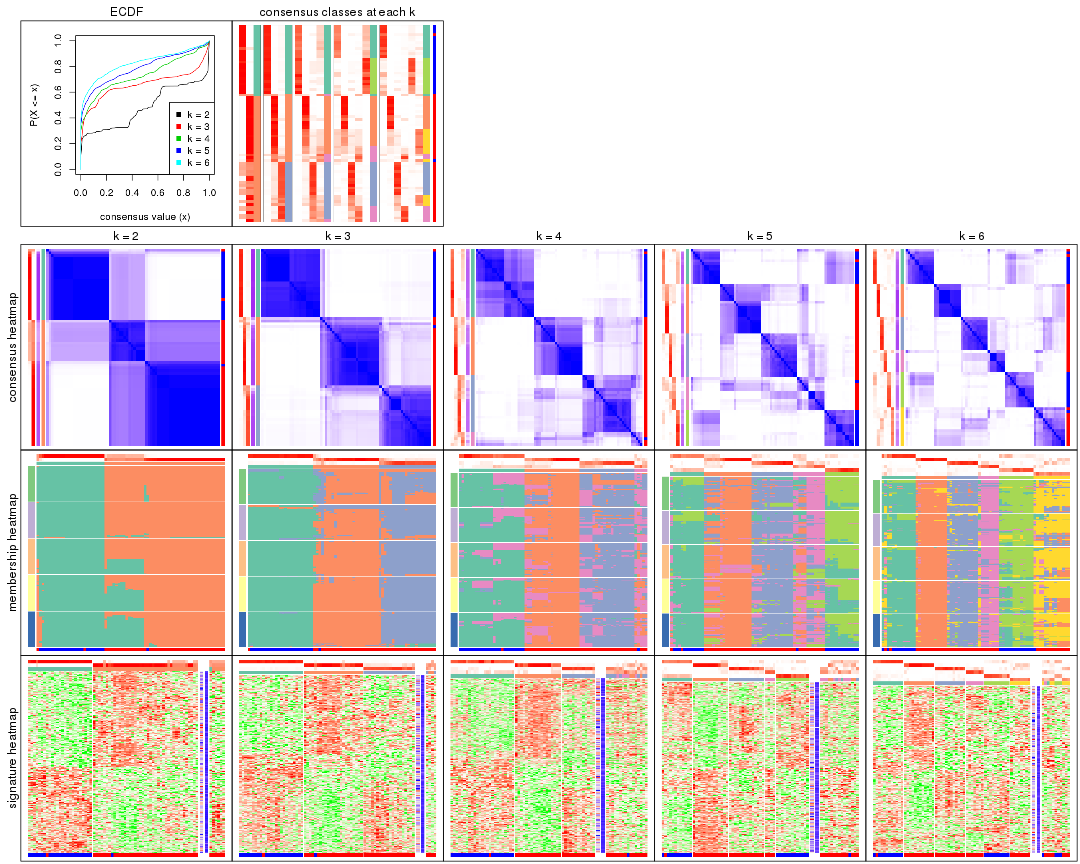

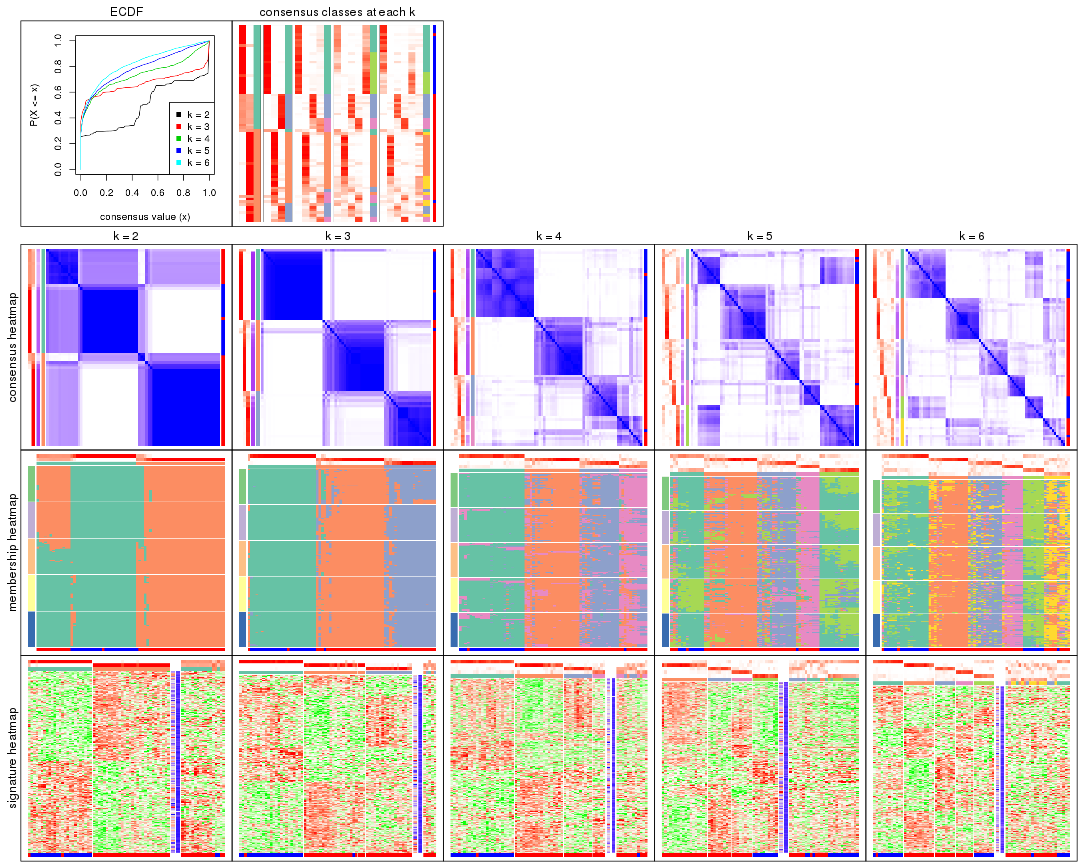

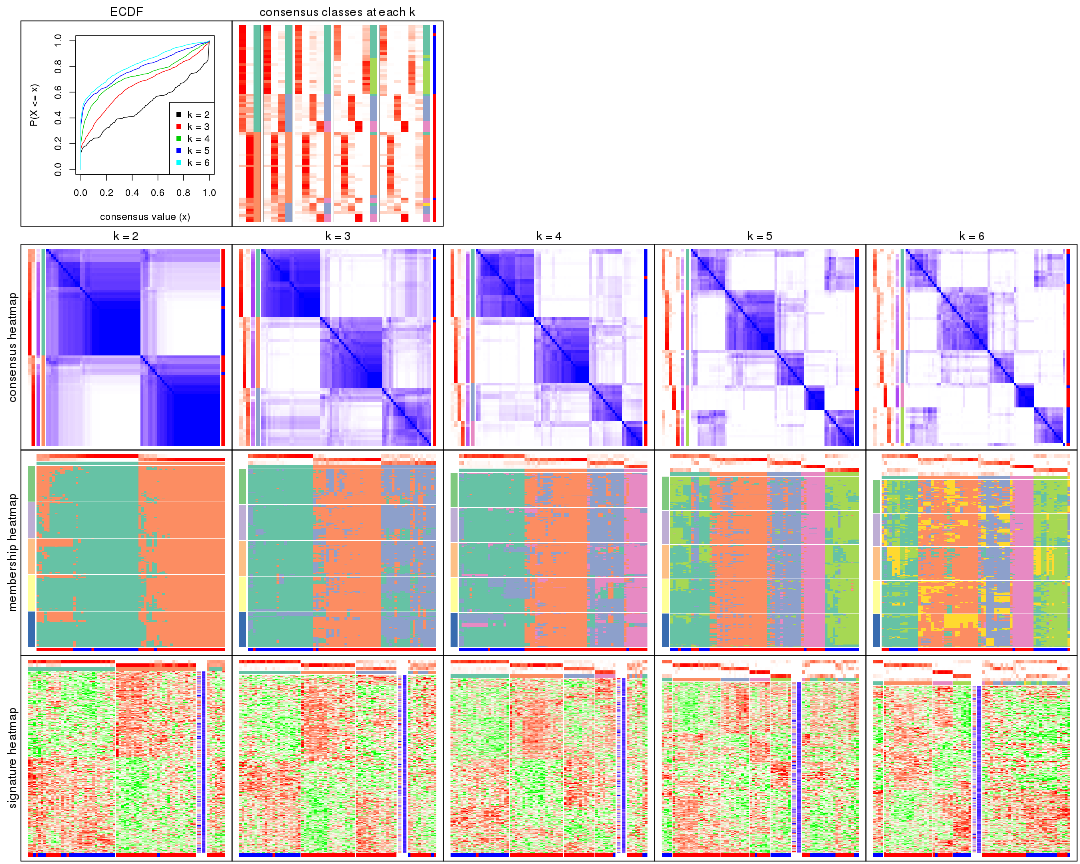

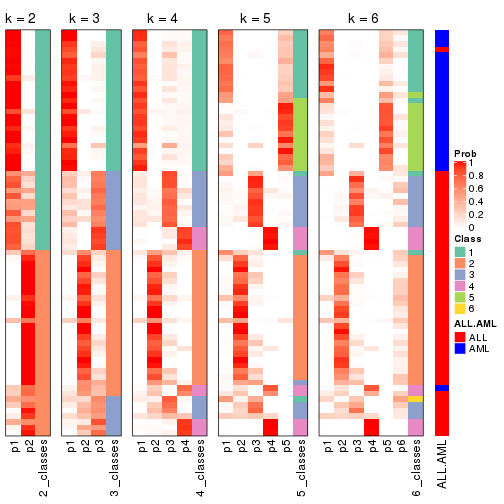

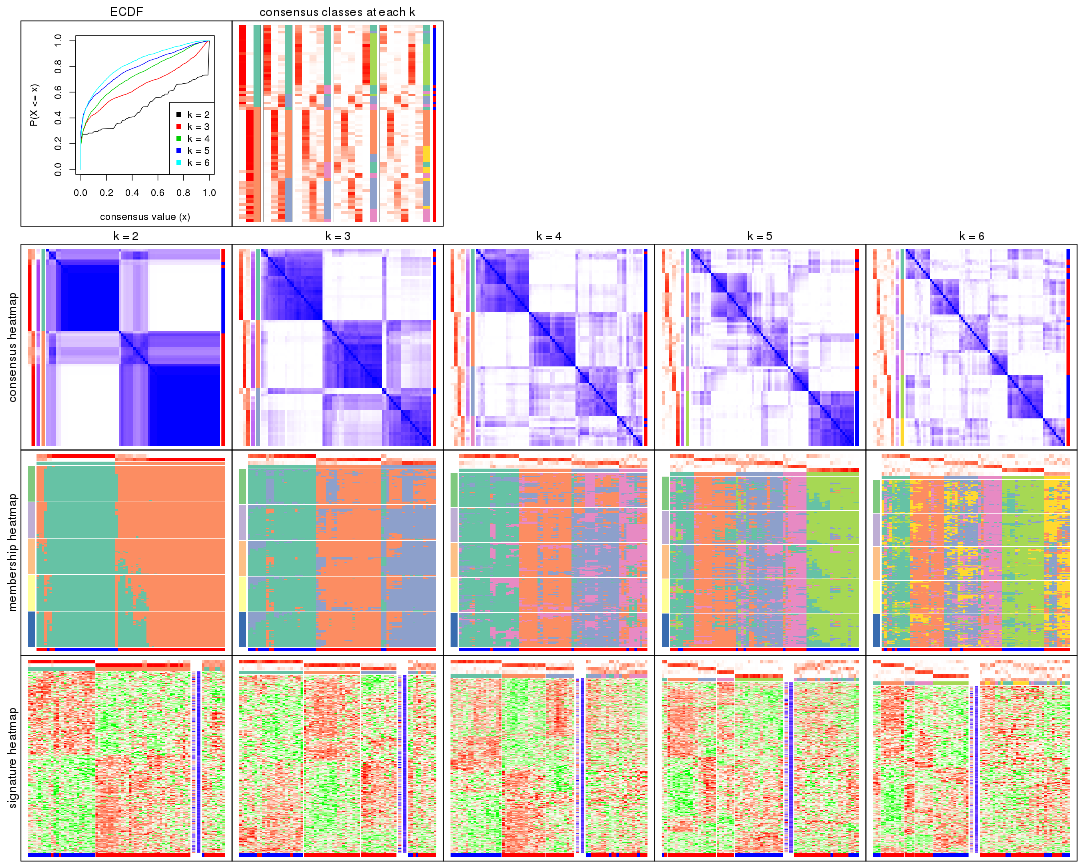

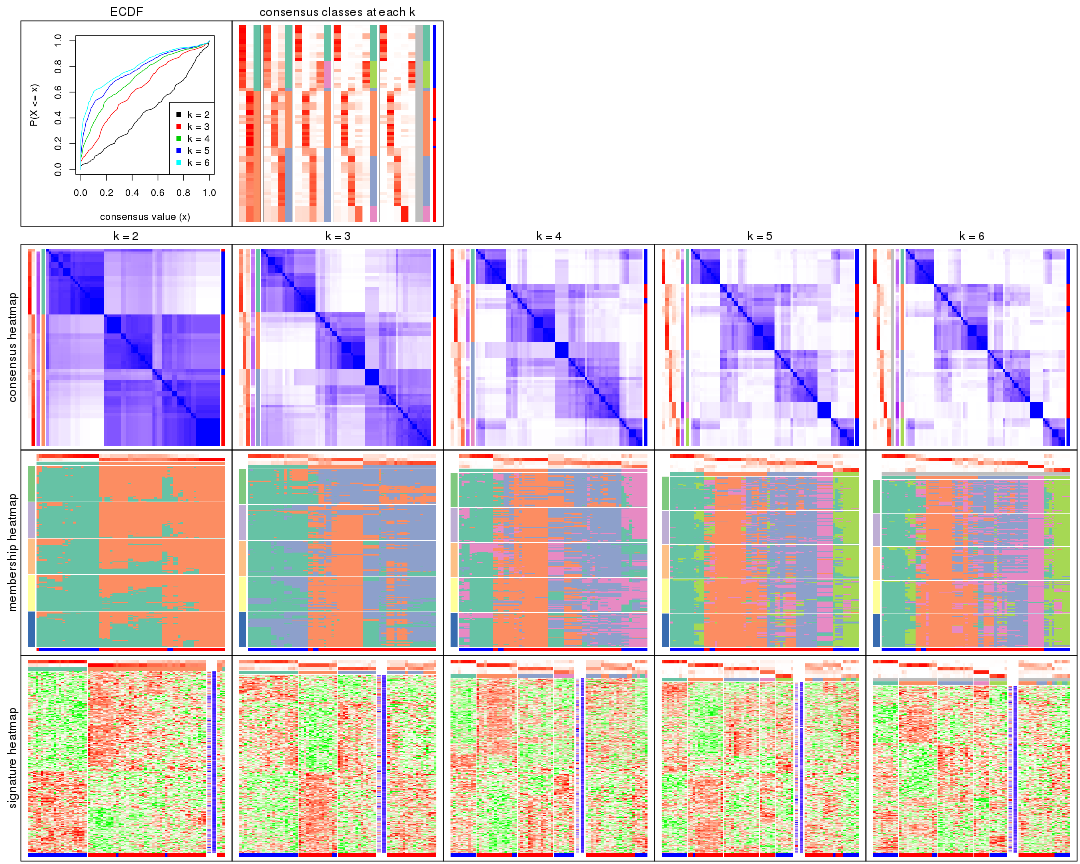

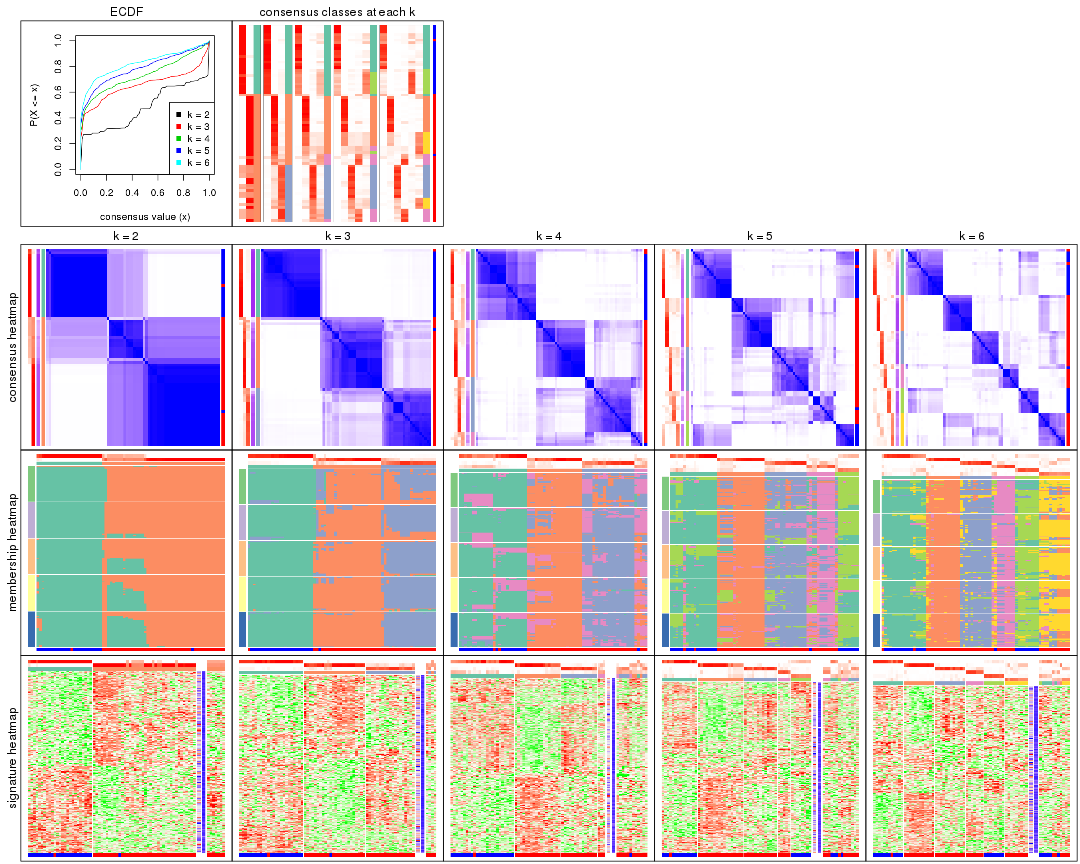

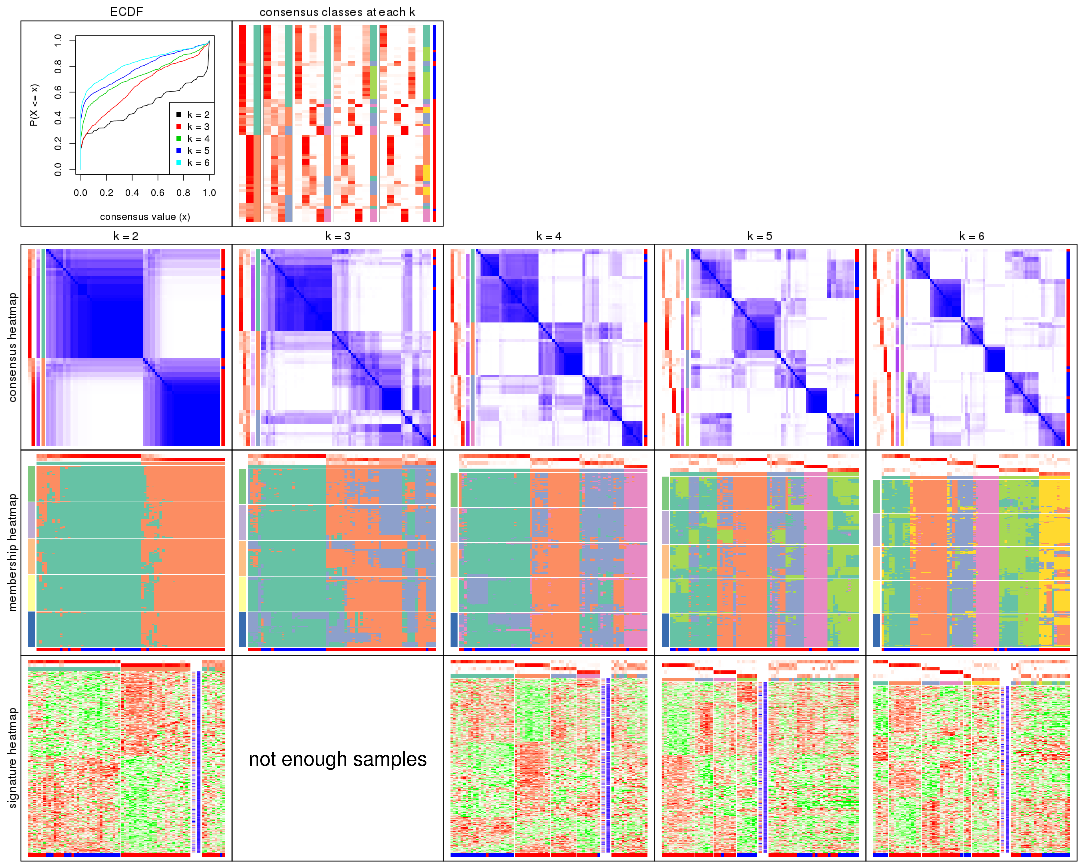

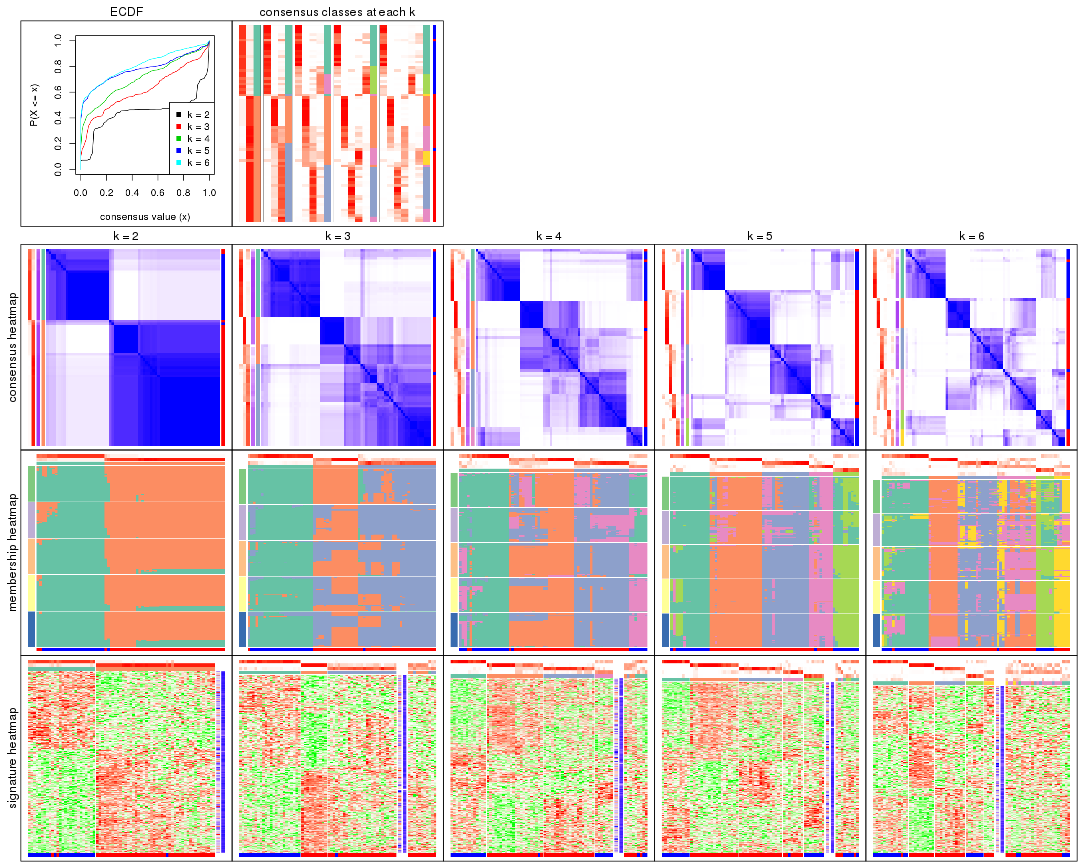

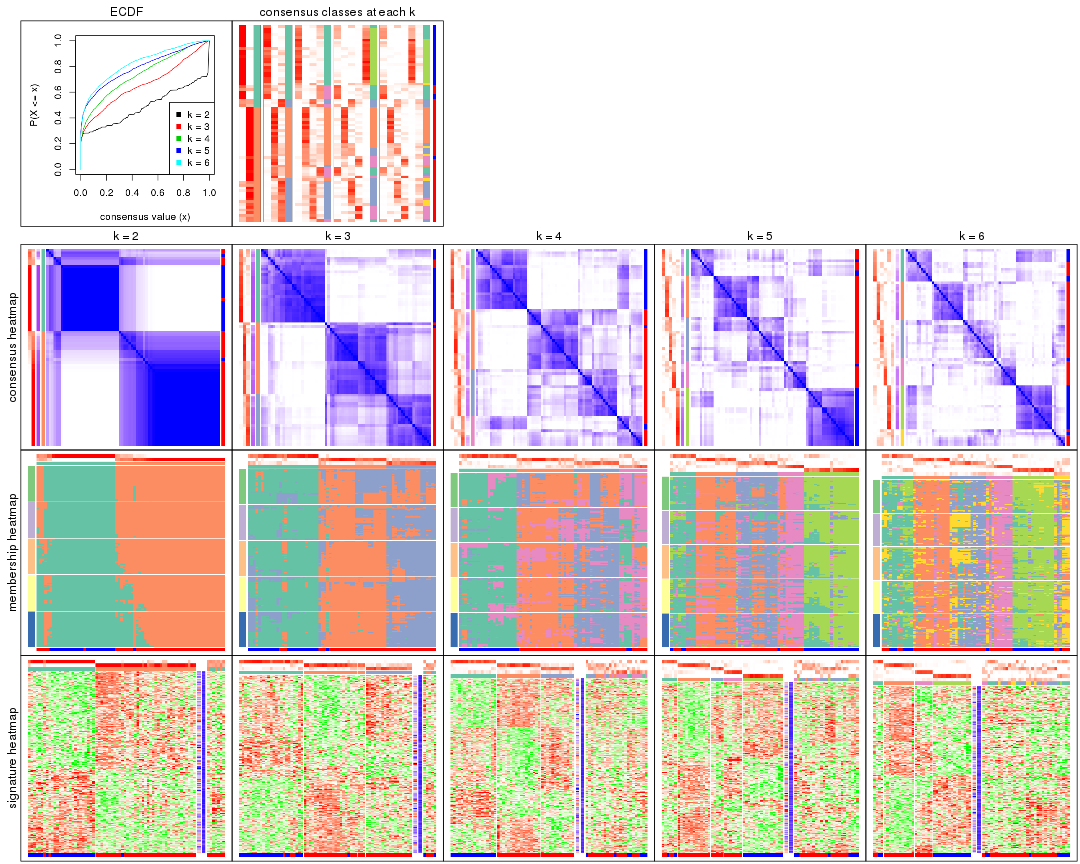

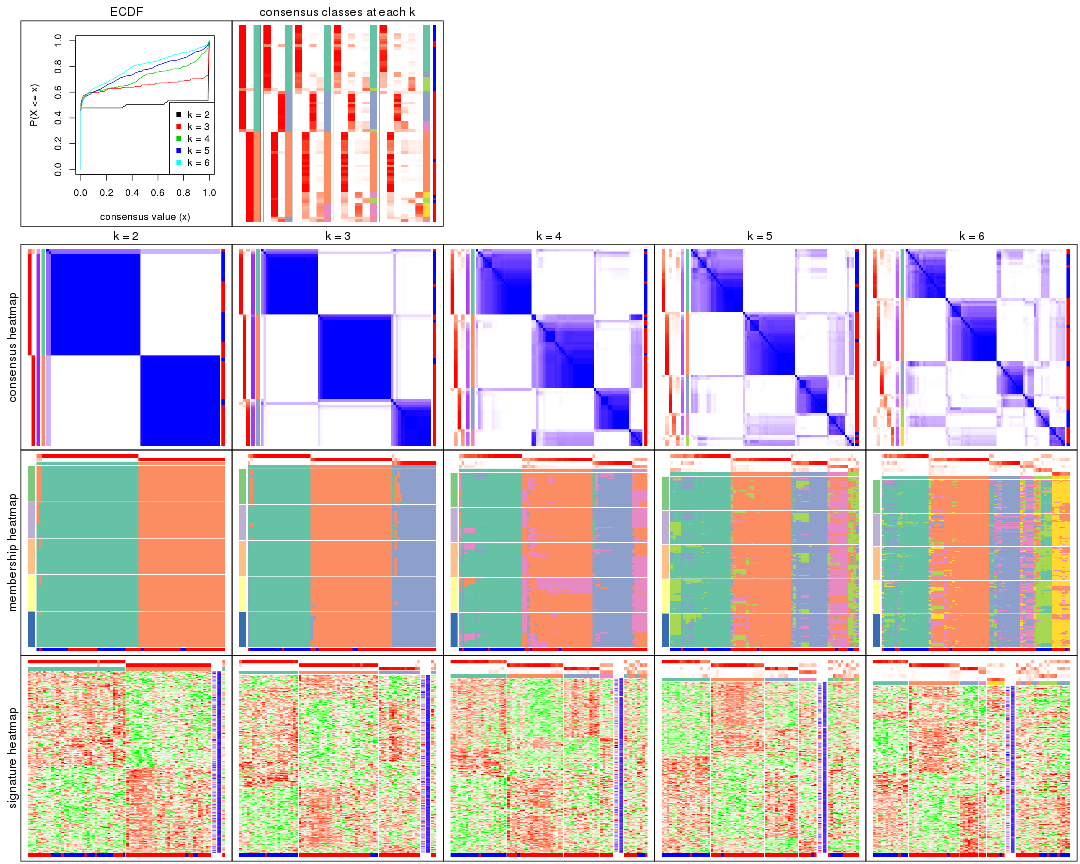

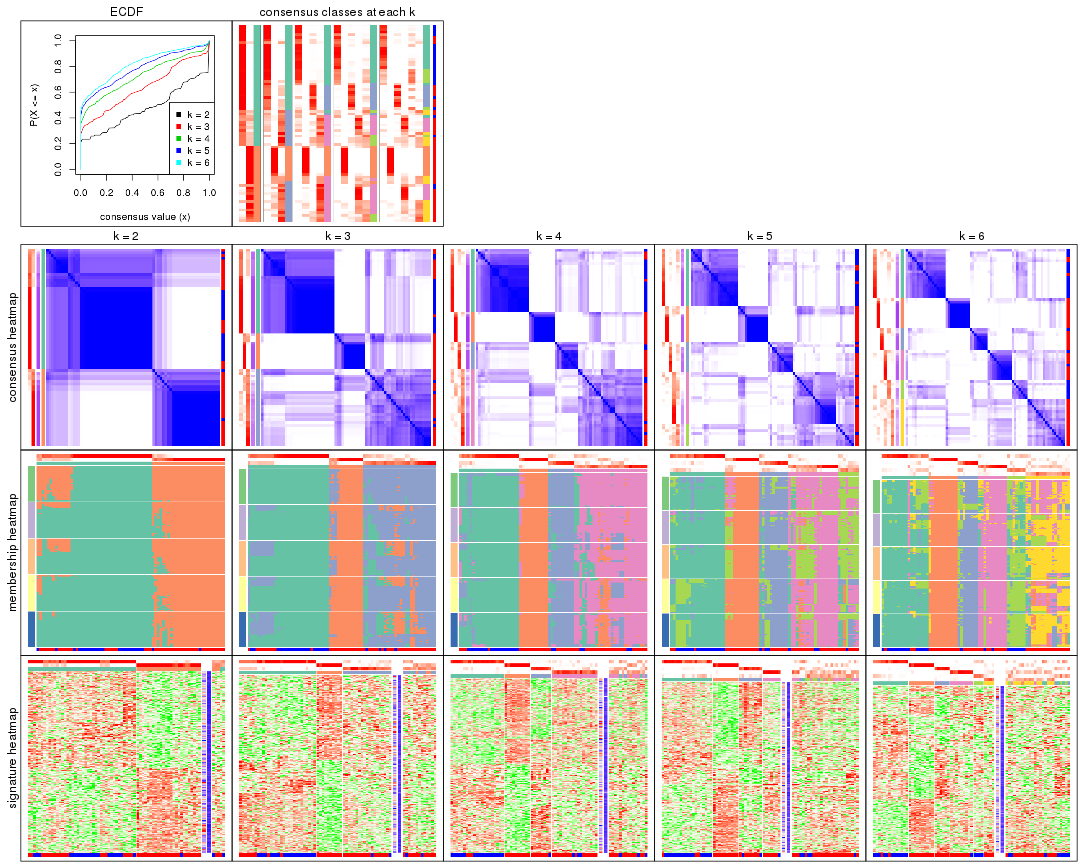

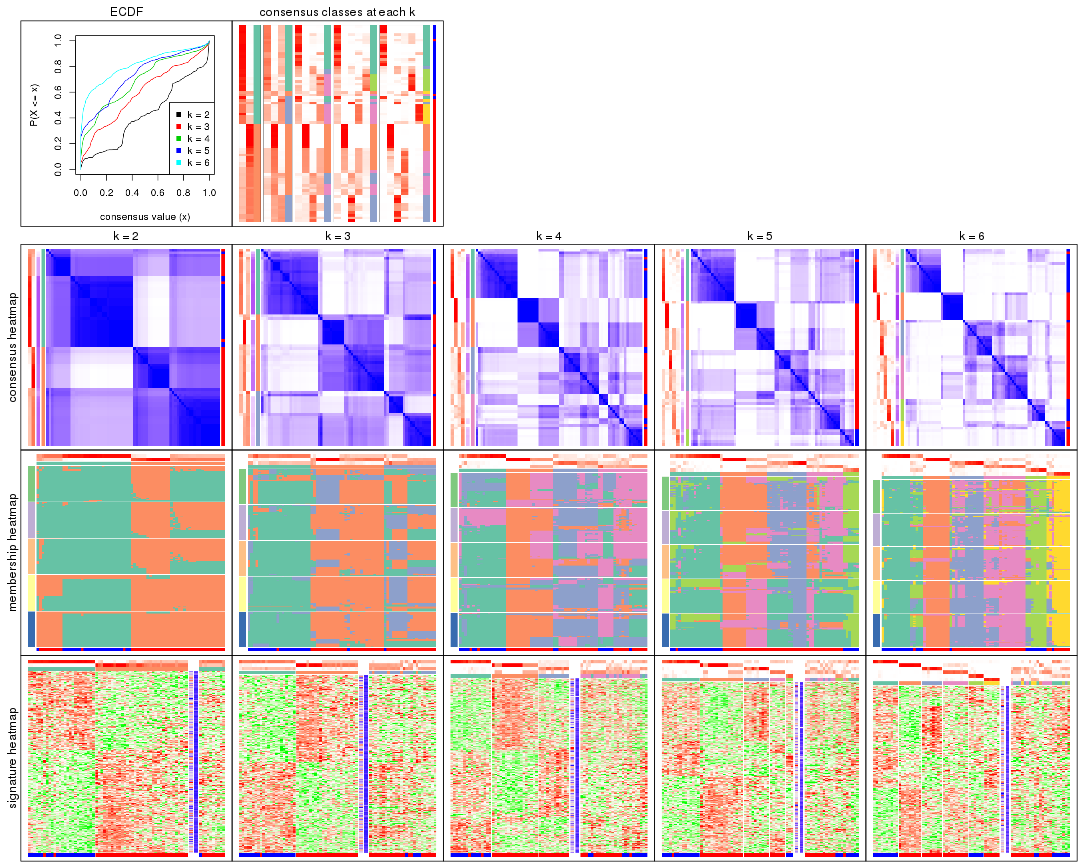

Cumulative distribution function (CDF) curves of the consensus matrix for all methods.

collect_plots(res_list, fun = plot_ecdf)
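To make the curves easier to interpret, here is a minimal base-R sketch of how a consensus matrix and its empirical CDF can be derived from repeated partitions. The four toy partitions are assumed labels for six samples; the real report uses many more partitions obtained by row resampling.

```r
# Toy example: 4 repeated partitions of 6 samples (assumed labels).
partitions <- rbind(
  c(1, 1, 1, 2, 2, 2),
  c(1, 1, 1, 2, 2, 2),
  c(1, 1, 2, 2, 2, 2),
  c(1, 1, 1, 1, 2, 2)
)

# Consensus matrix: fraction of partitions in which two samples share a class.
n <- ncol(partitions)
consensus <- matrix(0, n, n)
for (r in seq_len(nrow(partitions))) {
  consensus <- consensus + outer(partitions[r, ], partitions[r, ], "==")
}
consensus <- consensus / nrow(partitions)

# Empirical CDF of the pairwise consensus values (lower triangle only).
values <- consensus[lower.tri(consensus)]
cdf <- ecdf(values)
plot(cdf, main = "ECDF of consensus values")
```

When most pairwise values sit near 0 or 1 the curve is flat in the middle, which indicates that samples are assigned consistently across the repeated partitions.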

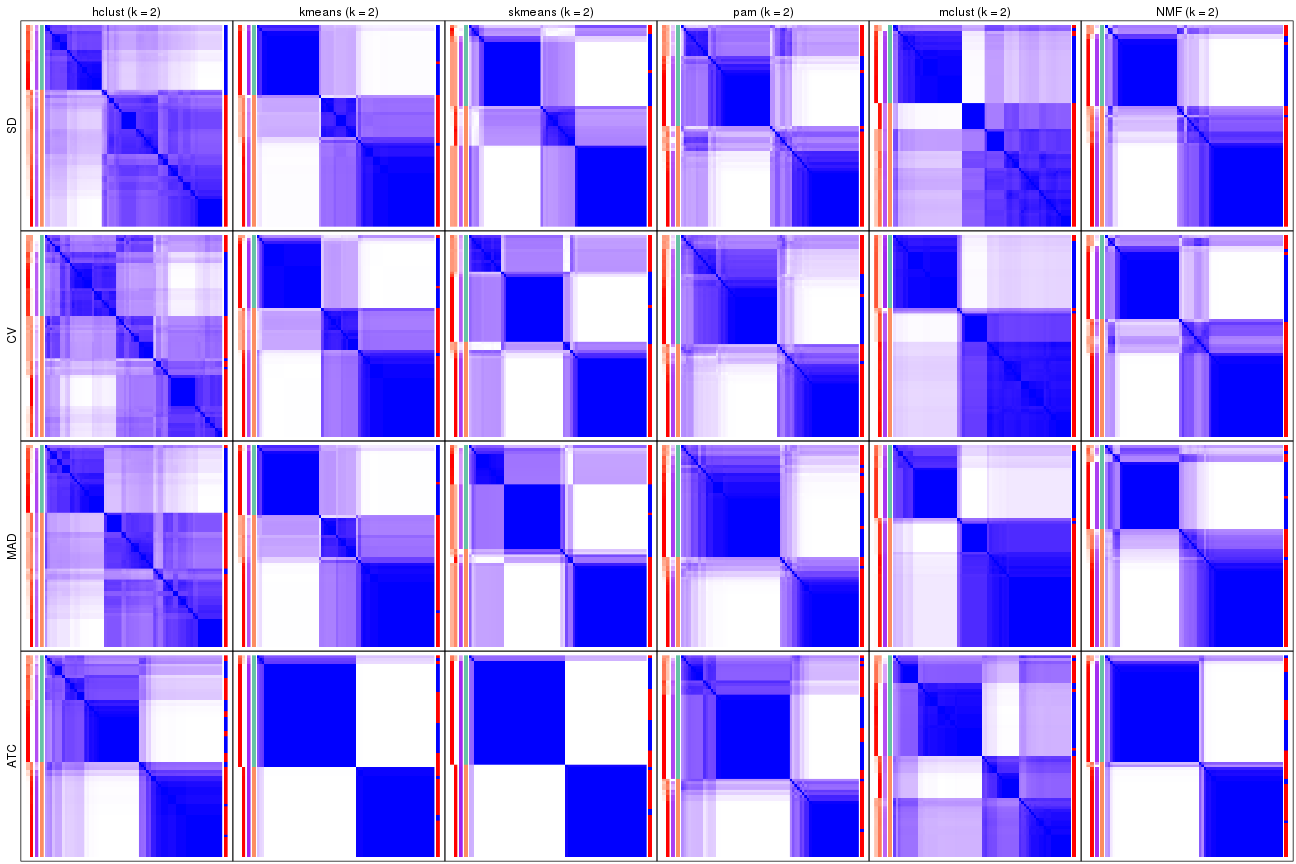

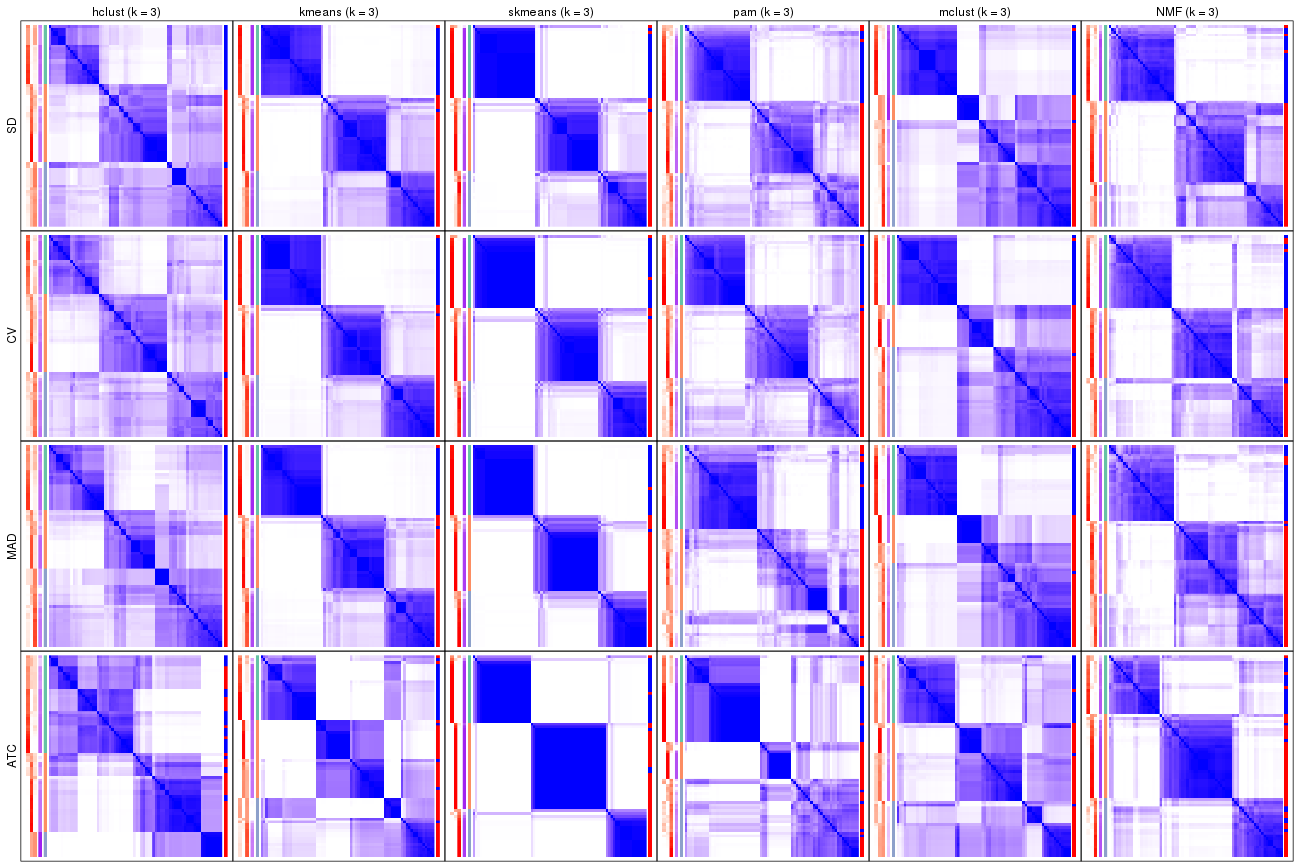

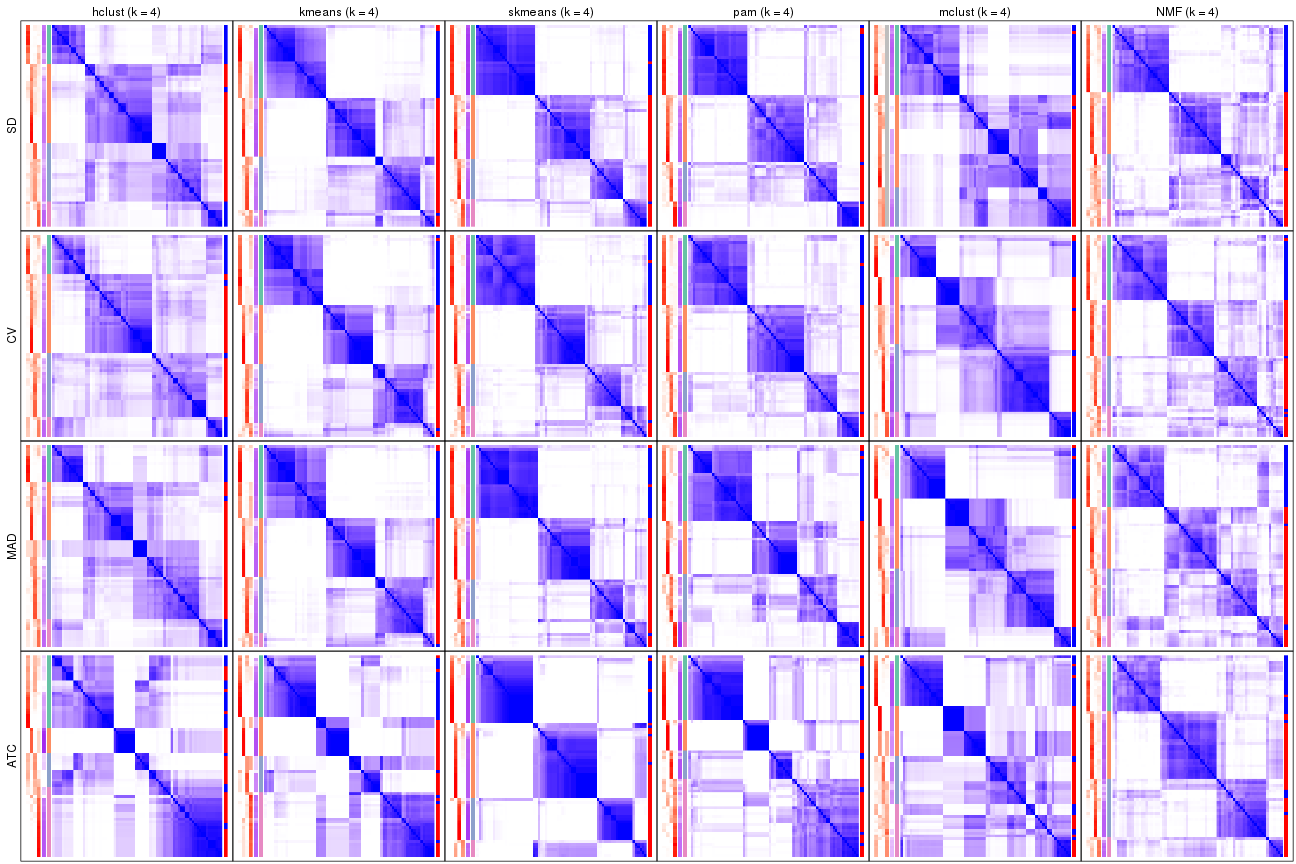

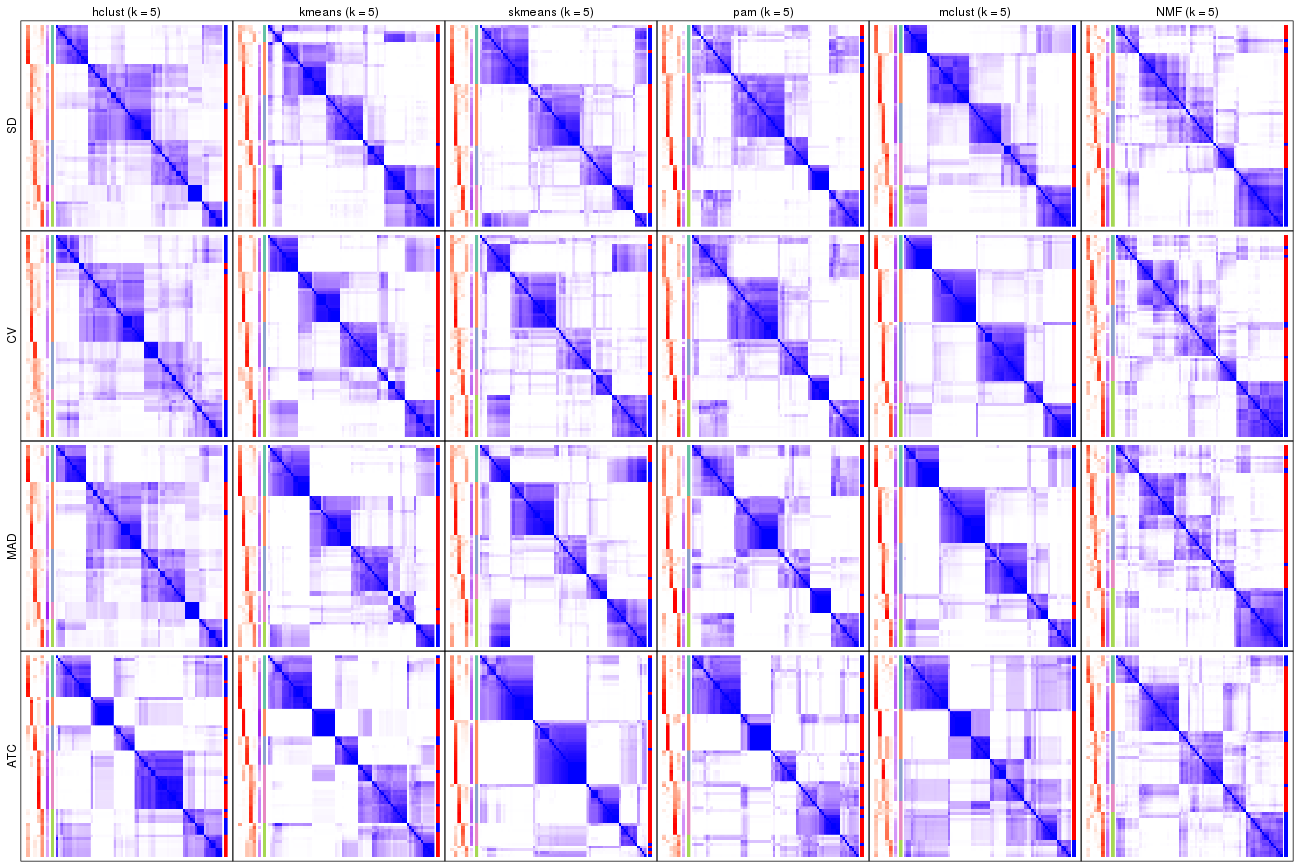

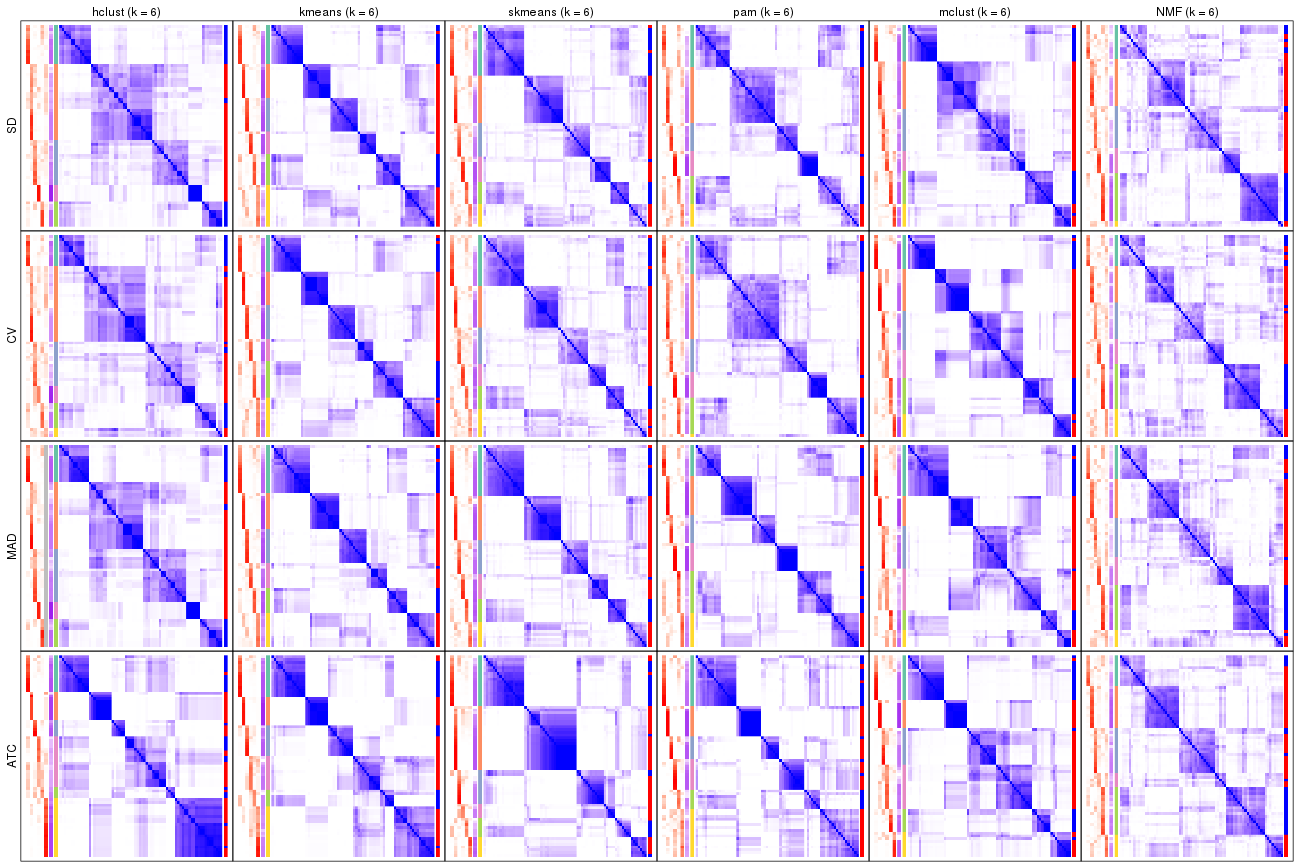

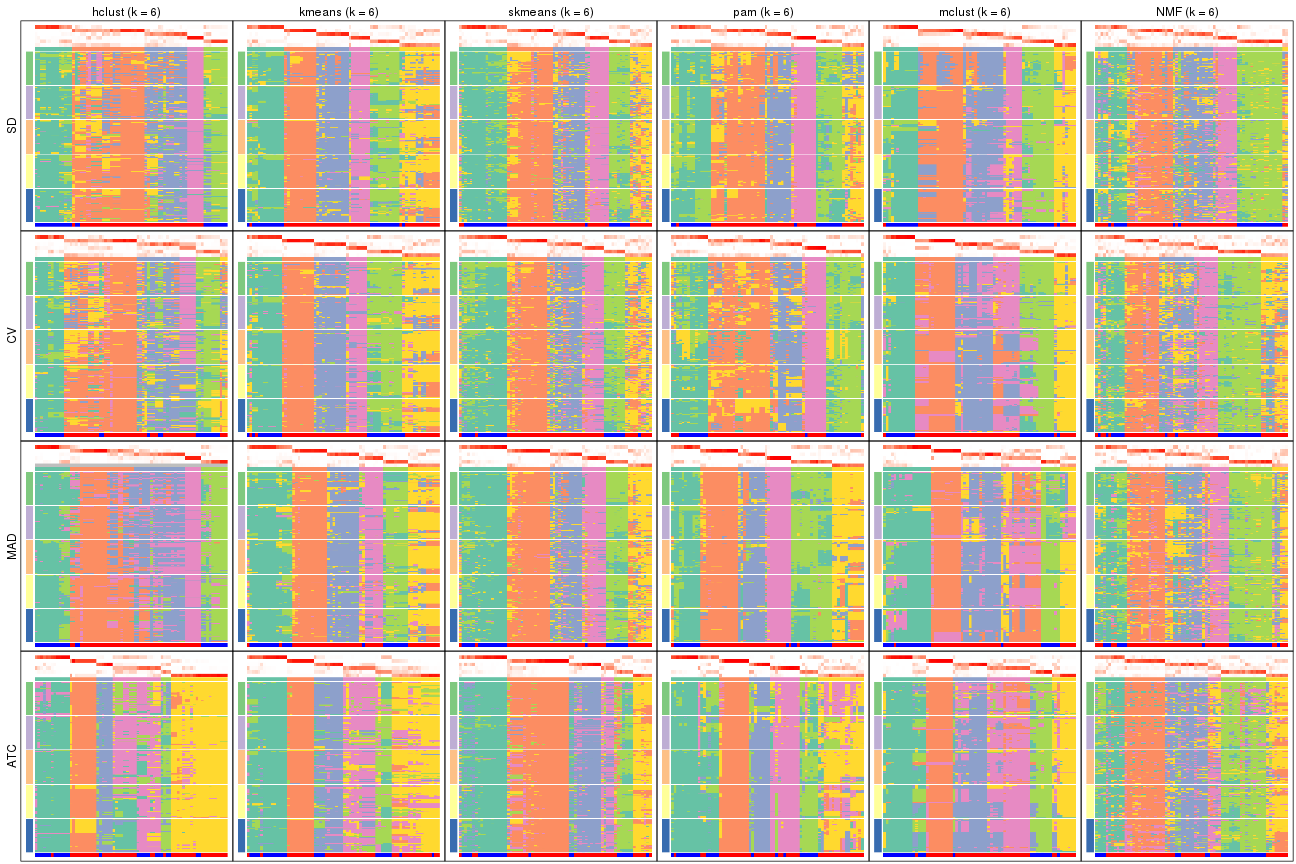

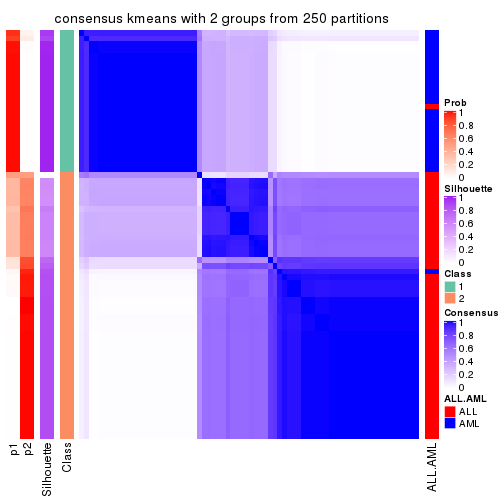

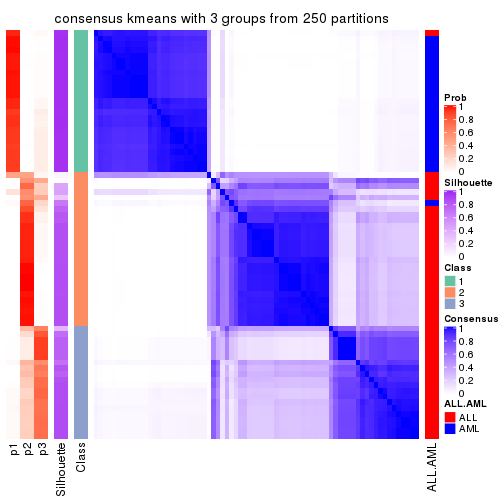

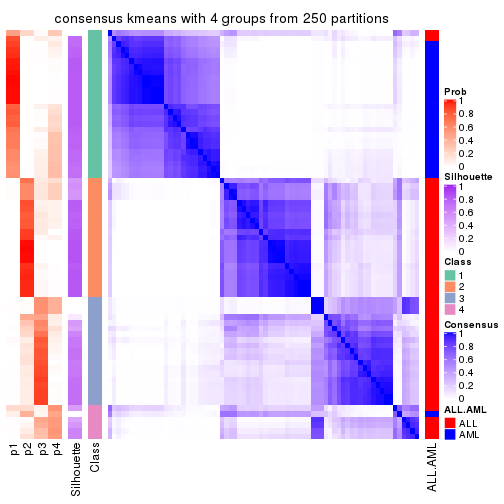

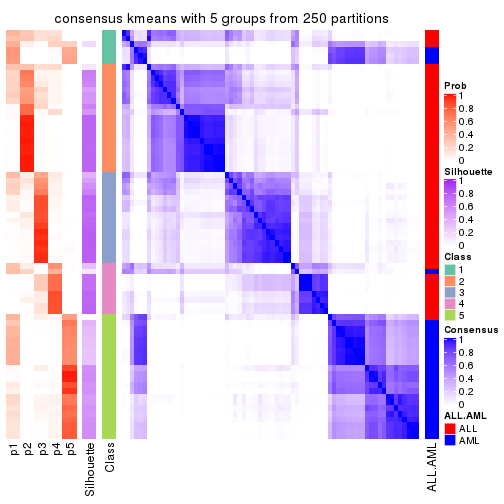

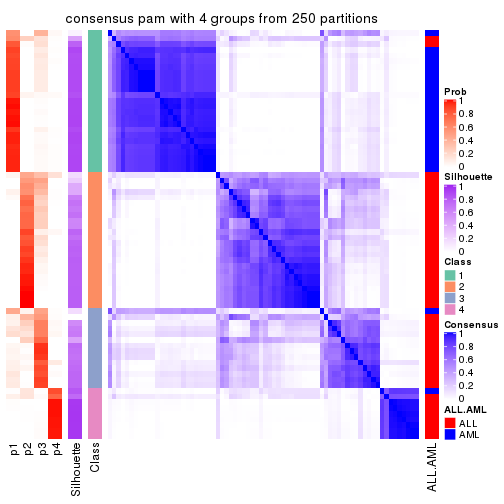

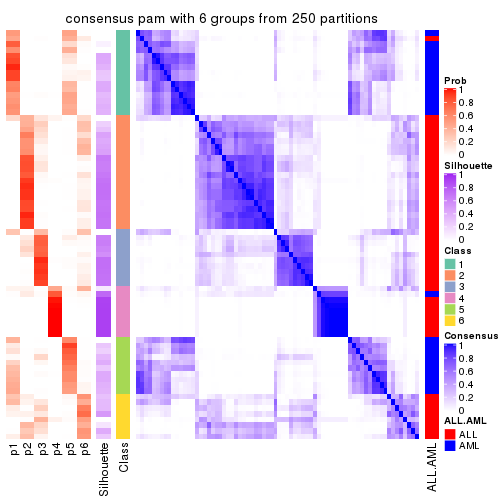

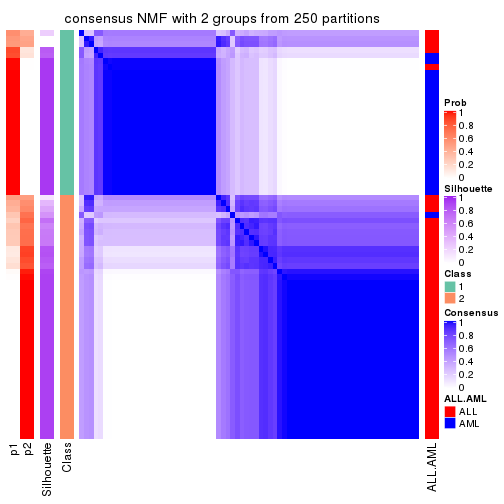

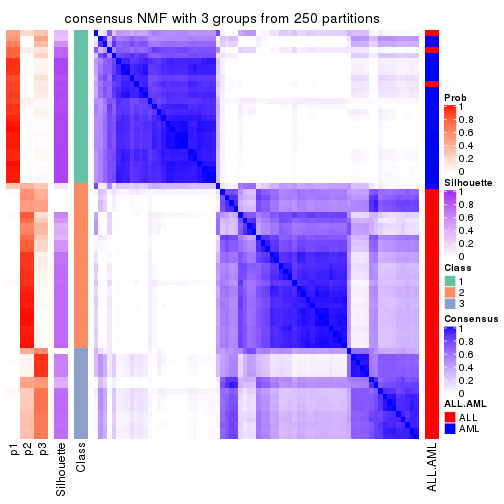

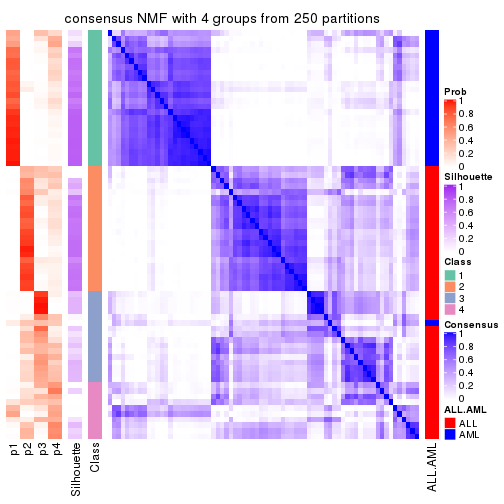

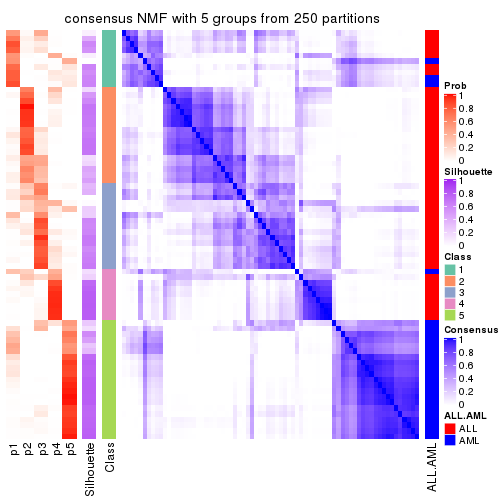

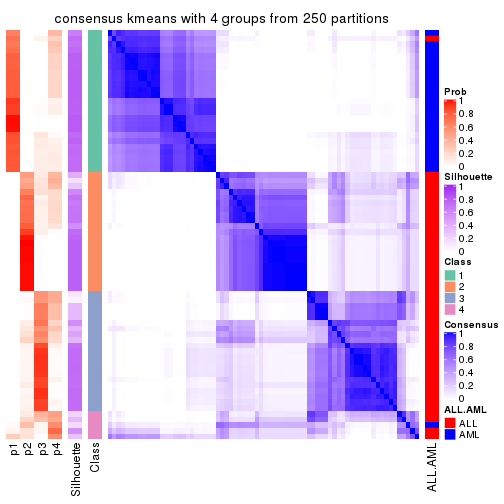

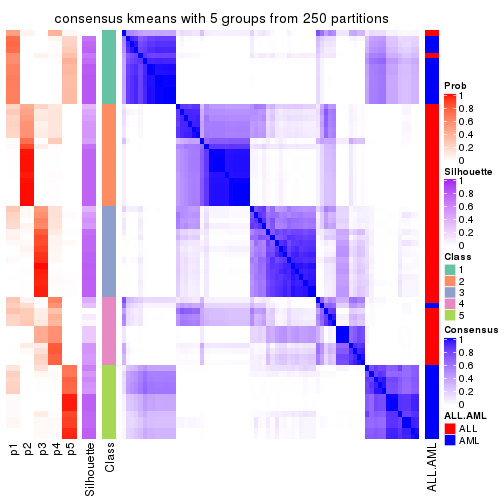

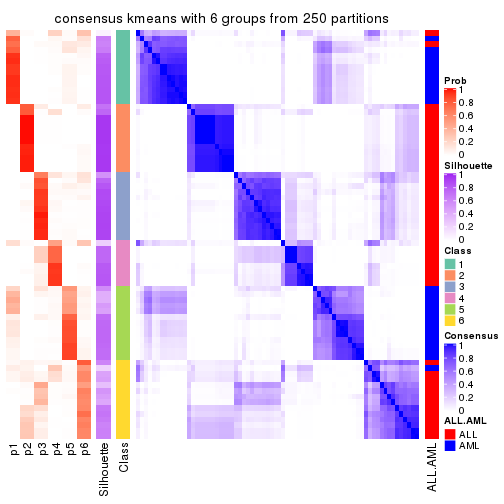

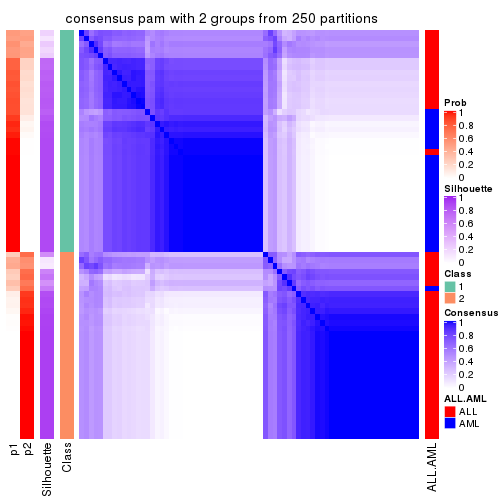

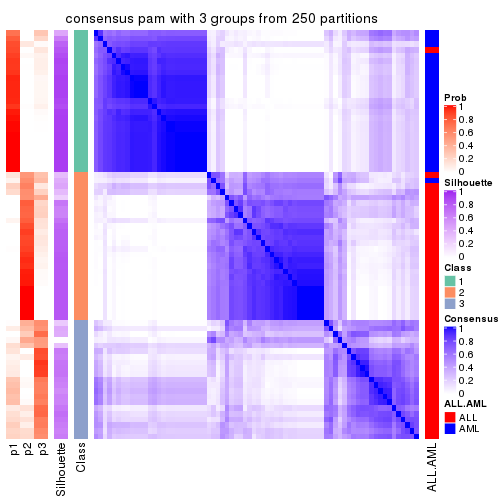

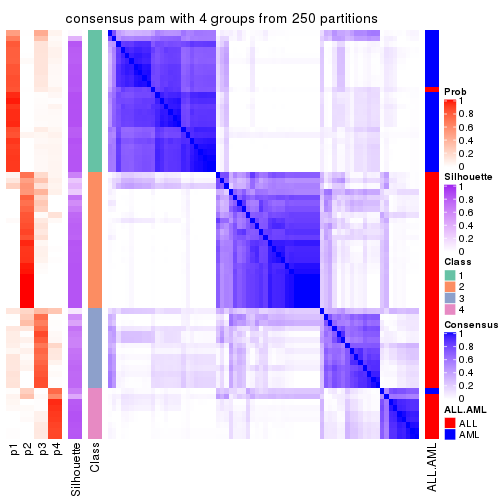

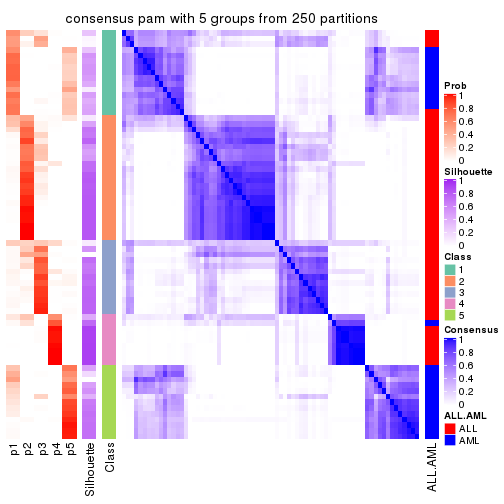

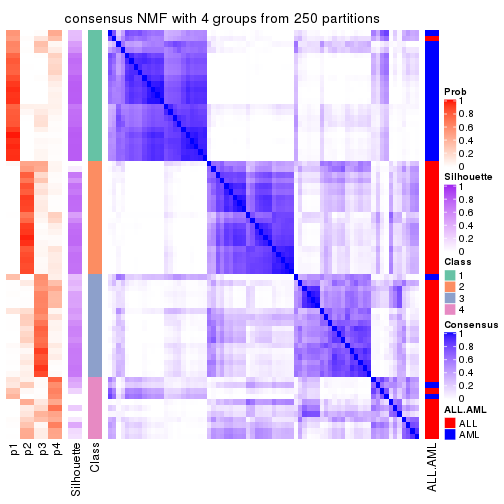

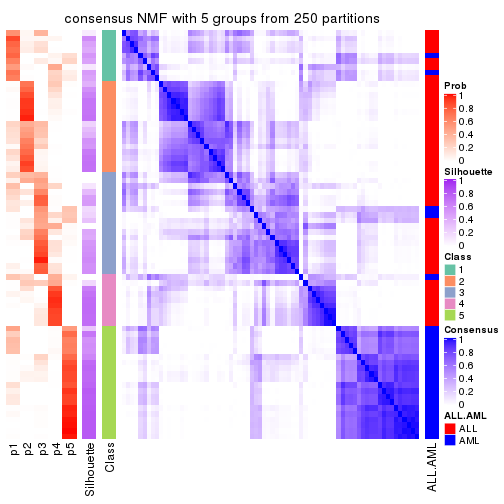

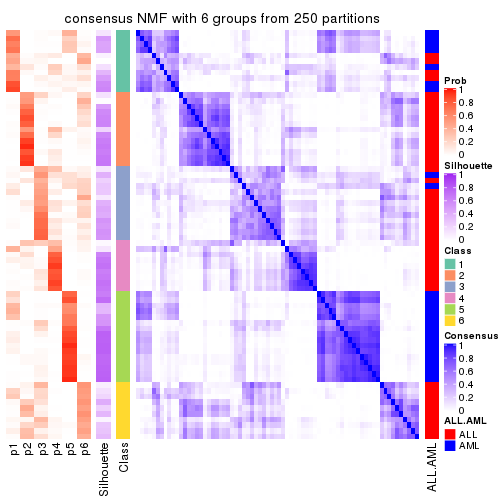

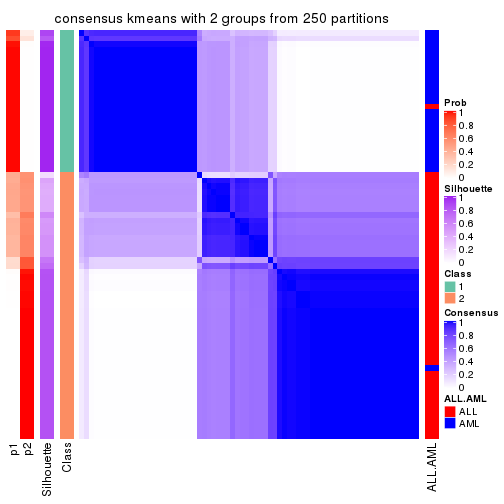

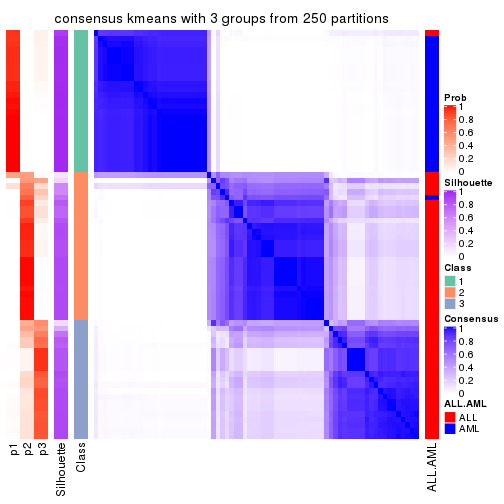

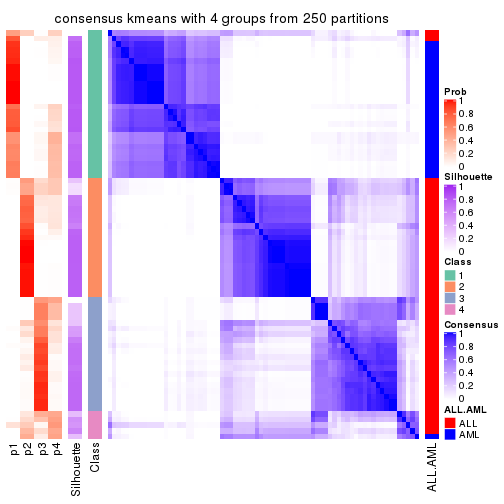

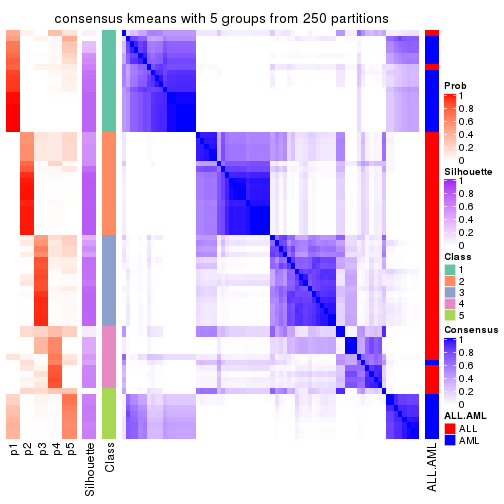

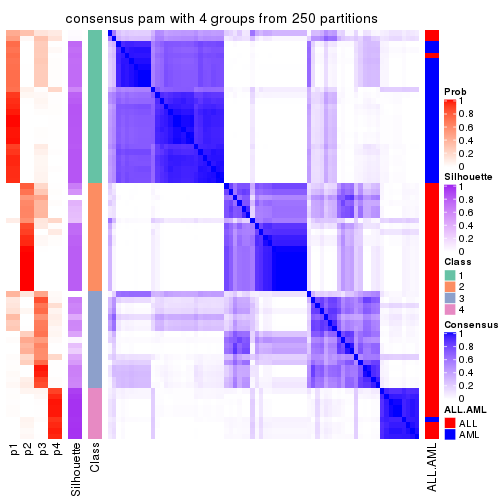

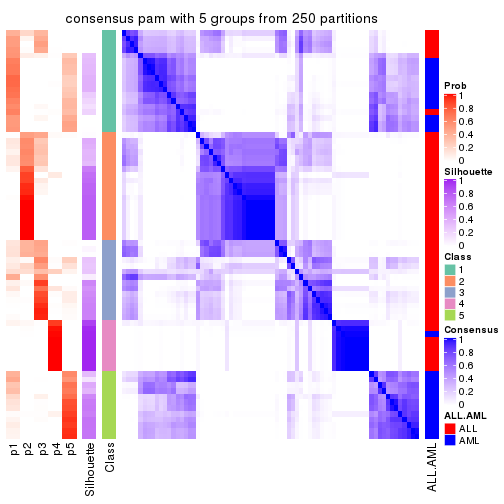

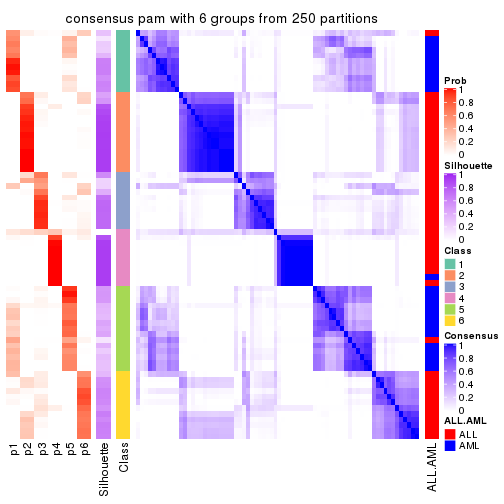

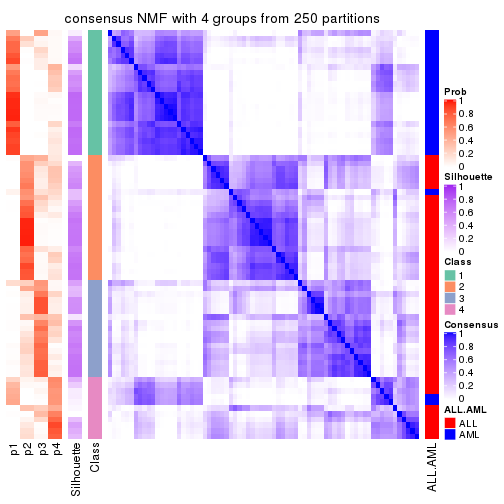

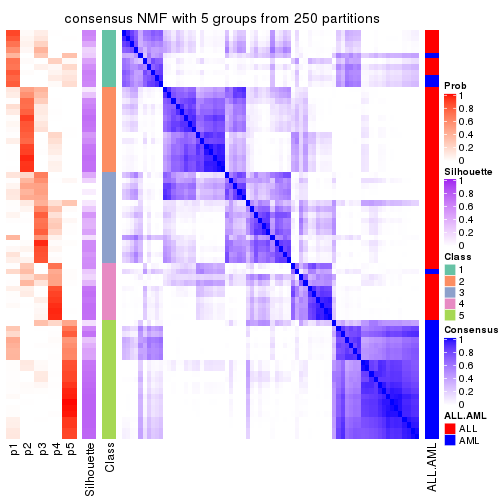

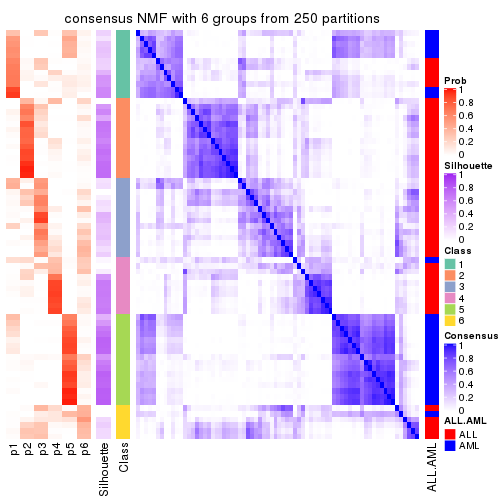

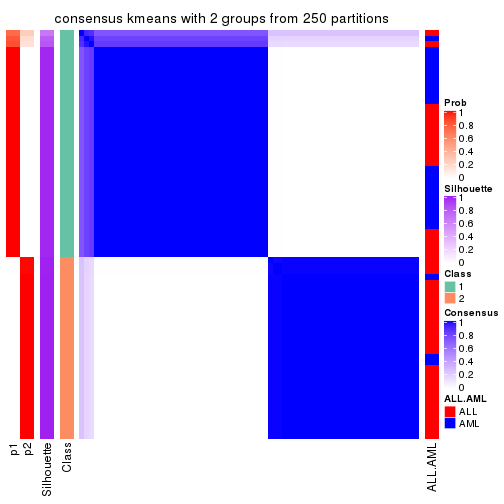

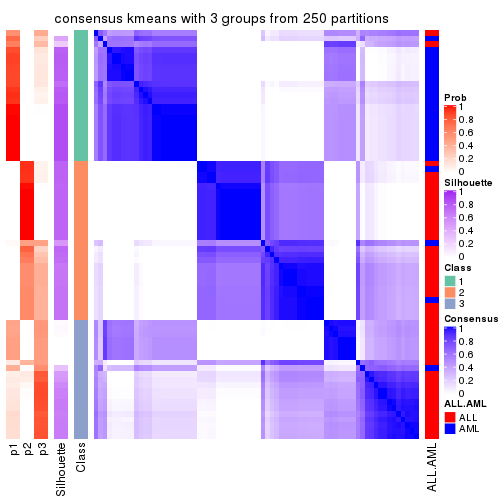

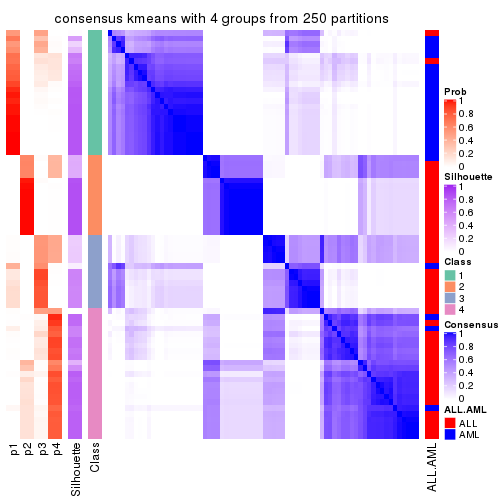

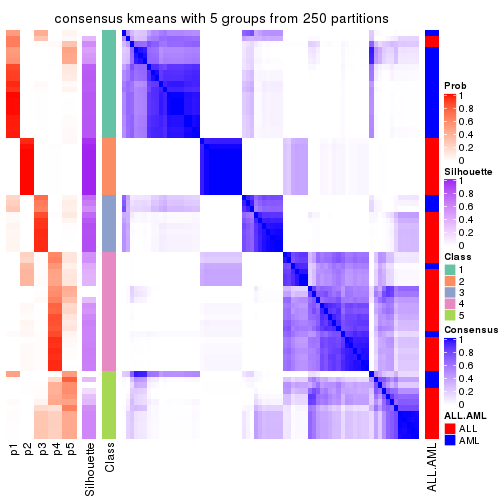

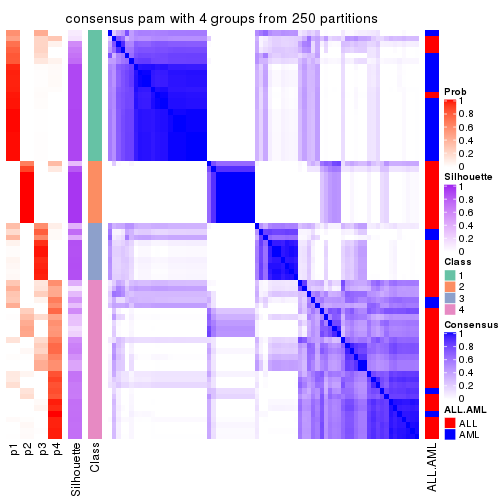

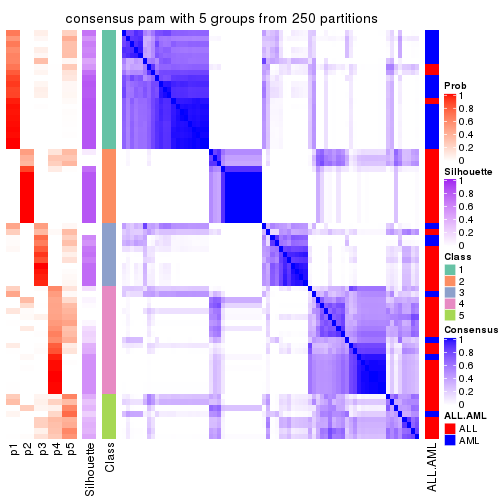

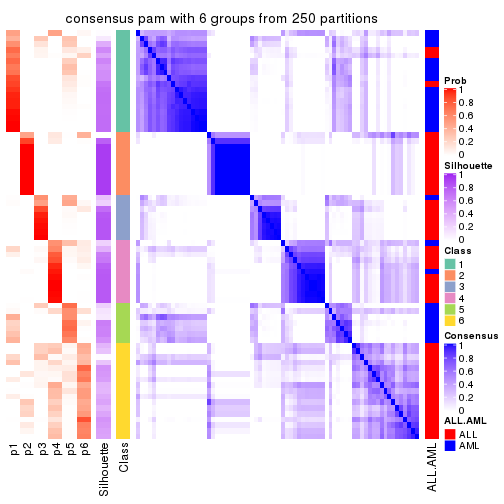

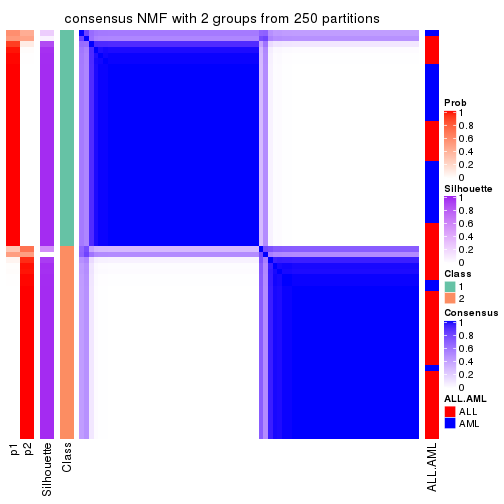

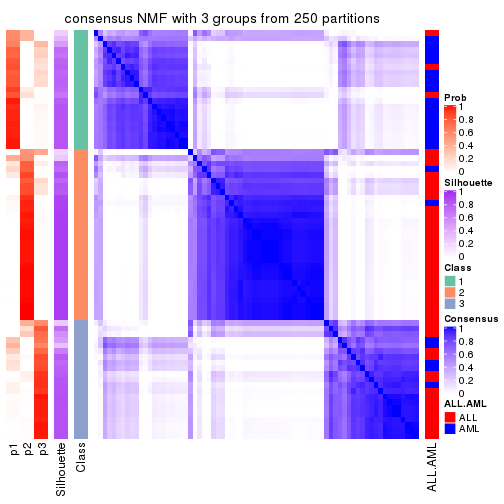

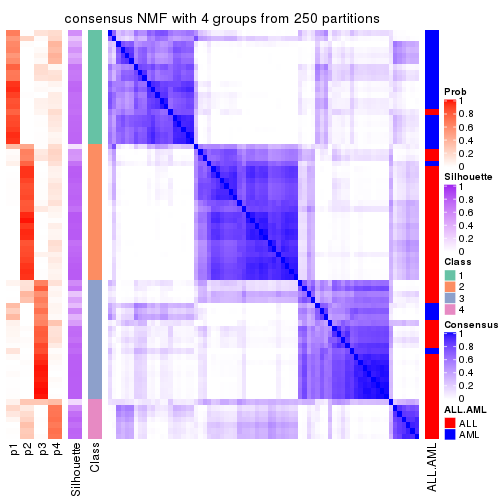

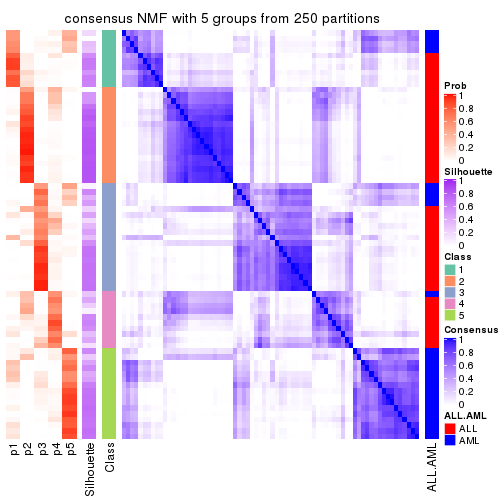

Consensus heatmaps for all methods.

collect_plots(res_list, k = 2, fun = consensus_heatmap, mc.cores = 4)

collect_plots(res_list, k = 3, fun = consensus_heatmap, mc.cores = 4)

collect_plots(res_list, k = 4, fun = consensus_heatmap, mc.cores = 4)

collect_plots(res_list, k = 5, fun = consensus_heatmap, mc.cores = 4)

collect_plots(res_list, k = 6, fun = consensus_heatmap, mc.cores = 4)

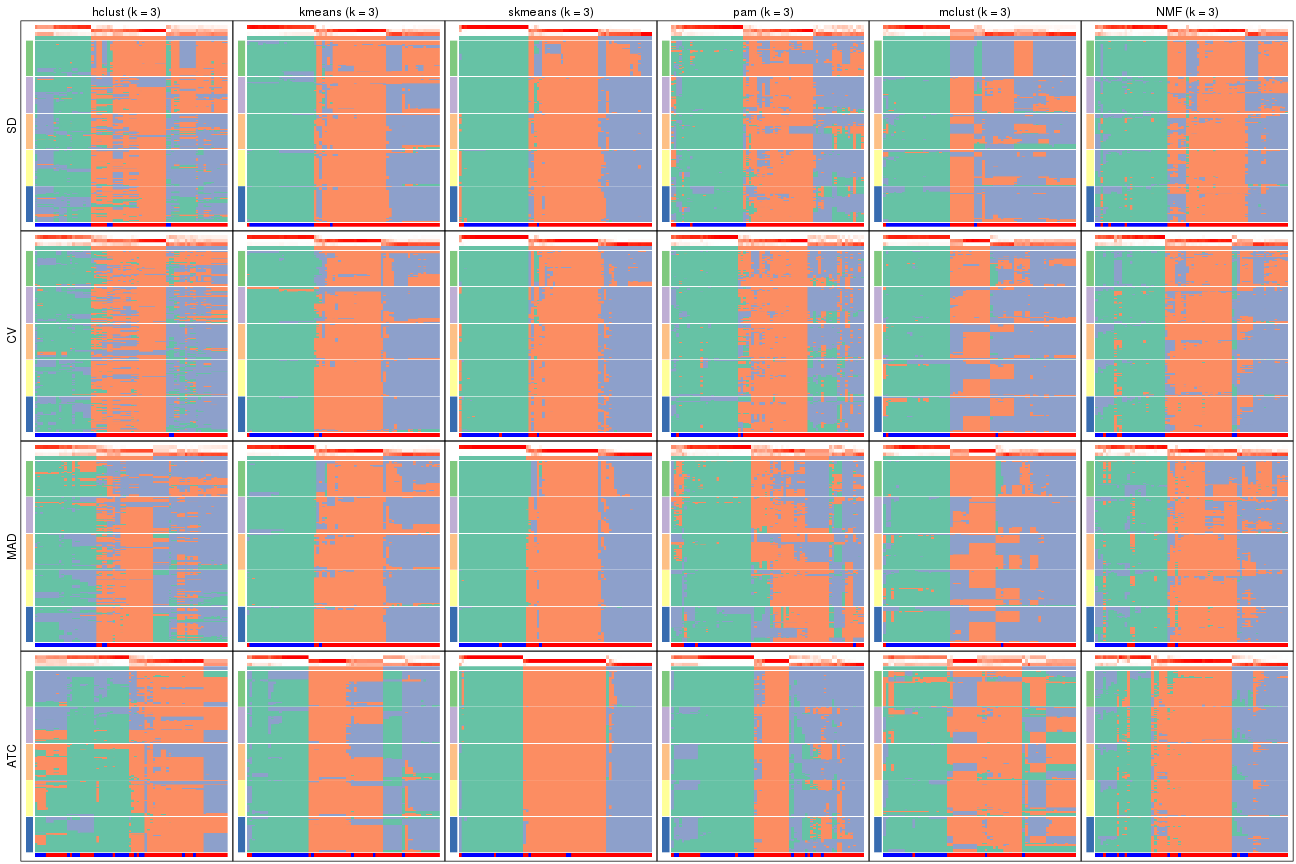

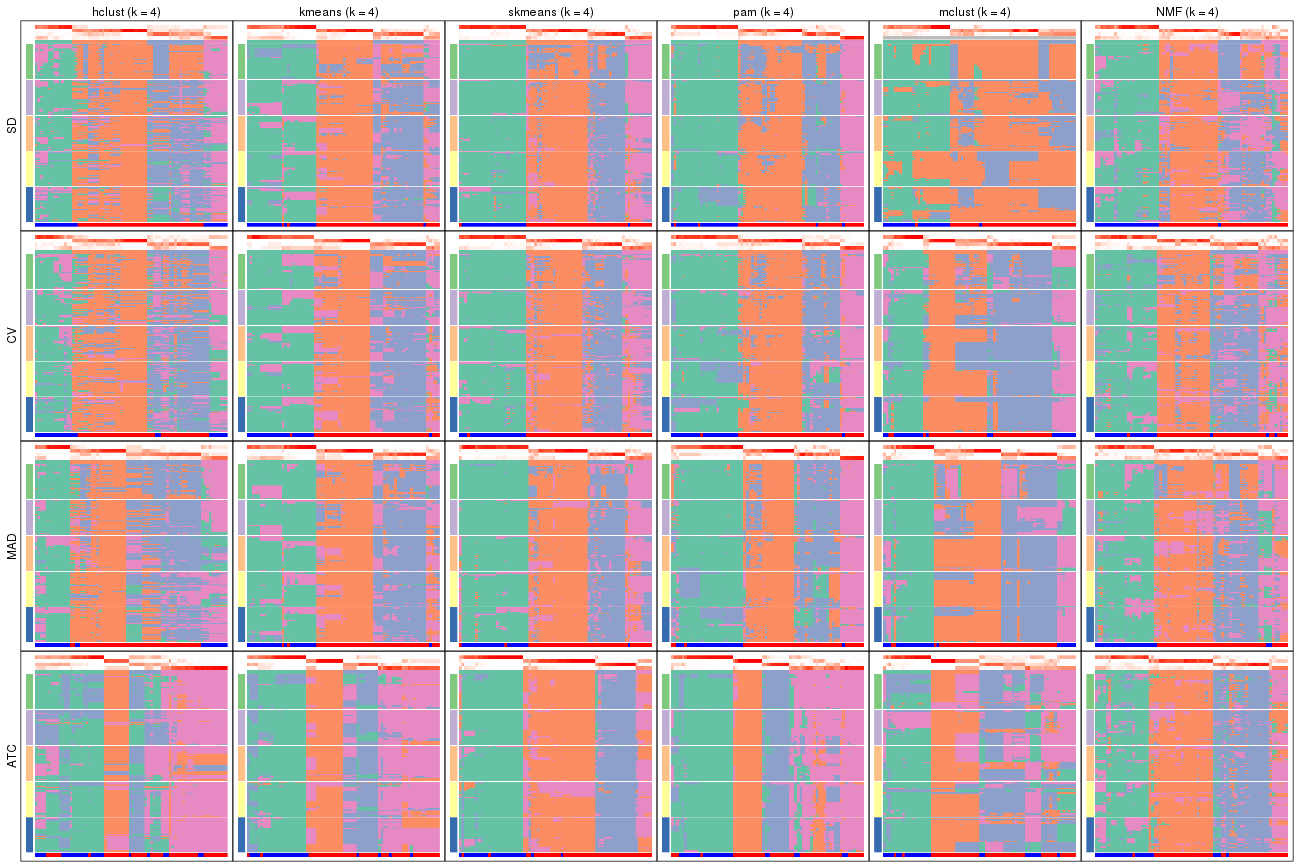

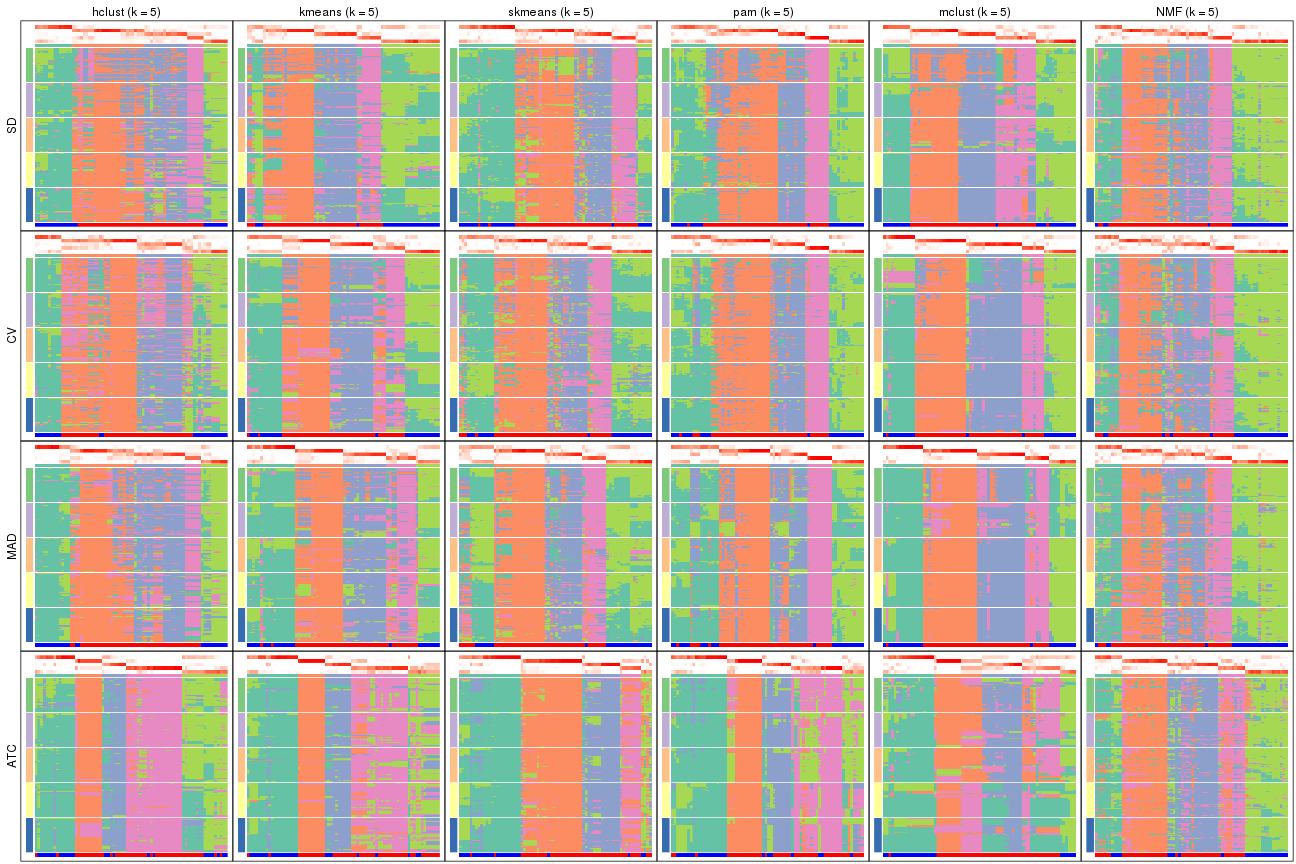

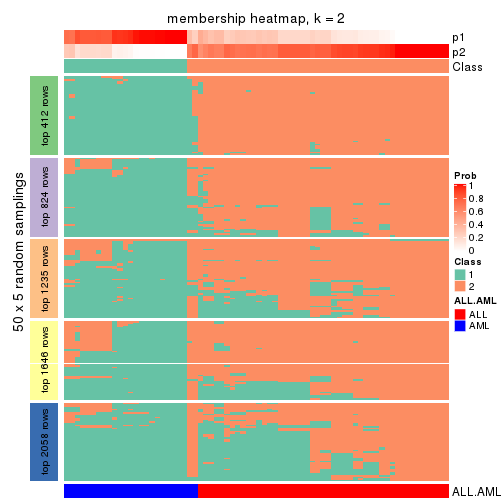

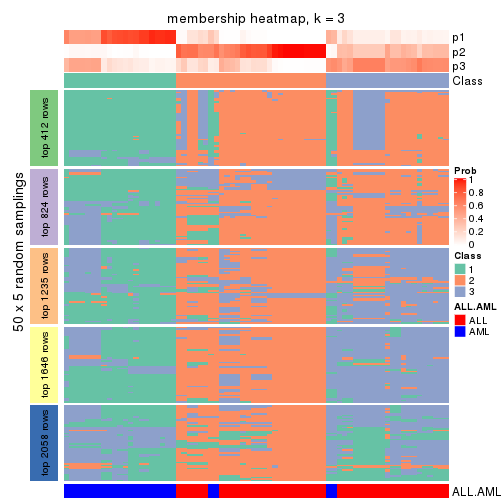

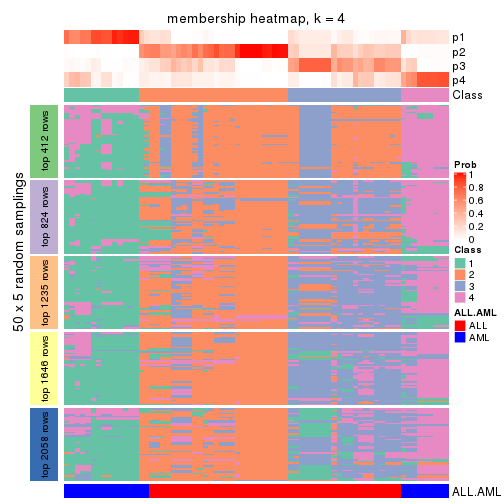

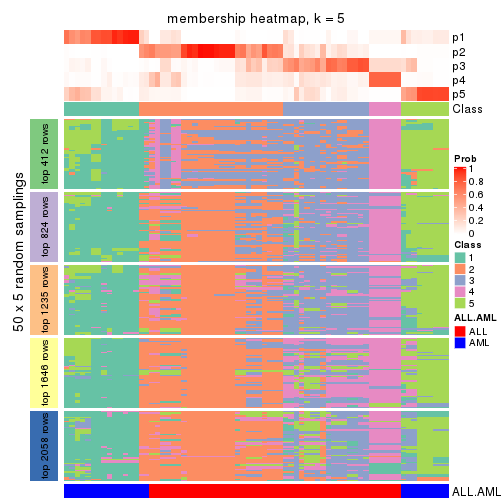

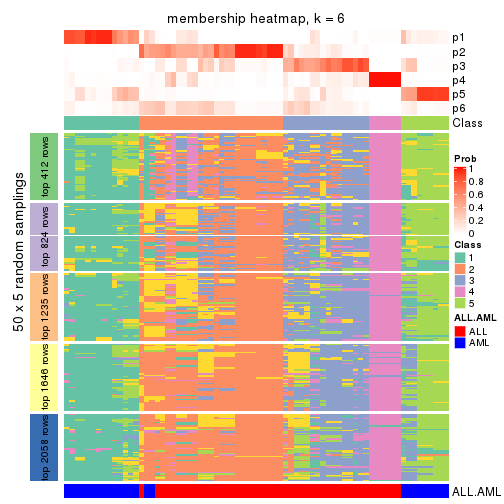

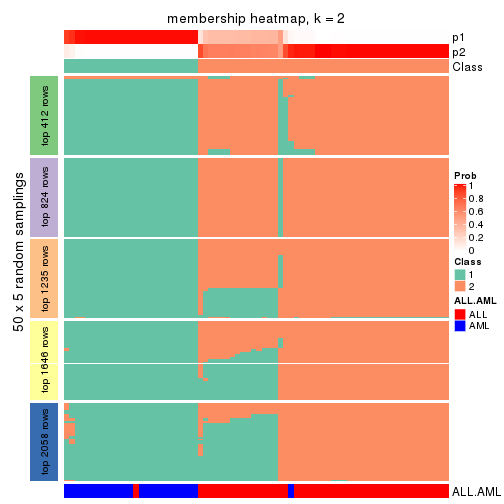

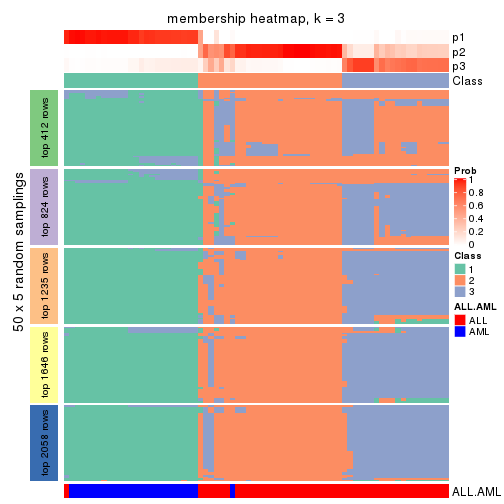

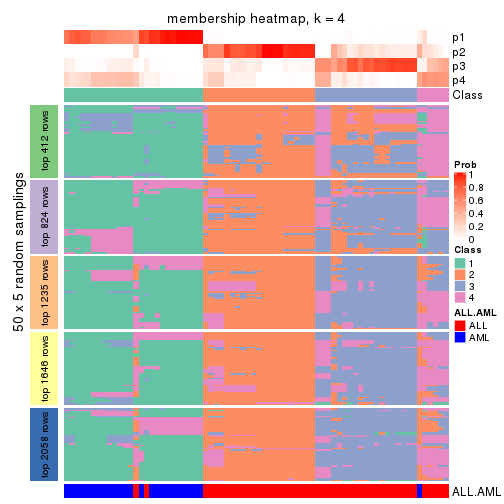

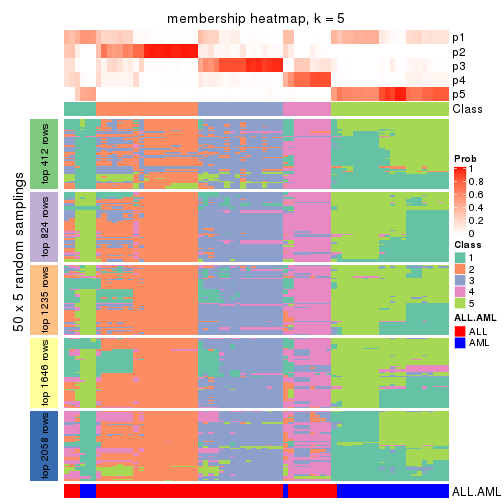

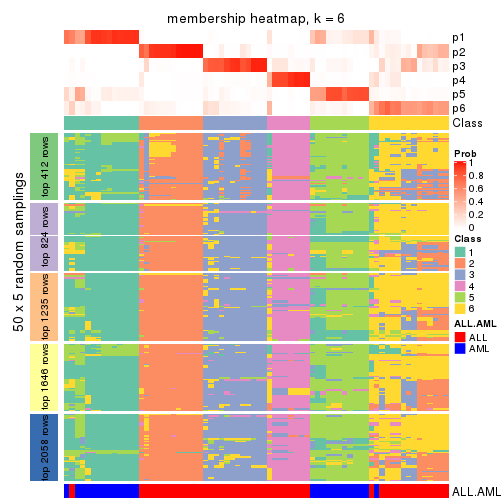

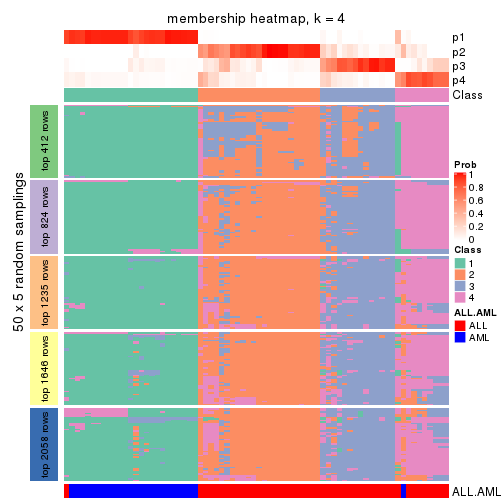

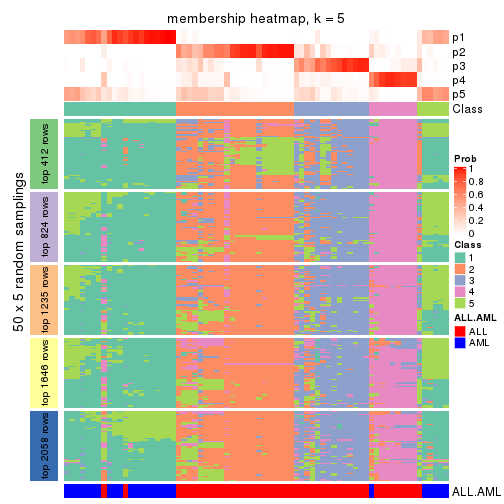

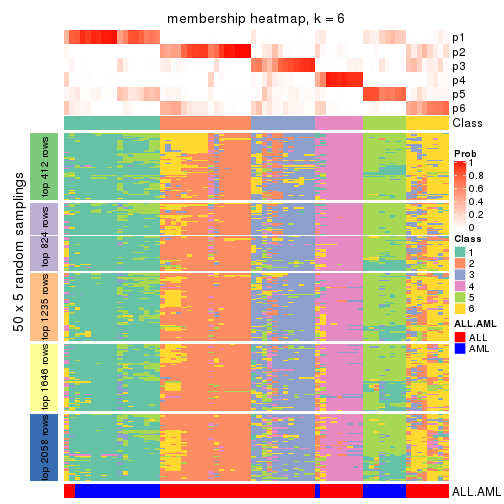

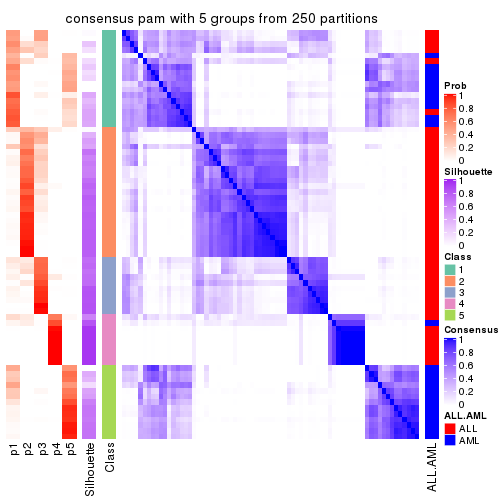

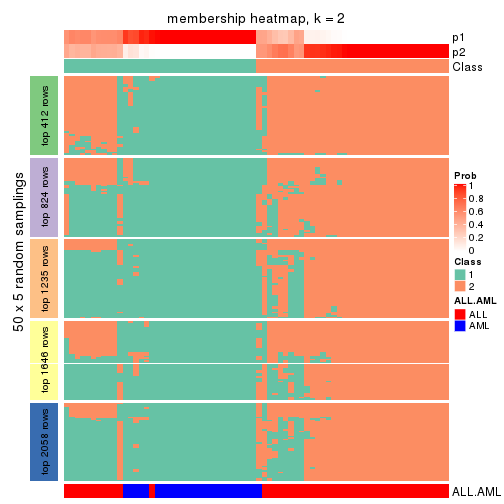

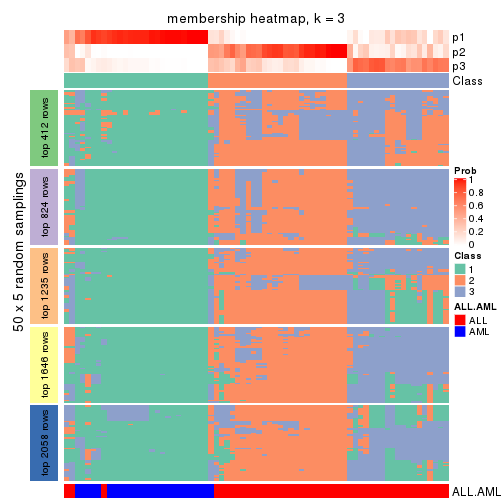

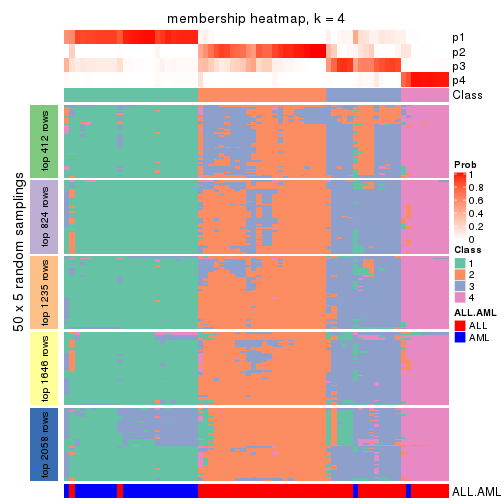

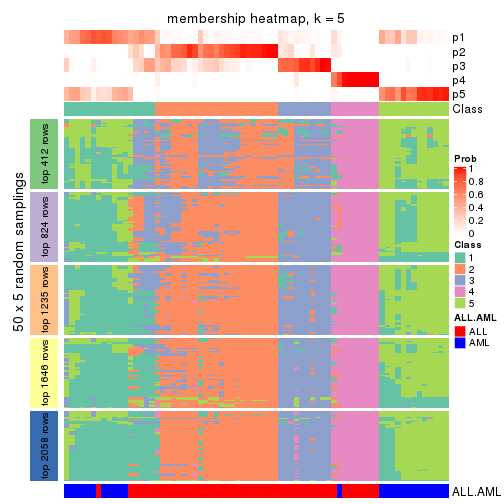

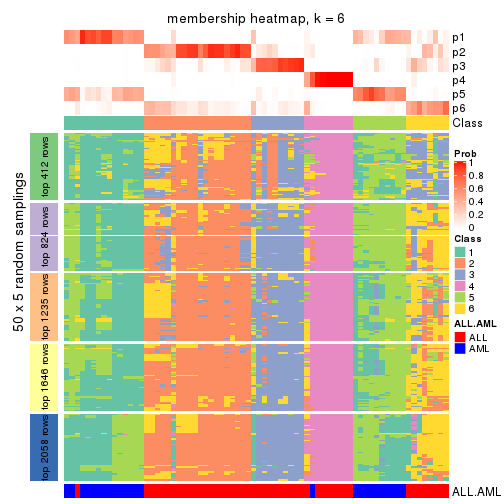

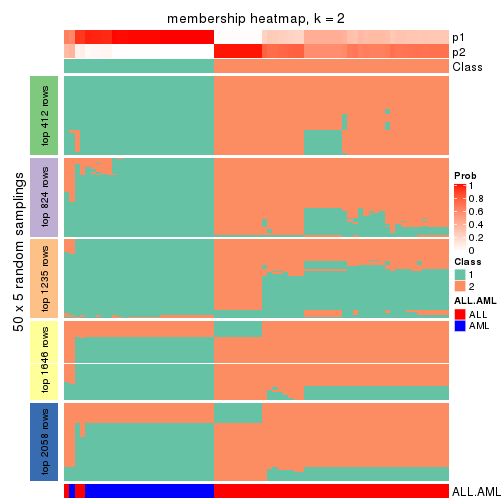

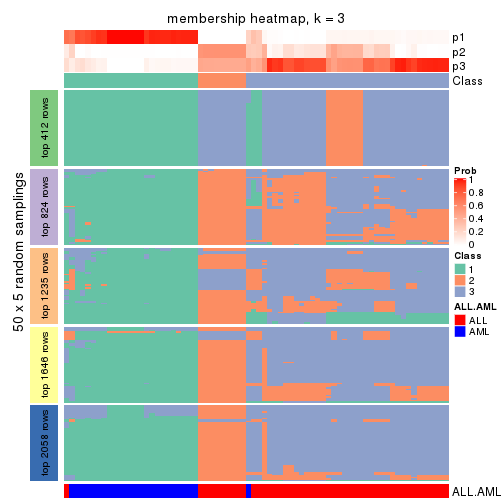

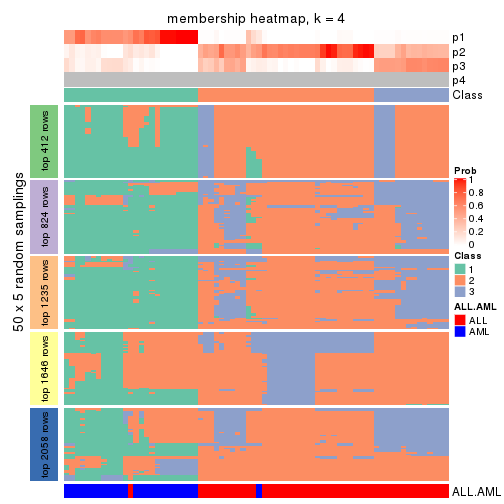

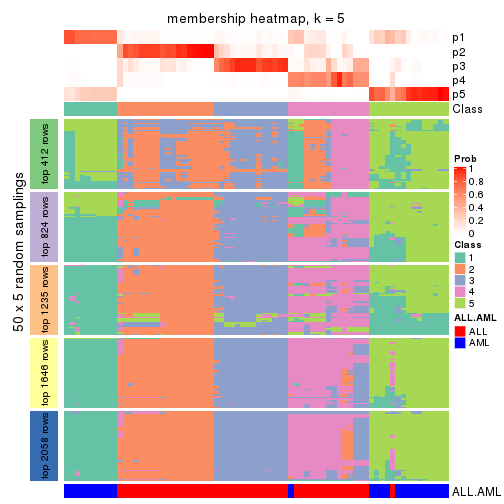

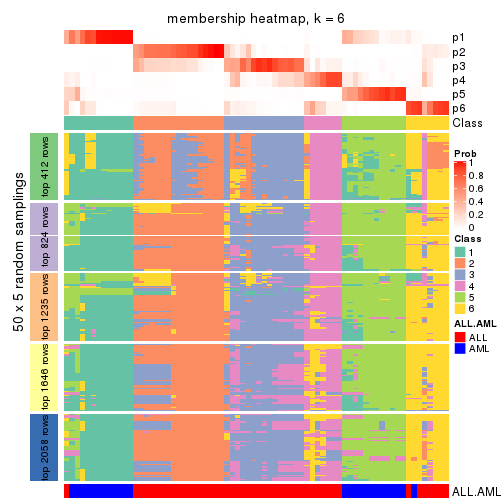

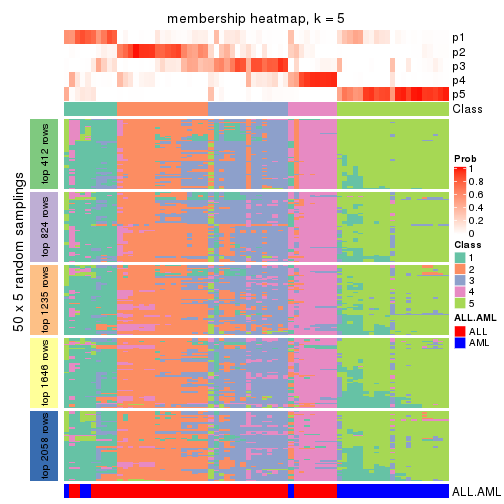

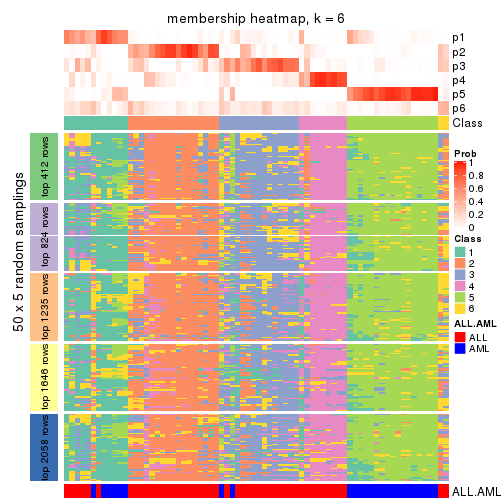

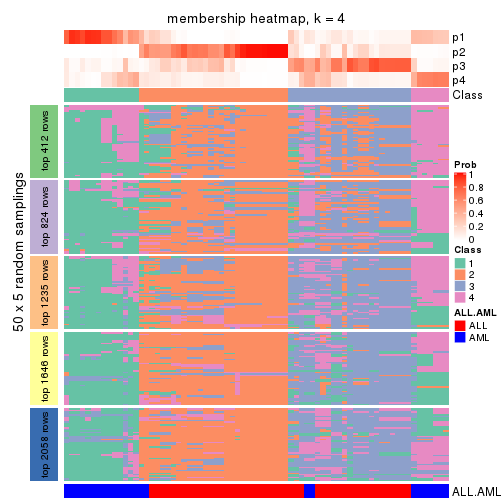

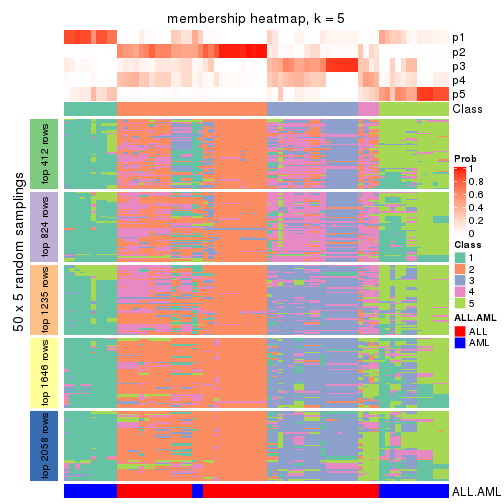

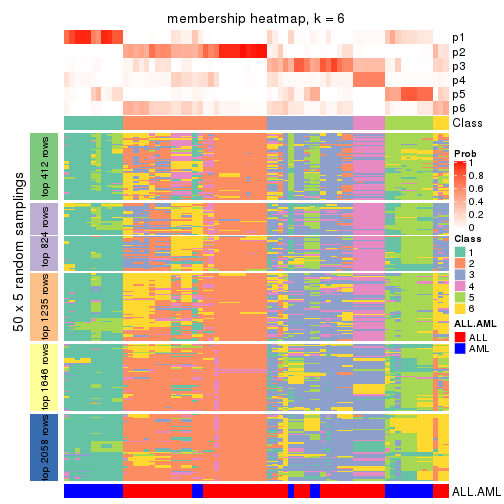

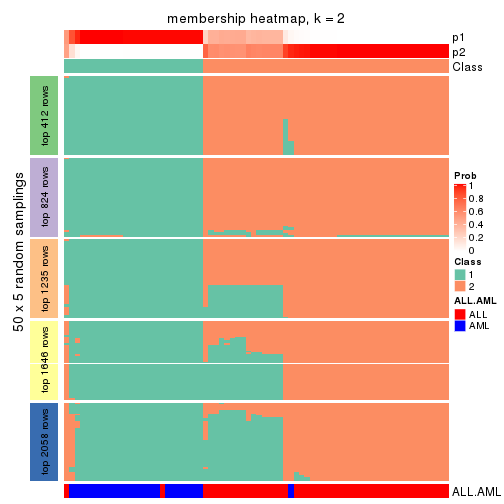

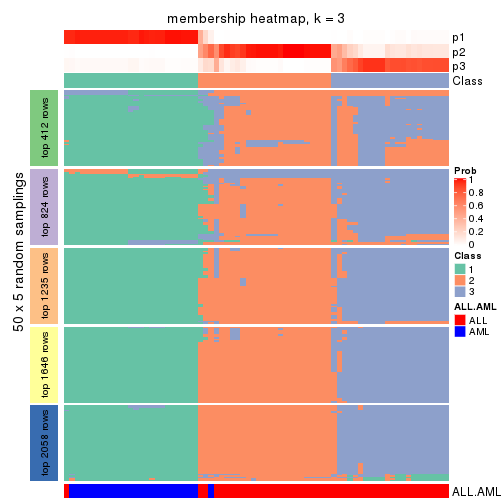

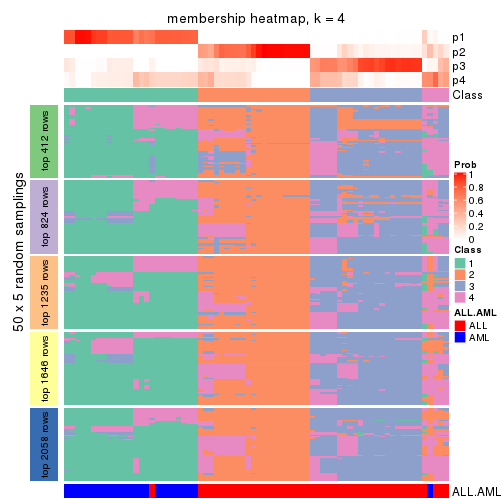

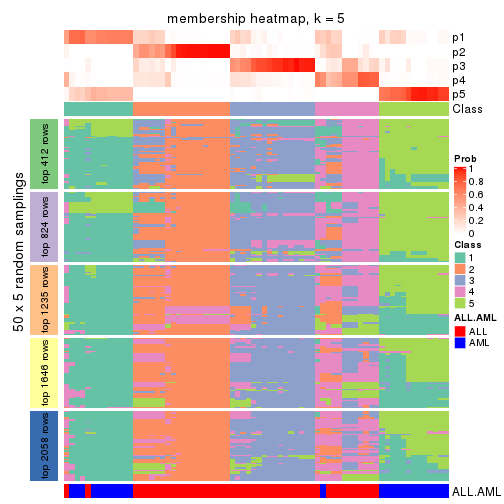

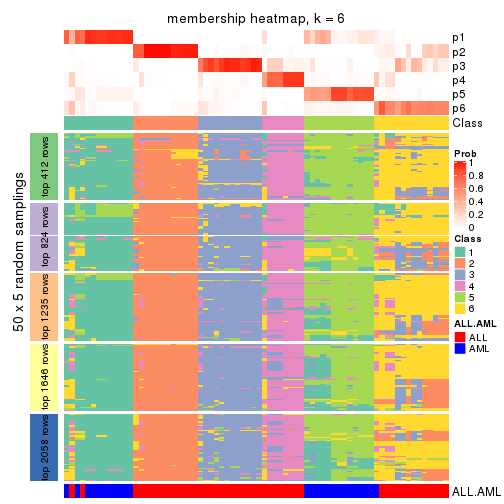

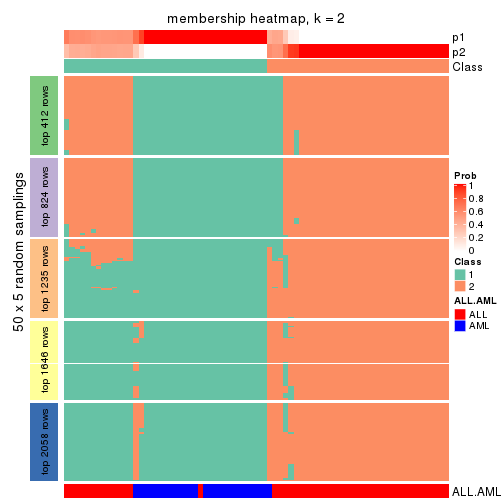

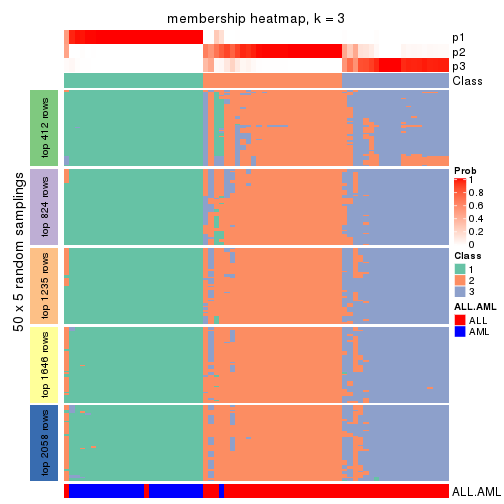

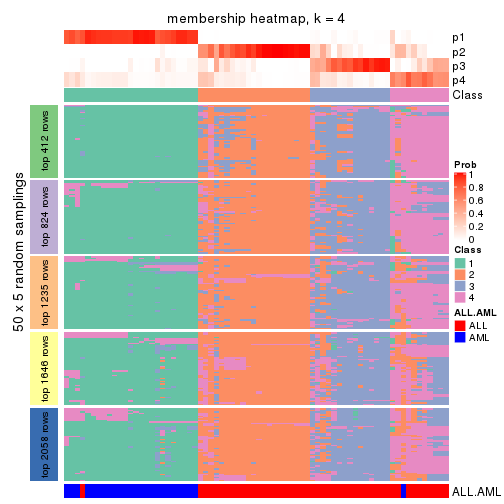

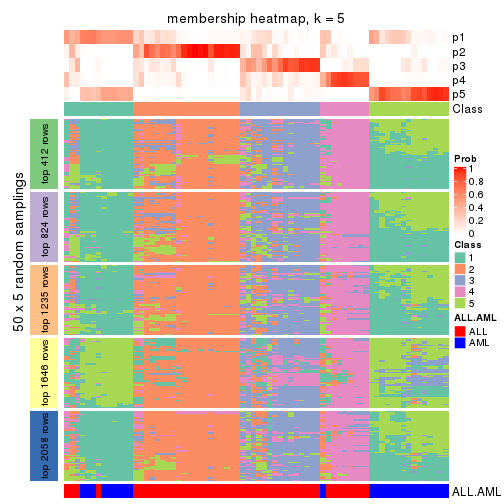

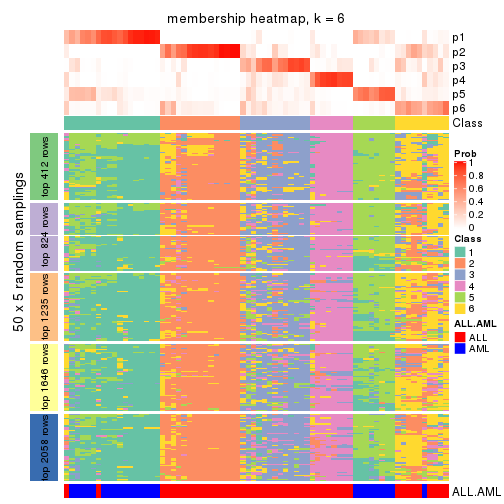

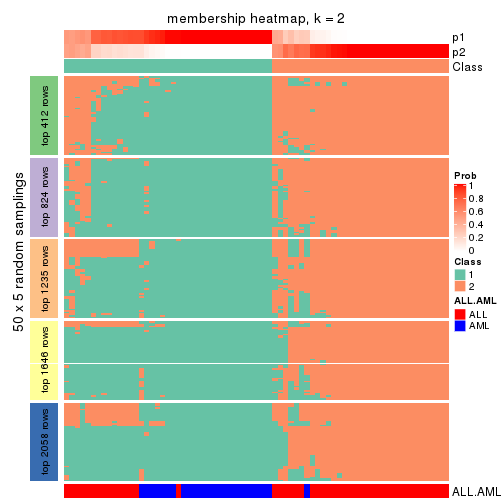

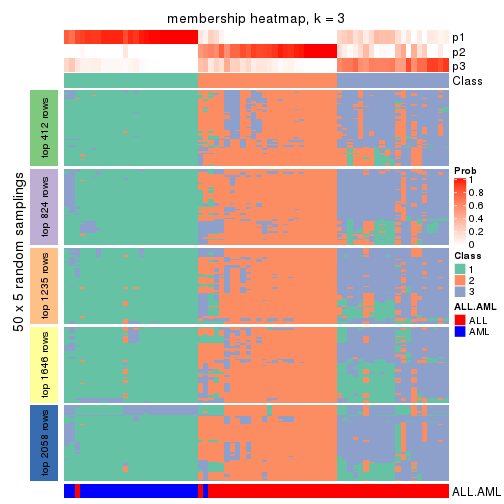

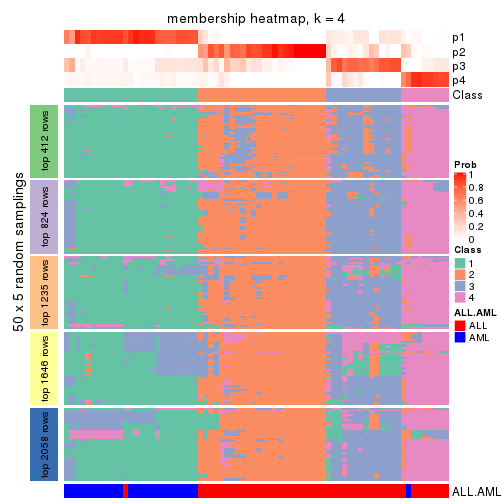

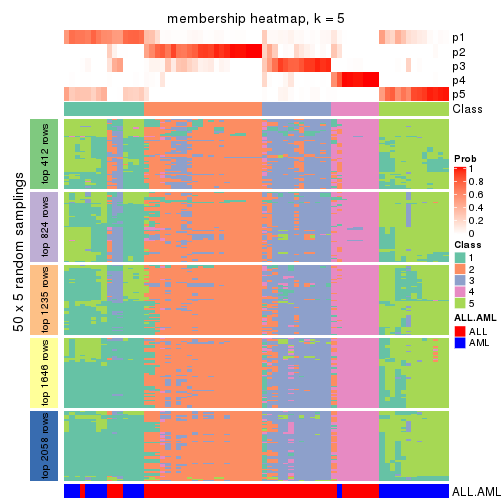

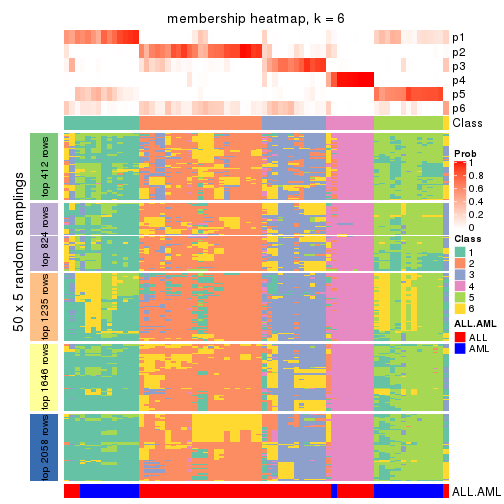

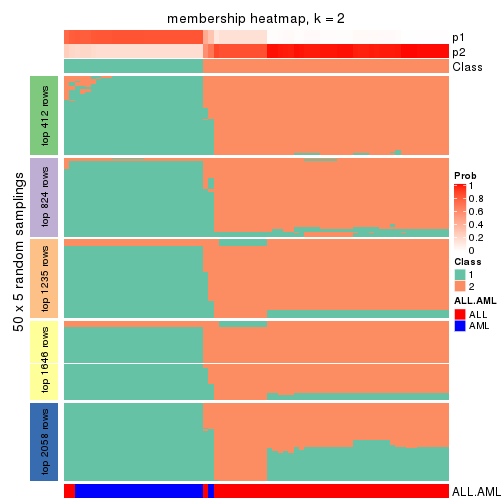

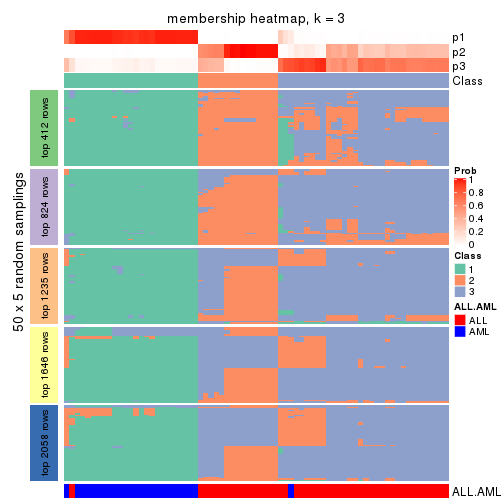

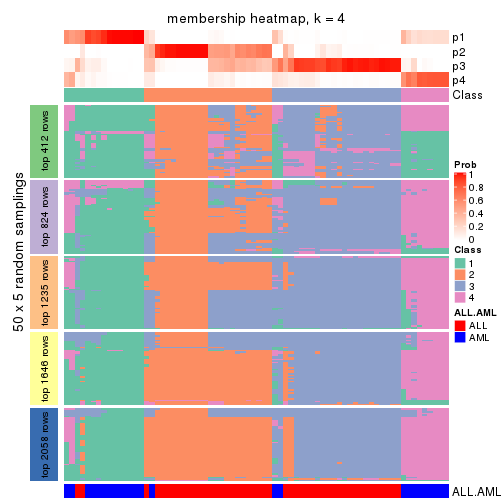

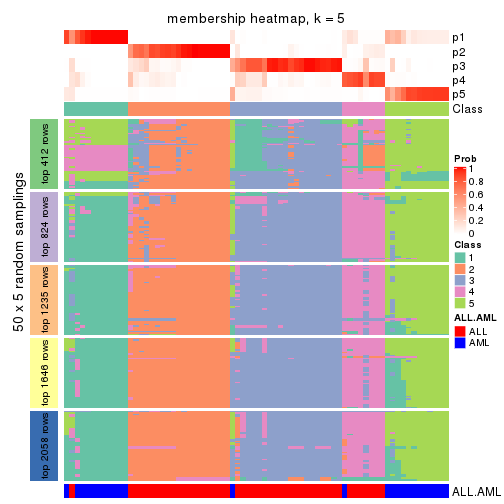

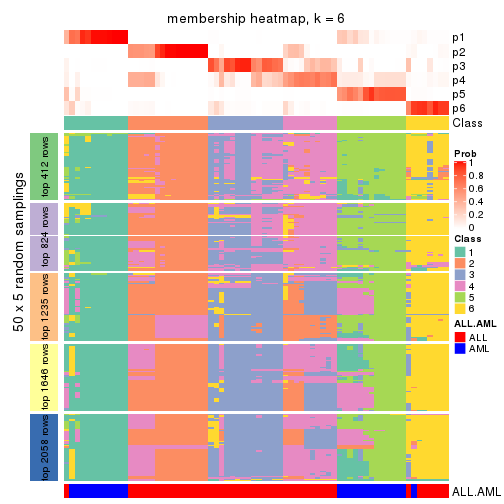

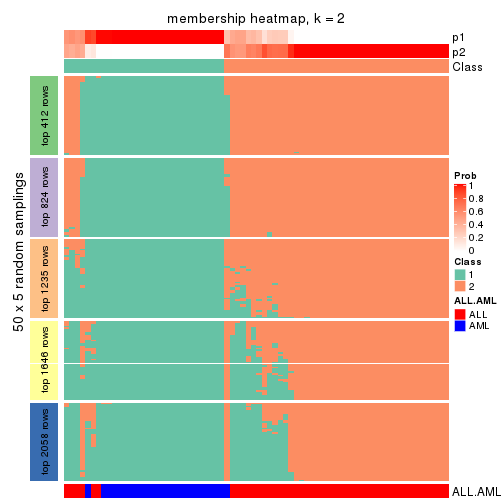

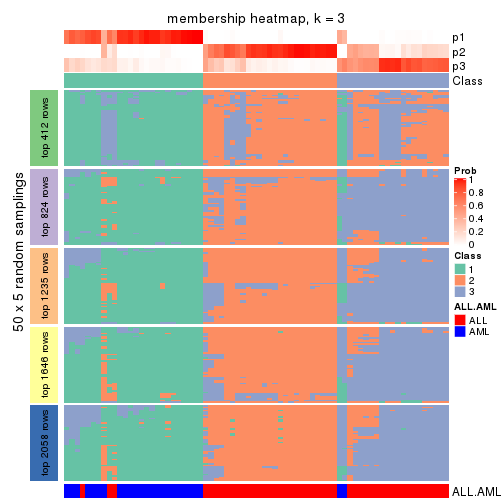

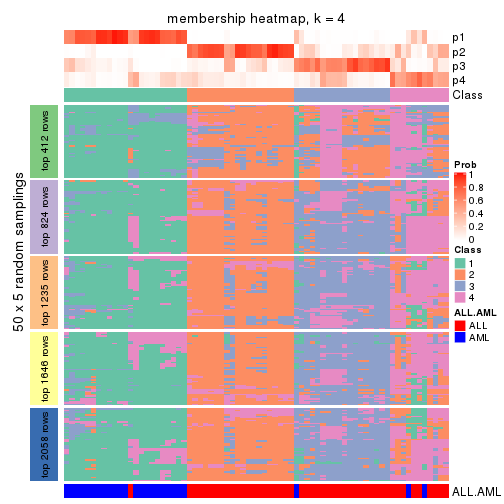

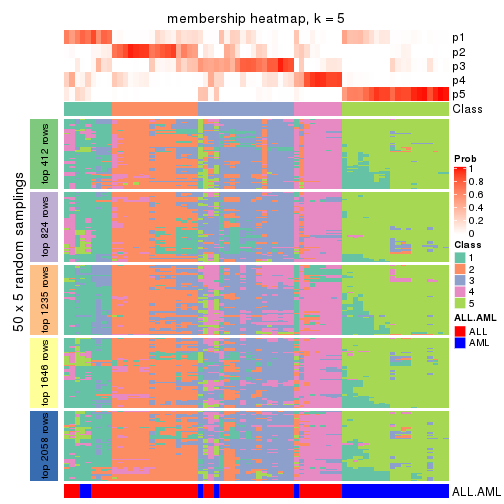

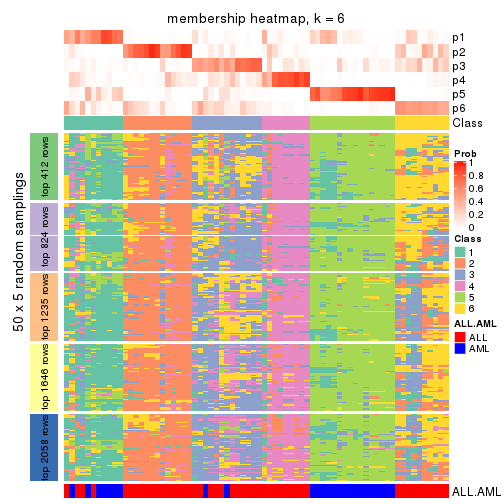

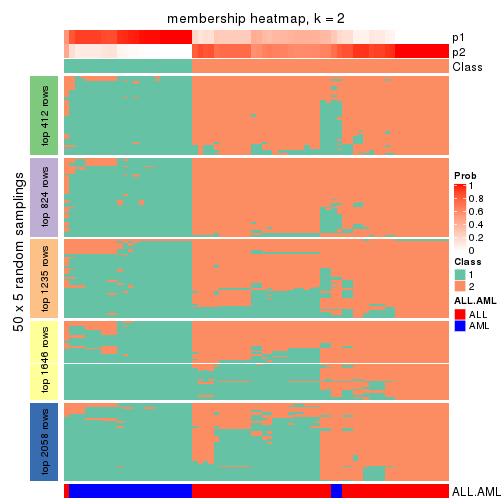

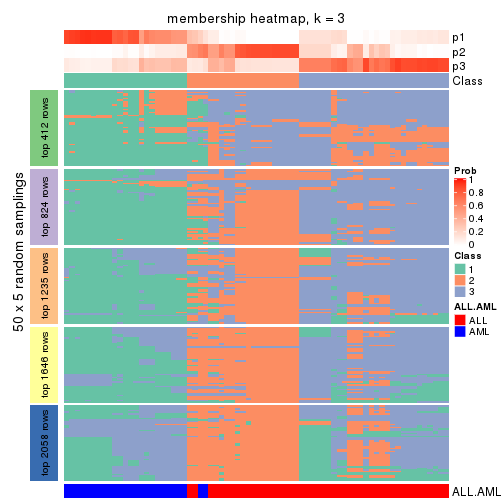

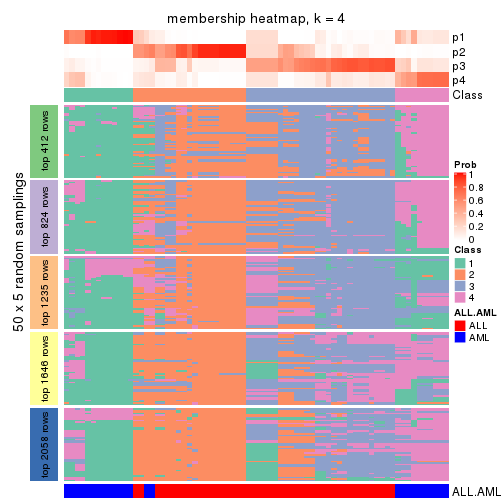

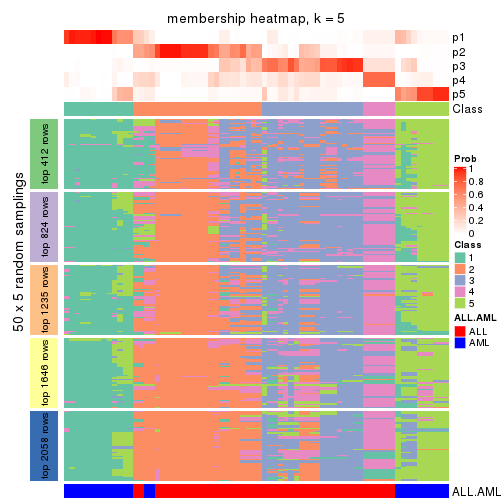

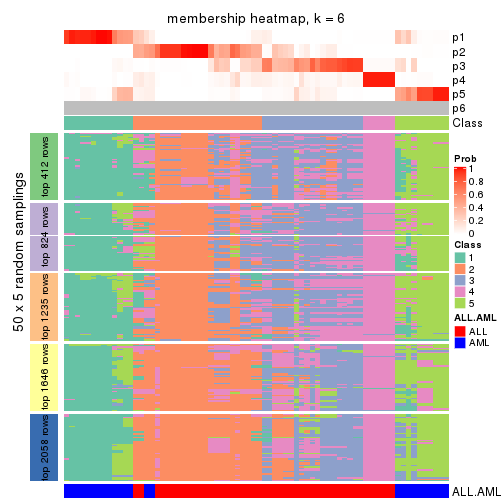

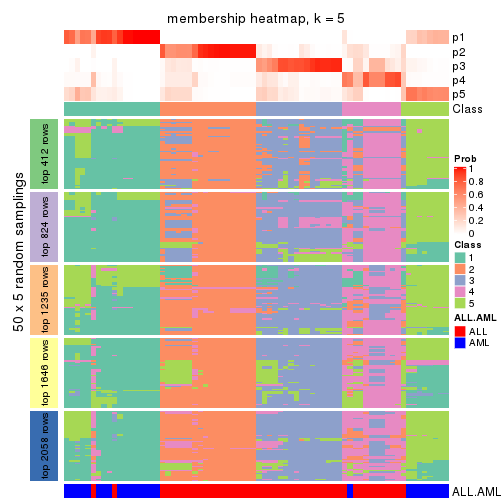

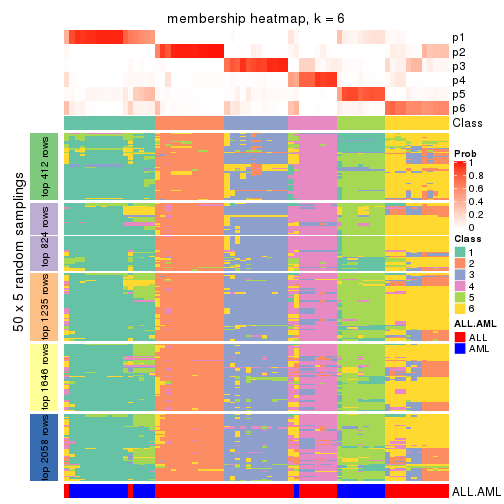

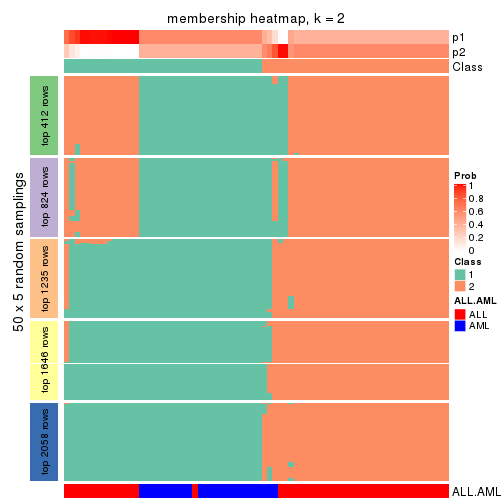

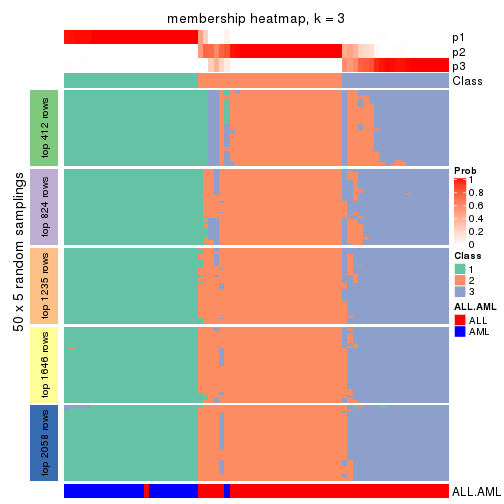

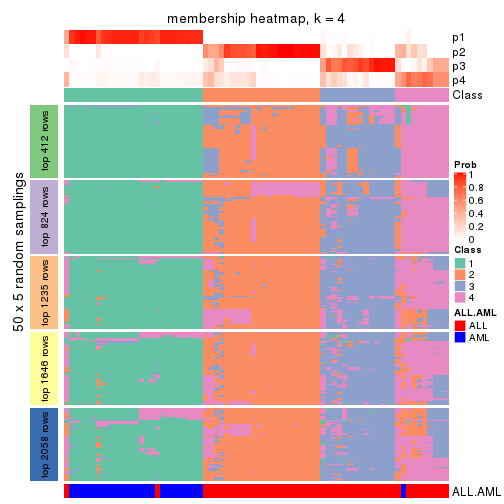

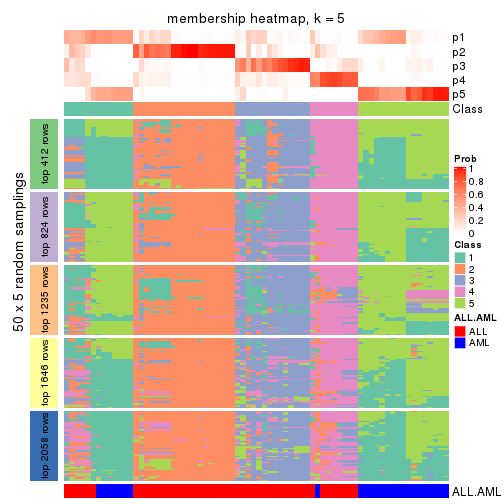

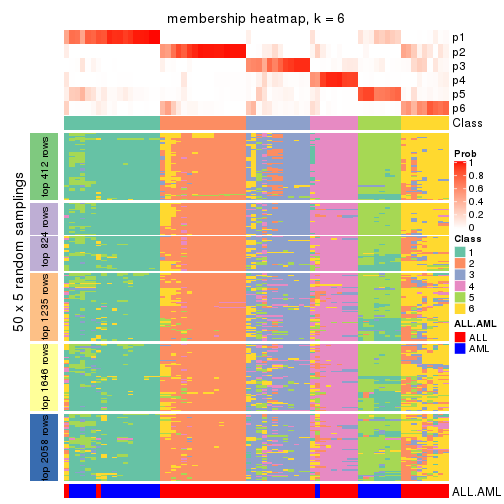

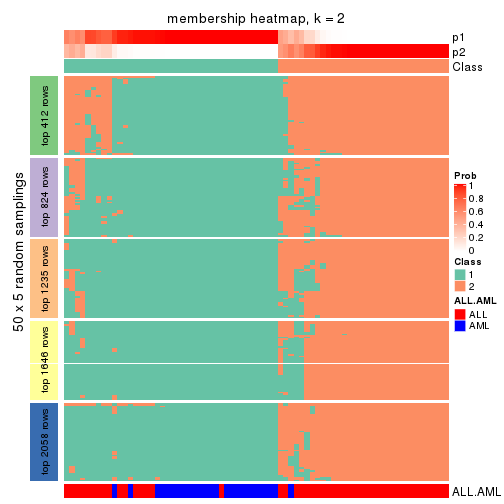

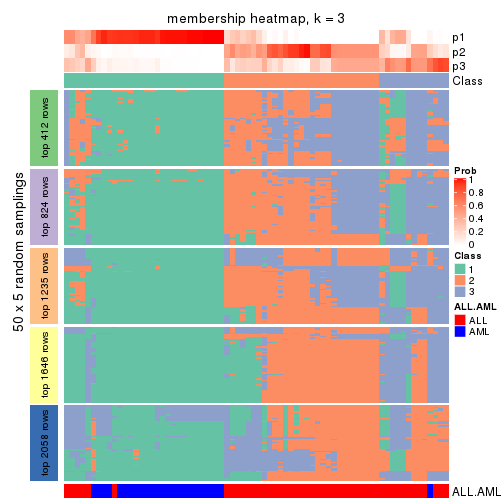

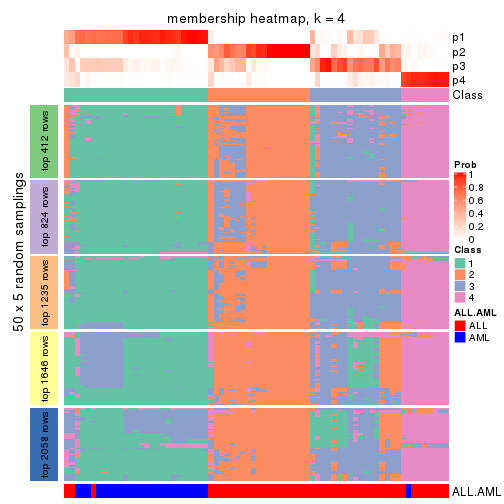

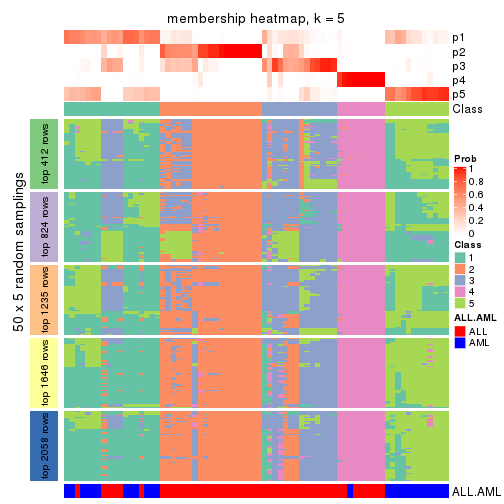

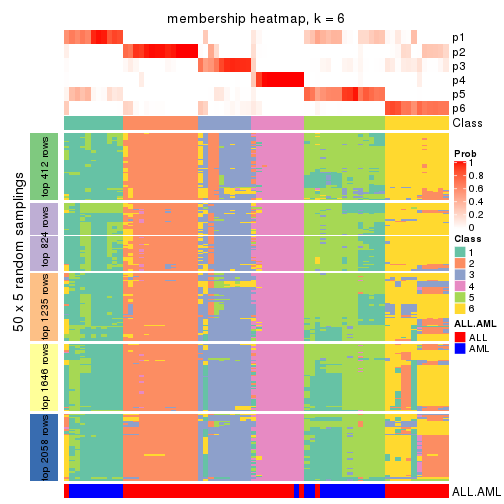

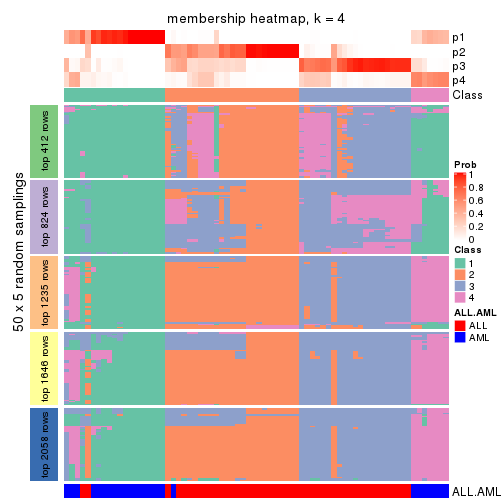

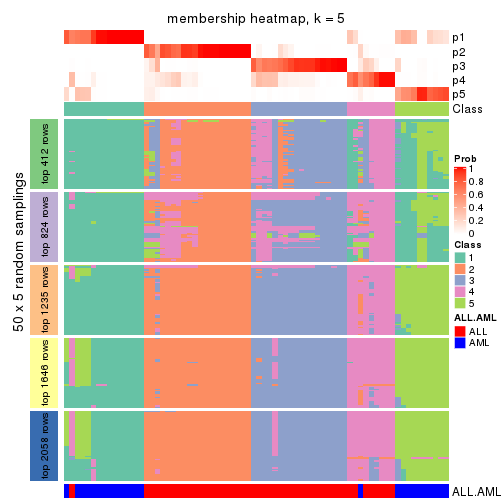

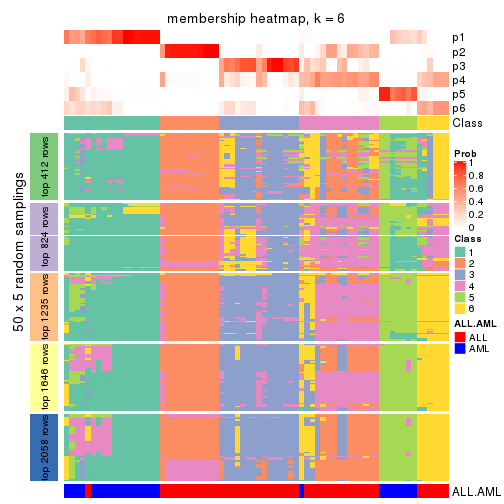

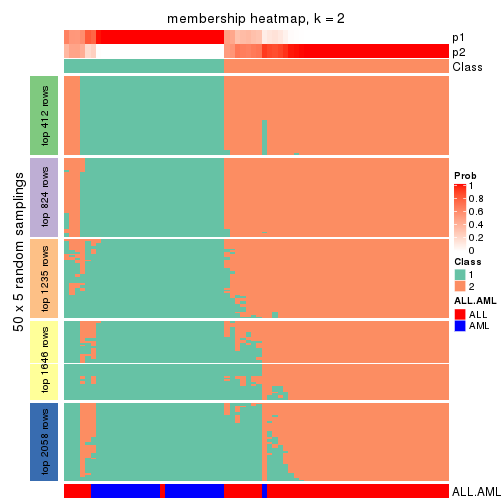

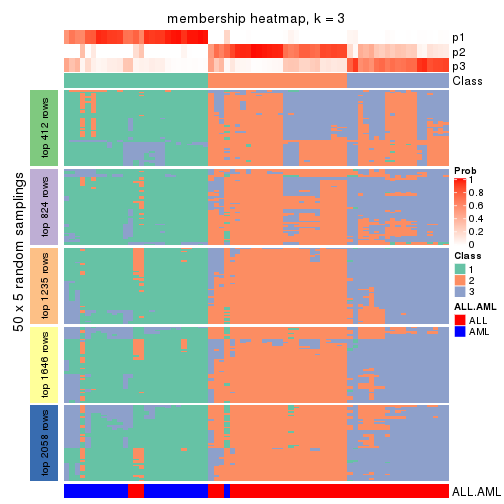

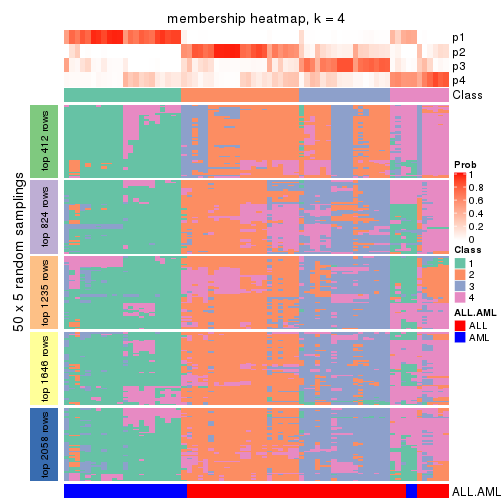

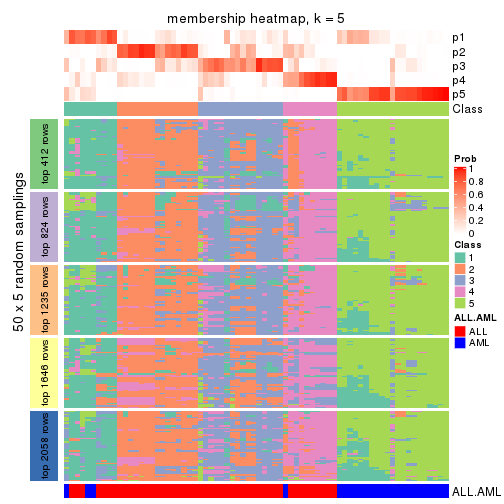

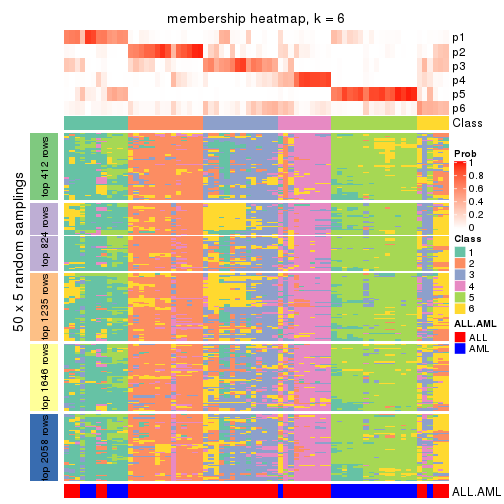

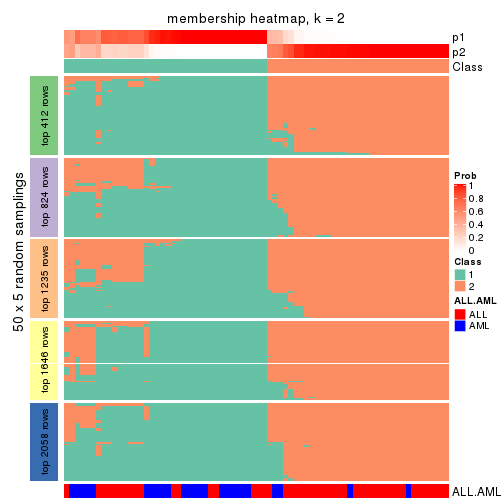

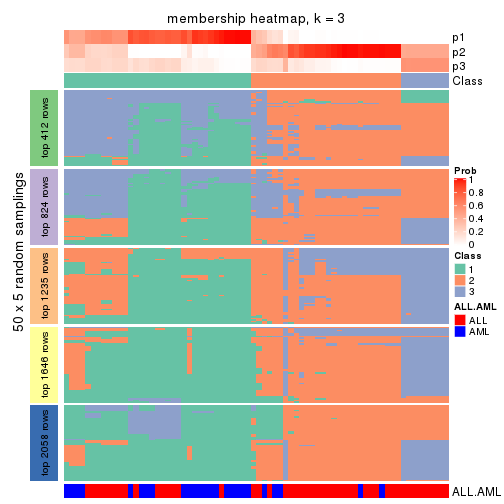

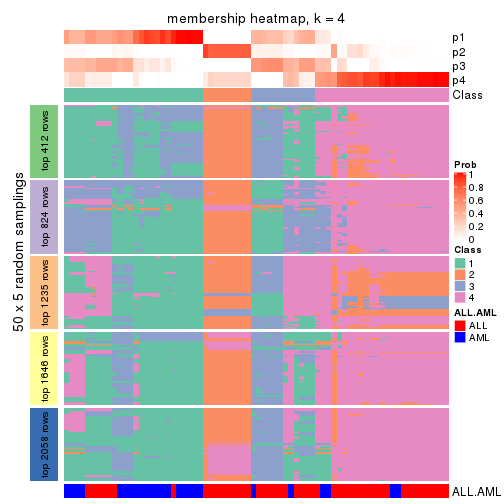

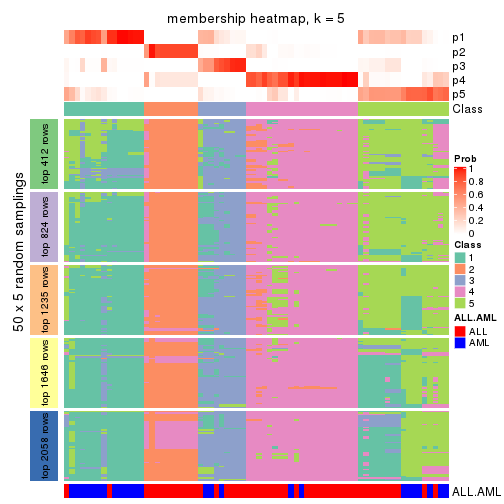

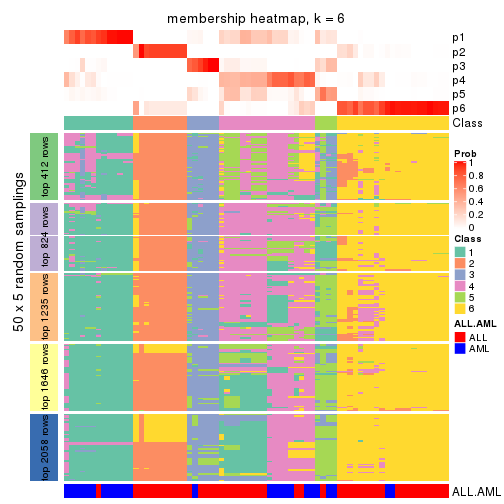

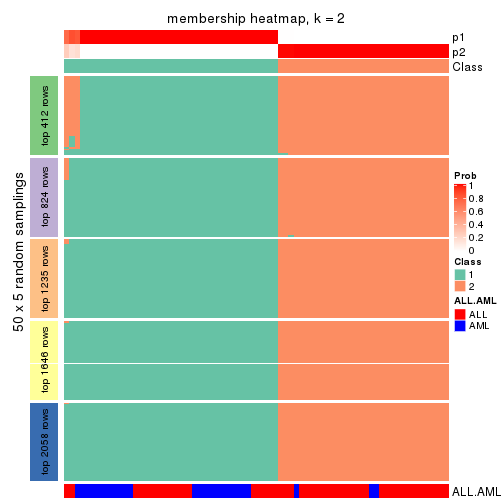

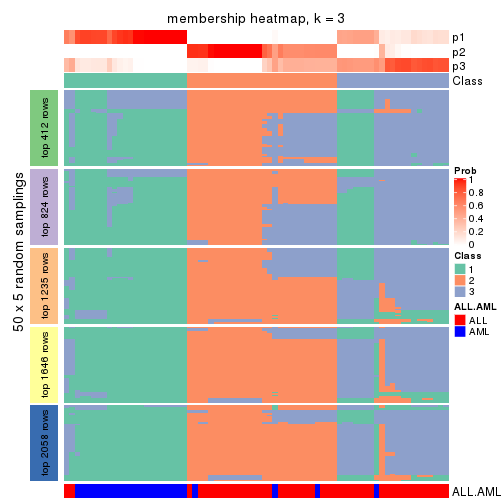

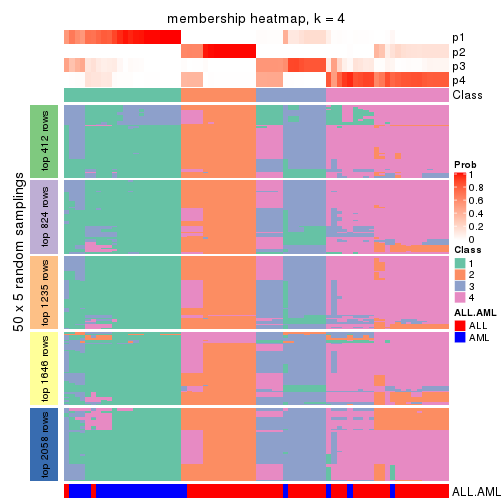

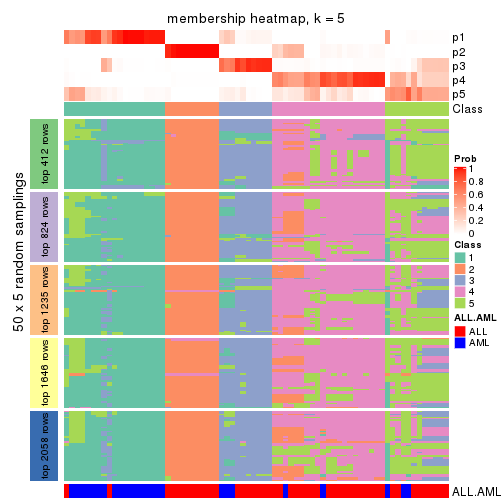

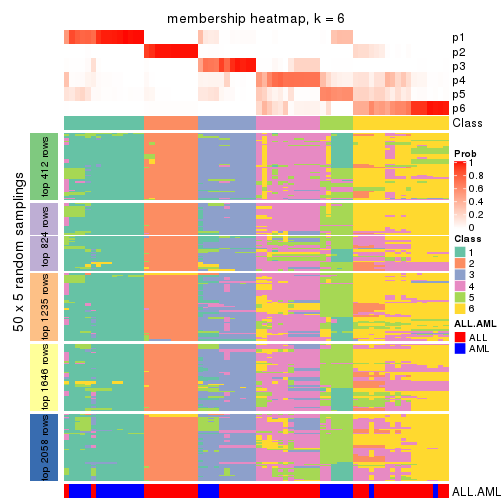

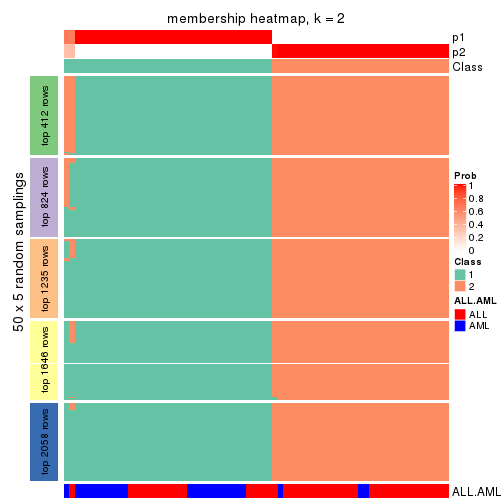

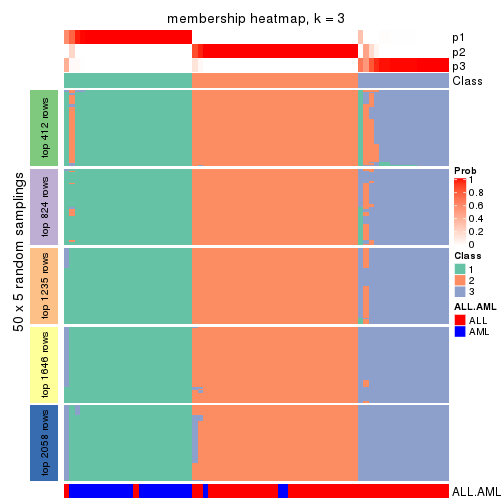

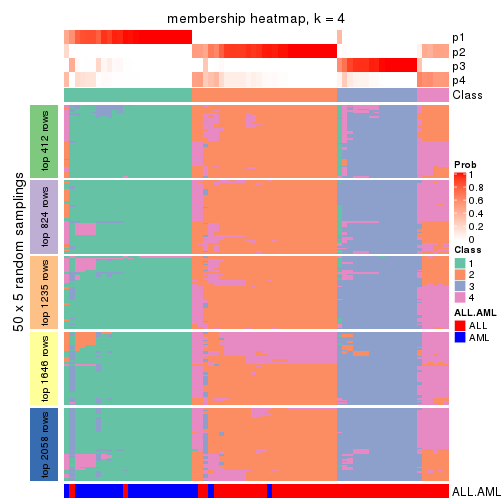

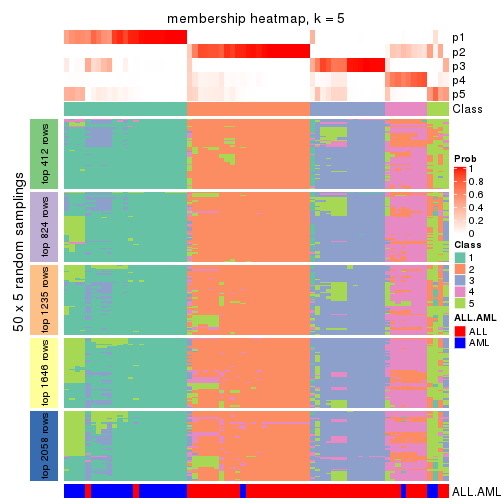

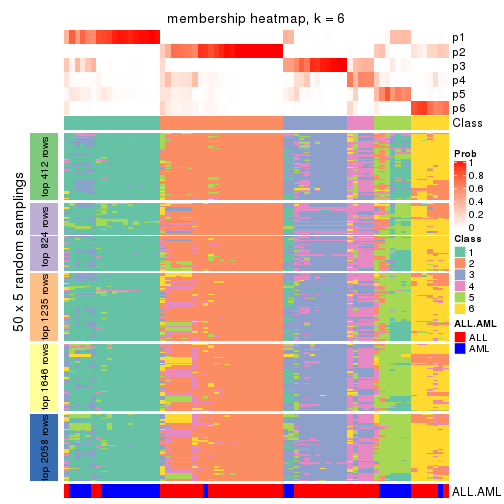

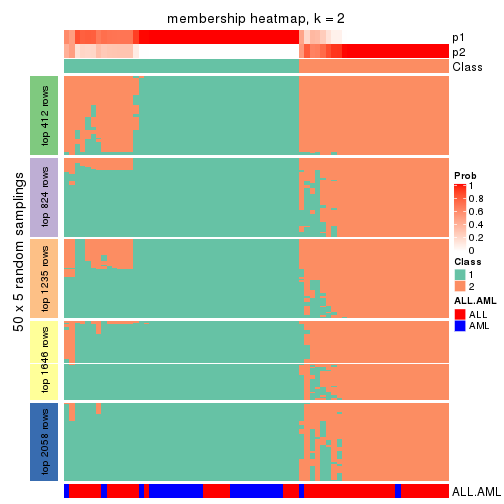

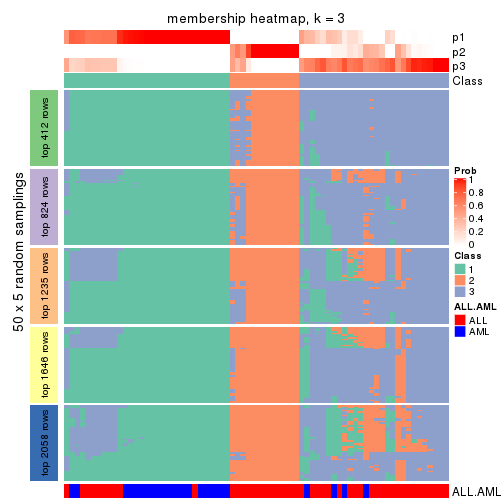

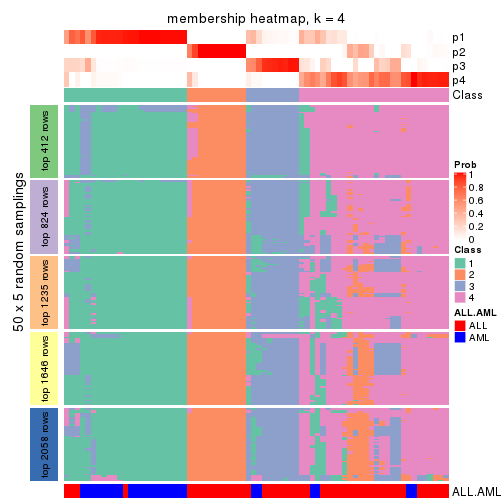

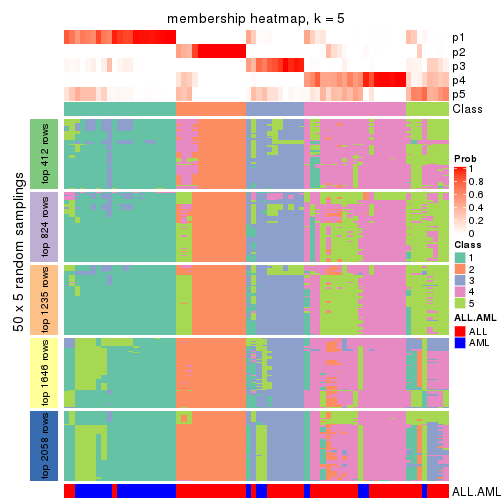

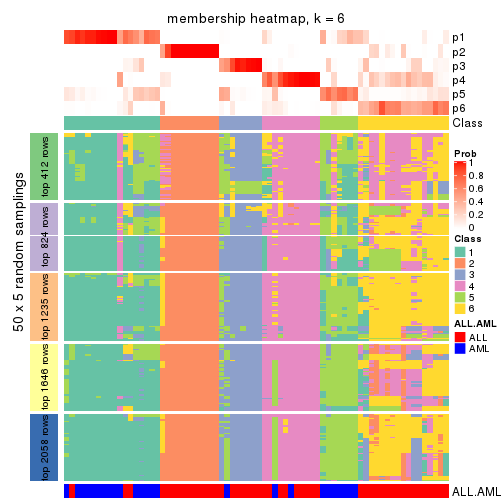

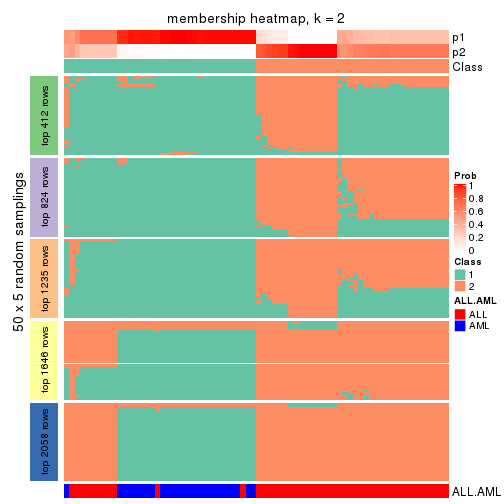

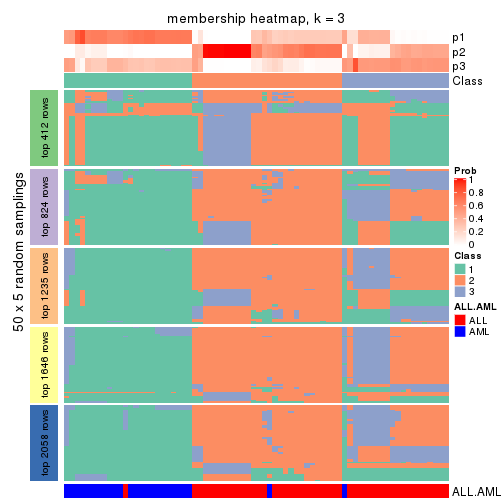

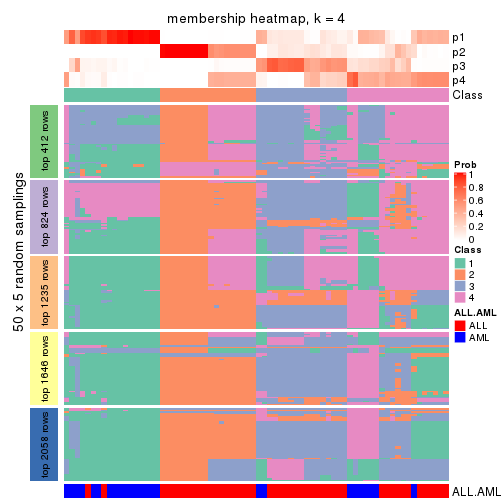

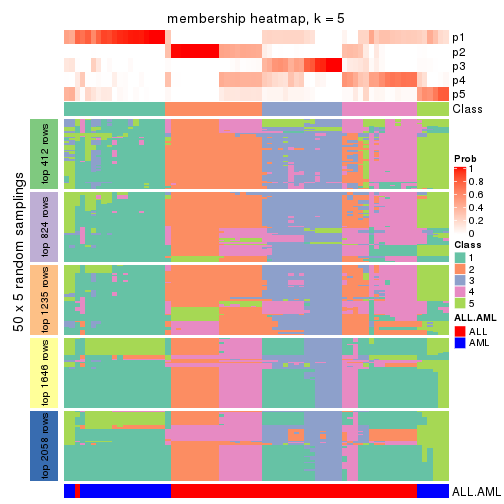

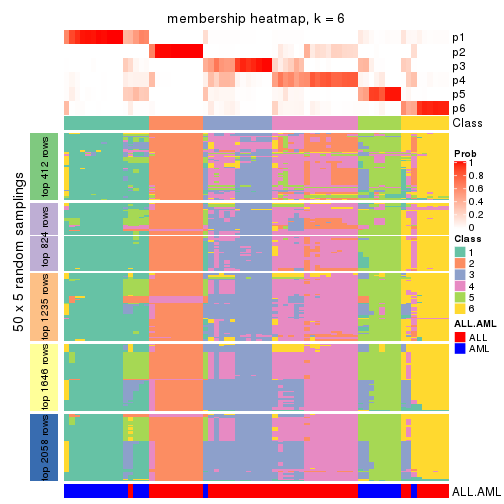

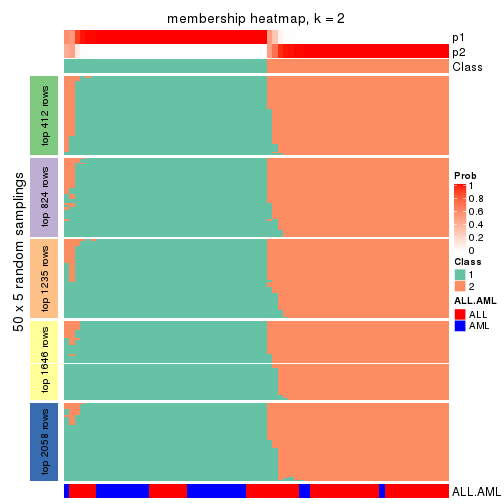

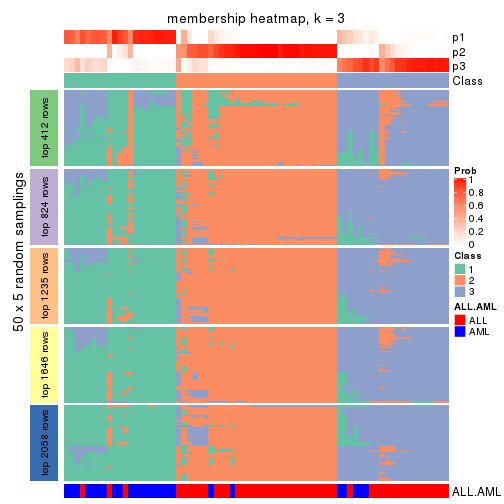

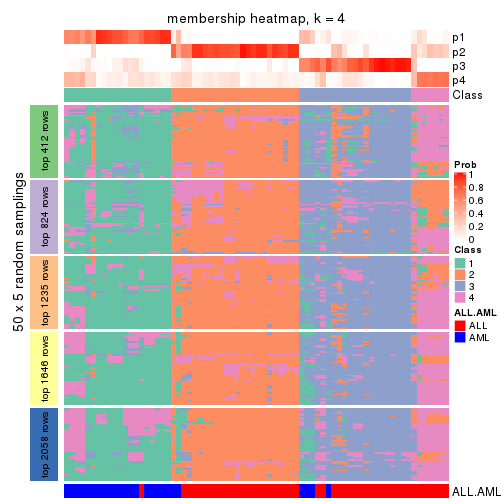

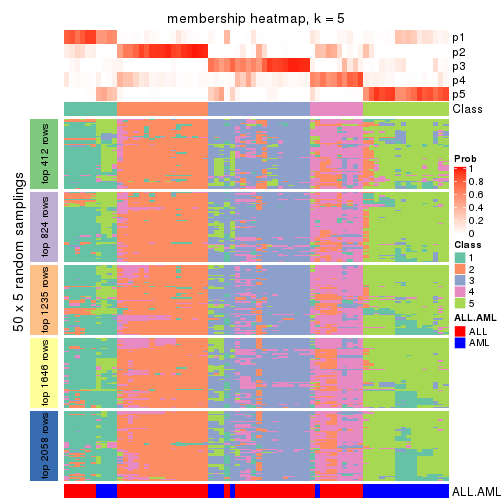

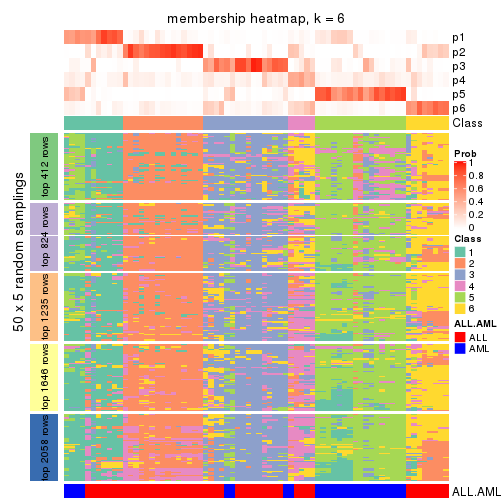

Membership heatmaps for all methods.

collect_plots(res_list, k = 2, fun = membership_heatmap, mc.cores = 4)

collect_plots(res_list, k = 3, fun = membership_heatmap, mc.cores = 4)

collect_plots(res_list, k = 4, fun = membership_heatmap, mc.cores = 4)

collect_plots(res_list, k = 5, fun = membership_heatmap, mc.cores = 4)

collect_plots(res_list, k = 6, fun = membership_heatmap, mc.cores = 4)

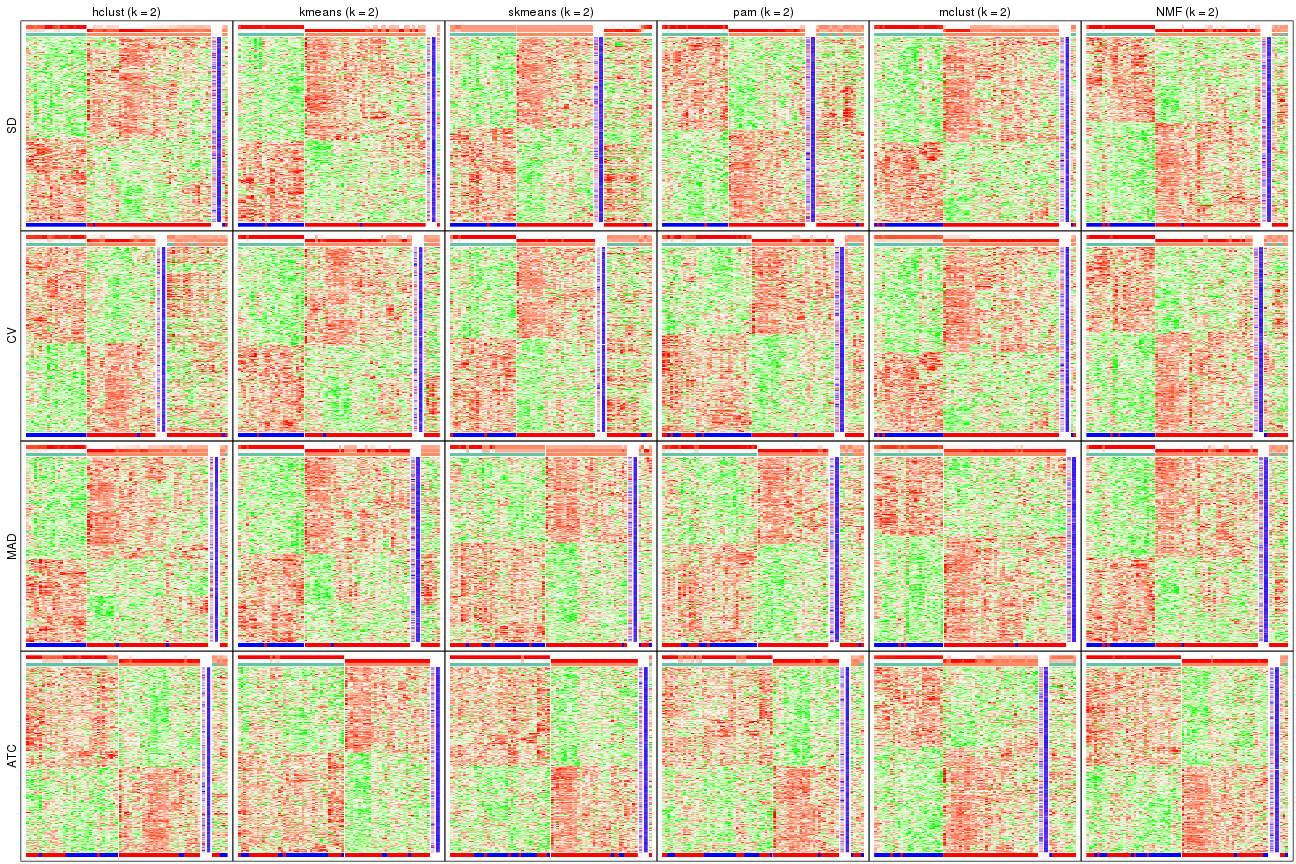

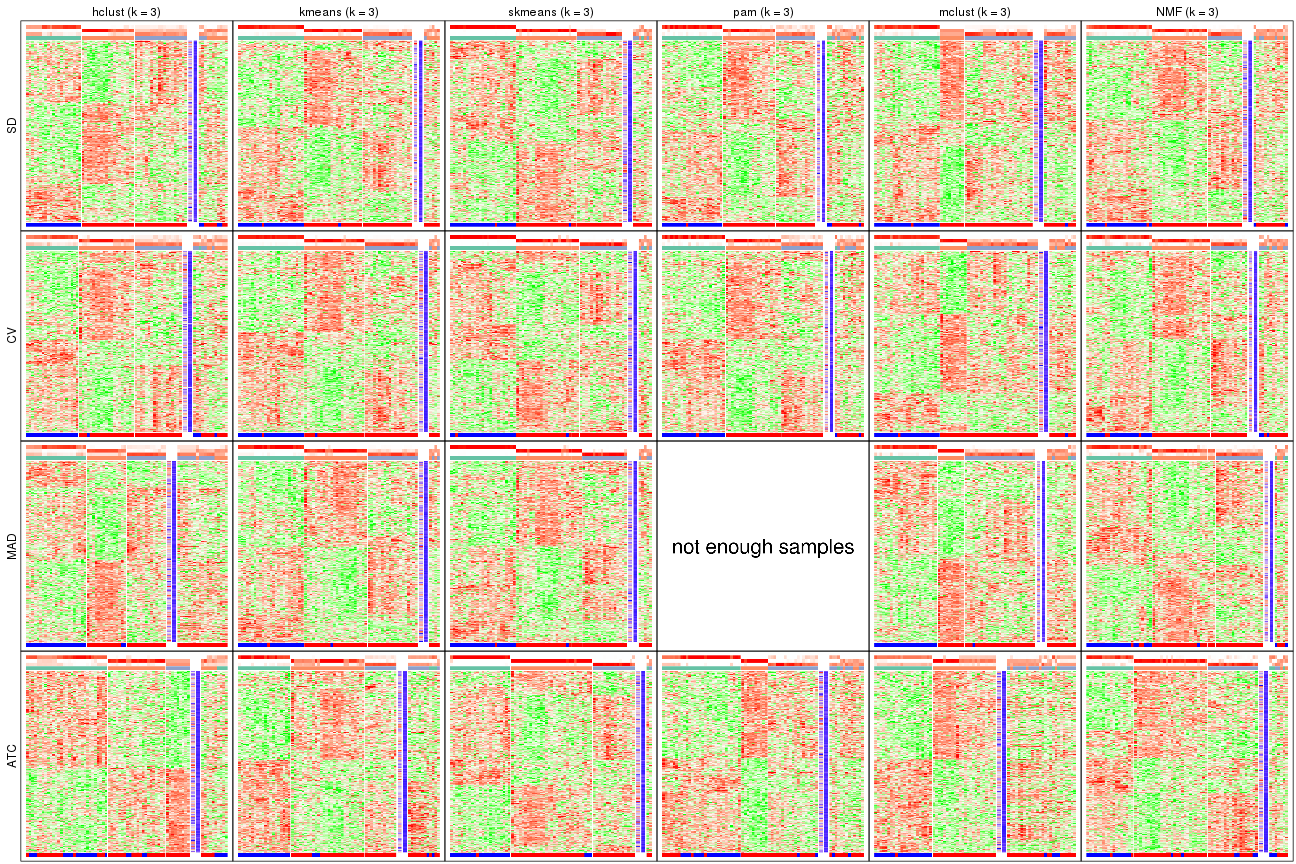

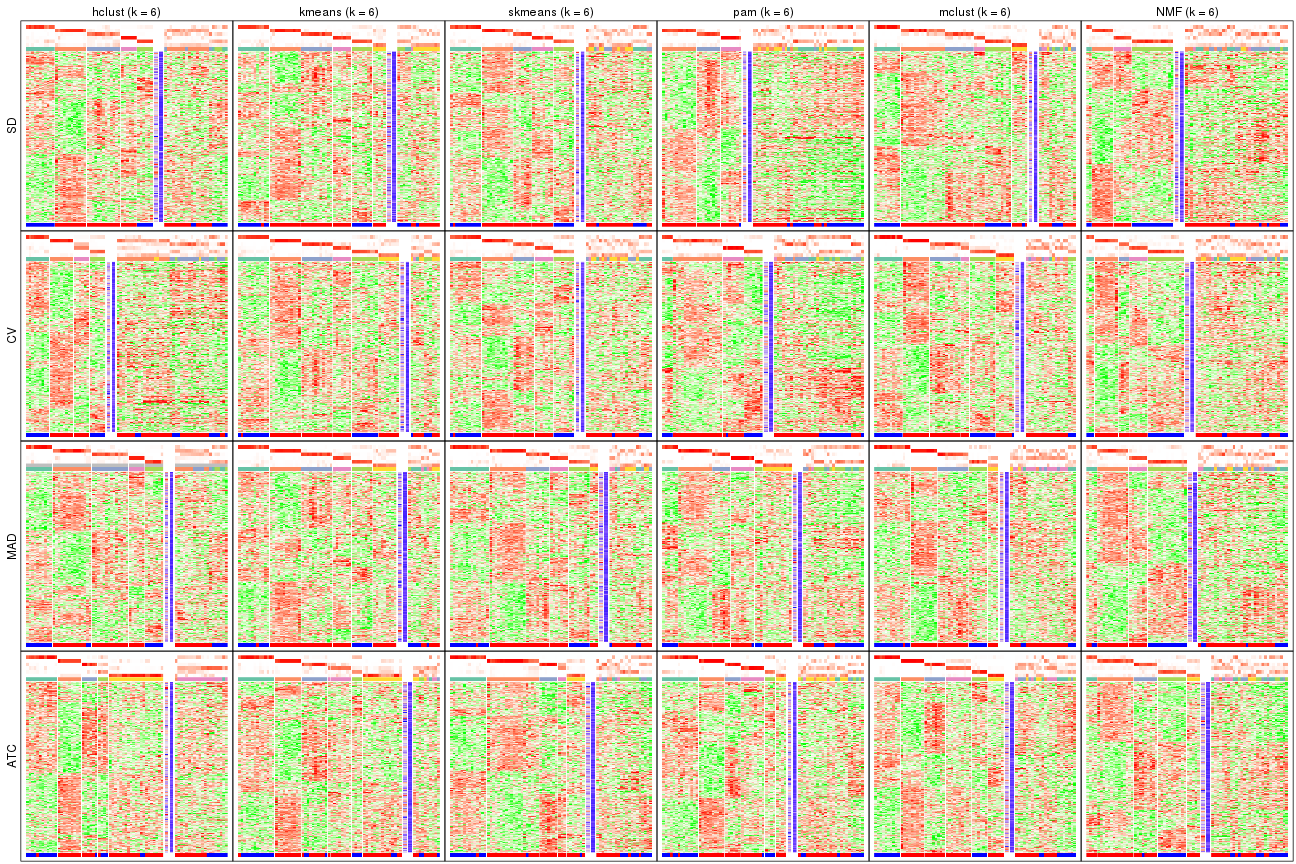

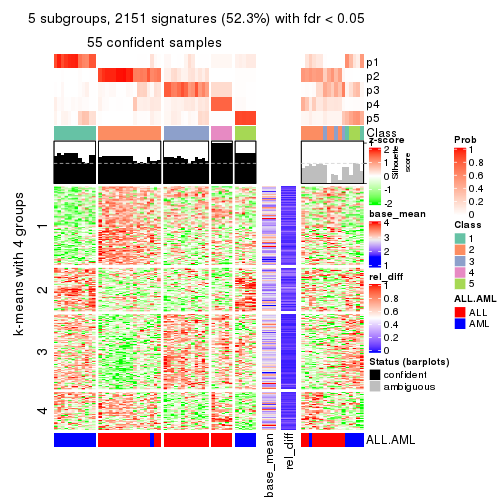

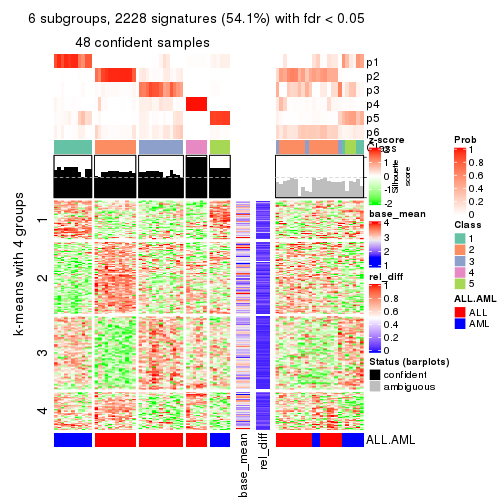

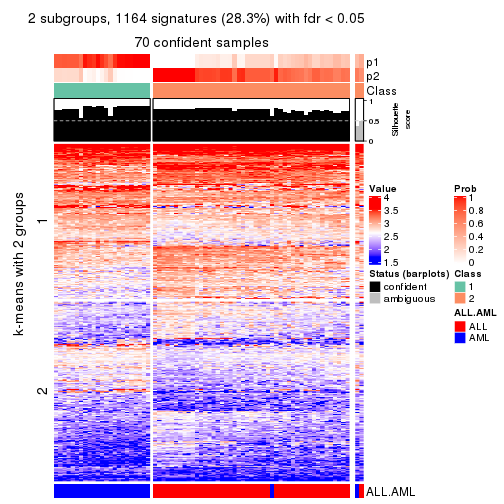

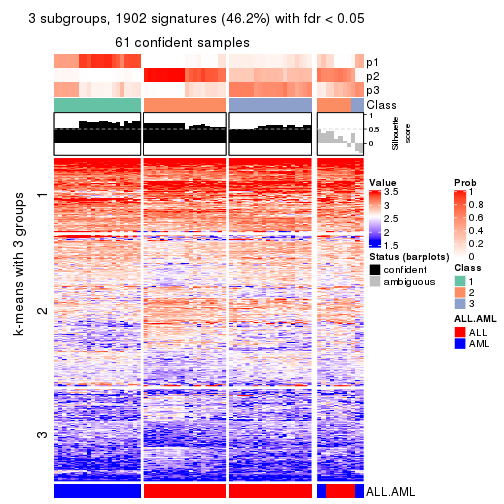

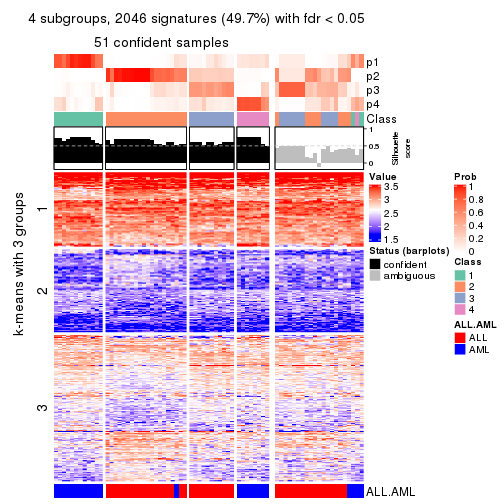

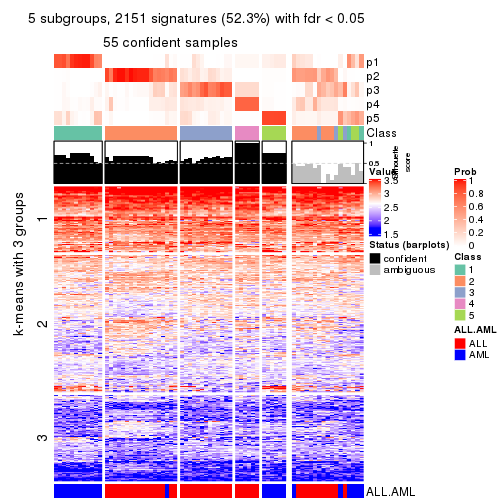

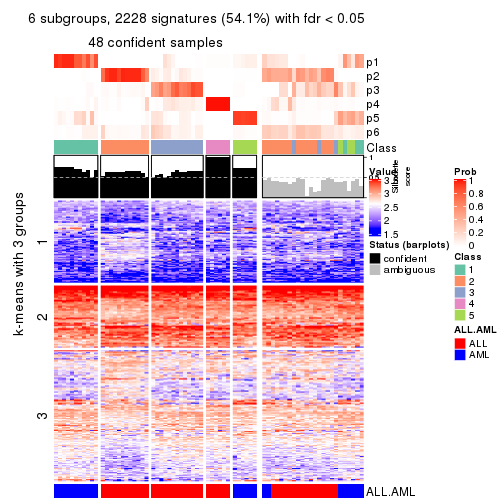

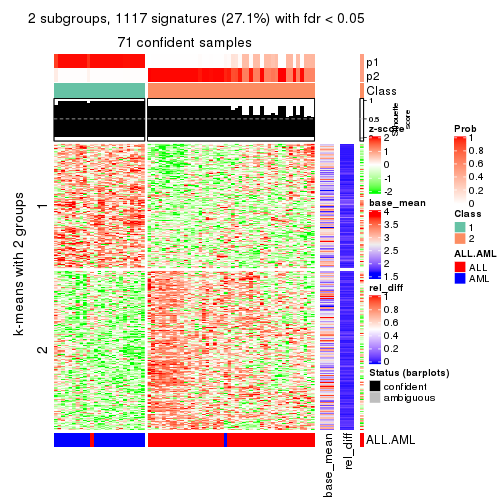

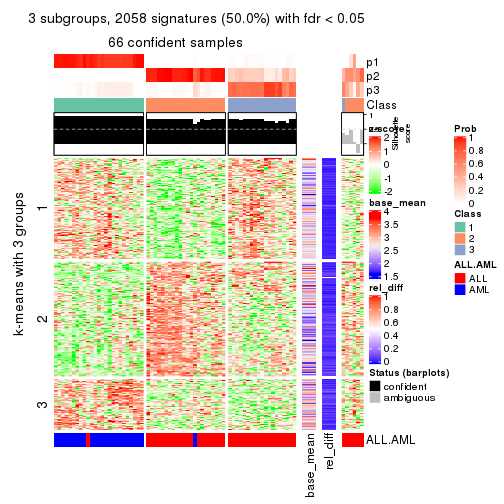

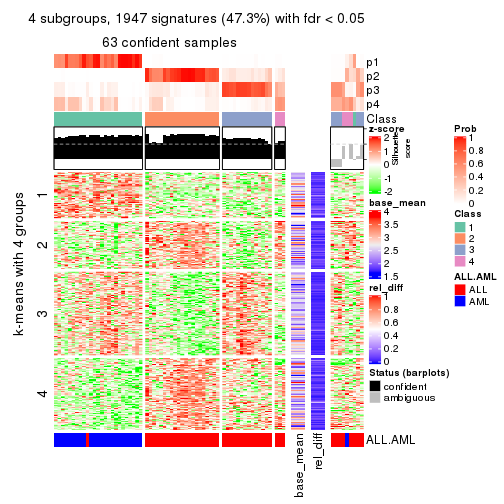

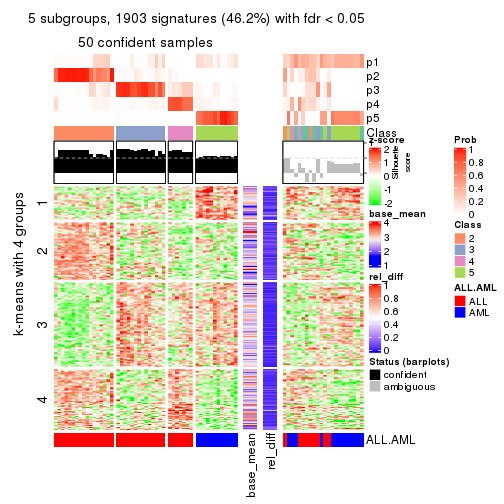

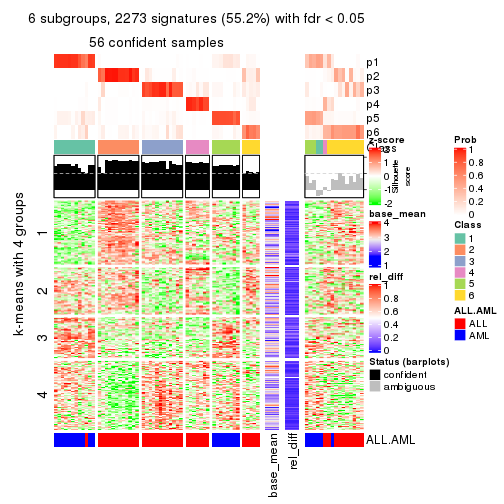

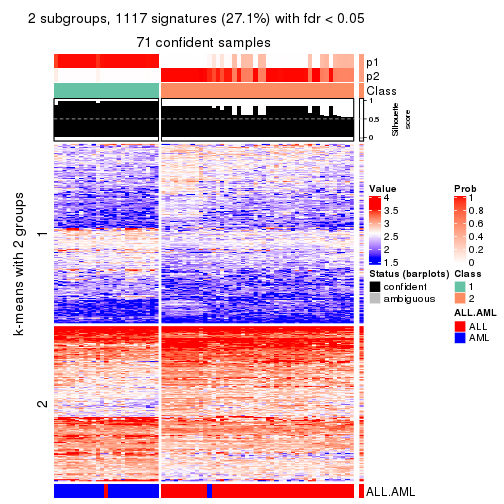

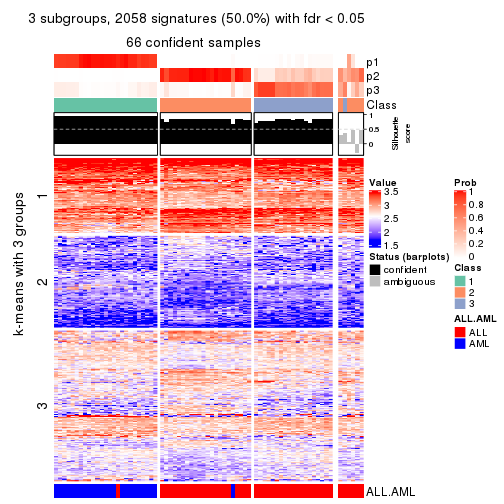

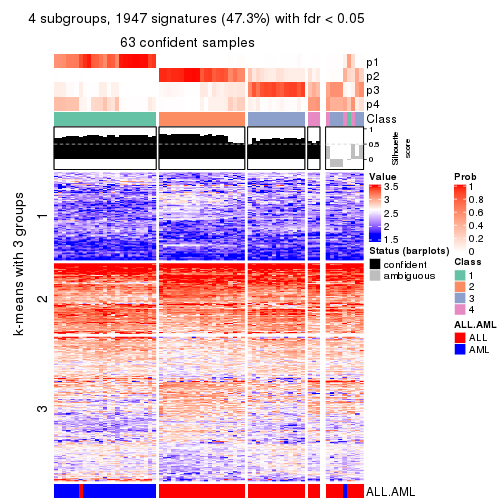

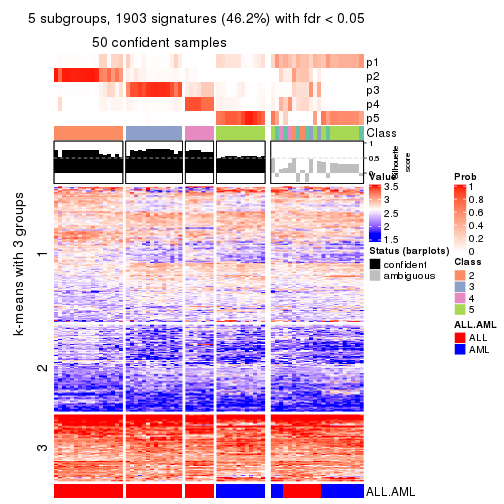

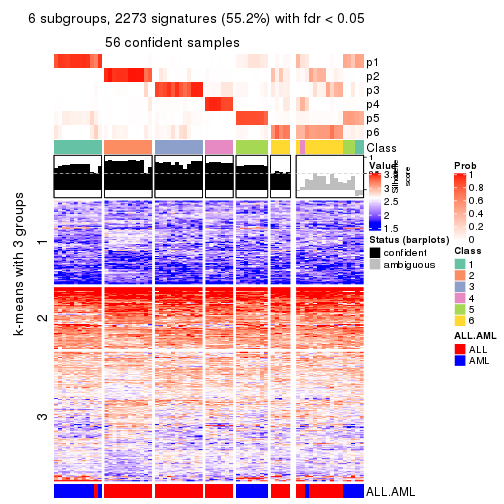

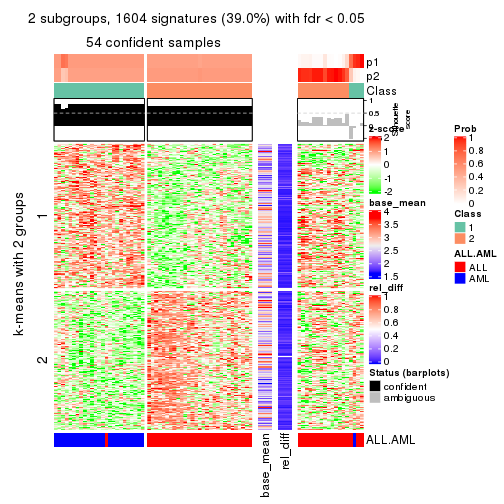

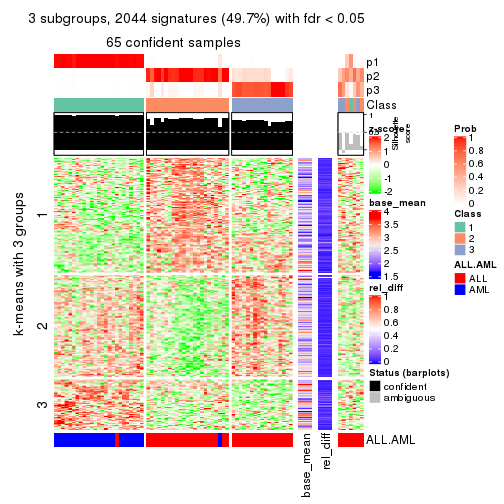

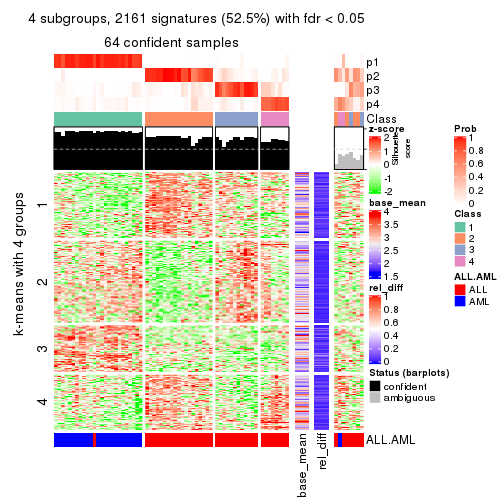

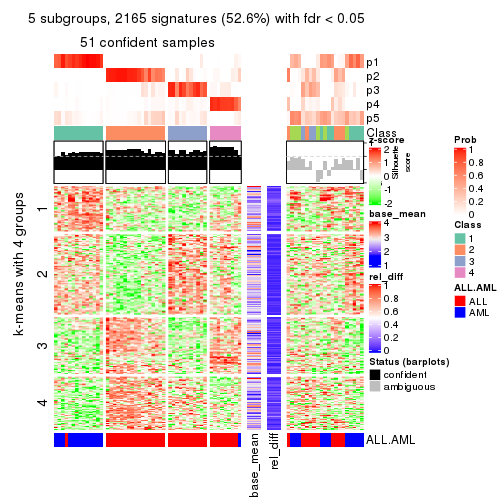

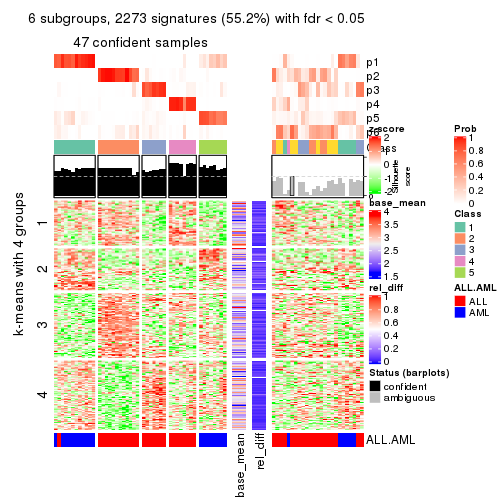

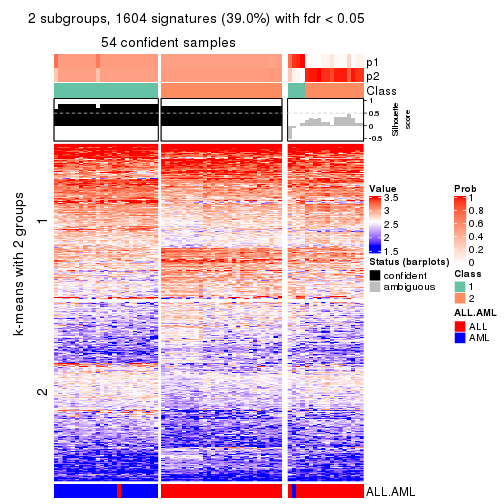

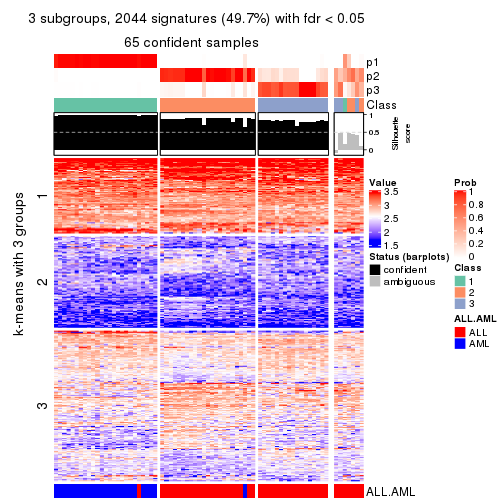

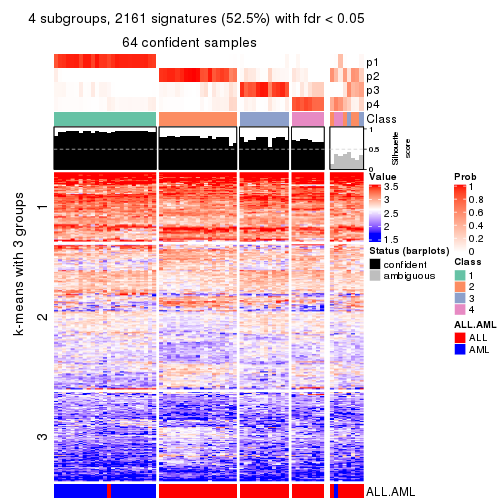

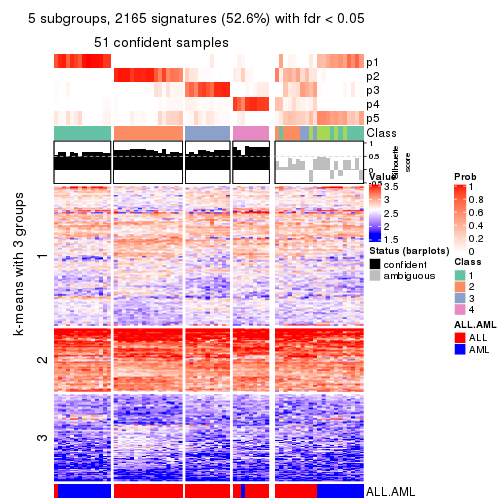

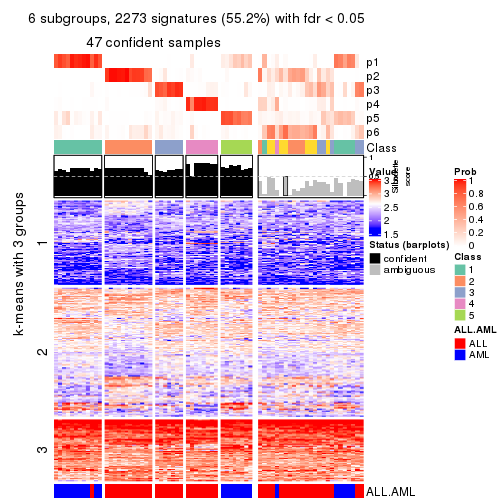

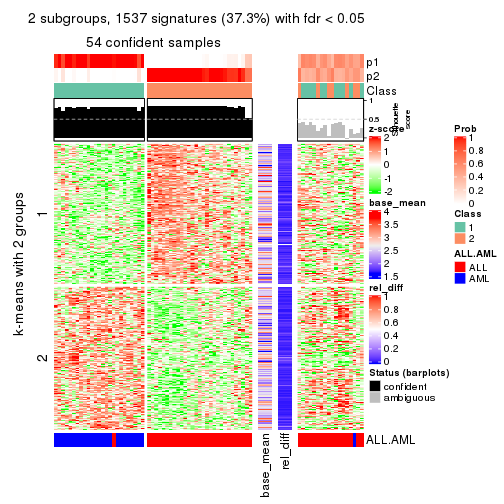

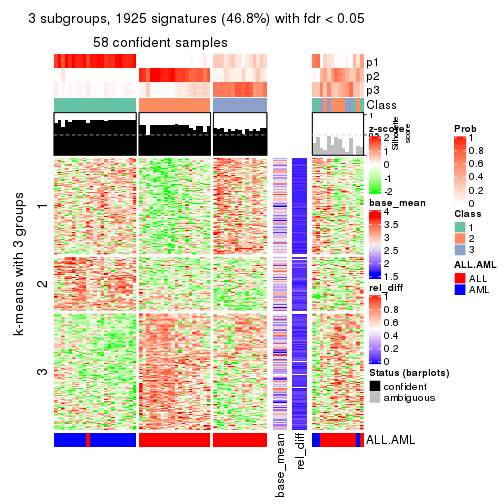

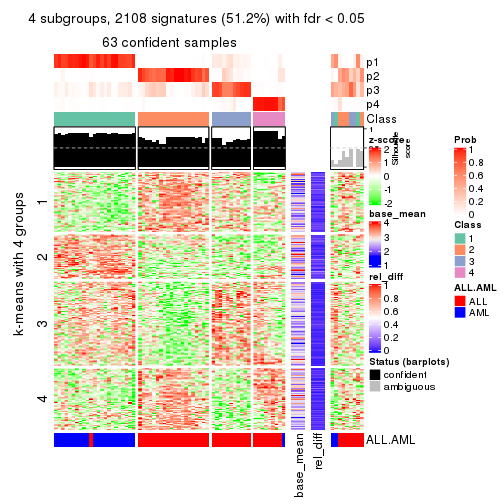

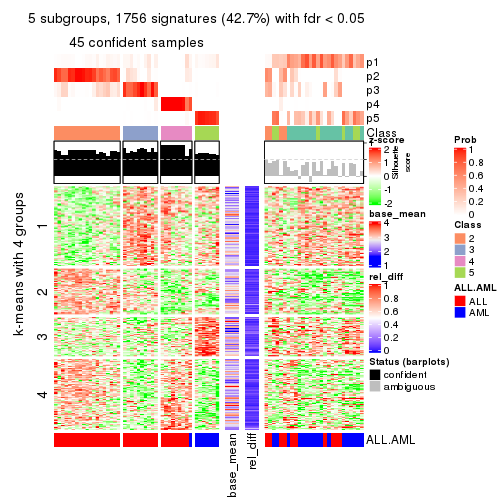

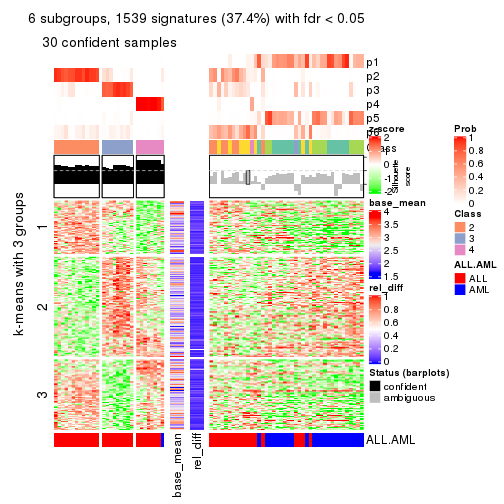

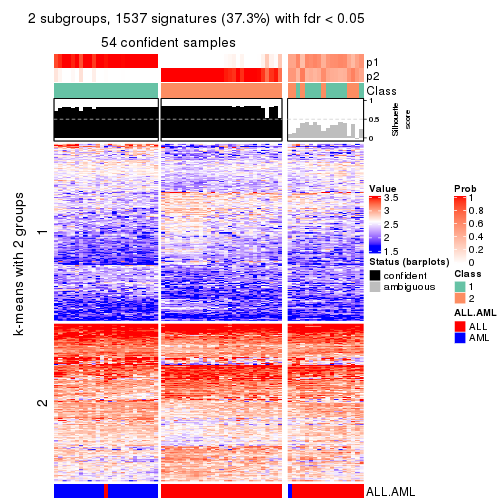

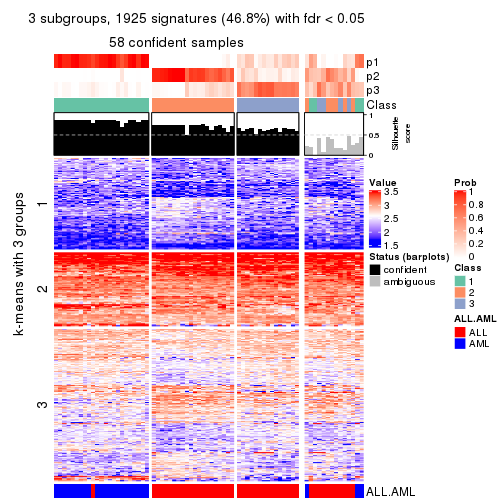

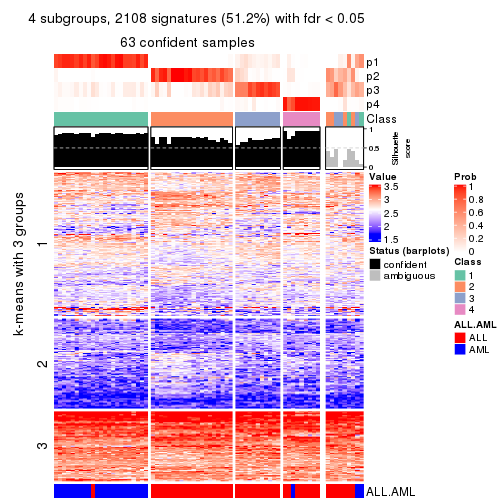

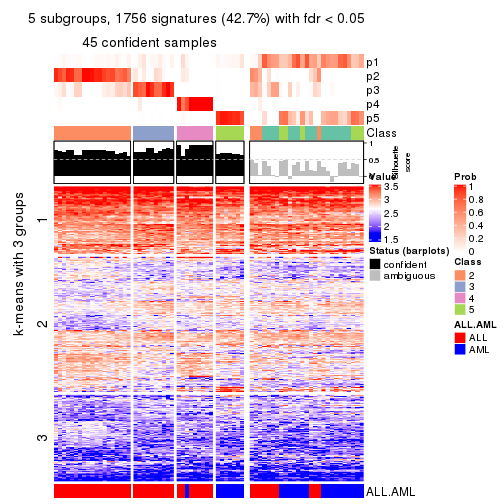

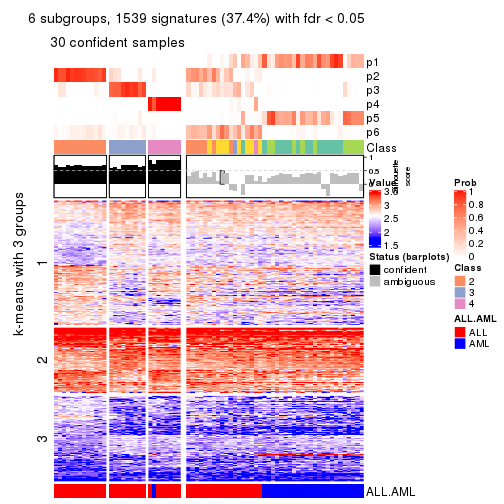

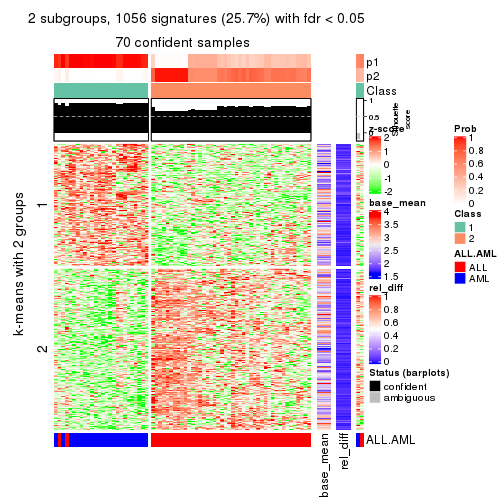

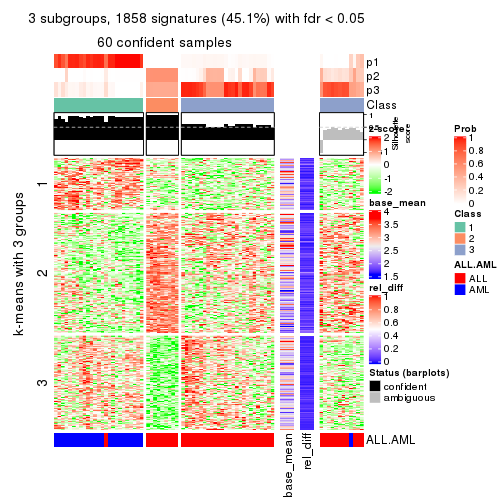

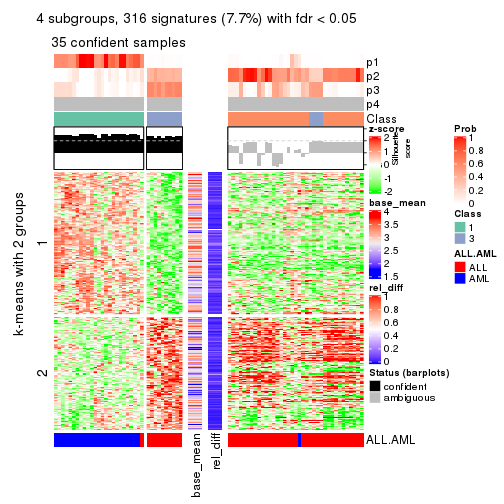

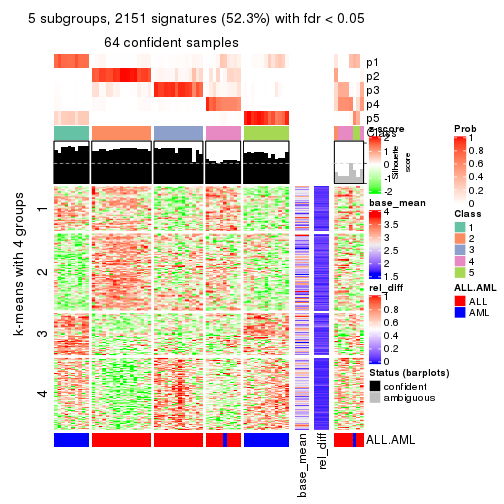

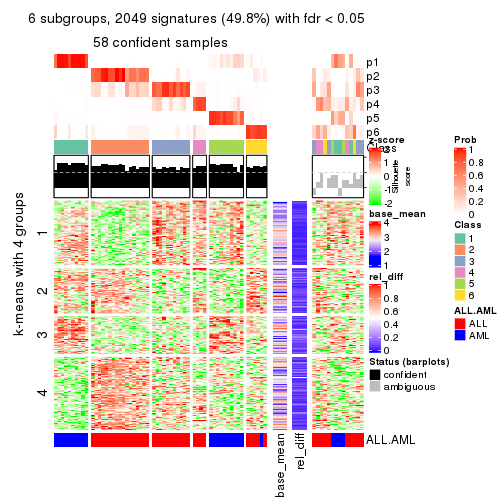

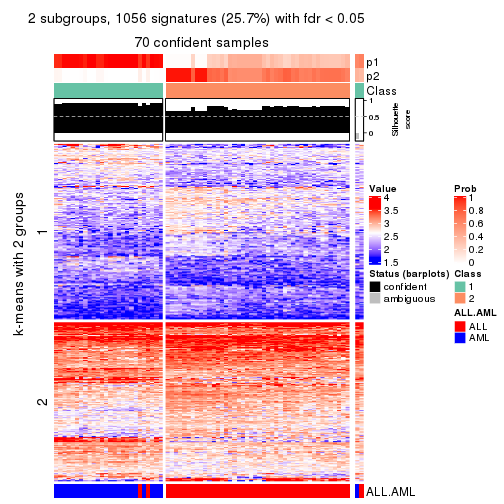

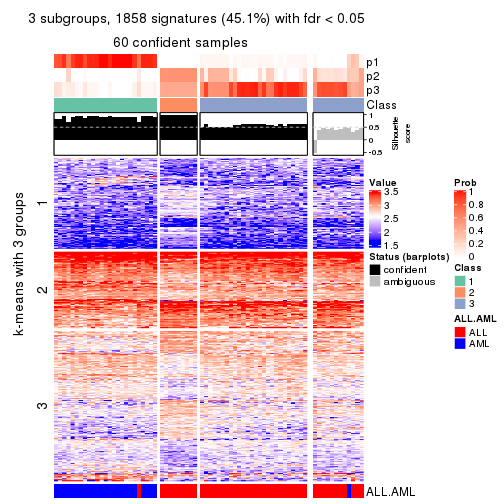

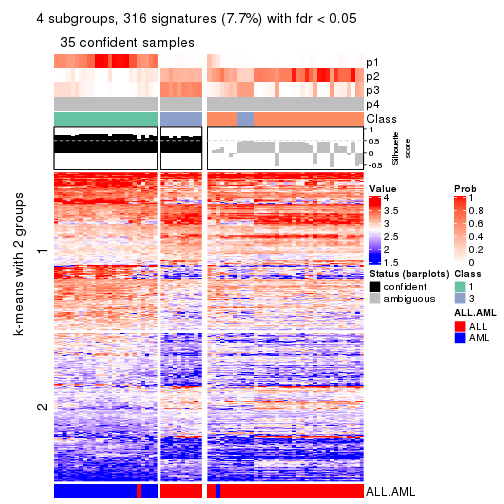

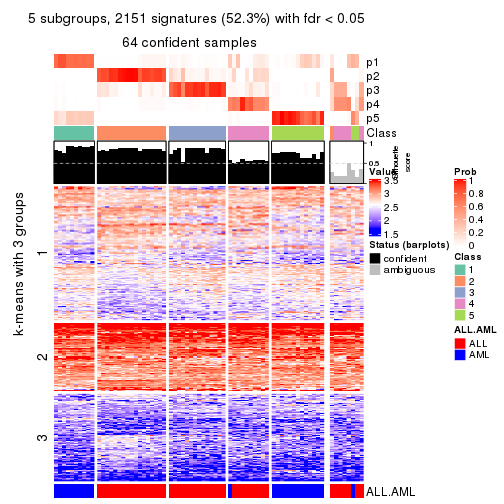

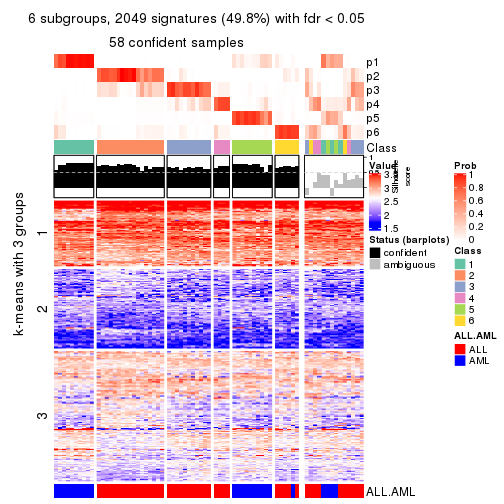

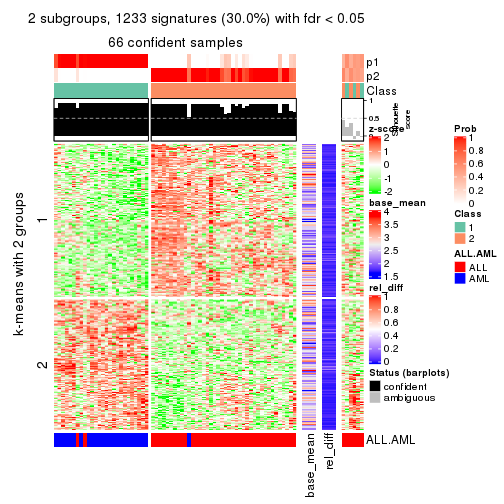

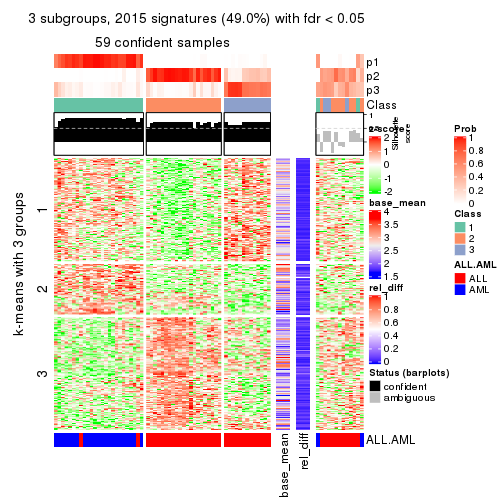

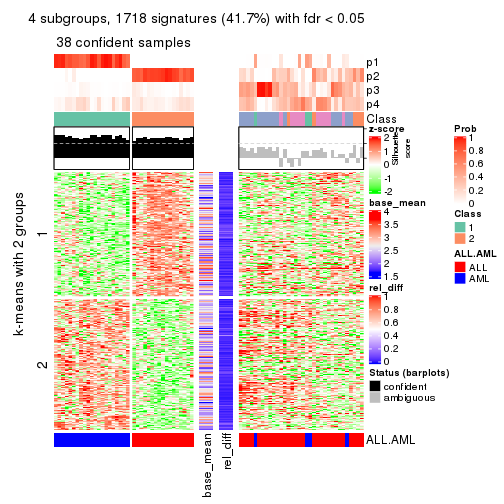

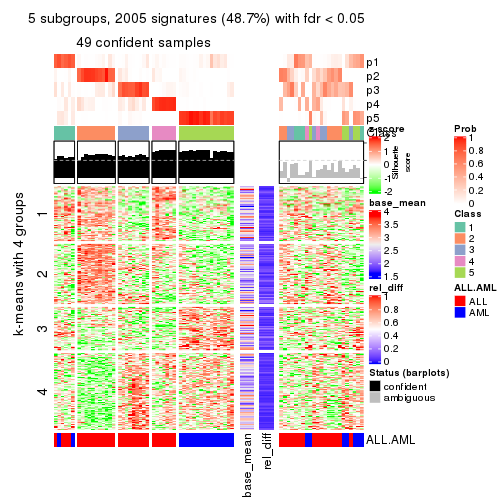

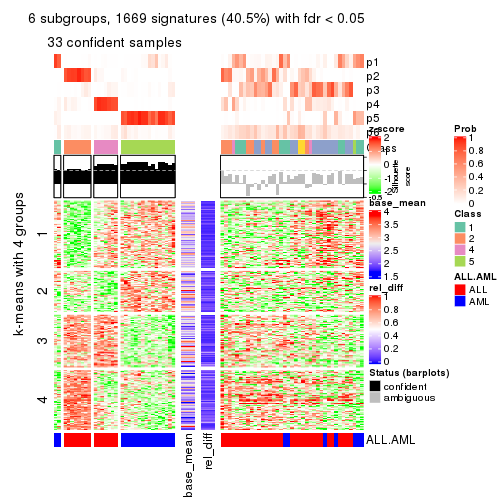

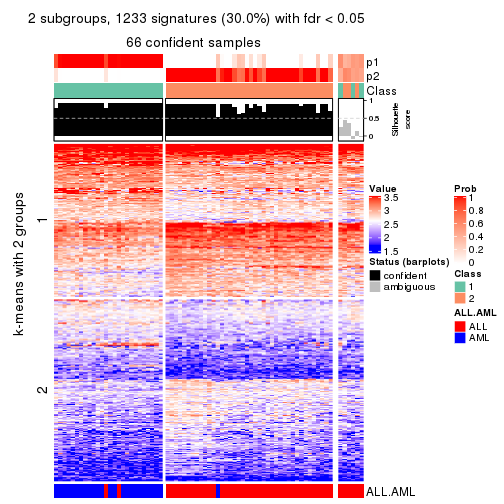

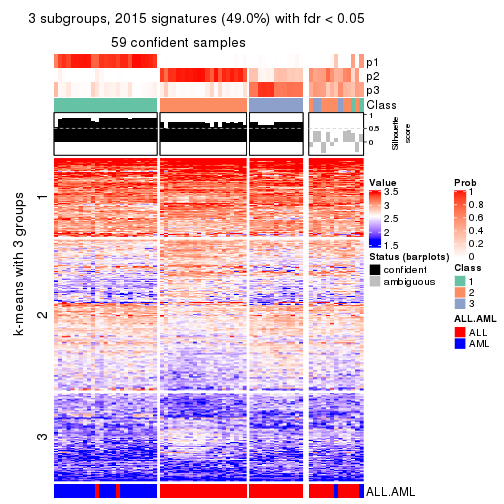

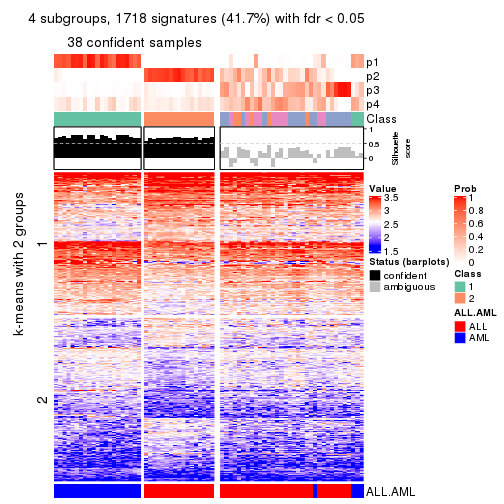

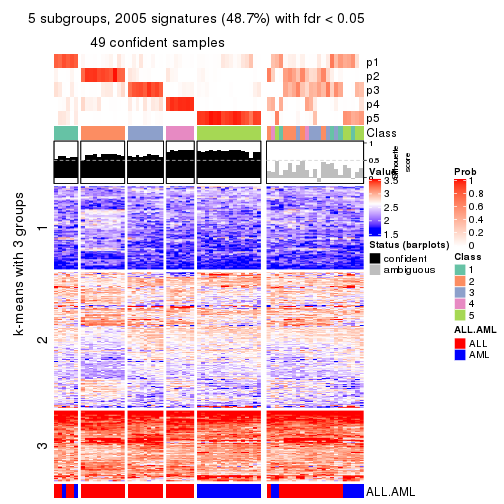

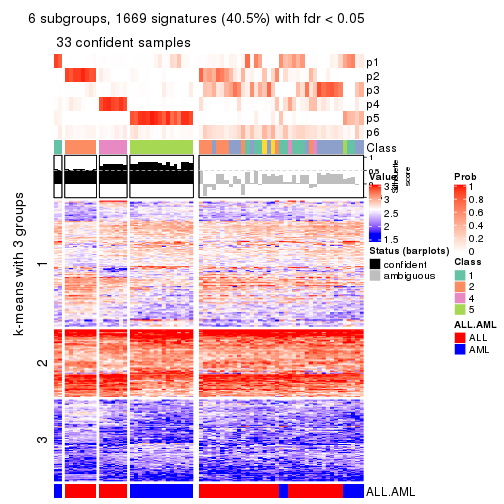

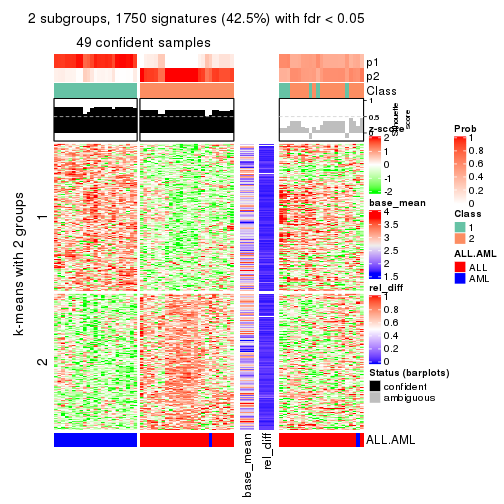

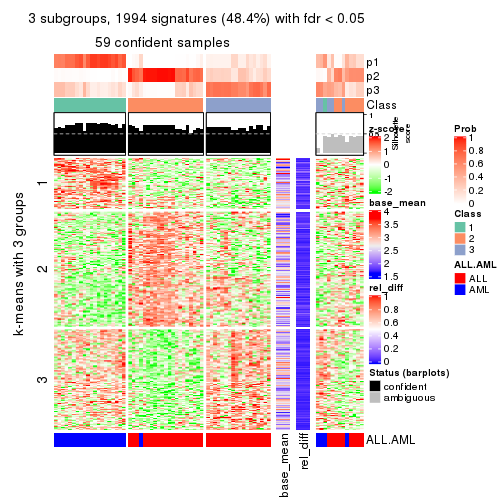

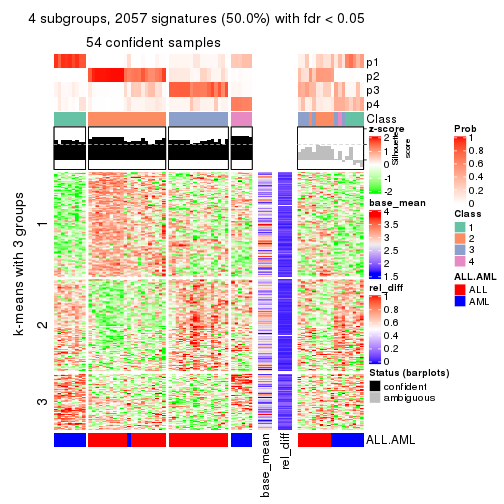

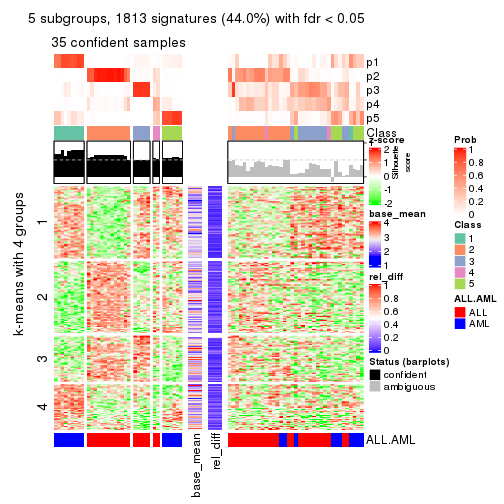

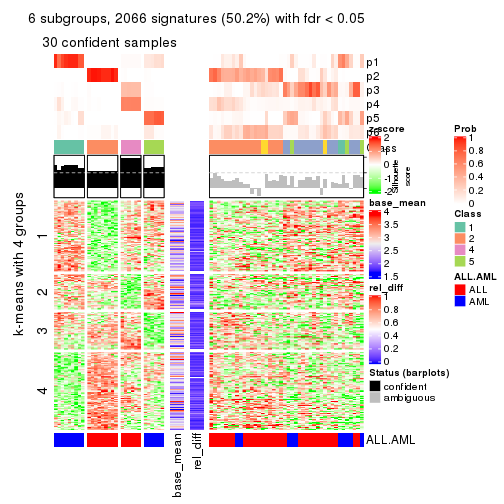

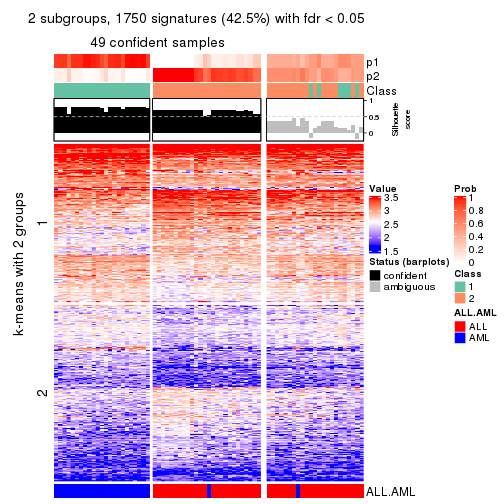

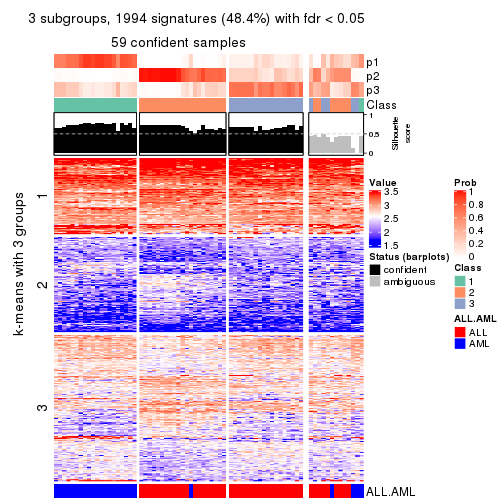

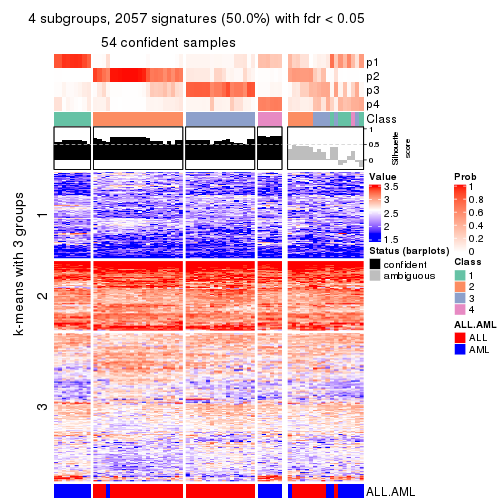

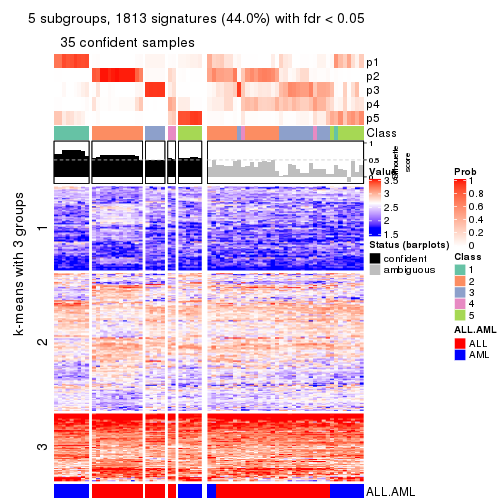

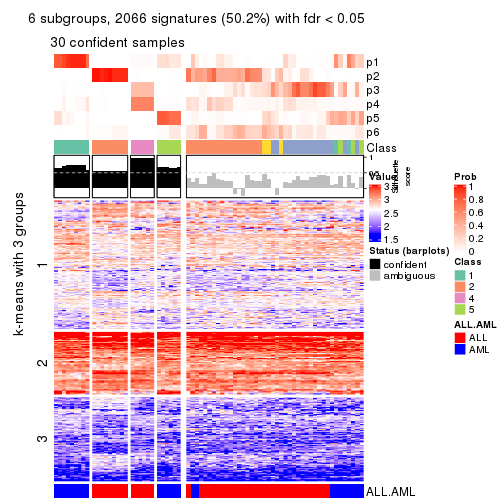

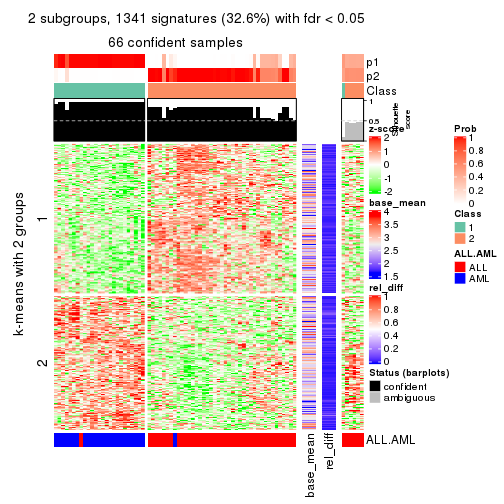

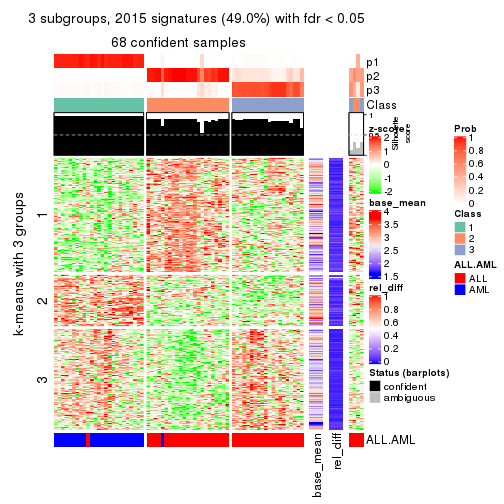

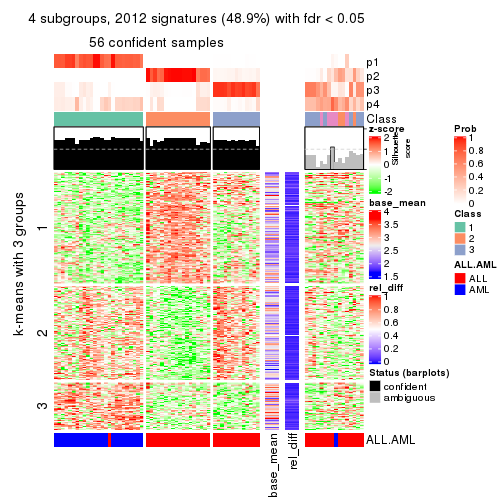

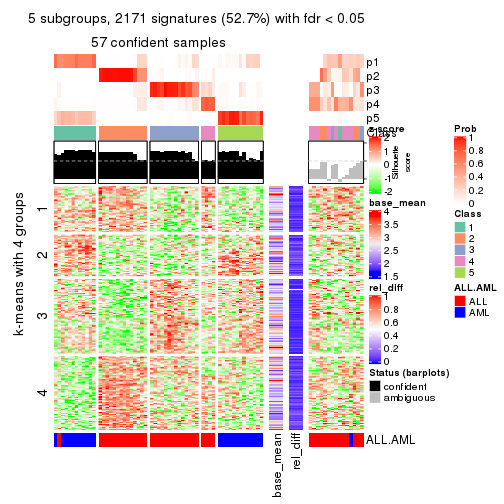

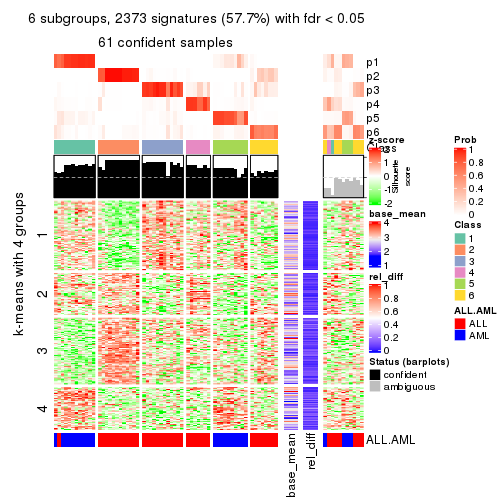

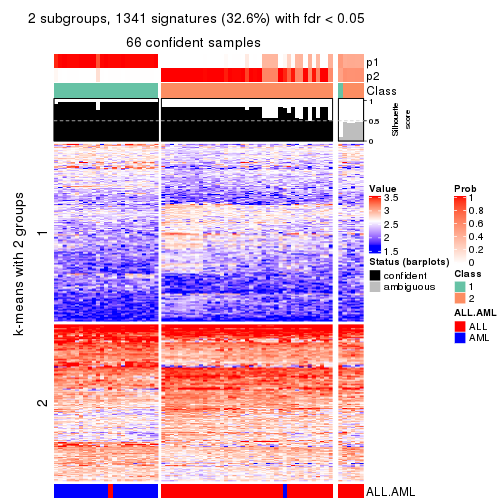

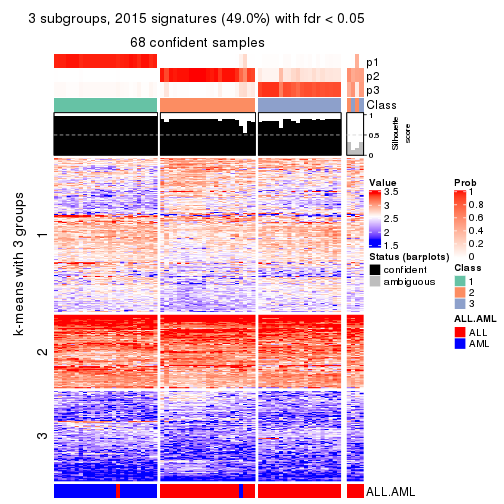

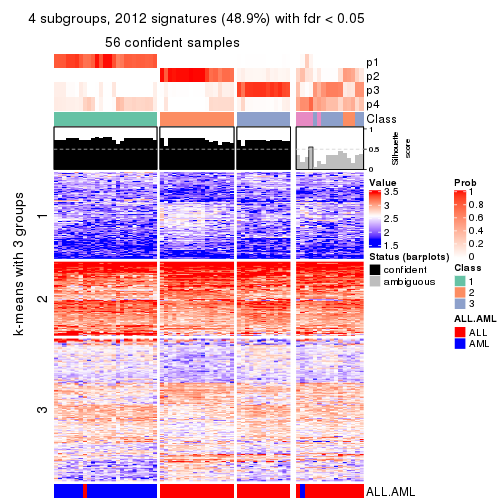

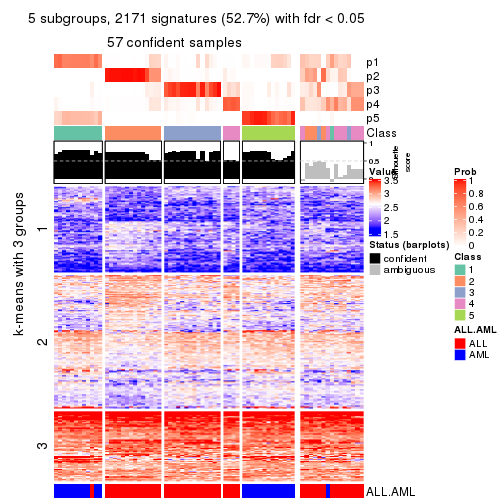

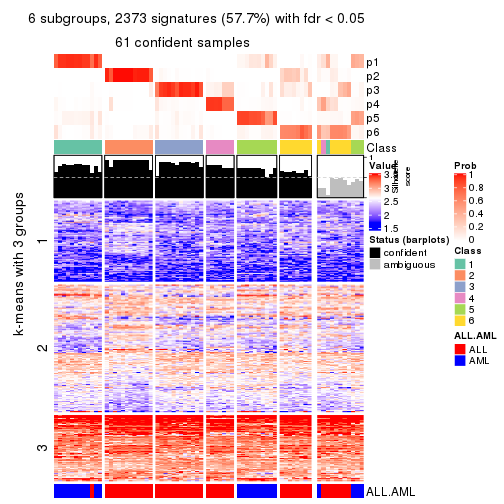

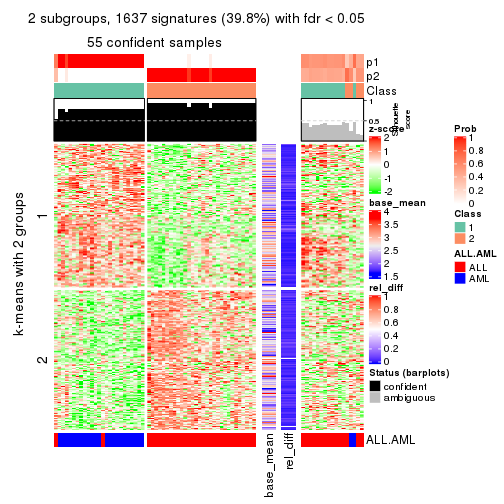

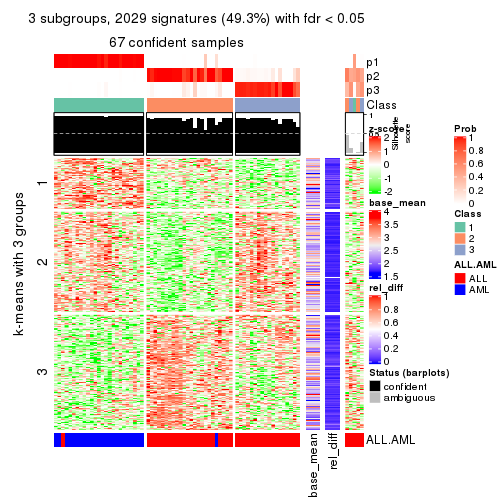

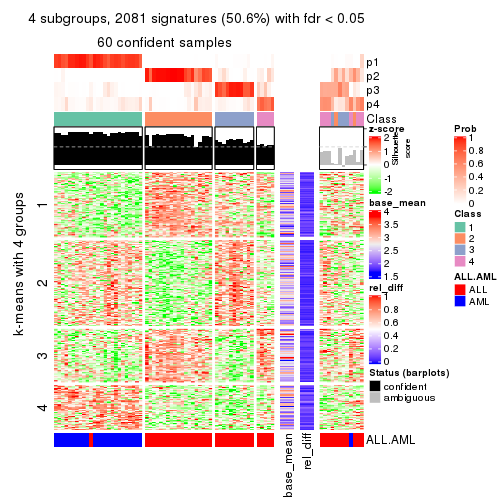

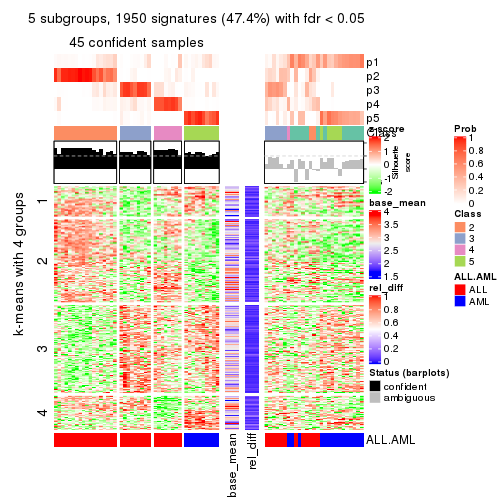

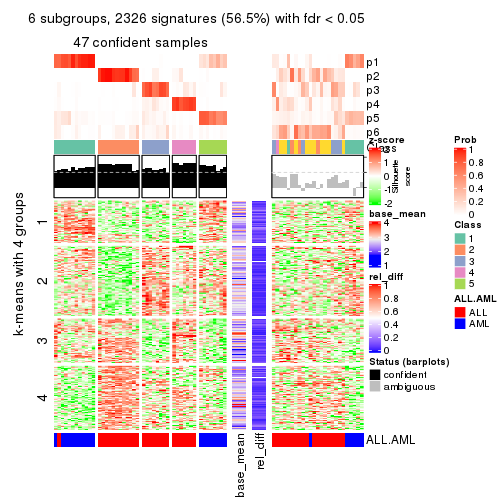

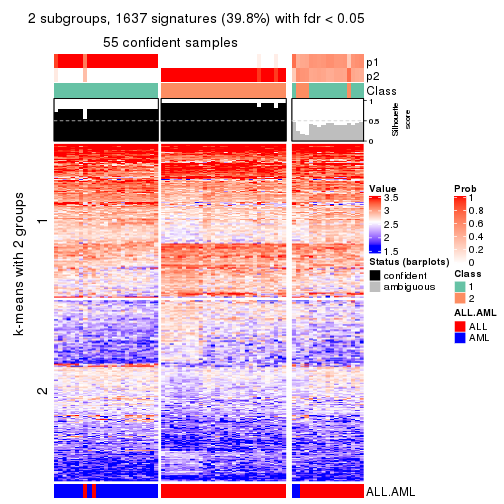

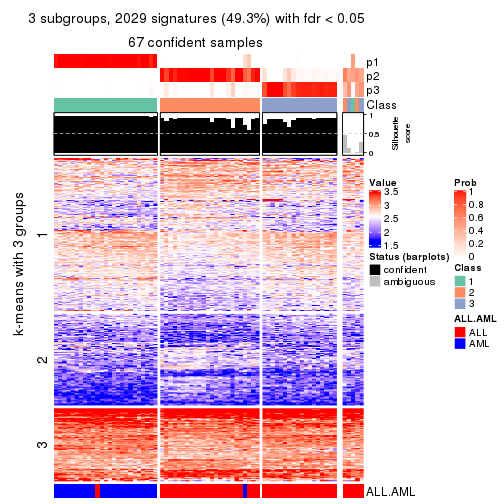

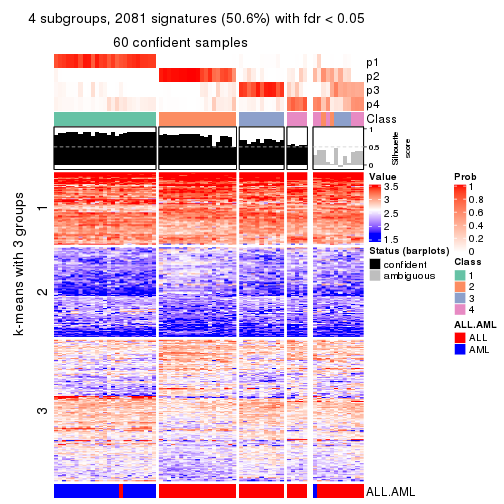

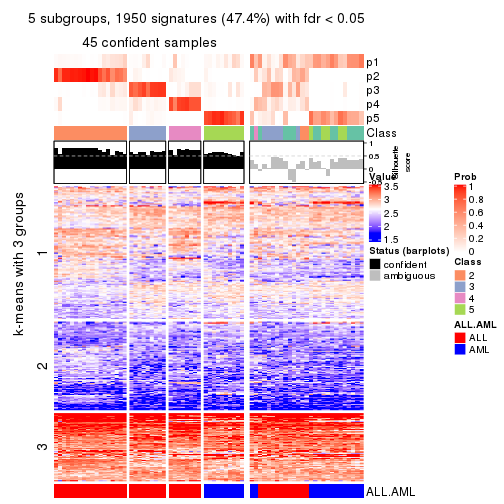

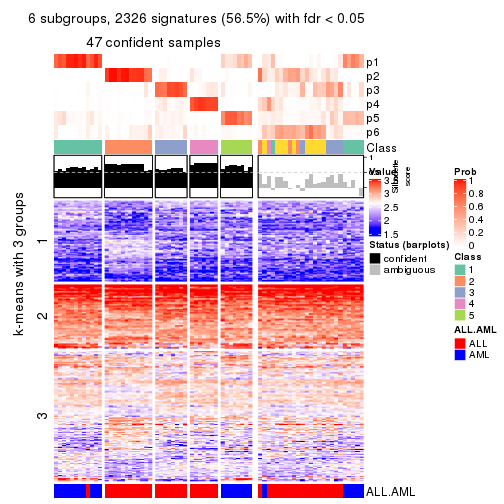

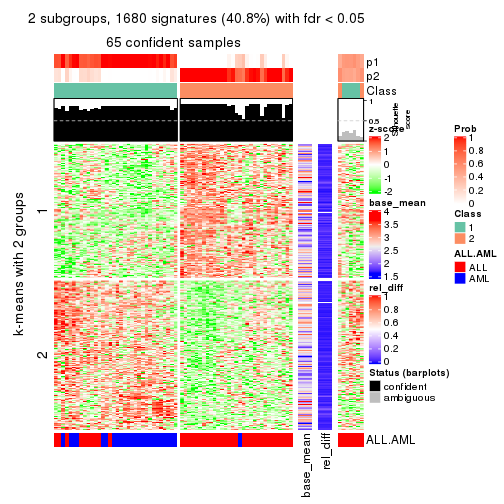

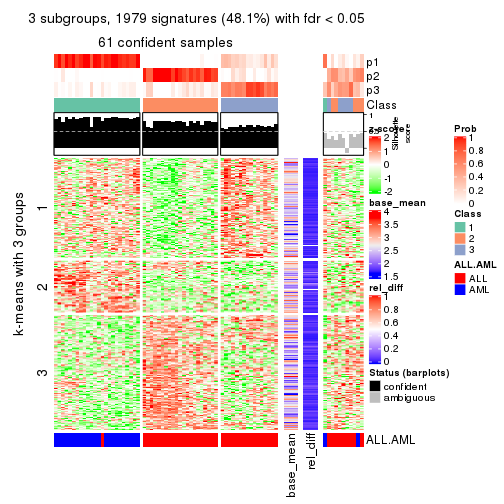

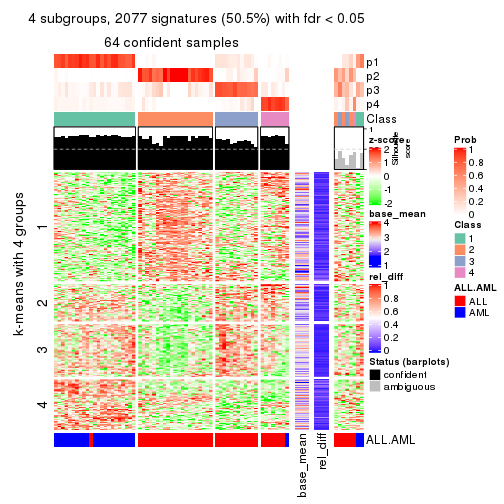

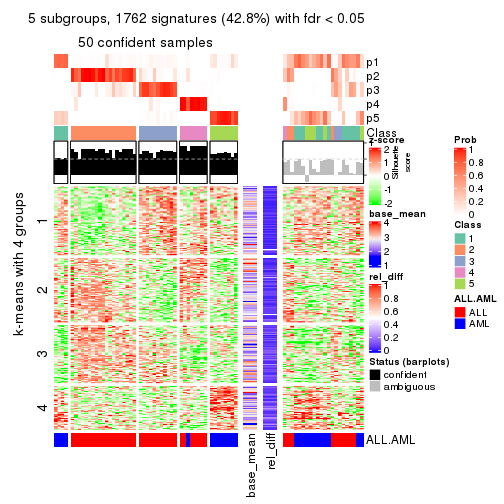

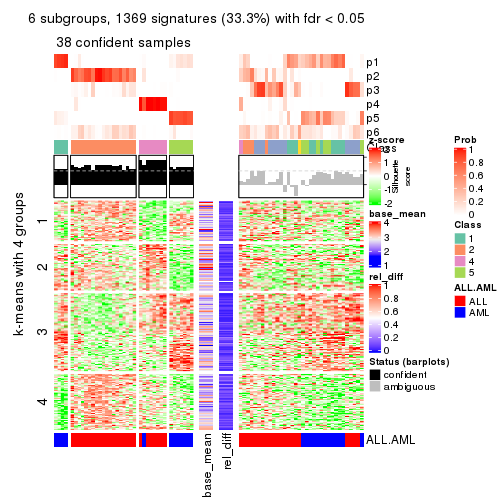

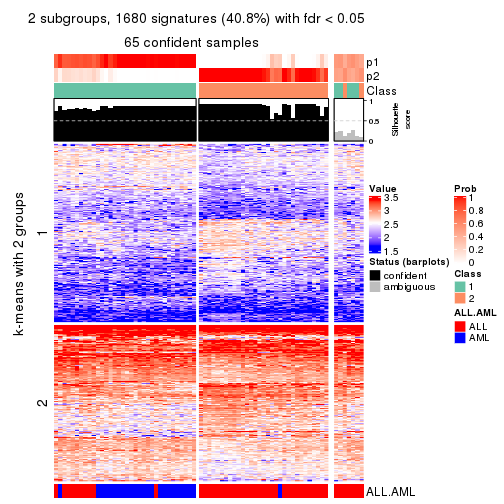

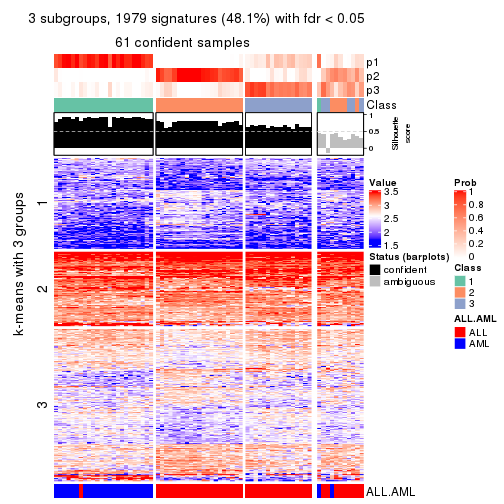

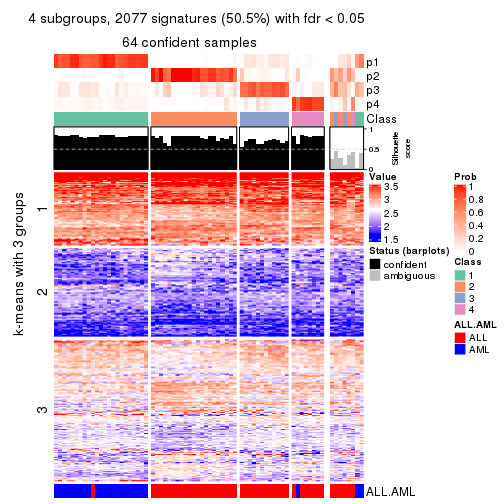

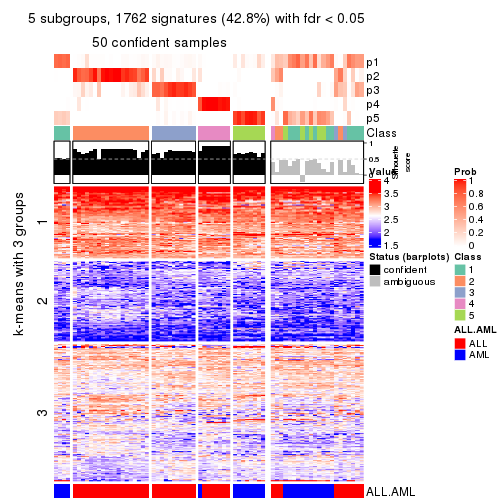

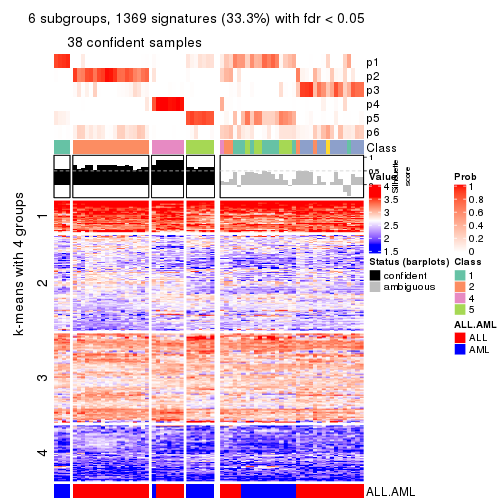

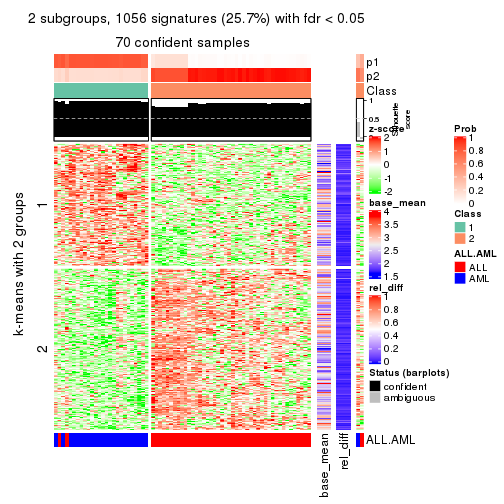

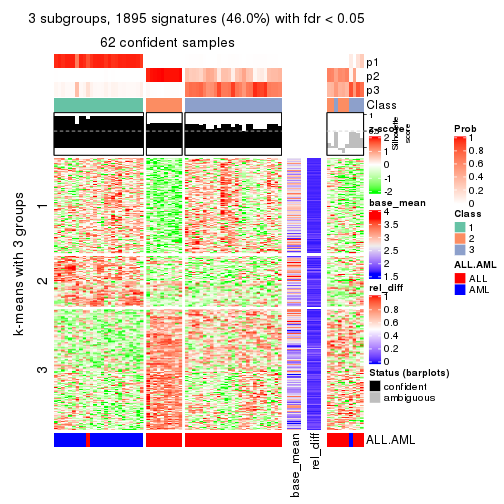

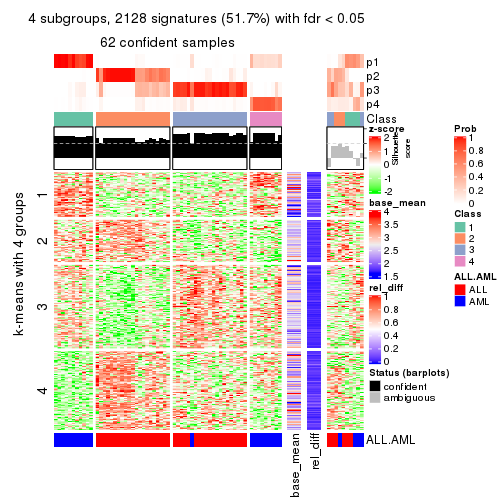

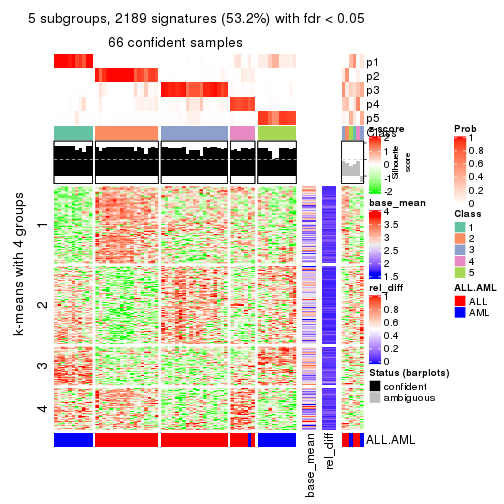

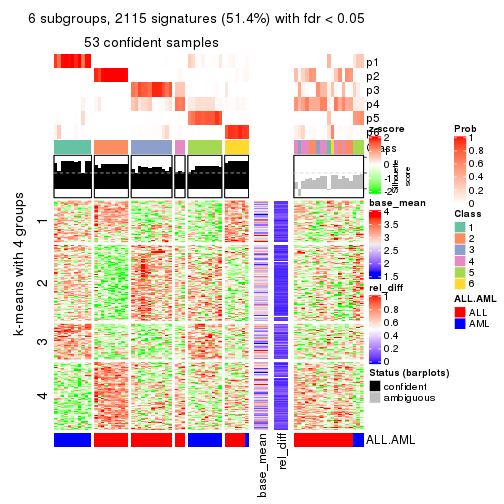

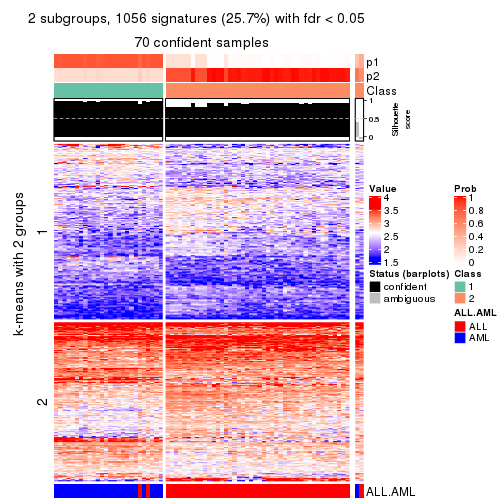

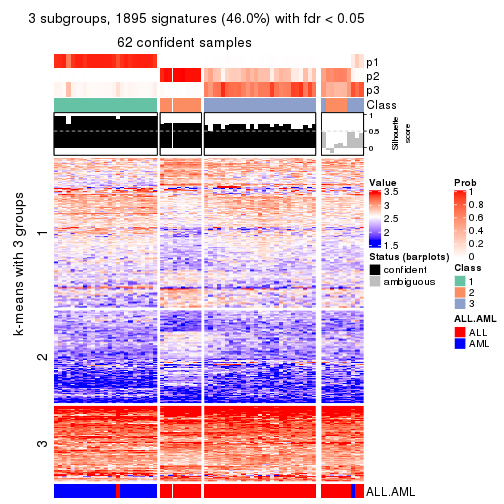

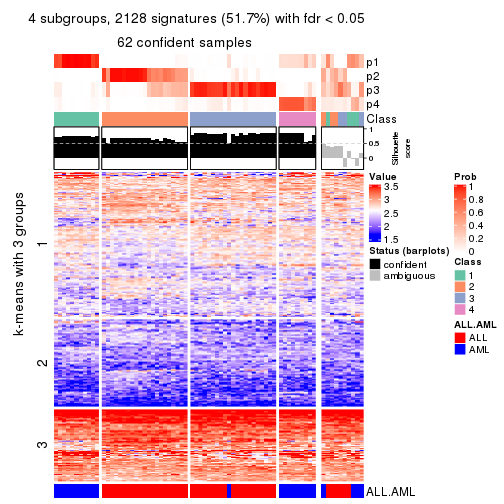

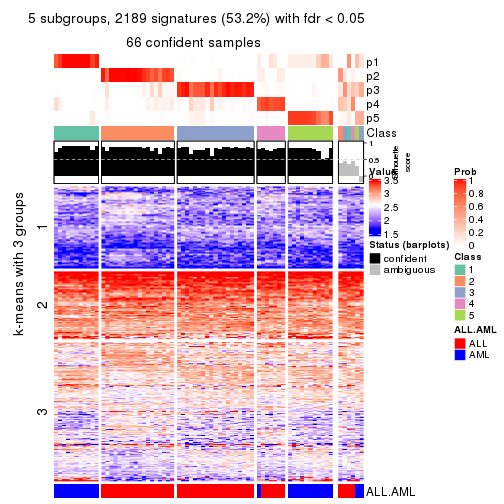

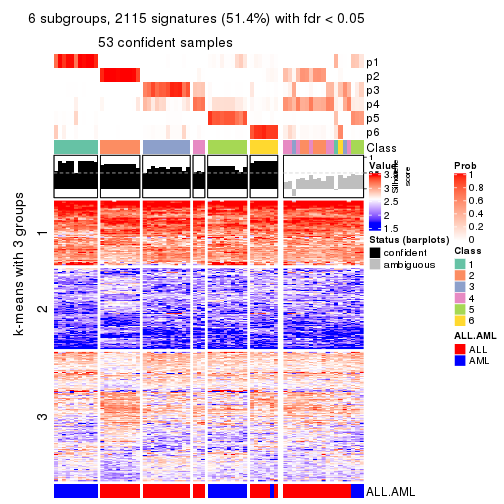

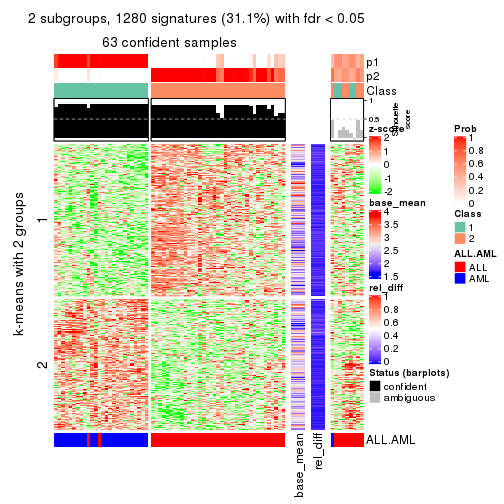

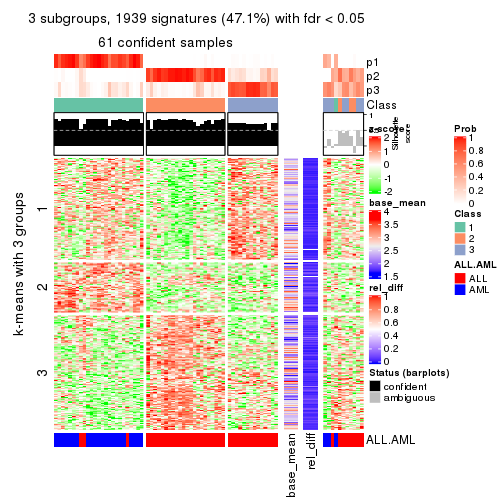

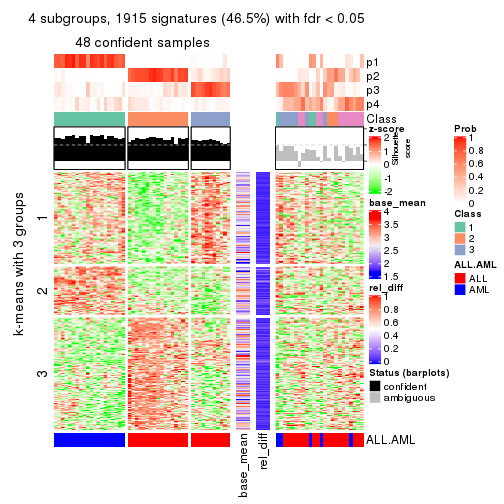

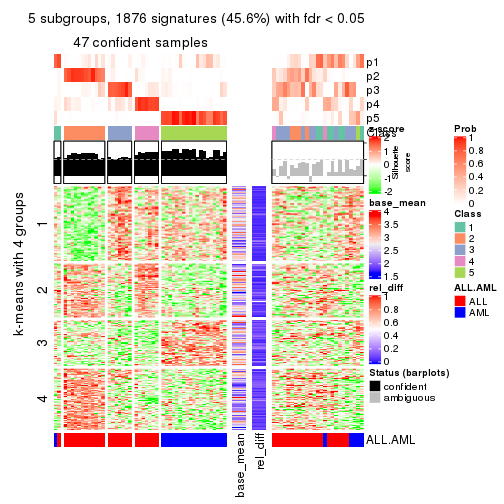

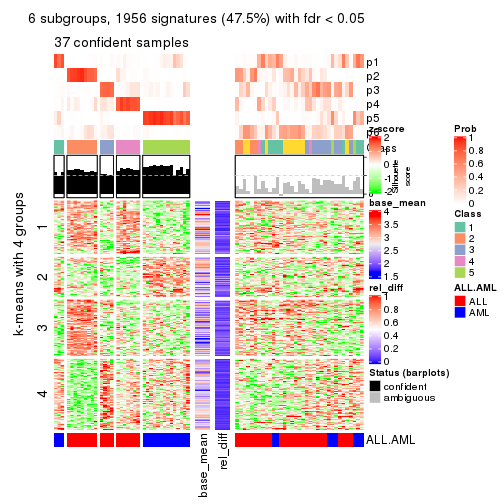

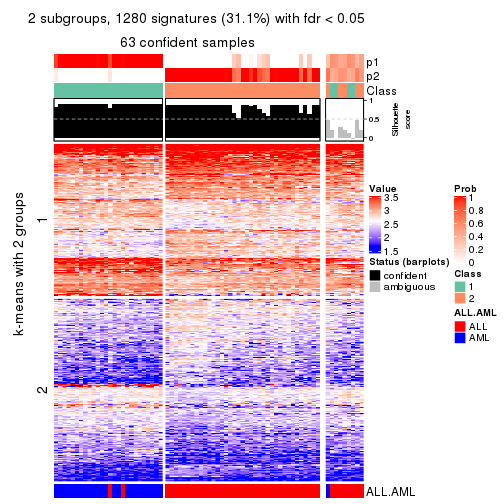

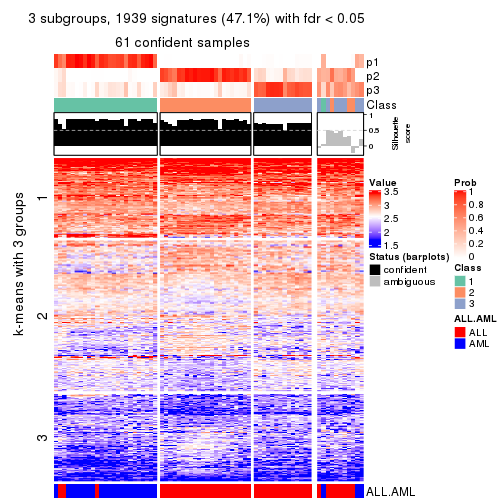

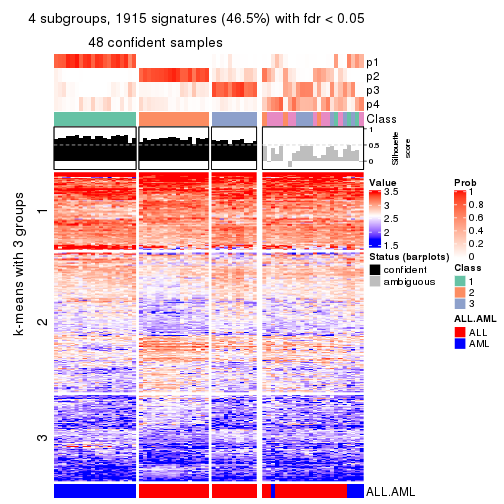

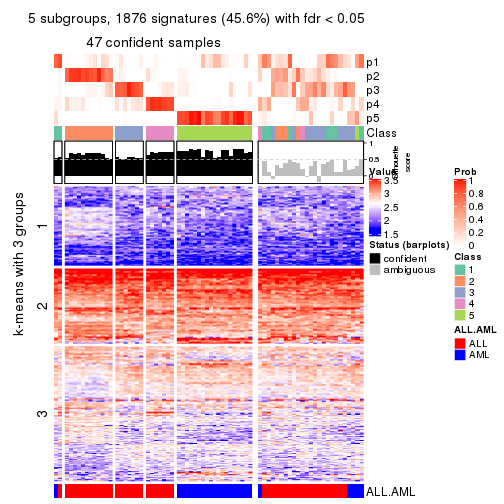

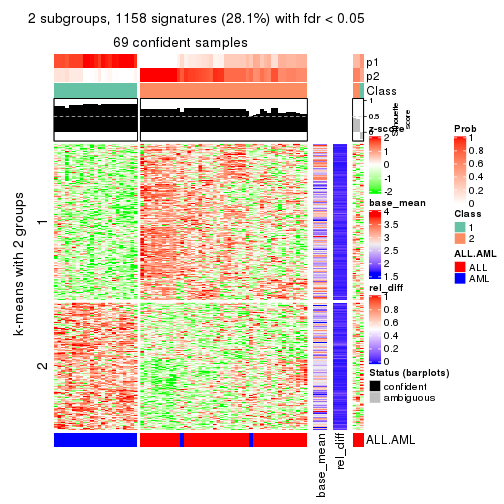

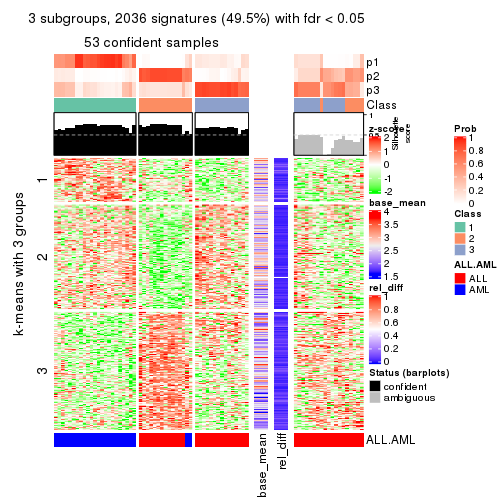

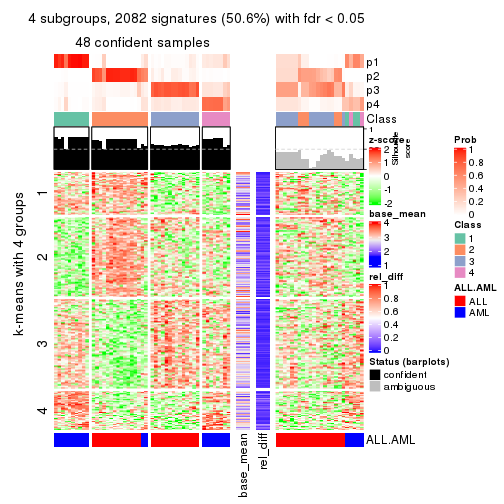

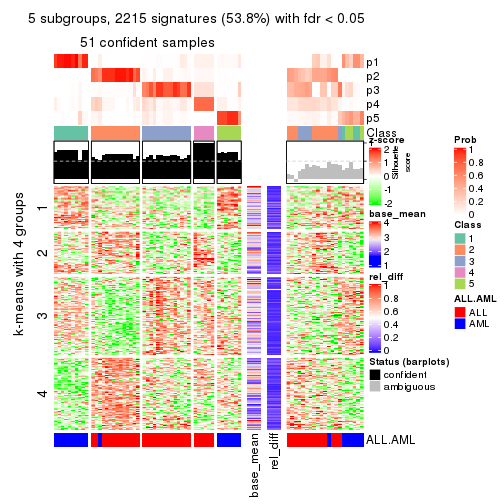

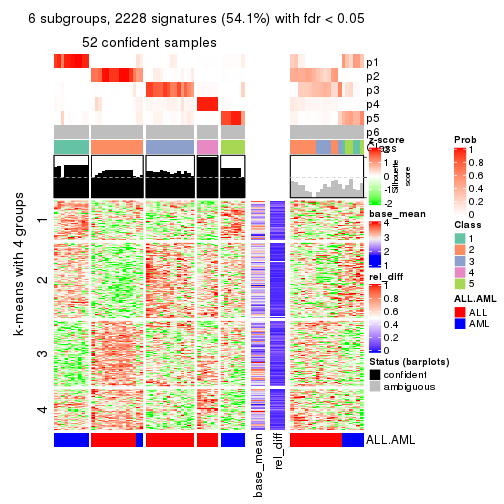

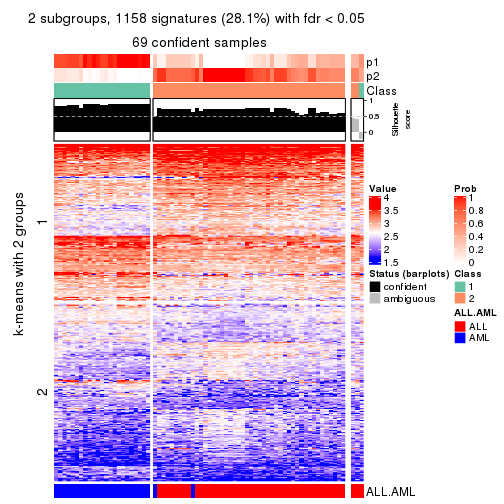

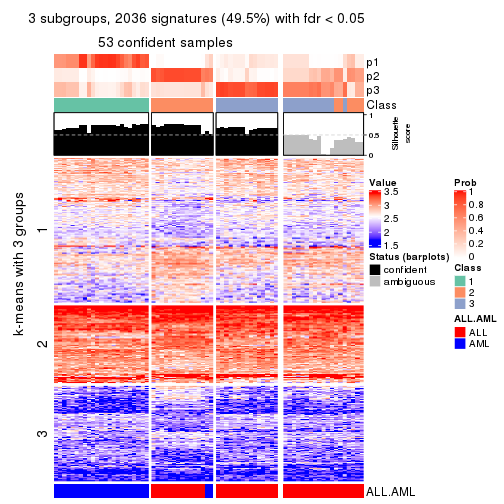

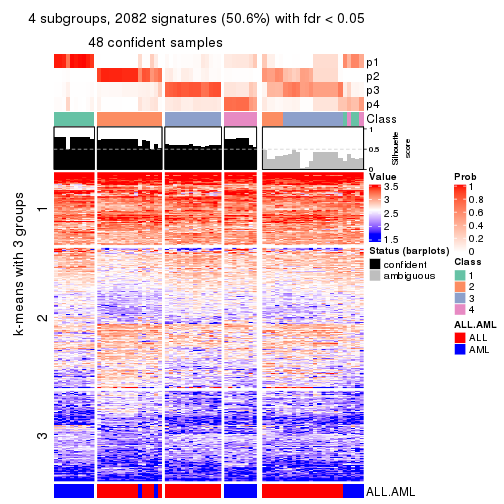

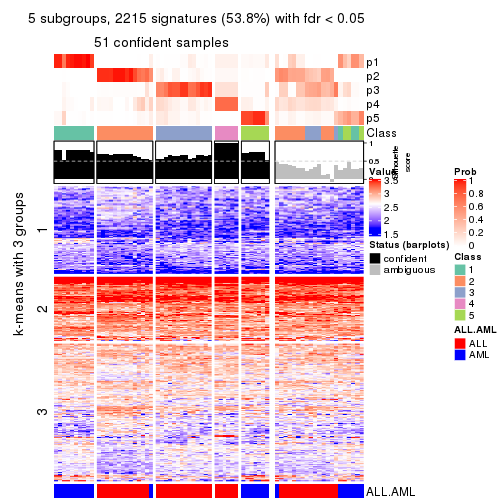

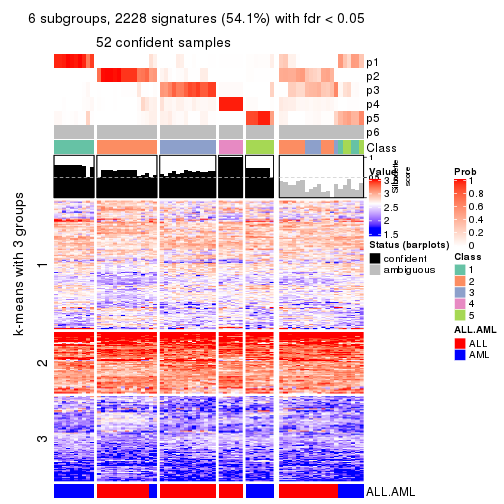

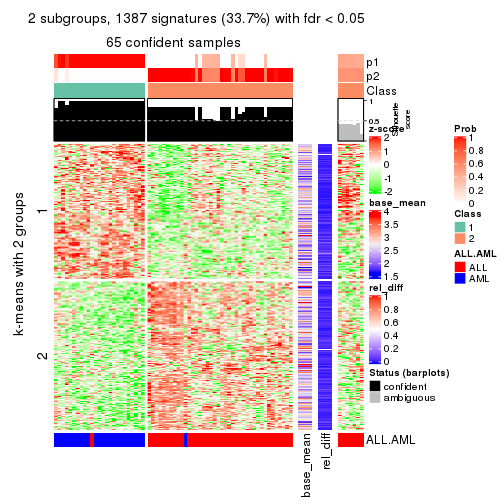

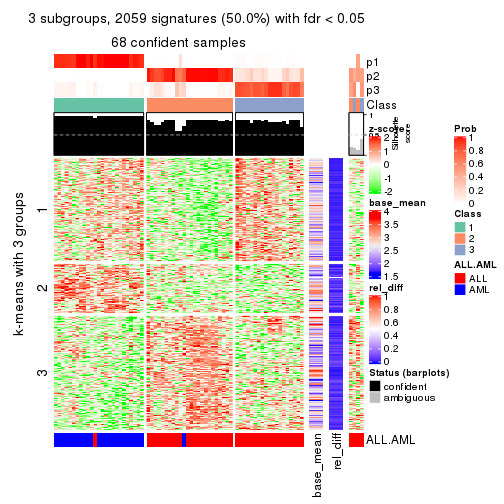

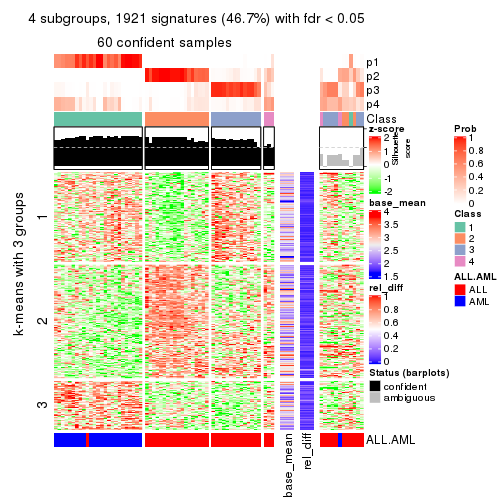

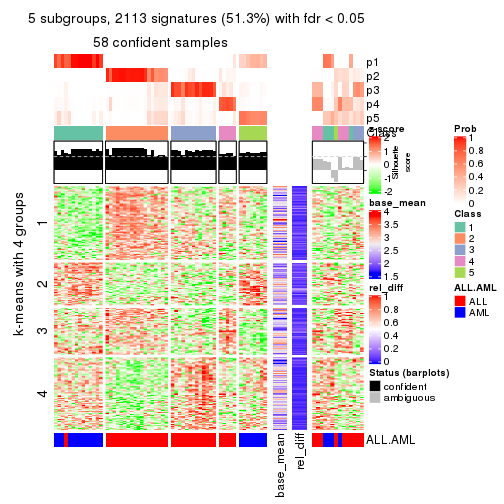

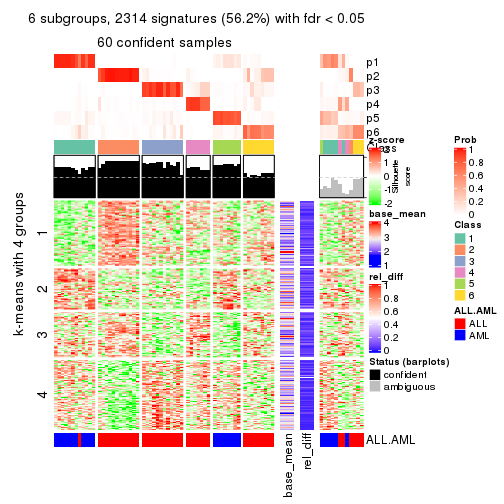

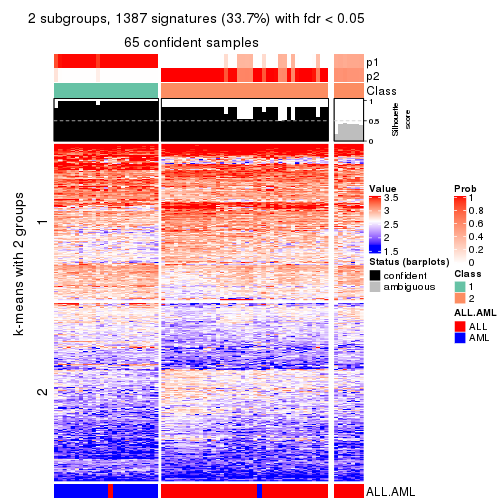

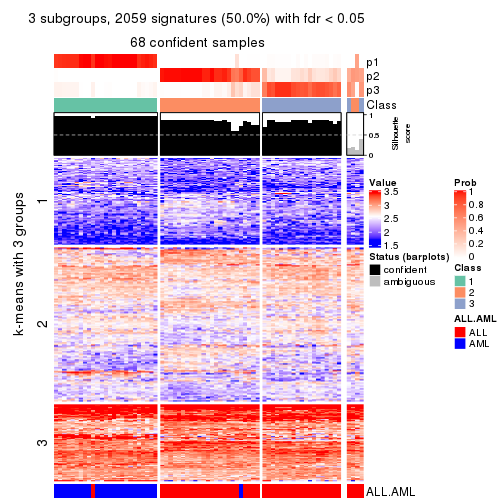

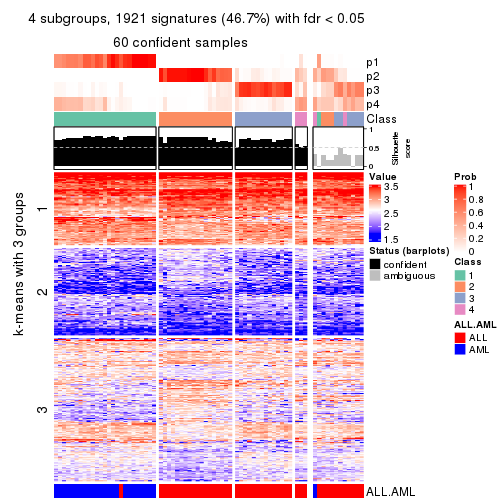

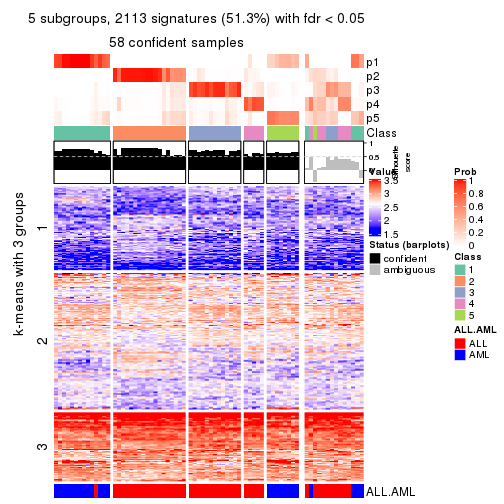

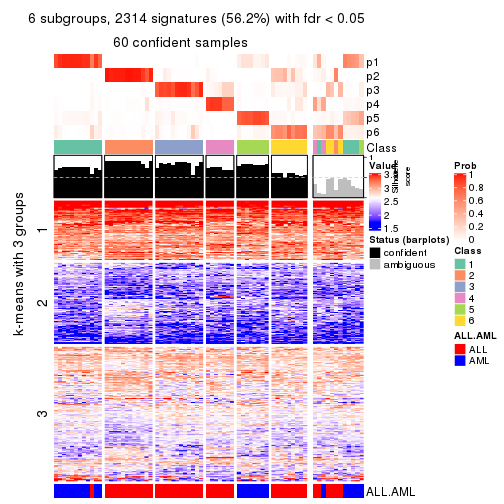

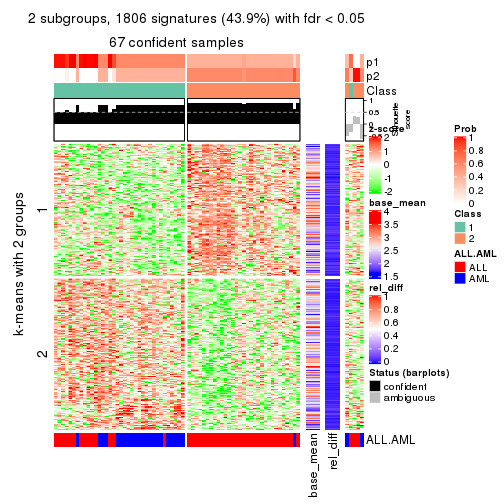

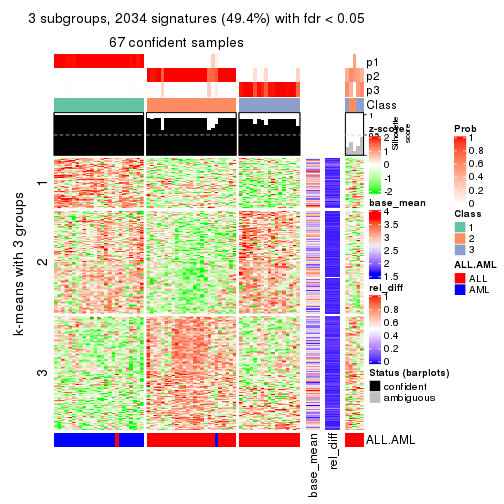

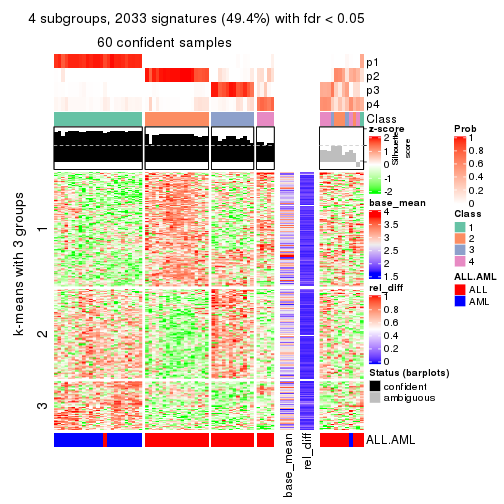

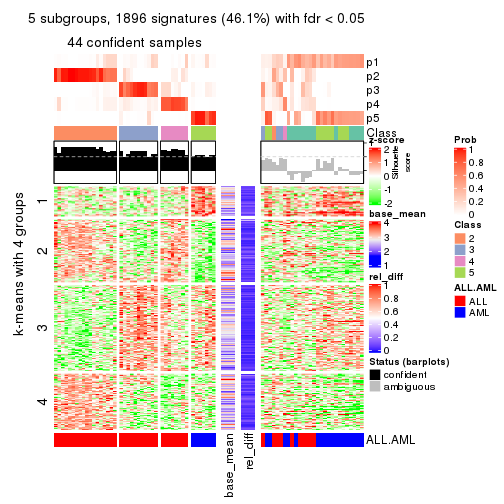

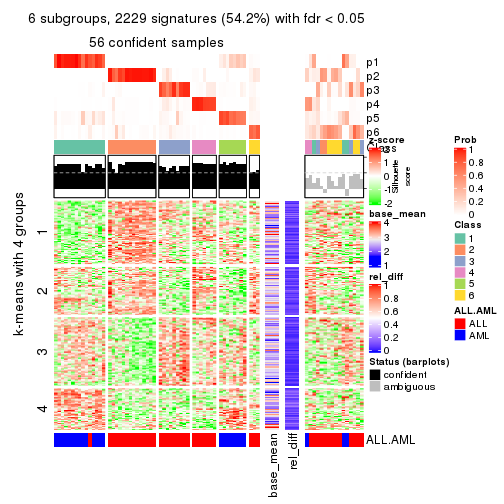

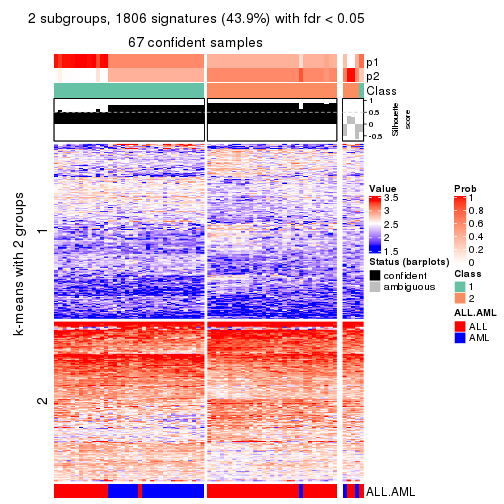

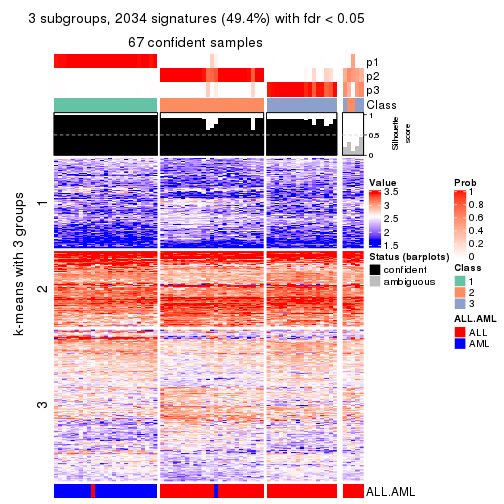

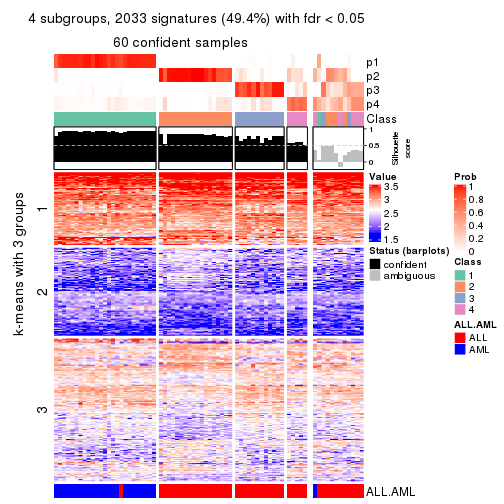

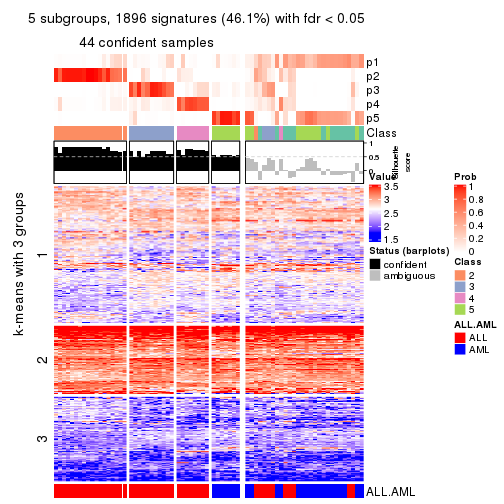

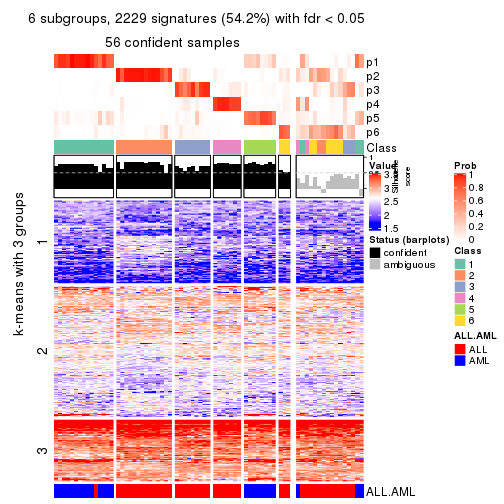

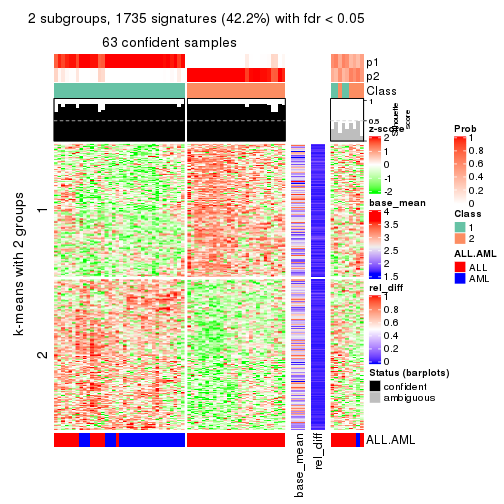

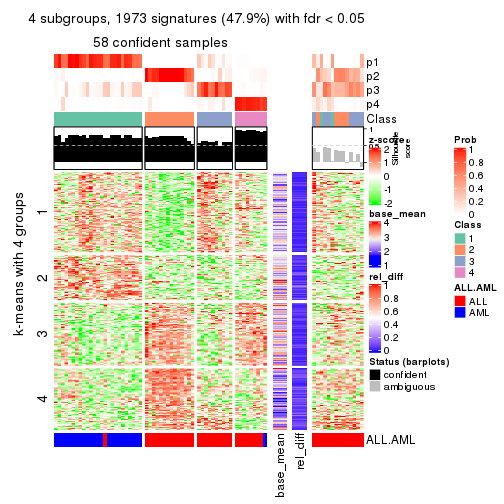

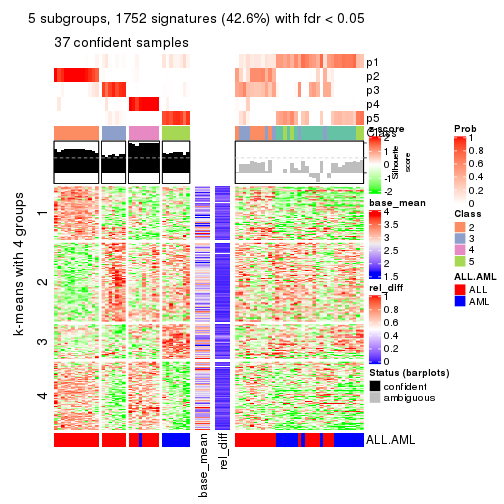

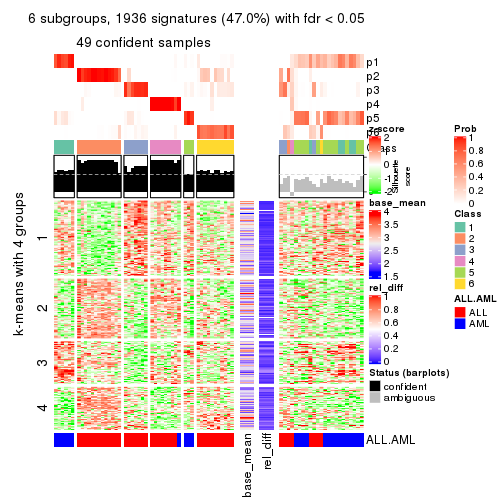

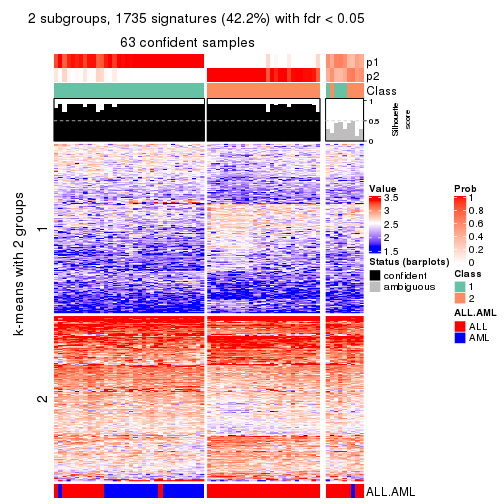

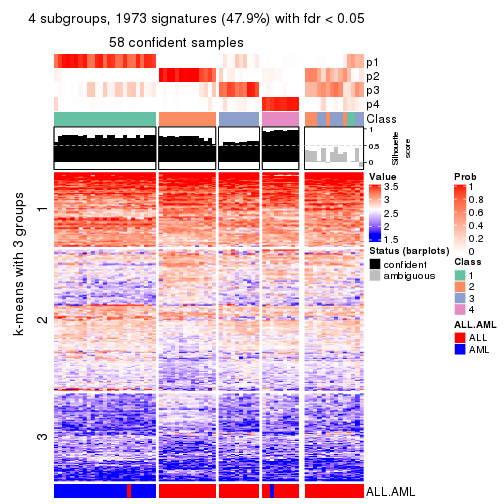

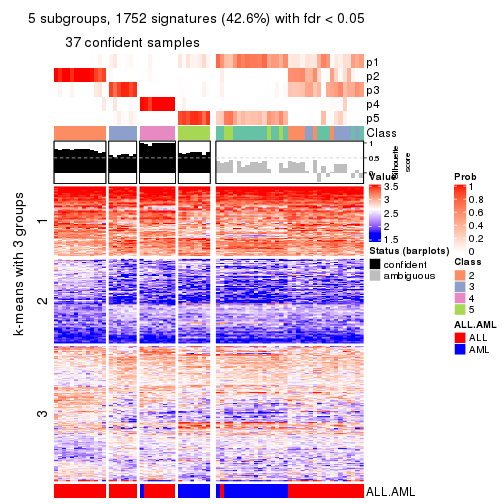

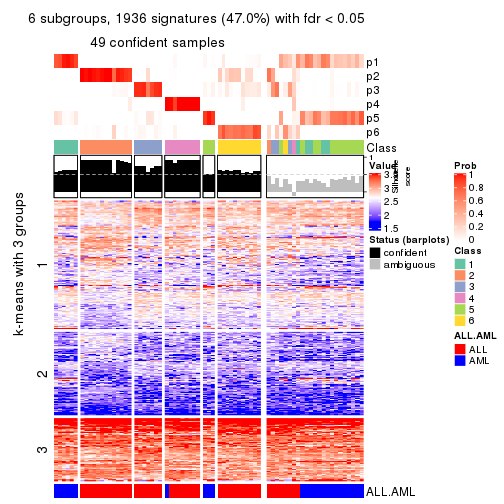

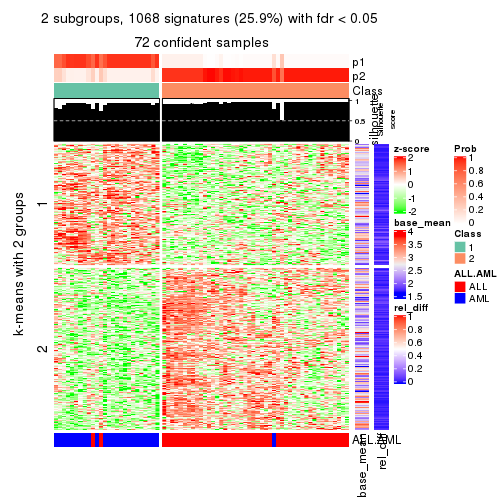

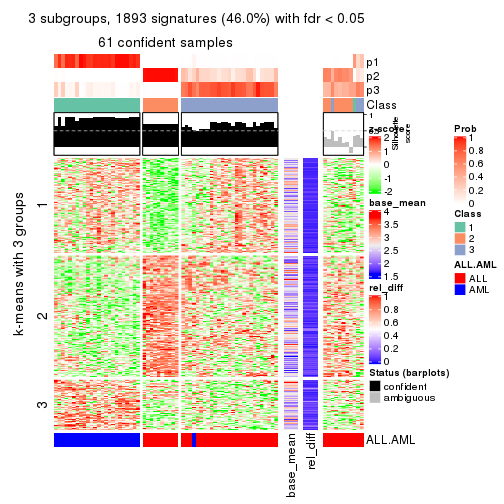

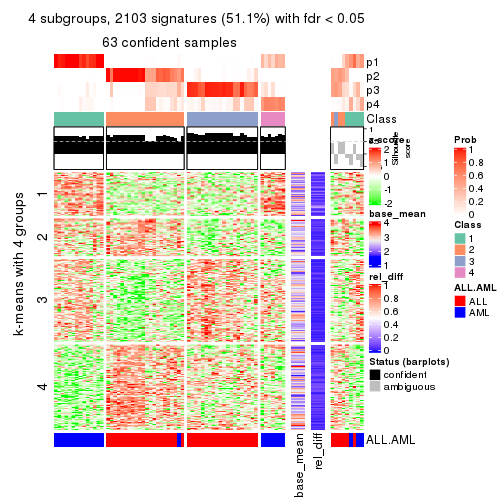

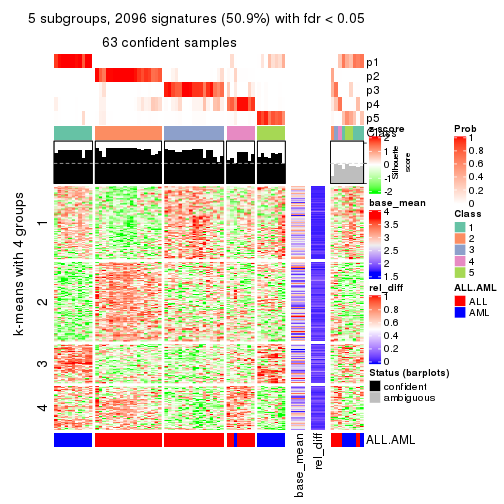

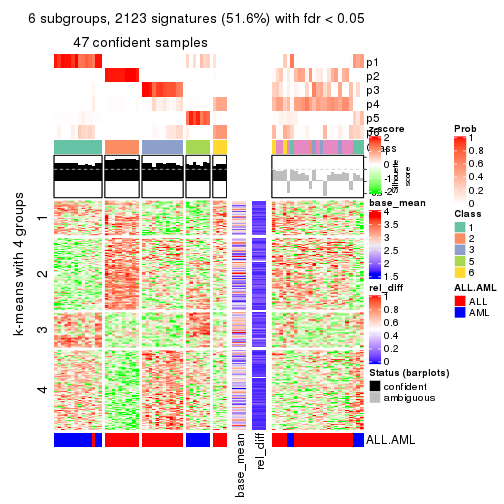

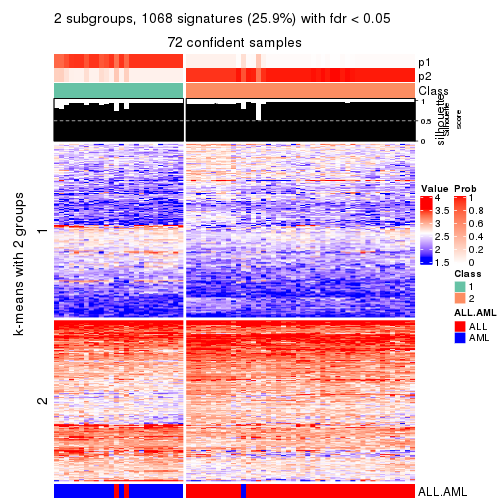

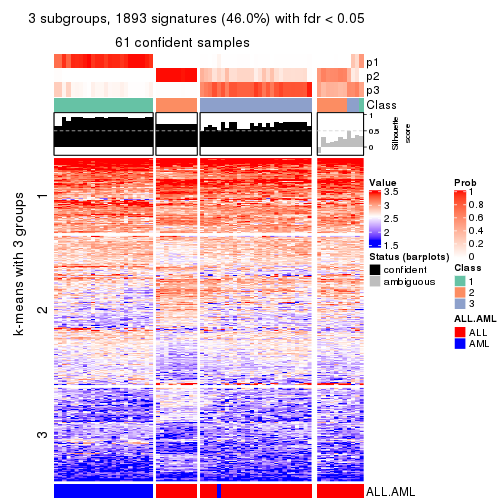

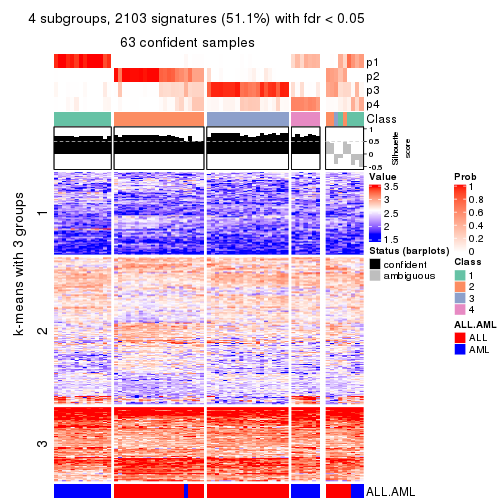

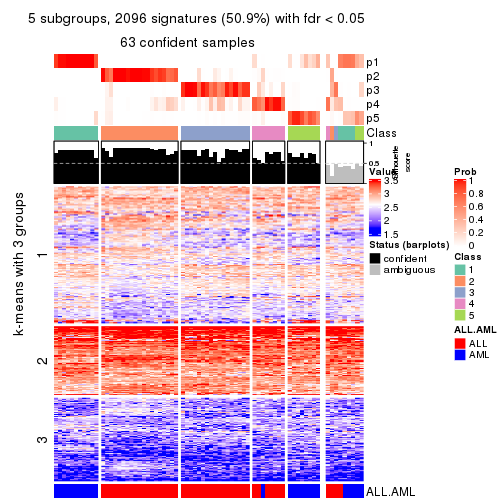

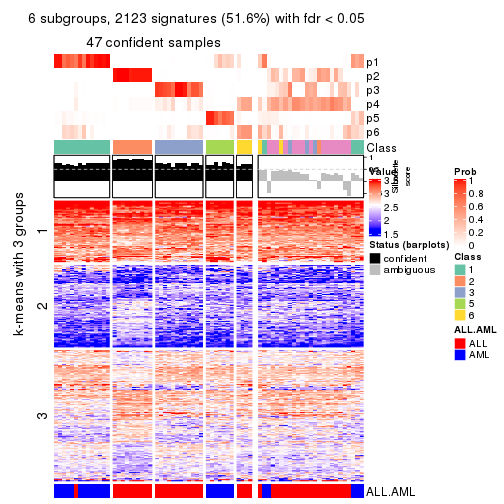

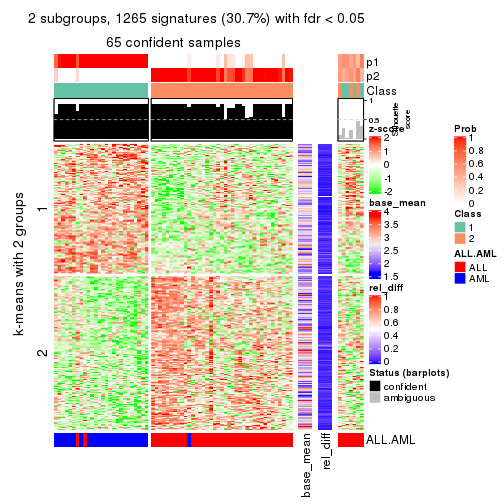

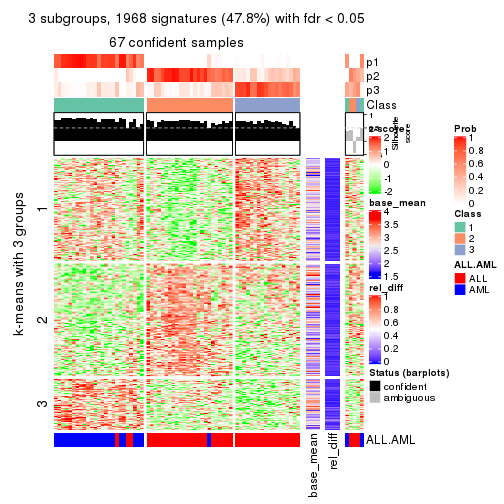

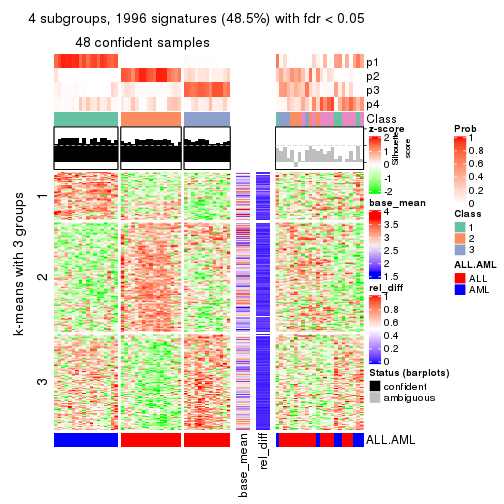

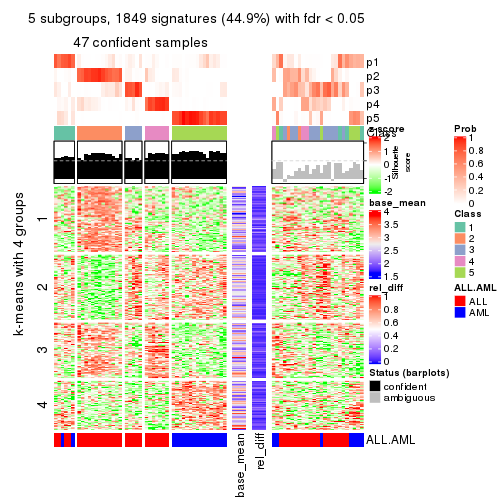

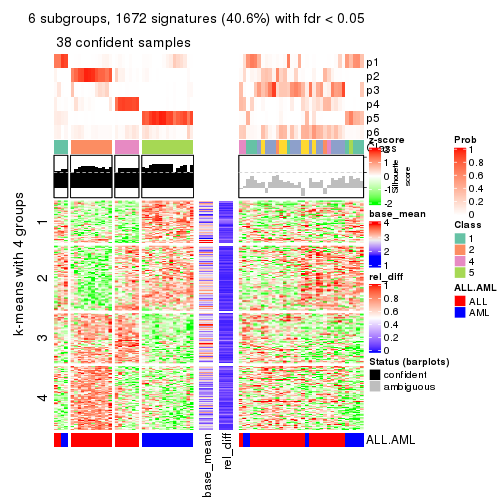

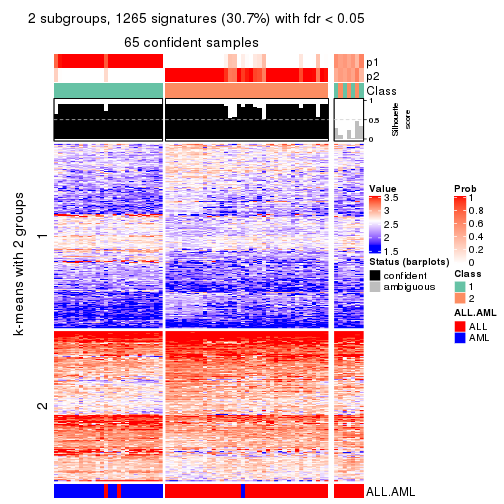

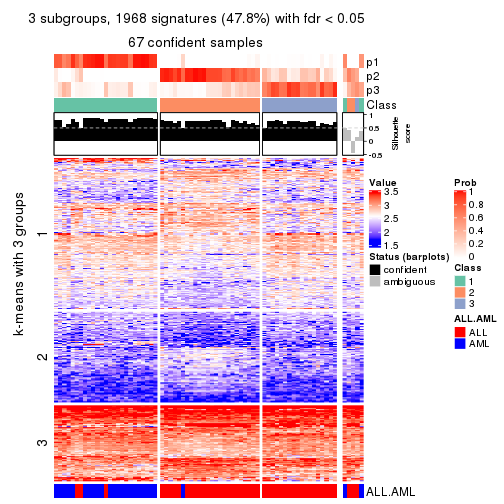

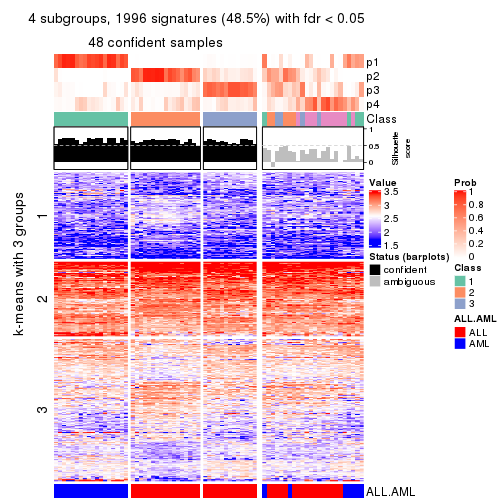

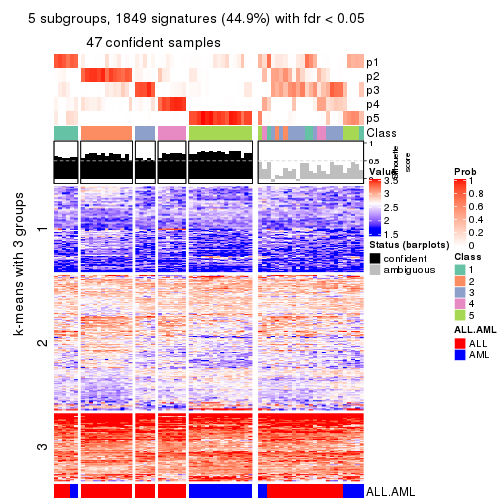

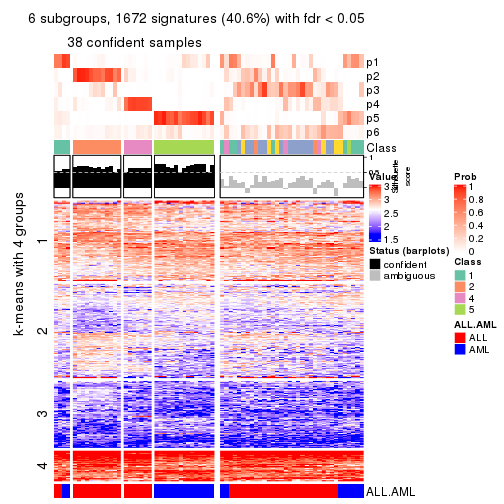

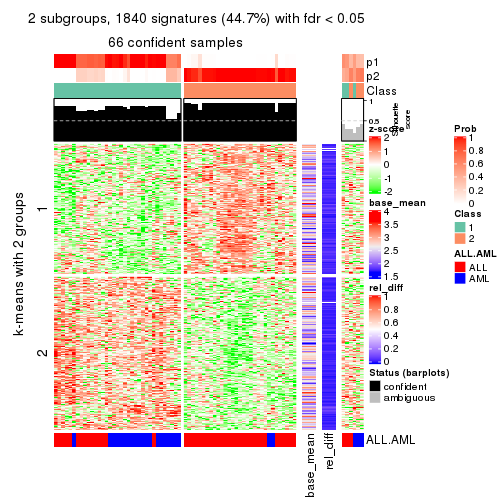

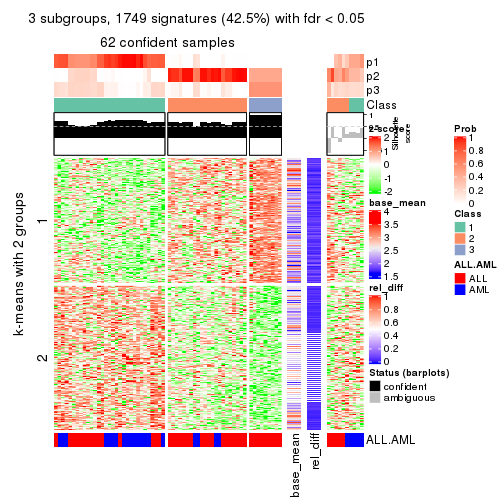

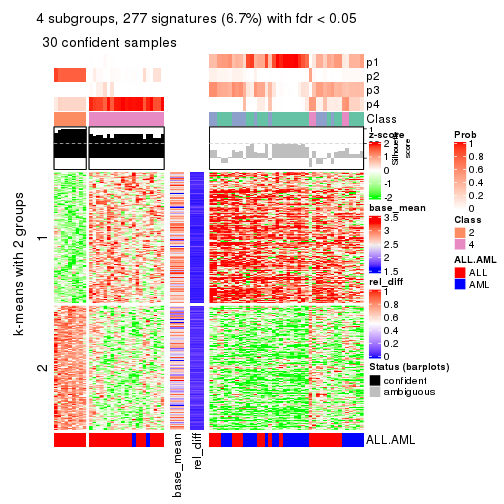

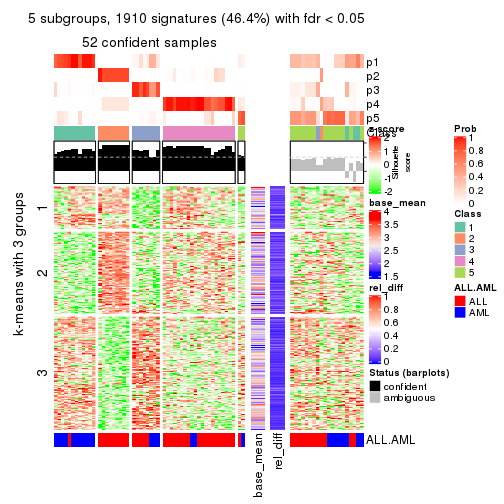

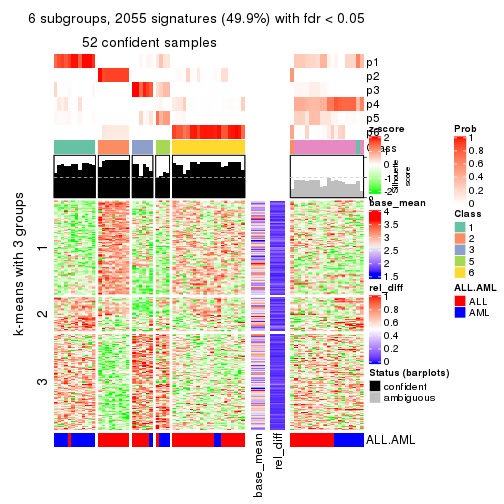

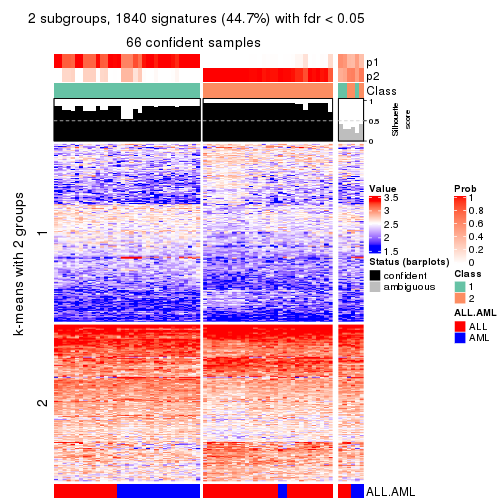

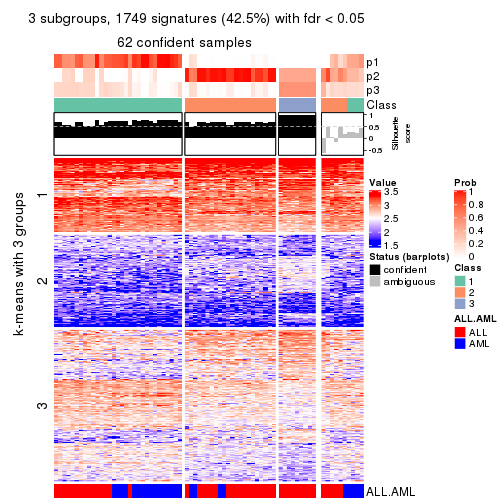

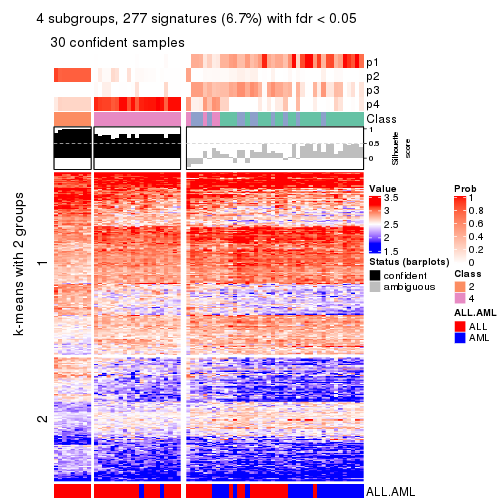

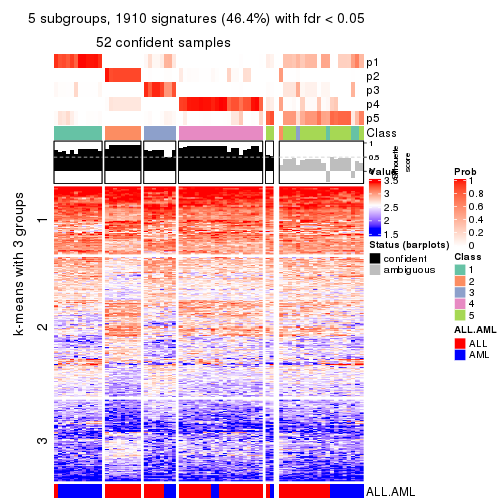

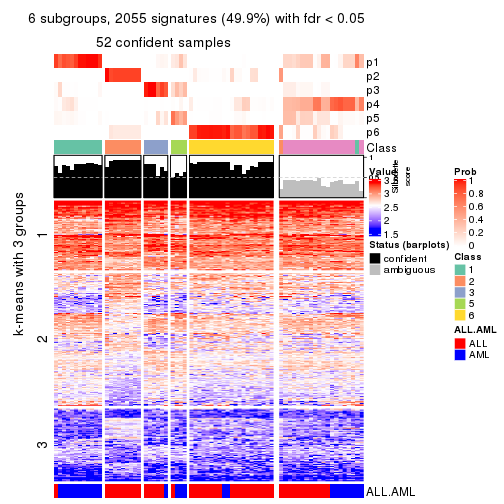

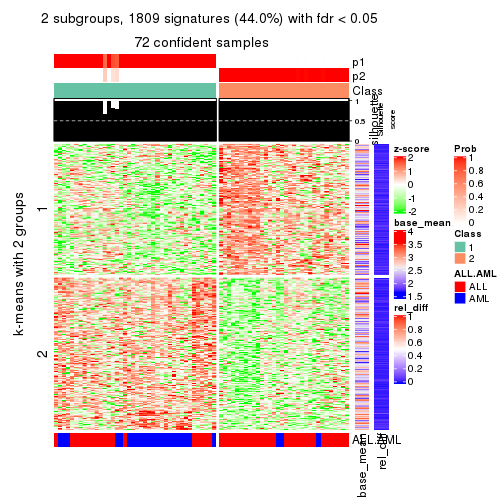

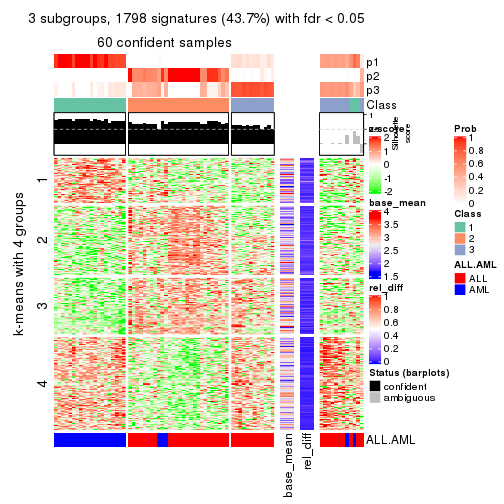

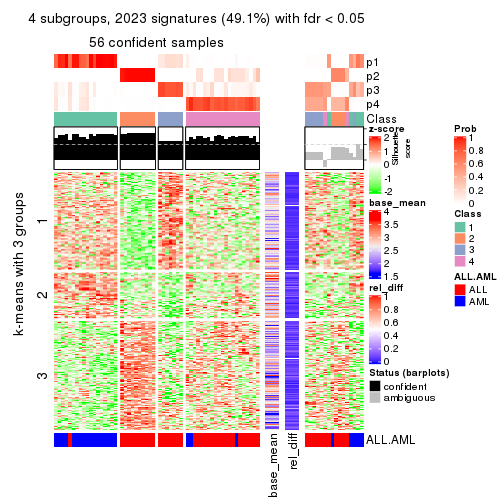

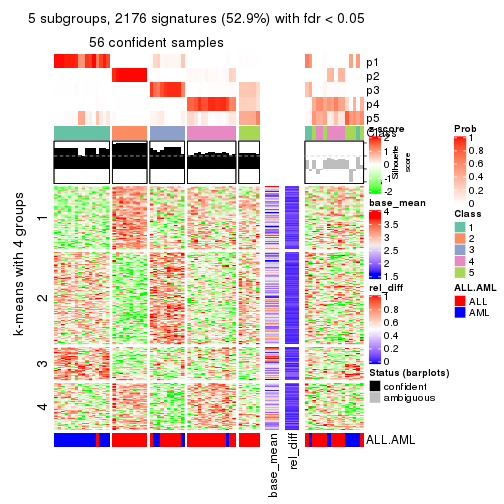

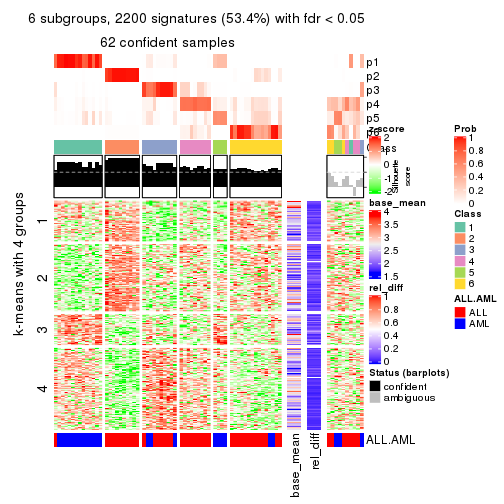

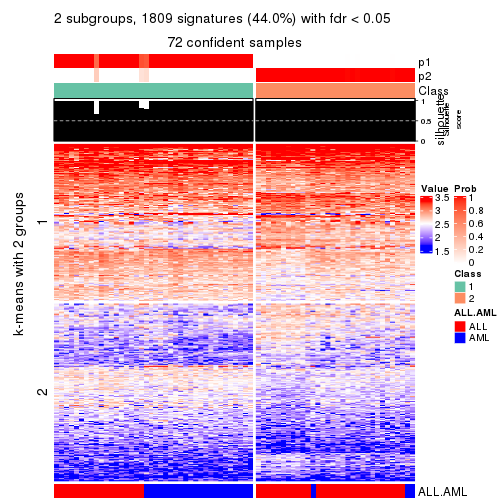

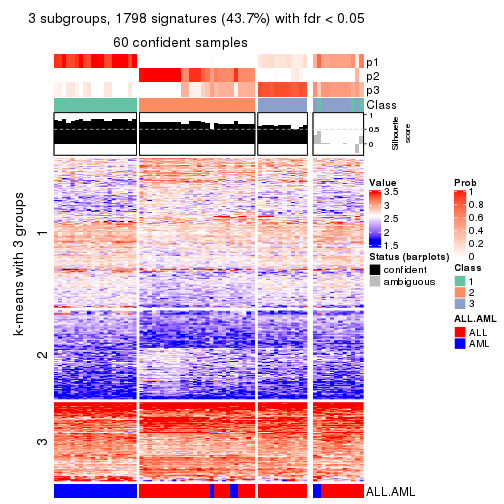

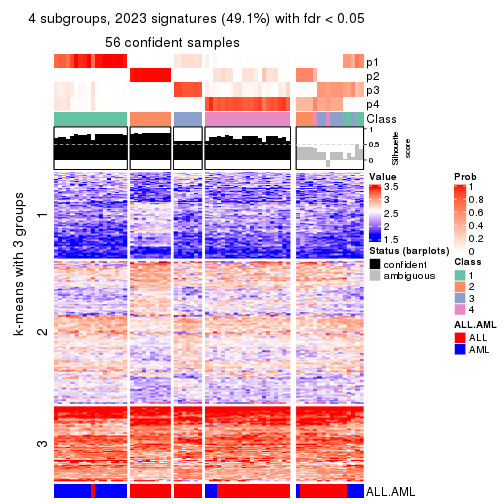

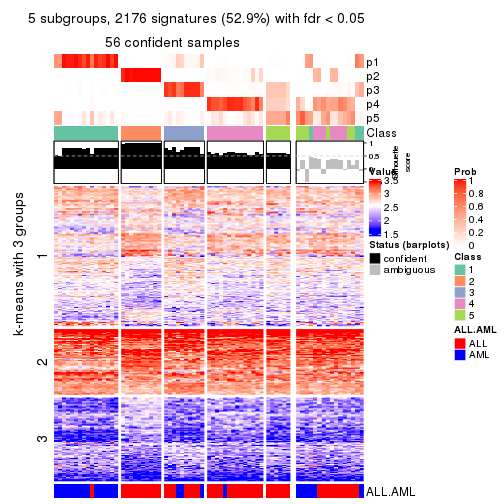

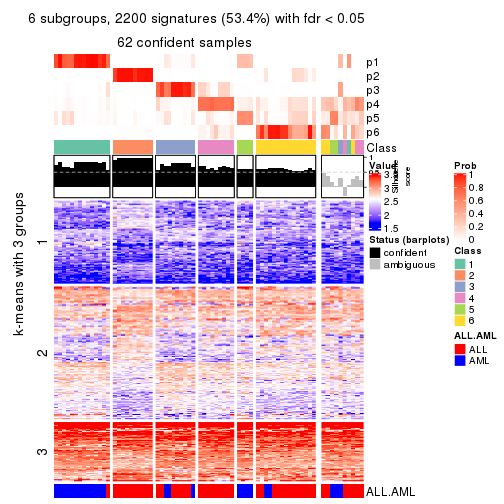

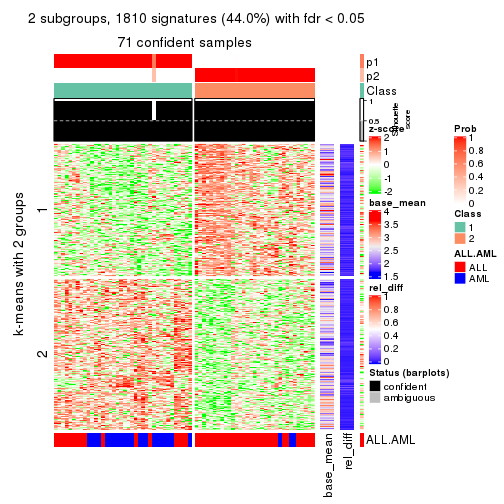

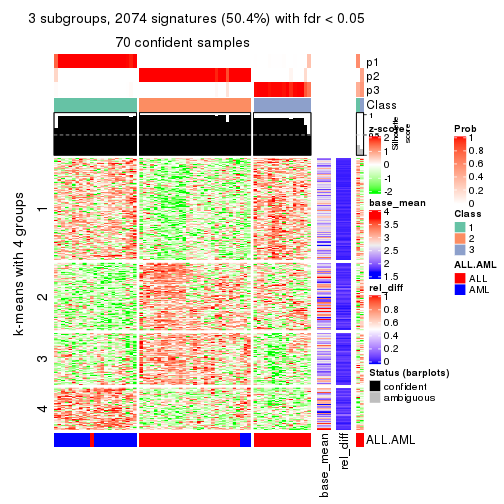

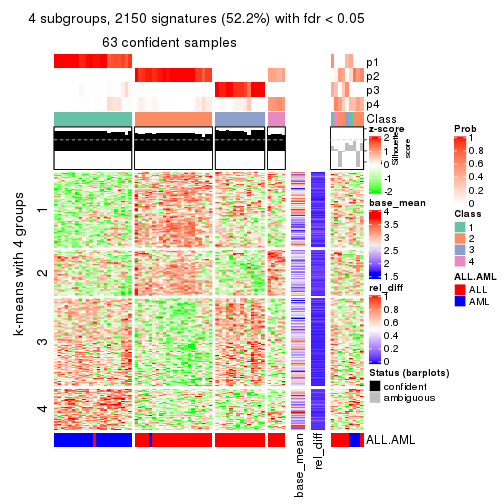

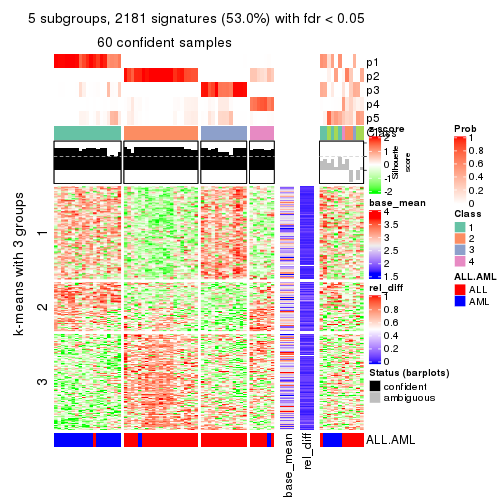

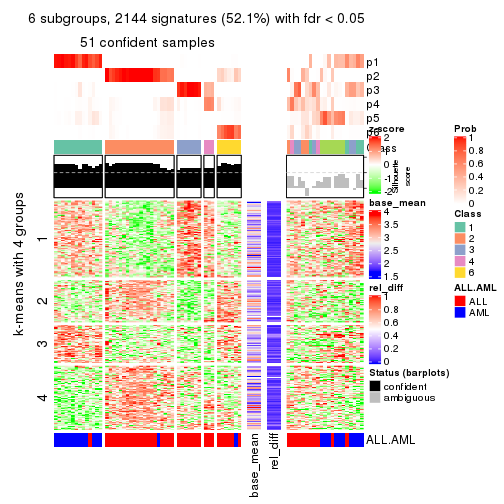

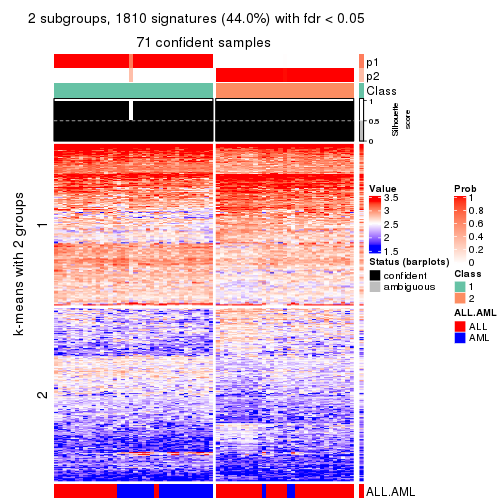

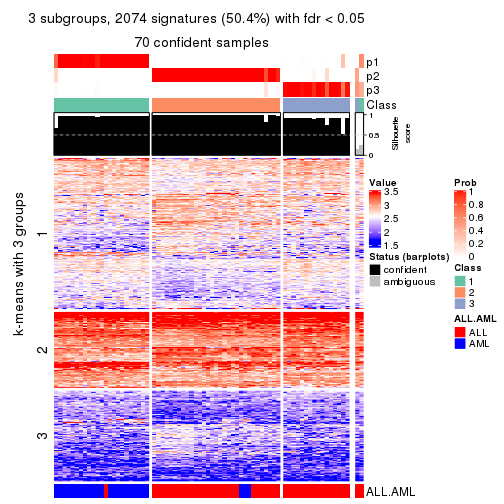

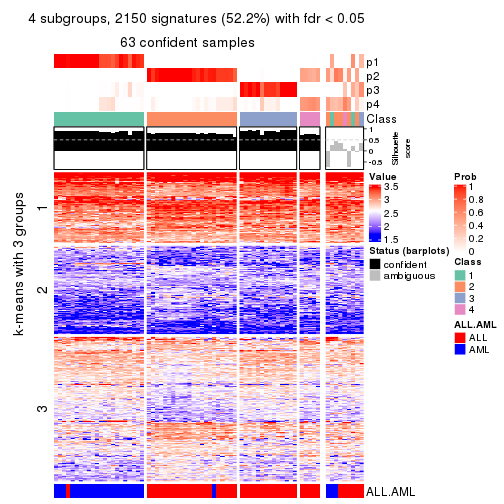

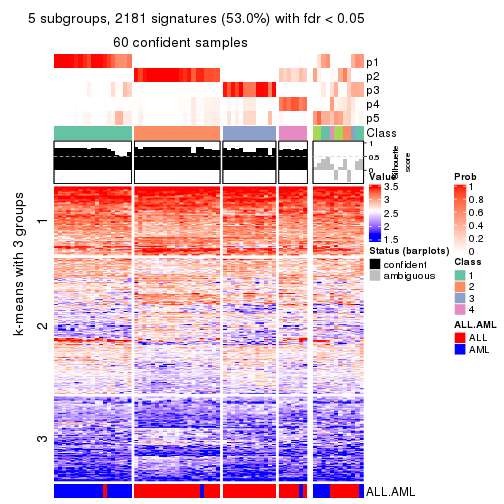

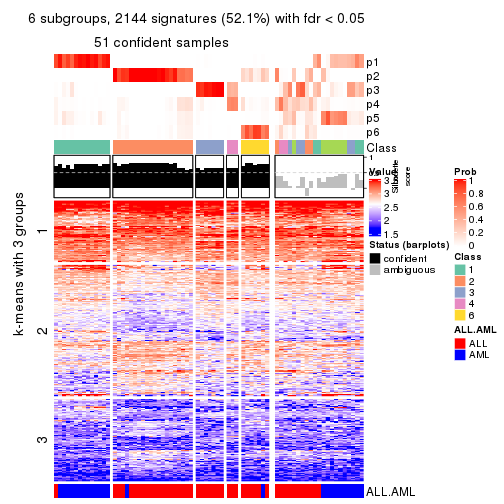

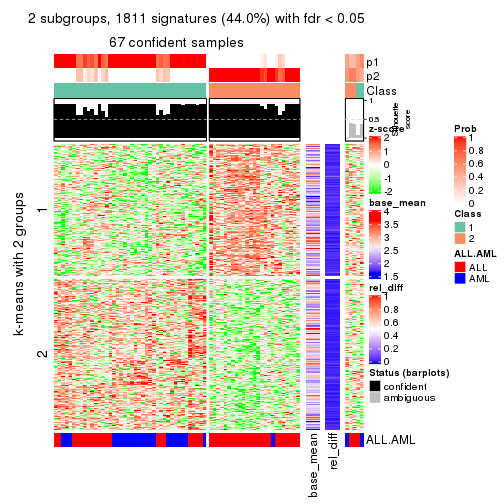

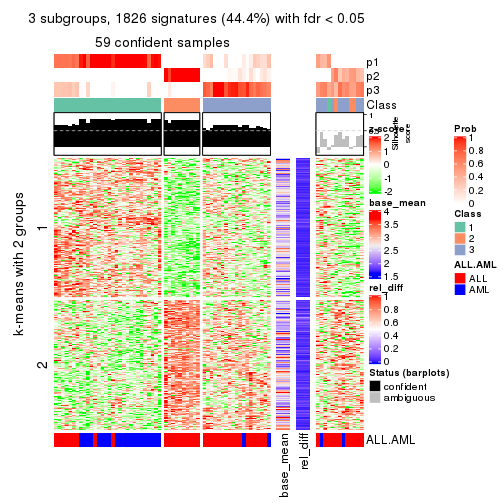

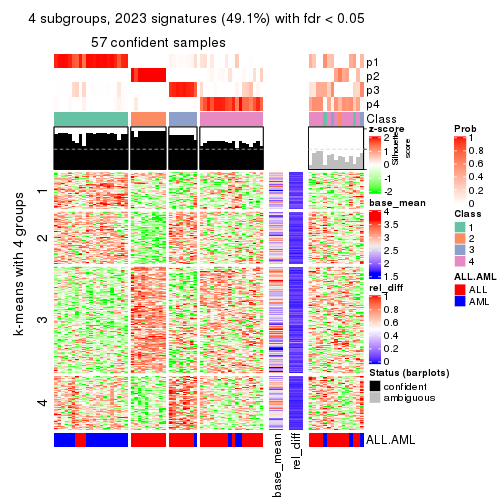

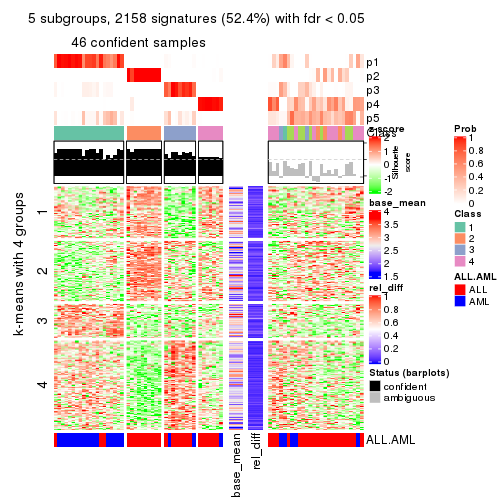

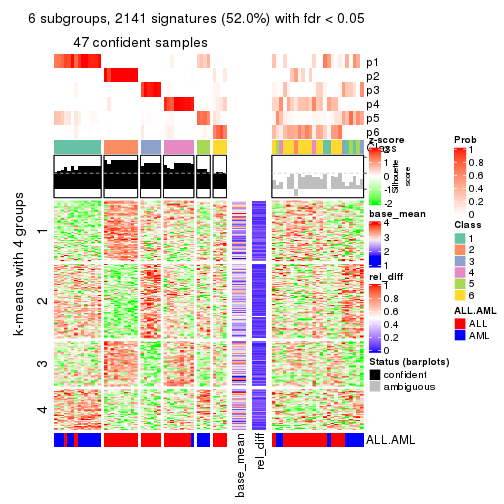

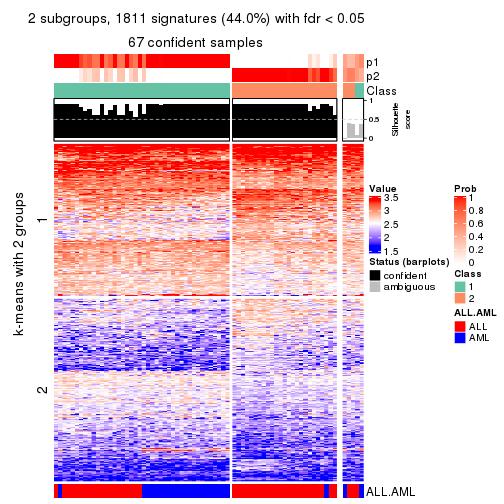

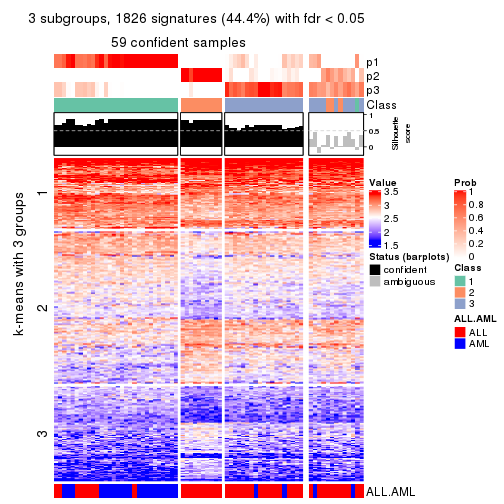

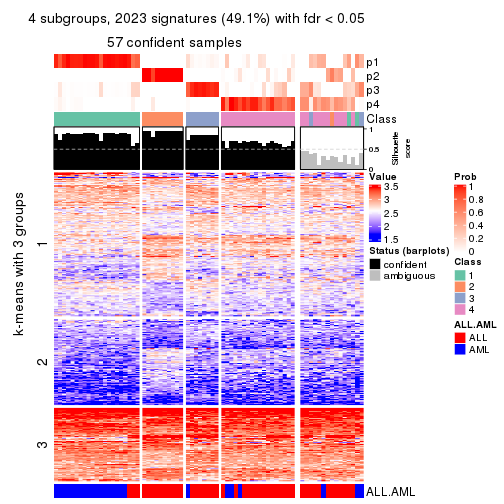

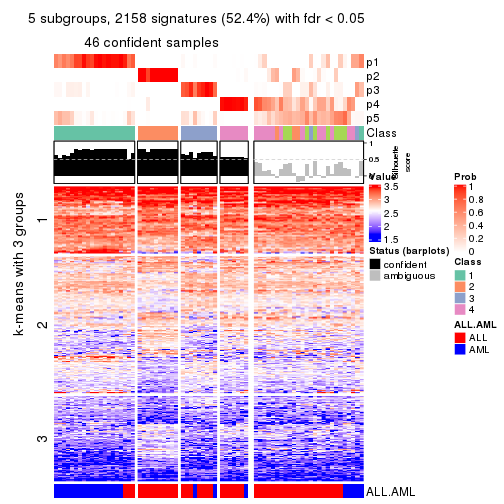

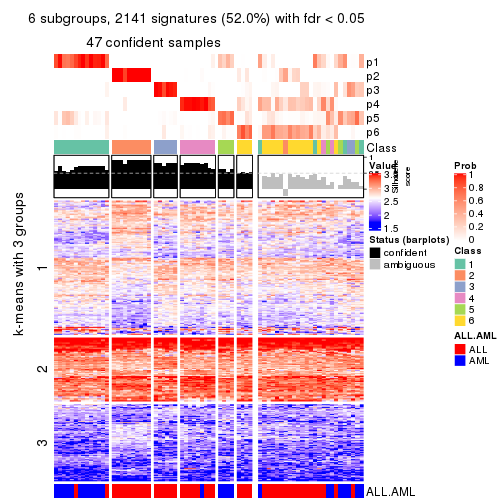

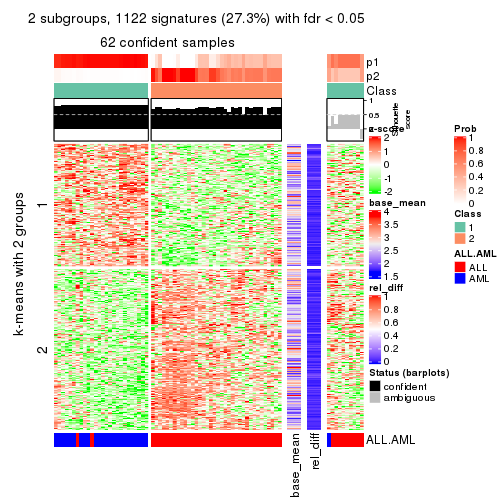

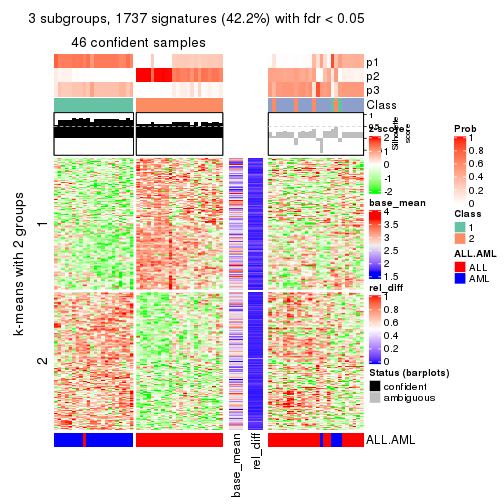

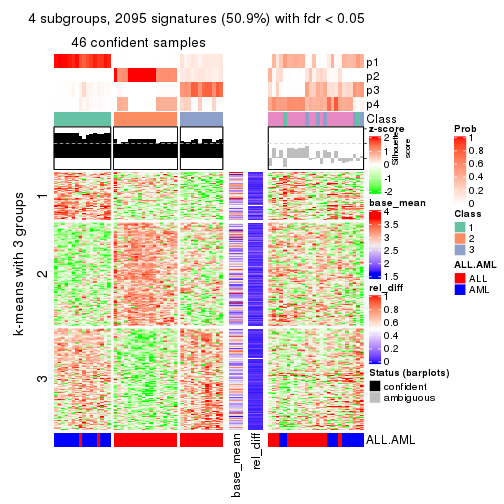

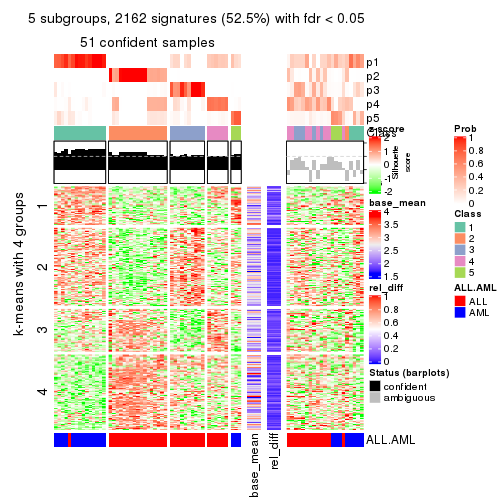

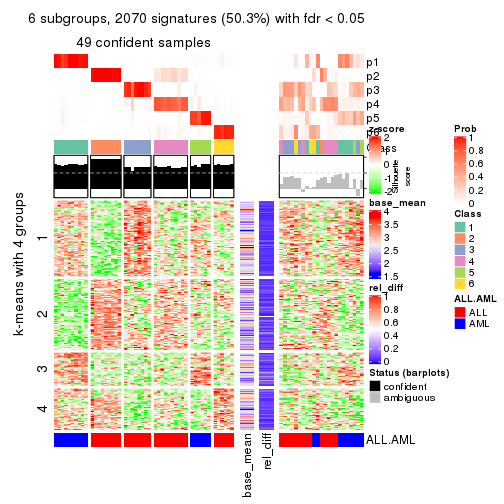

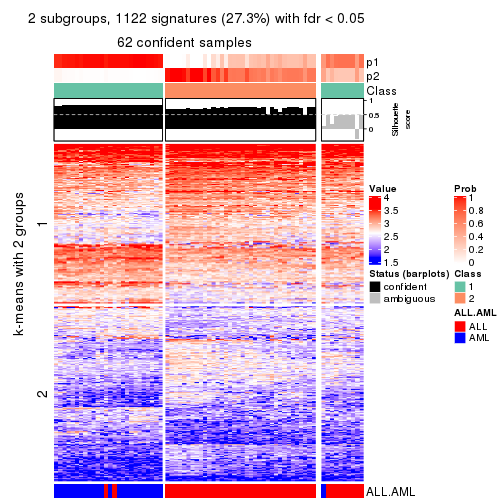

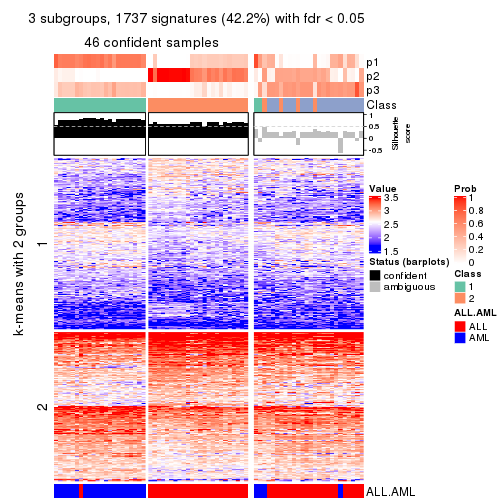

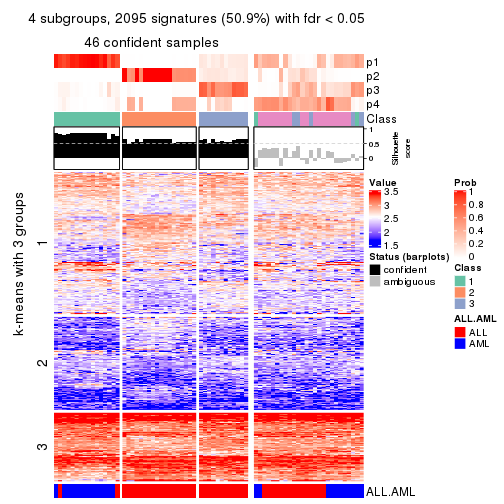

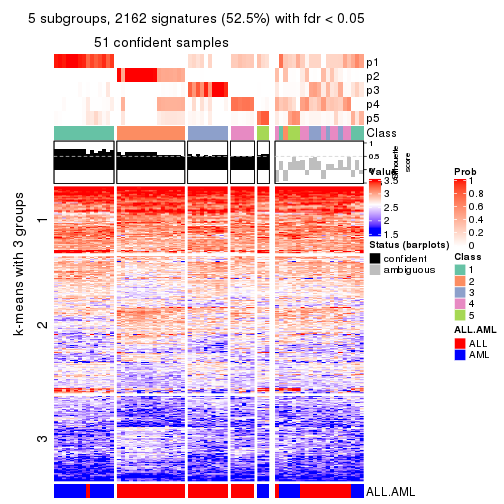

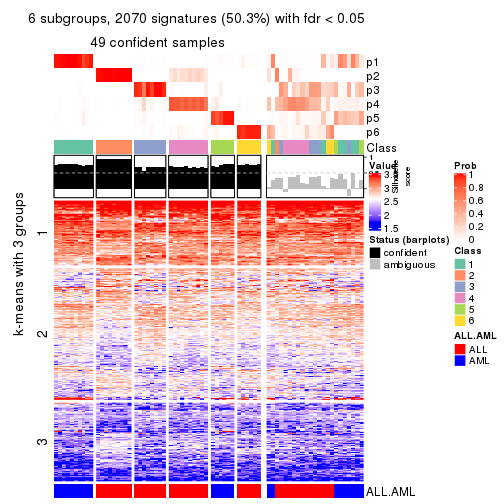

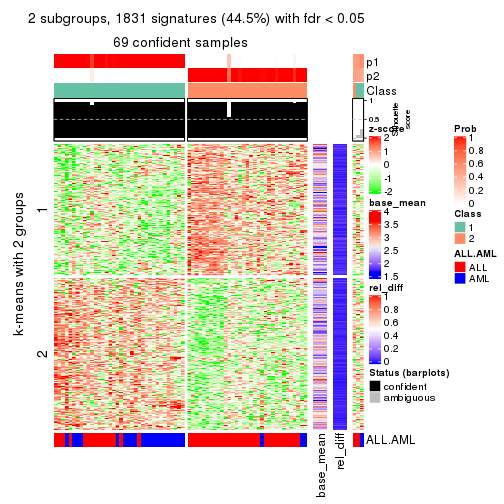

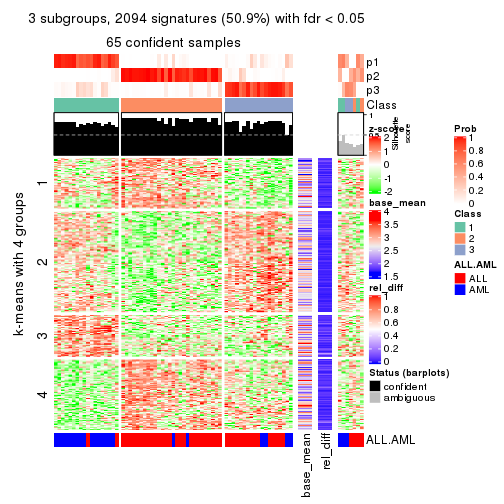

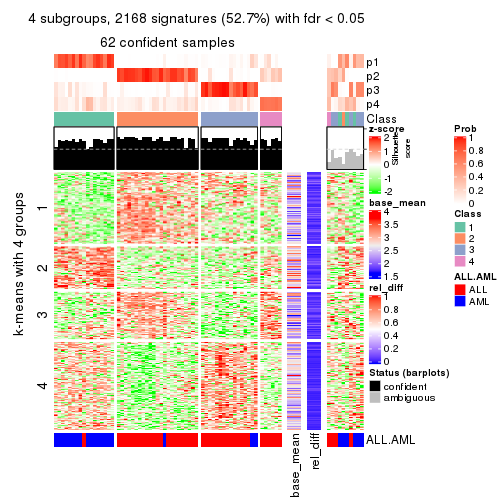

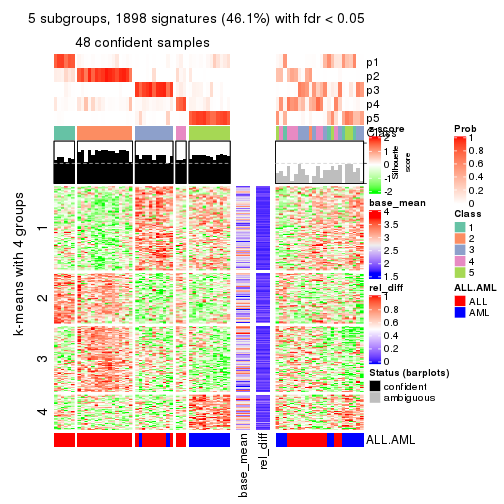

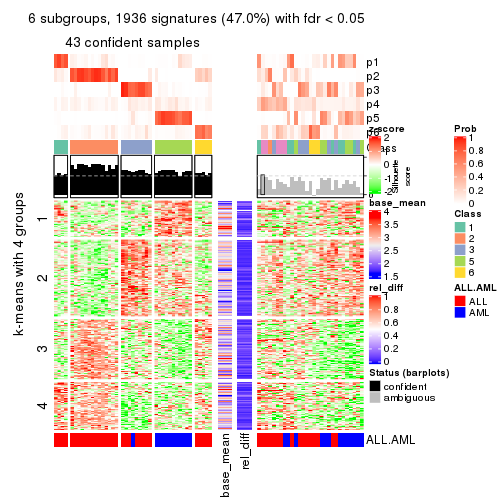

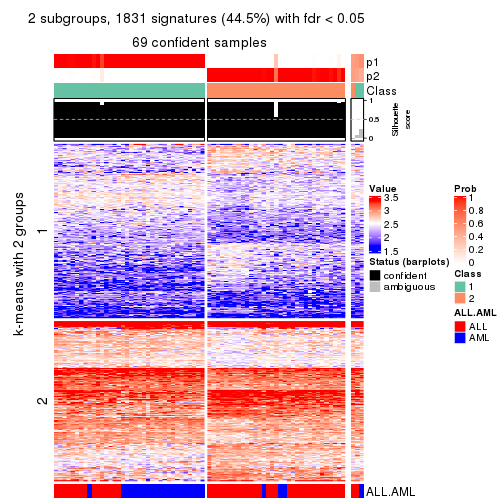

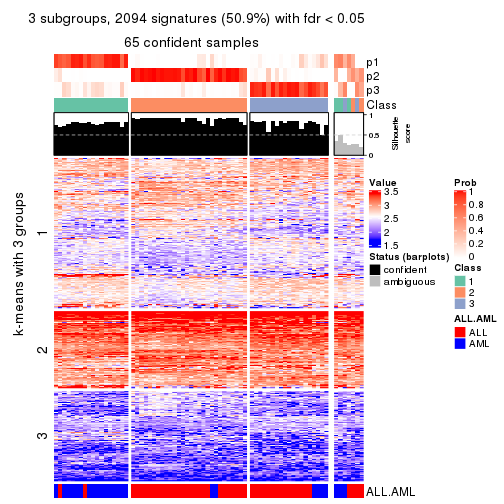

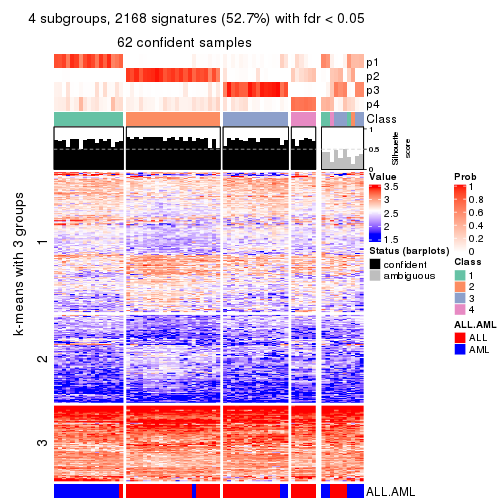

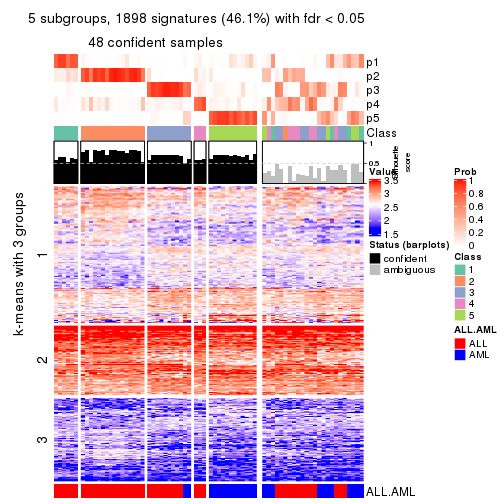

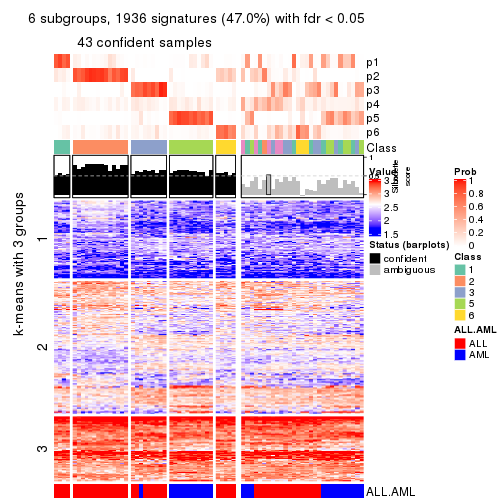

Signature heatmaps for all methods.

Note that rows are scaled in the following heatmaps.

collect_plots(res_list, k = 2, fun = get_signatures, mc.cores = 4)

collect_plots(res_list, k = 3, fun = get_signatures, mc.cores = 4)

collect_plots(res_list, k = 4, fun = get_signatures, mc.cores = 4)

collect_plots(res_list, k = 5, fun = get_signatures, mc.cores = 4)

collect_plots(res_list, k = 6, fun = get_signatures, mc.cores = 4)

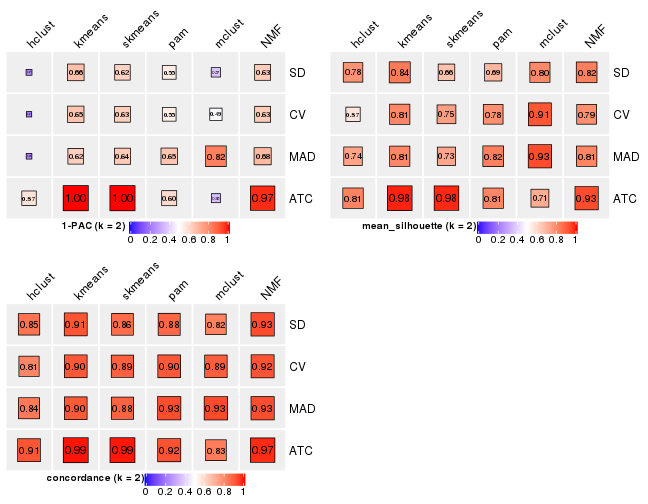

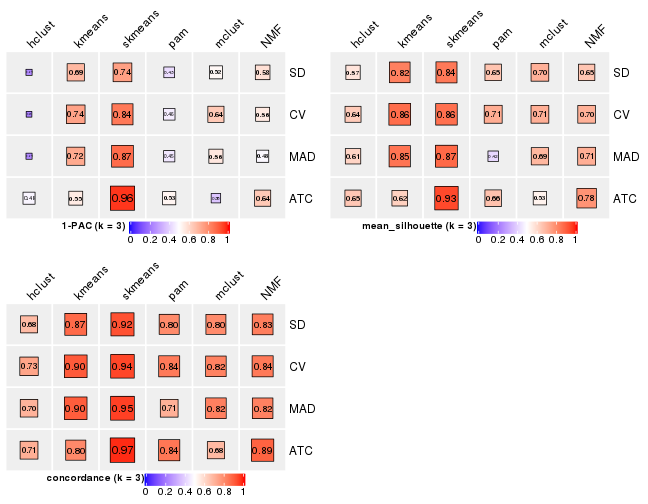

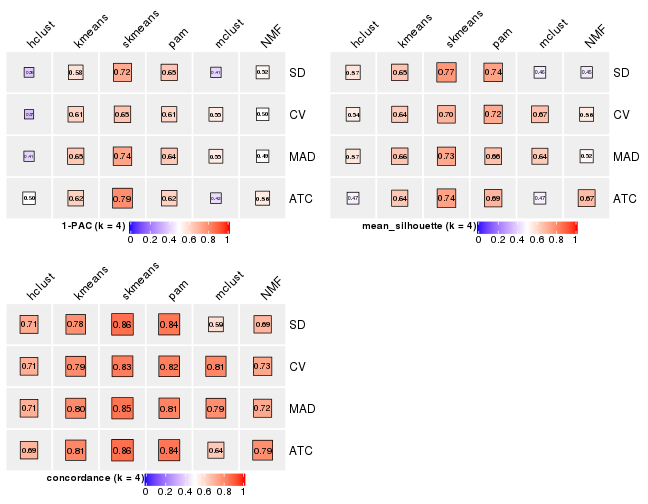

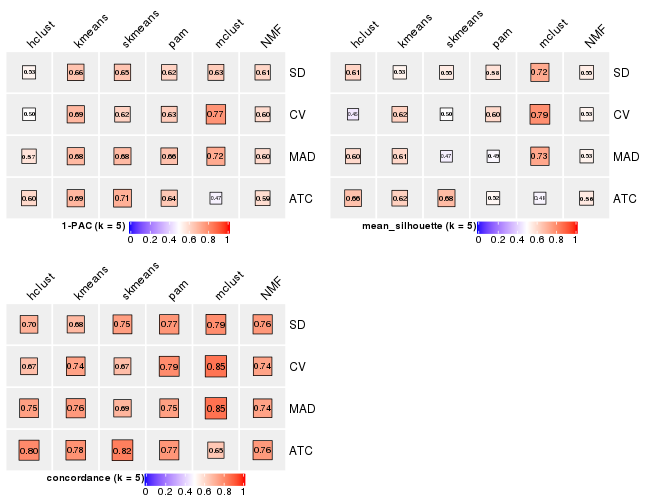

The following statistics measure the stability of consensus partitioning.

get_stats(res_list, k = 2)

#> k 1-PAC mean_silhouette concordance area_increased Rand Jaccard

#> SD:NMF 2 0.633 0.823 0.927 0.489 0.512 0.512

#> CV:NMF 2 0.633 0.787 0.916 0.491 0.507 0.507

#> MAD:NMF 2 0.680 0.811 0.925 0.490 0.507 0.507

#> ATC:NMF 2 0.972 0.927 0.973 0.505 0.495 0.495

#> SD:skmeans 2 0.617 0.659 0.865 0.497 0.512 0.512

#> CV:skmeans 2 0.629 0.748 0.890 0.497 0.495 0.495

#> MAD:skmeans 2 0.640 0.728 0.884 0.497 0.493 0.493

#> ATC:skmeans 2 1.000 0.977 0.991 0.505 0.496 0.496

#> SD:mclust 2 0.373 0.801 0.818 0.410 0.518 0.518

#> CV:mclust 2 0.489 0.908 0.895 0.431 0.532 0.532

#> MAD:mclust 2 0.824 0.927 0.932 0.442 0.532 0.532

#> ATC:mclust 2 0.360 0.711 0.828 0.426 0.493 0.493

#> SD:kmeans 2 0.662 0.836 0.911 0.479 0.540 0.540

#> CV:kmeans 2 0.653 0.806 0.905 0.484 0.532 0.532

#> MAD:kmeans 2 0.623 0.805 0.904 0.483 0.540 0.540

#> ATC:kmeans 2 1.000 0.982 0.992 0.503 0.499 0.499

#> SD:pam 2 0.548 0.686 0.876 0.490 0.493 0.493

#> CV:pam 2 0.546 0.784 0.904 0.496 0.496 0.496

#> MAD:pam 2 0.651 0.824 0.925 0.496 0.499 0.499

#> ATC:pam 2 0.597 0.808 0.918 0.475 0.518 0.518

#> SD:hclust 2 0.236 0.777 0.851 0.435 0.559 0.559

#> CV:hclust 2 0.214 0.570 0.805 0.454 0.512 0.512

#> MAD:hclust 2 0.227 0.737 0.838 0.444 0.549 0.549

#> ATC:hclust 2 0.574 0.813 0.911 0.486 0.495 0.495

get_stats(res_list, k = 3)

#> k 1-PAC mean_silhouette concordance area_increased Rand Jaccard

#> SD:NMF 3 0.582 0.651 0.826 0.337 0.809 0.635

#> CV:NMF 3 0.555 0.703 0.837 0.344 0.737 0.521

#> MAD:NMF 3 0.484 0.709 0.818 0.351 0.787 0.594

#> ATC:NMF 3 0.642 0.776 0.885 0.309 0.782 0.584

#> SD:skmeans 3 0.745 0.836 0.918 0.335 0.763 0.560

#> CV:skmeans 3 0.837 0.857 0.938 0.347 0.743 0.525

#> MAD:skmeans 3 0.866 0.872 0.946 0.346 0.734 0.511

#> ATC:skmeans 3 0.961 0.932 0.973 0.292 0.823 0.652

#> SD:mclust 3 0.518 0.704 0.795 0.454 0.806 0.654

#> CV:mclust 3 0.641 0.708 0.817 0.423 0.796 0.625

#> MAD:mclust 3 0.562 0.688 0.821 0.401 0.786 0.606

#> ATC:mclust 3 0.376 0.532 0.683 0.400 0.718 0.490

#> SD:kmeans 3 0.686 0.822 0.870 0.354 0.789 0.609

#> CV:kmeans 3 0.739 0.864 0.901 0.366 0.774 0.583

#> MAD:kmeans 3 0.721 0.848 0.896 0.361 0.786 0.605

#> ATC:kmeans 3 0.553 0.621 0.800 0.302 0.777 0.575

#> SD:pam 3 0.427 0.654 0.797 0.335 0.748 0.531

#> CV:pam 3 0.462 0.713 0.835 0.326 0.754 0.539

#> MAD:pam 3 0.448 0.417 0.706 0.317 0.716 0.503

#> ATC:pam 3 0.525 0.658 0.843 0.345 0.690 0.478

#> SD:hclust 3 0.245 0.570 0.676 0.432 0.737 0.543

#> CV:hclust 3 0.226 0.635 0.734 0.393 0.723 0.505

#> MAD:hclust 3 0.243 0.613 0.697 0.421 0.759 0.569

#> ATC:hclust 3 0.479 0.645 0.709 0.277 0.842 0.698

get_stats(res_list, k = 4)

#> k 1-PAC mean_silhouette concordance area_increased Rand Jaccard

#> SD:NMF 4 0.517 0.454 0.690 0.130 0.863 0.632

#> CV:NMF 4 0.497 0.556 0.731 0.127 0.869 0.633

#> MAD:NMF 4 0.493 0.522 0.720 0.123 0.865 0.627

#> ATC:NMF 4 0.558 0.673 0.788 0.118 0.900 0.717

#> SD:skmeans 4 0.718 0.768 0.857 0.111 0.913 0.745

#> CV:skmeans 4 0.655 0.698 0.828 0.107 0.896 0.700

#> MAD:skmeans 4 0.735 0.726 0.847 0.102 0.886 0.681

#> ATC:skmeans 4 0.789 0.740 0.857 0.102 0.925 0.789

#> SD:mclust 4 0.414 0.463 0.587 0.144 0.784 0.560

#> CV:mclust 4 0.550 0.668 0.806 0.133 0.768 0.457

#> MAD:mclust 4 0.550 0.636 0.787 0.149 0.813 0.535

#> ATC:mclust 4 0.424 0.471 0.640 0.161 0.733 0.362

#> SD:kmeans 4 0.580 0.653 0.780 0.125 0.900 0.718

#> CV:kmeans 4 0.615 0.639 0.792 0.115 0.957 0.869

#> MAD:kmeans 4 0.646 0.655 0.796 0.119 0.938 0.817

#> ATC:kmeans 4 0.620 0.644 0.808 0.118 0.818 0.526

#> SD:pam 4 0.648 0.737 0.840 0.118 0.912 0.747

#> CV:pam 4 0.608 0.723 0.822 0.116 0.937 0.812

#> MAD:pam 4 0.644 0.662 0.813 0.123 0.824 0.565

#> ATC:pam 4 0.619 0.692 0.842 0.143 0.861 0.645

#> SD:hclust 4 0.388 0.570 0.714 0.134 0.940 0.820

#> CV:hclust 4 0.370 0.544 0.706 0.132 0.962 0.883

#> MAD:hclust 4 0.409 0.566 0.706 0.137 0.949 0.845

#> ATC:hclust 4 0.495 0.470 0.695 0.138 0.869 0.676

get_stats(res_list, k = 5)

#> k 1-PAC mean_silhouette concordance area_increased Rand Jaccard

#> SD:NMF 5 0.608 0.548 0.765 0.0718 0.873 0.576

#> CV:NMF 5 0.595 0.529 0.738 0.0700 0.874 0.573

#> MAD:NMF 5 0.598 0.532 0.740 0.0694 0.876 0.575

#> ATC:NMF 5 0.590 0.557 0.763 0.0700 0.876 0.587

#> SD:skmeans 5 0.653 0.551 0.750 0.0682 0.929 0.750

#> CV:skmeans 5 0.617 0.503 0.671 0.0677 0.887 0.609

#> MAD:skmeans 5 0.679 0.465 0.693 0.0684 0.887 0.614

#> ATC:skmeans 5 0.706 0.678 0.825 0.0633 0.941 0.804

#> SD:mclust 5 0.634 0.721 0.790 0.1525 0.761 0.398

#> CV:mclust 5 0.774 0.787 0.849 0.1273 0.905 0.670

#> MAD:mclust 5 0.719 0.729 0.853 0.0984 0.885 0.615

#> ATC:mclust 5 0.471 0.479 0.646 0.0578 0.910 0.666

#> SD:kmeans 5 0.656 0.530 0.683 0.0757 0.922 0.732

#> CV:kmeans 5 0.687 0.620 0.740 0.0721 0.862 0.553

#> MAD:kmeans 5 0.683 0.605 0.761 0.0716 0.879 0.603

#> ATC:kmeans 5 0.689 0.624 0.780 0.0746 0.857 0.530

#> SD:pam 5 0.615 0.575 0.770 0.0794 0.889 0.631

#> CV:pam 5 0.633 0.600 0.793 0.0736 0.892 0.635

#> MAD:pam 5 0.661 0.486 0.746 0.0759 0.861 0.547

#> ATC:pam 5 0.644 0.521 0.770 0.0768 0.896 0.653

#> SD:hclust 5 0.526 0.612 0.703 0.0851 0.948 0.819

#> CV:hclust 5 0.503 0.450 0.665 0.0734 0.922 0.748

#> MAD:hclust 5 0.573 0.598 0.745 0.0754 0.901 0.674

#> ATC:hclust 5 0.601 0.663 0.797 0.0839 0.832 0.492

get_stats(res_list, k = 6)

#> k 1-PAC mean_silhouette concordance area_increased Rand Jaccard

#> SD:NMF 6 0.623 0.415 0.697 0.0416 0.903 0.606

#> CV:NMF 6 0.599 0.459 0.665 0.0441 0.895 0.558

#> MAD:NMF 6 0.611 0.438 0.670 0.0424 0.885 0.530

#> ATC:NMF 6 0.597 0.514 0.685 0.0426 0.942 0.734

#> SD:skmeans 6 0.673 0.561 0.755 0.0479 0.922 0.675

#> CV:skmeans 6 0.629 0.505 0.717 0.0443 0.884 0.524

#> MAD:skmeans 6 0.708 0.641 0.797 0.0465 0.888 0.536

#> ATC:skmeans 6 0.719 0.590 0.786 0.0431 0.914 0.681

#> SD:mclust 6 0.694 0.612 0.778 0.0494 0.940 0.718

#> CV:mclust 6 0.751 0.617 0.762 0.0509 0.922 0.653

#> MAD:mclust 6 0.672 0.573 0.713 0.0392 0.886 0.543

#> ATC:mclust 6 0.701 0.599 0.790 0.1118 0.869 0.485

#> SD:kmeans 6 0.710 0.652 0.782 0.0496 0.852 0.445

#> CV:kmeans 6 0.727 0.715 0.802 0.0472 0.915 0.614

#> MAD:kmeans 6 0.745 0.692 0.795 0.0480 0.917 0.629

#> ATC:kmeans 6 0.712 0.656 0.769 0.0471 0.926 0.674

#> SD:pam 6 0.651 0.449 0.724 0.0473 0.878 0.524

#> CV:pam 6 0.651 0.483 0.711 0.0433 0.962 0.830

#> MAD:pam 6 0.731 0.602 0.801 0.0519 0.852 0.419

#> ATC:pam 6 0.676 0.582 0.763 0.0422 0.856 0.458

#> SD:hclust 6 0.591 0.587 0.716 0.0493 1.000 1.000

#> CV:hclust 6 0.555 0.431 0.637 0.0435 0.918 0.708

#> MAD:hclust 6 0.663 0.590 0.726 0.0402 1.000 1.000

#> ATC:hclust 6 0.622 0.687 0.782 0.0352 0.975 0.882

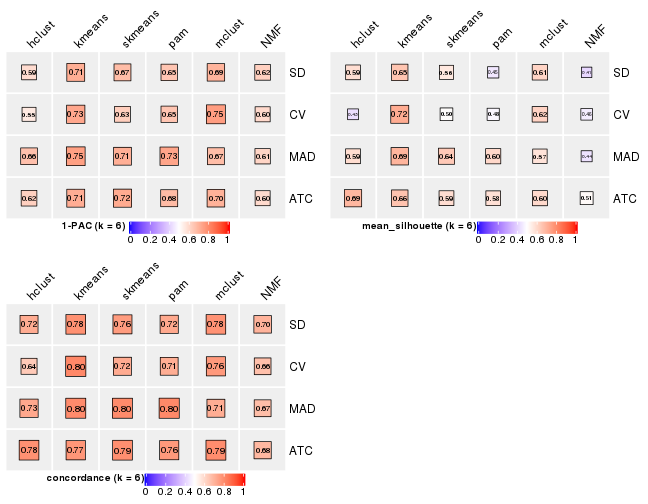

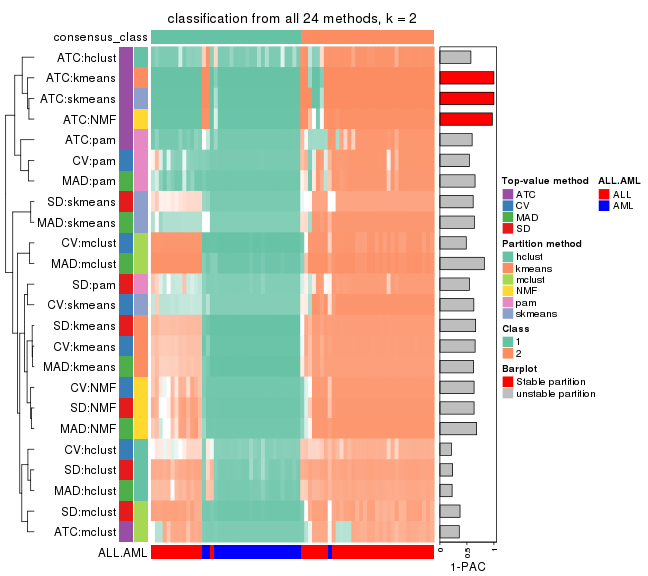

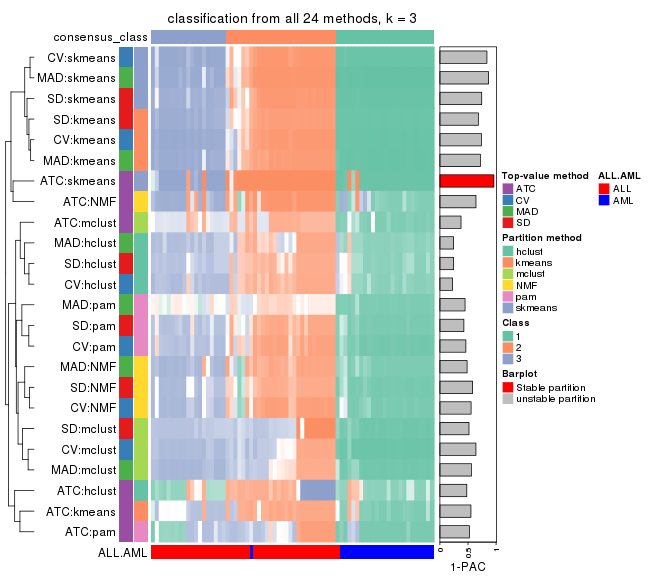

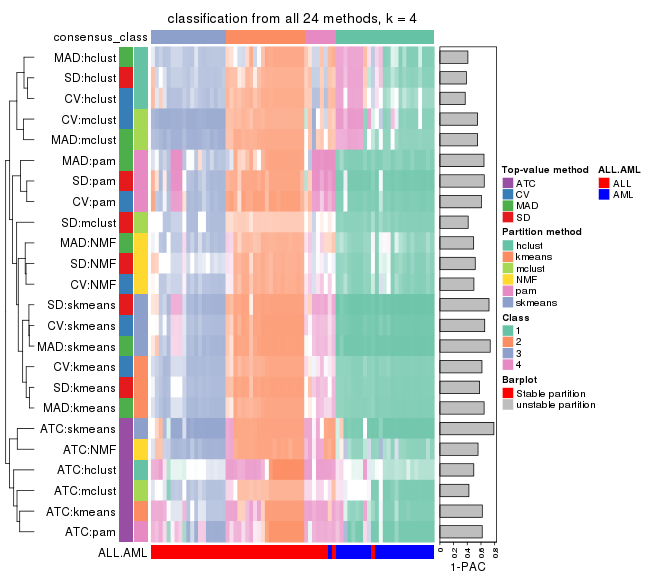

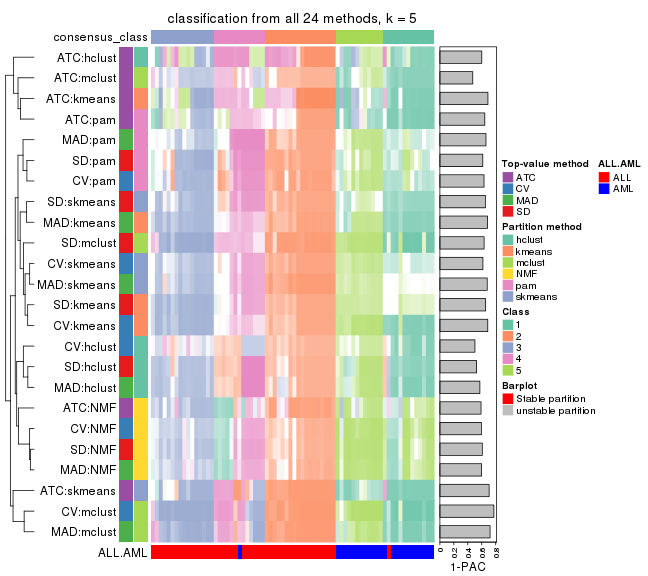

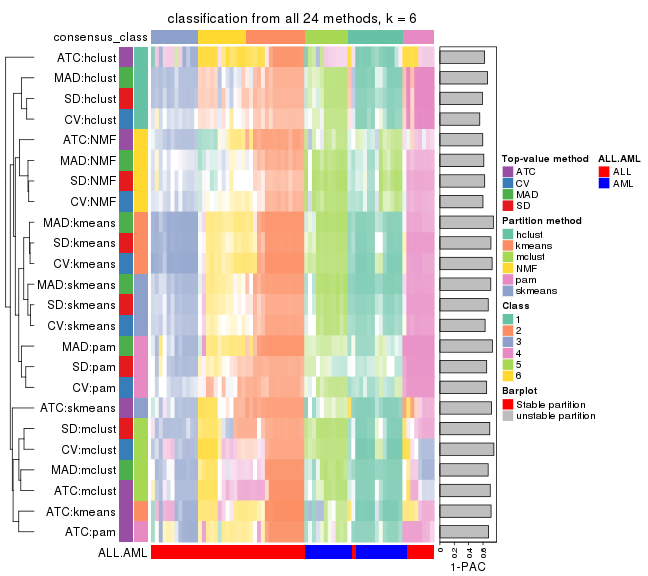

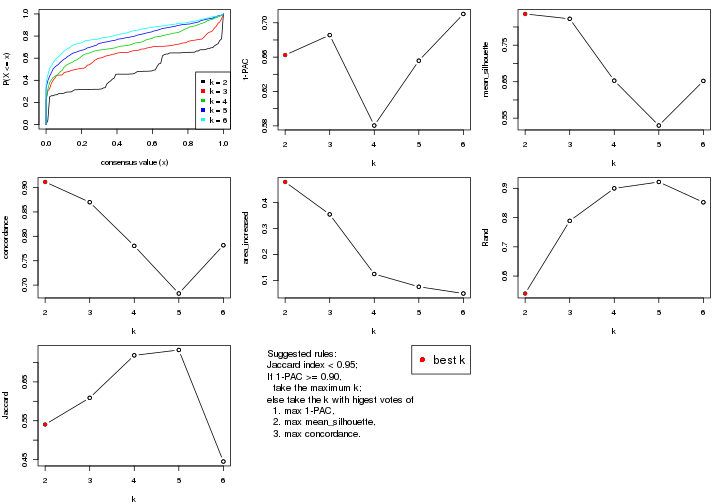

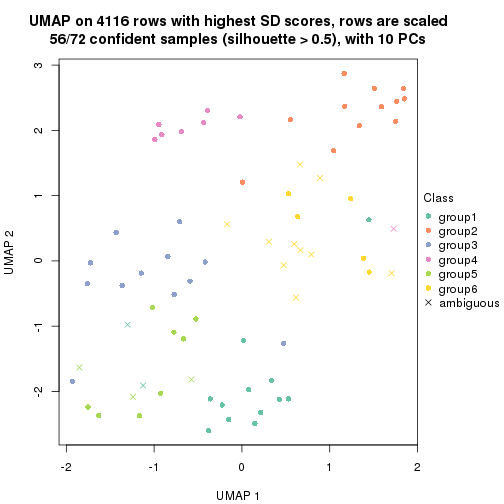

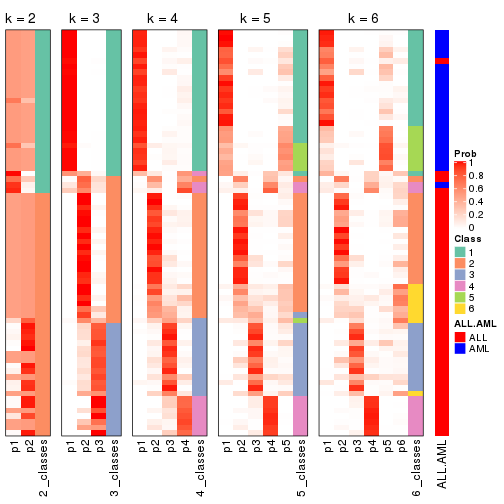

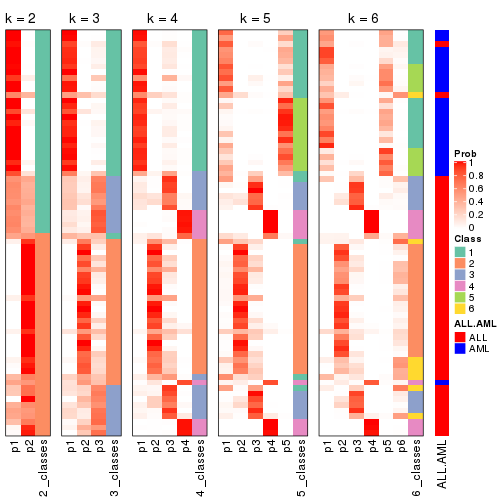

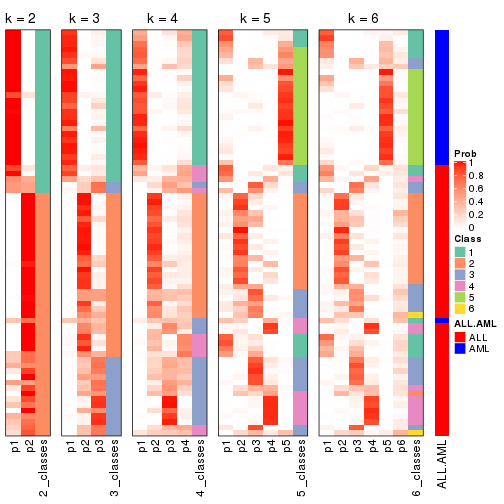

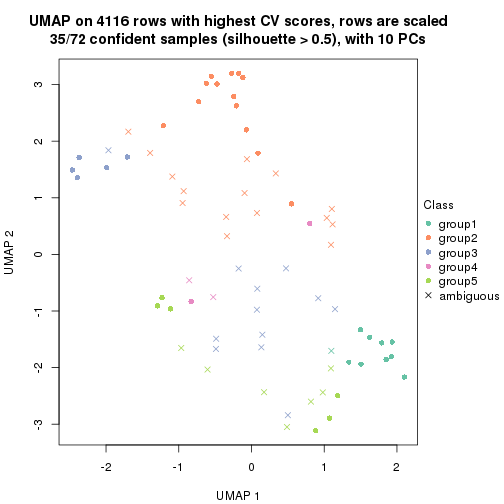

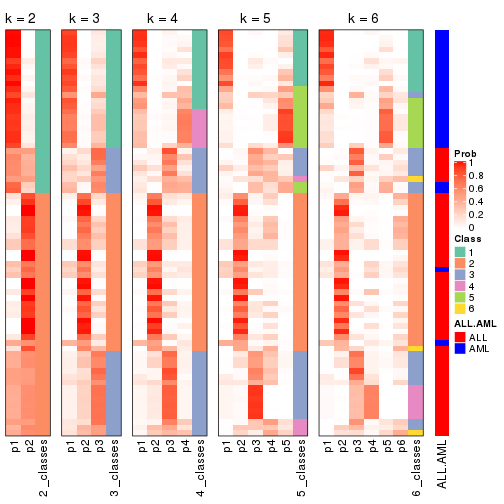

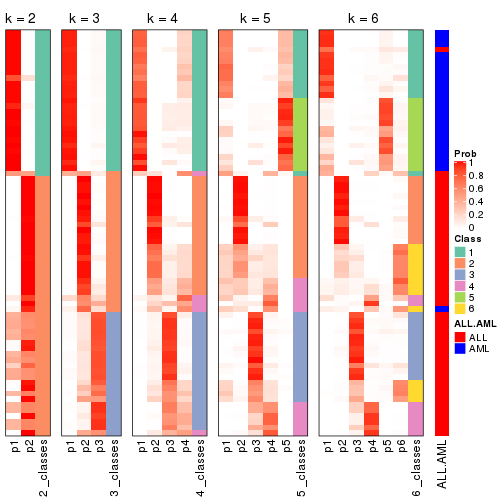

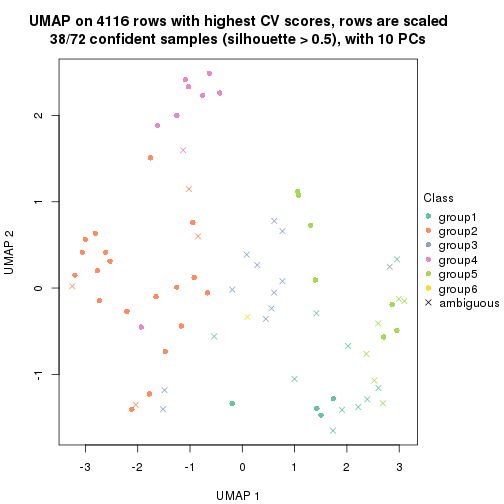

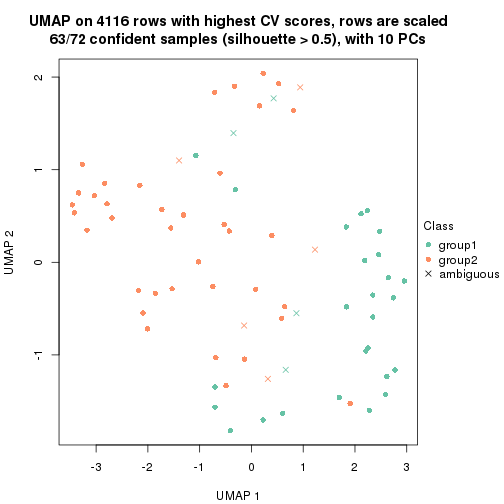

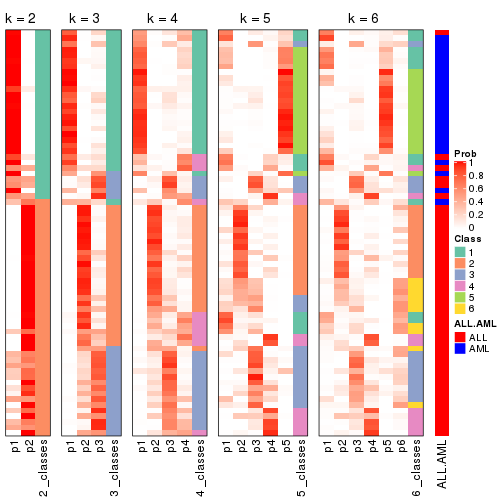

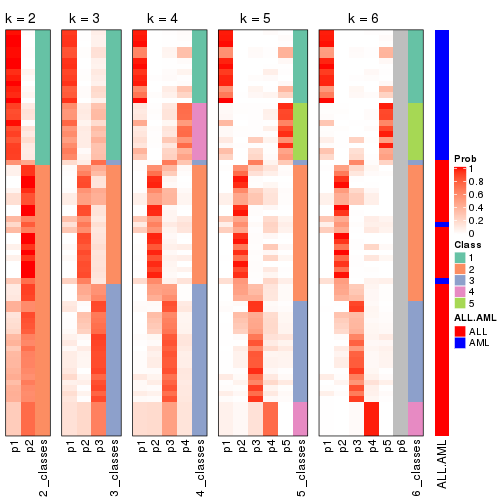

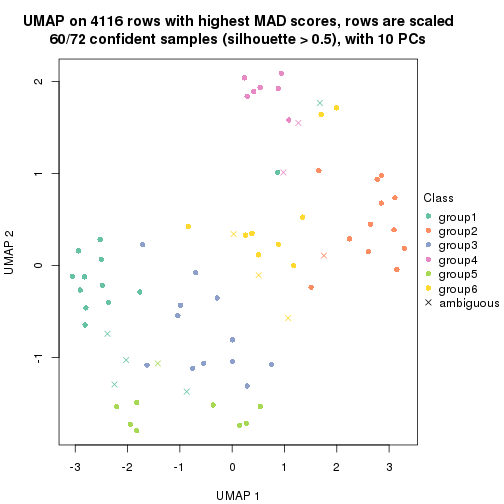

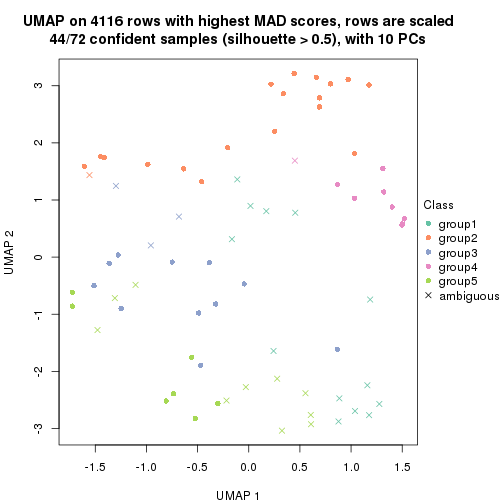

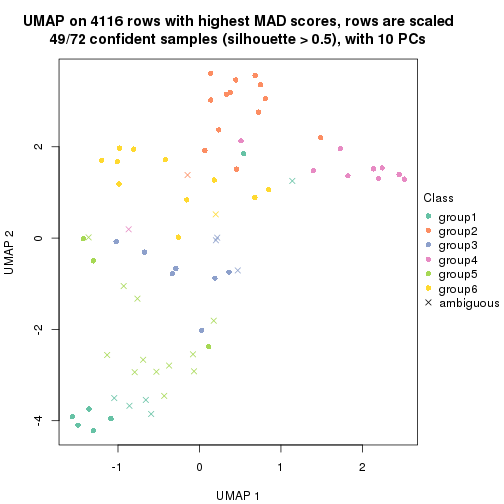

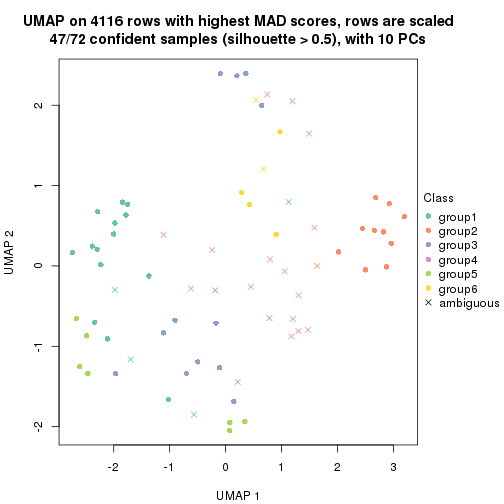

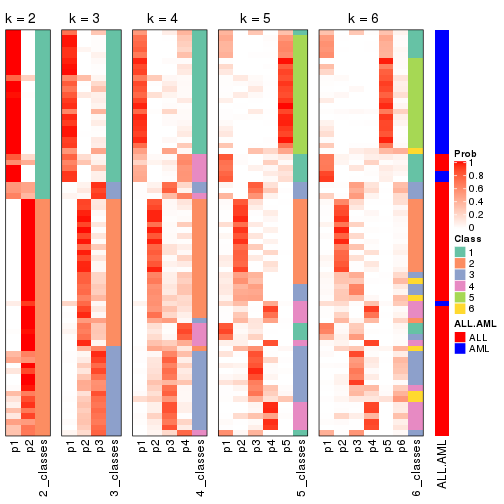

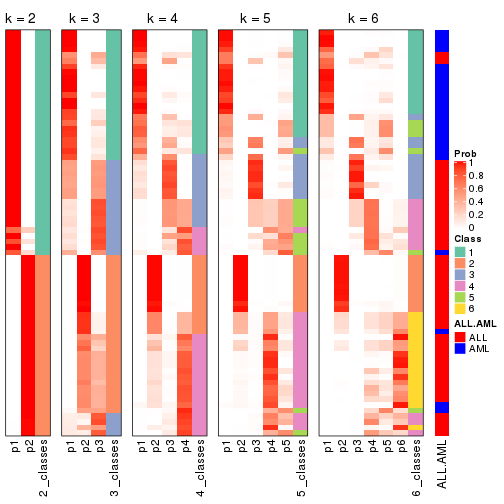

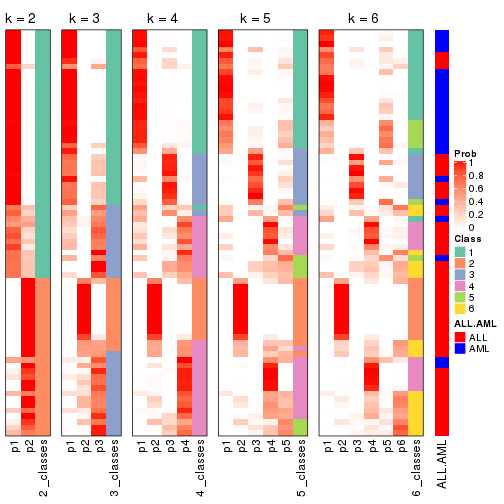

The following heatmap plots the partition for each combination of methods; the lightness corresponds to the silhouette scores of the samples in each method. On top, the consensus subgroup is inferred from all methods, using the mean silhouette scores as weights.

collect_stats(res_list, k = 2)

collect_stats(res_list, k = 3)

collect_stats(res_list, k = 4)

collect_stats(res_list, k = 5)

collect_stats(res_list, k = 6)
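The idea of inferring a consensus subgroup with mean silhouette scores as weights can be sketched as a weighted vote. This is an illustration under assumptions, not cola's implementation: the labels, the weights and the premise that class ids are already aligned across methods are all made up for the example.

```r
# Assumed toy input: class labels from three methods for five samples,
# already relabelled so that class ids agree across methods.
classes <- cbind(
  m1 = c(1, 1, 2, 2, 2),
  m2 = c(1, 1, 1, 2, 2),
  m3 = c(1, 2, 2, 2, 2)
)
# Assumed mean silhouette score of each method, used as its weight.
weight <- c(m1 = 0.9, m2 = 0.6, m3 = 0.3)

# For each sample, sum the weights per class and keep the heaviest class.
consensus_class <- apply(classes, 1, function(x) {
  votes <- tapply(weight, x, sum)
  as.numeric(names(votes)[which.max(votes)])
})
print(consensus_class)
```

A method with a low mean silhouette score contributes little to the vote, so unstable partitions have less influence on the consensus labels.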

Collect partitions from all methods:

collect_classes(res_list, k = 2)

collect_classes(res_list, k = 3)

collect_classes(res_list, k = 4)

collect_classes(res_list, k = 5)

collect_classes(res_list, k = 6)

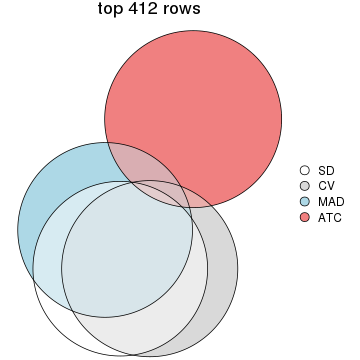

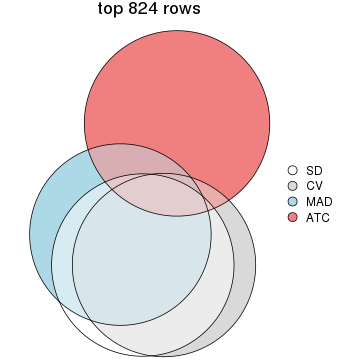

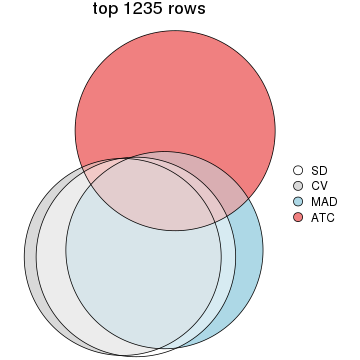

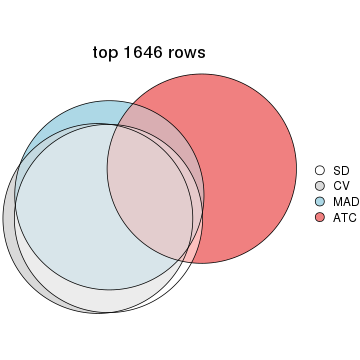

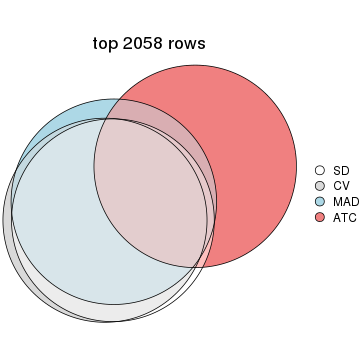

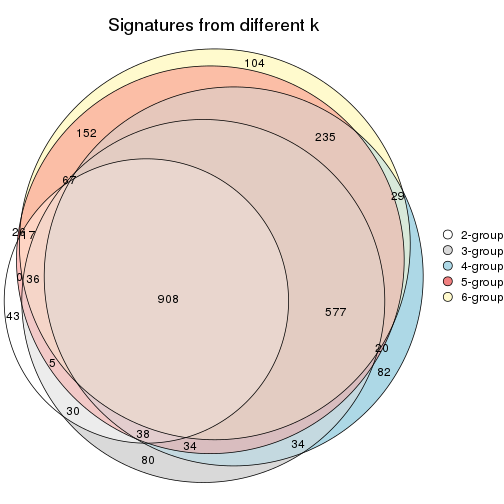

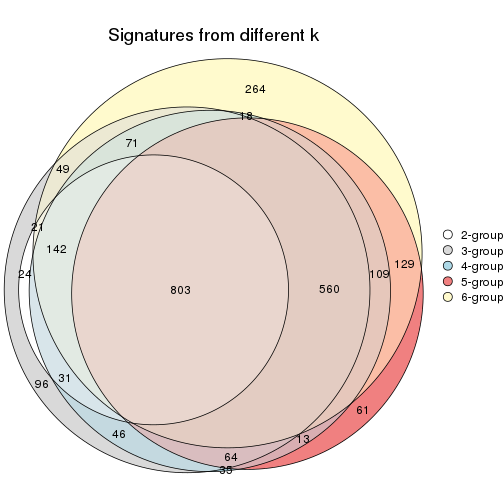

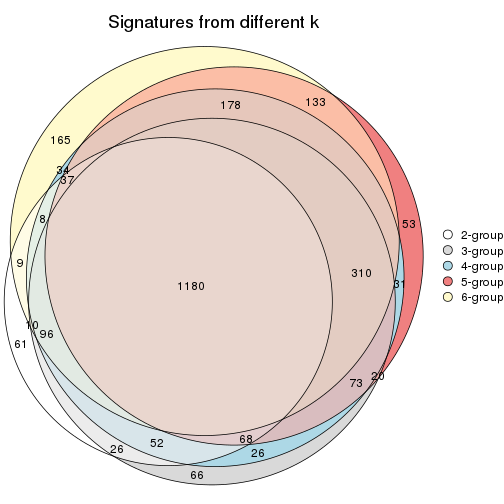

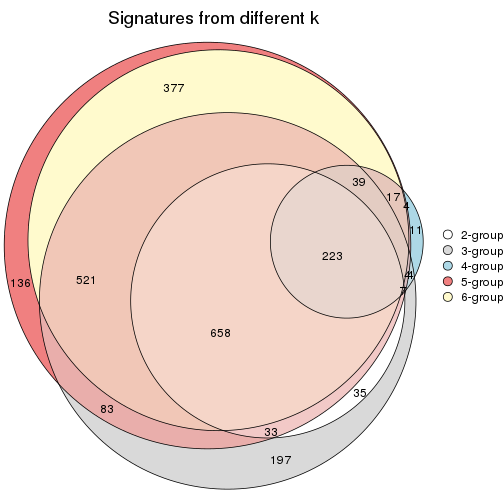

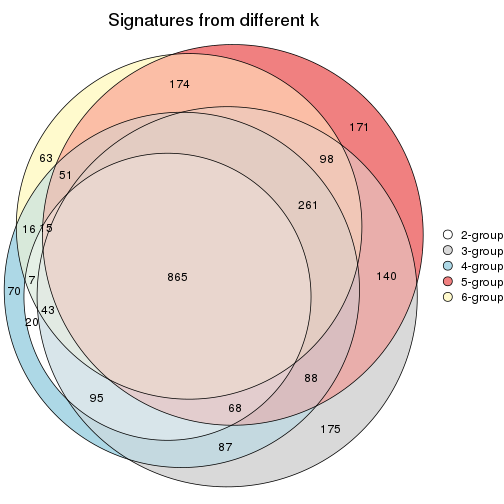

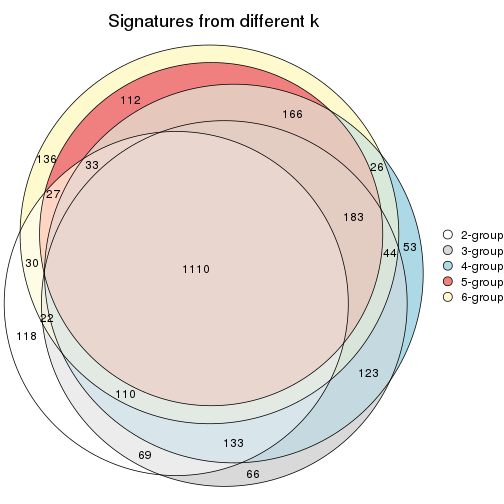

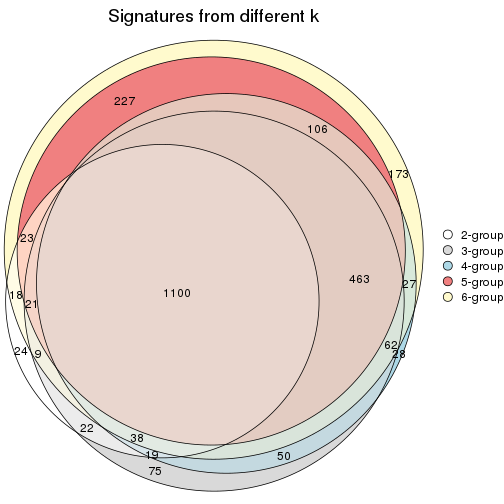

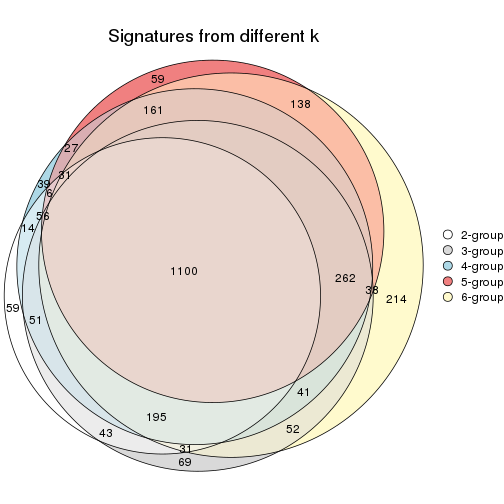

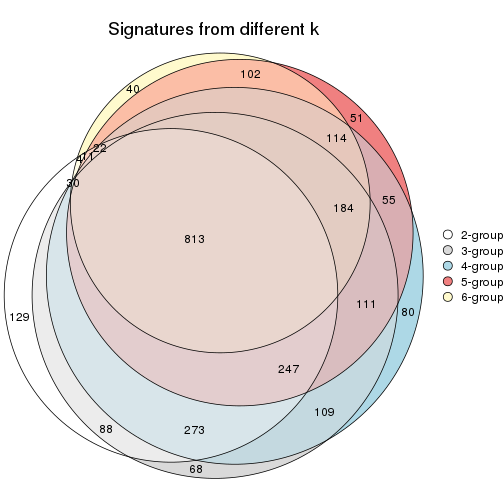

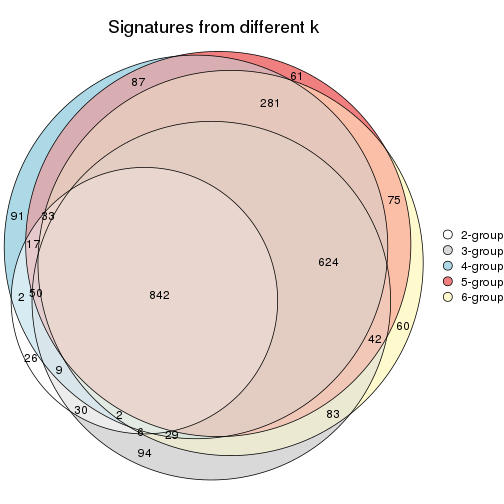

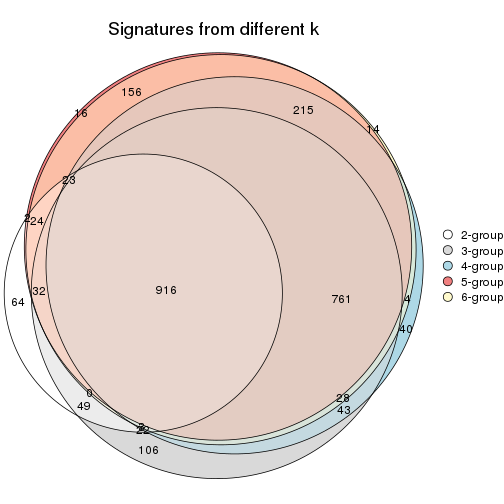

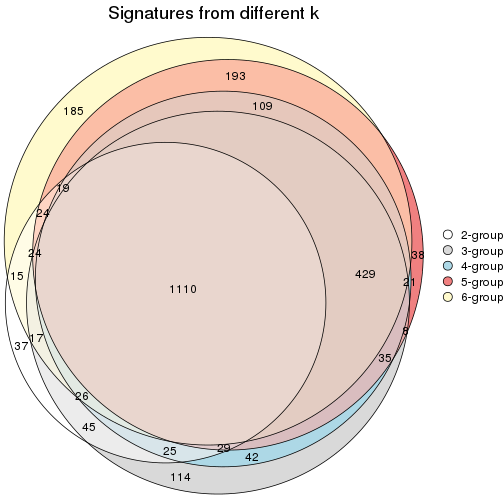

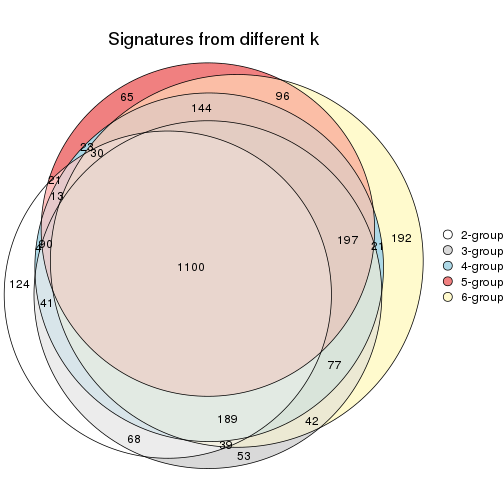

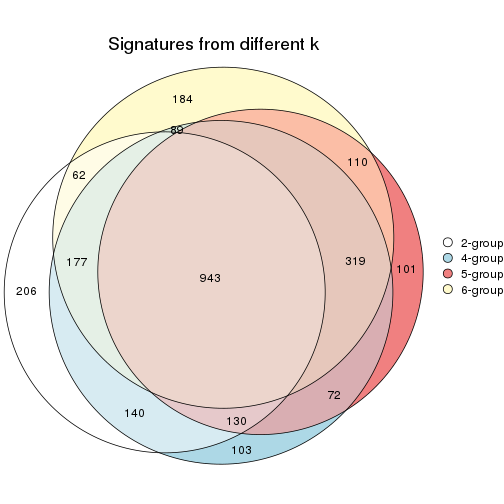

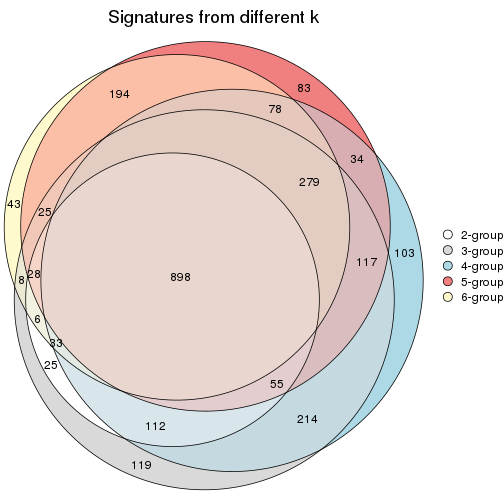

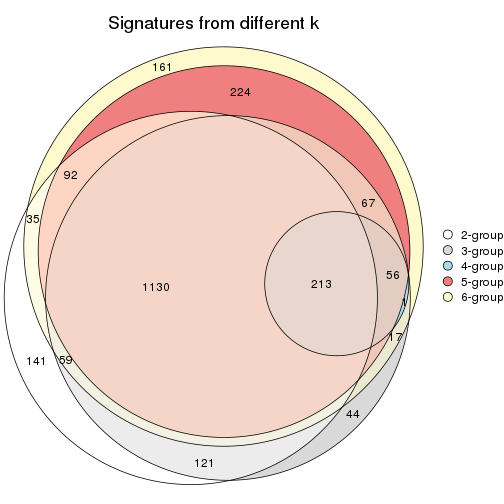

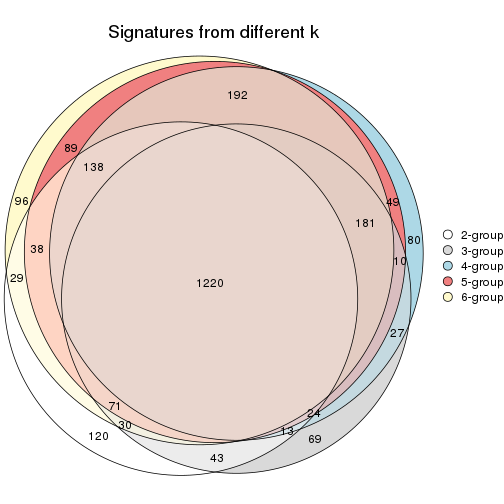

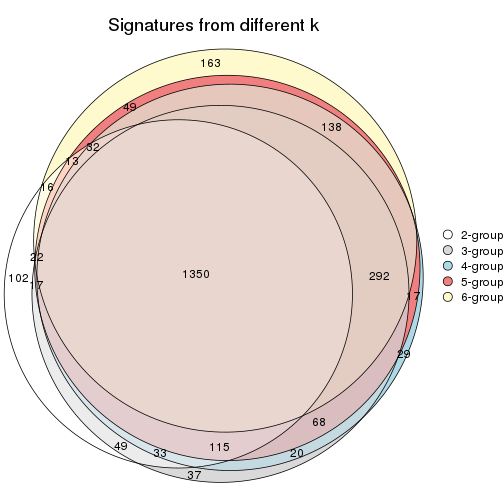

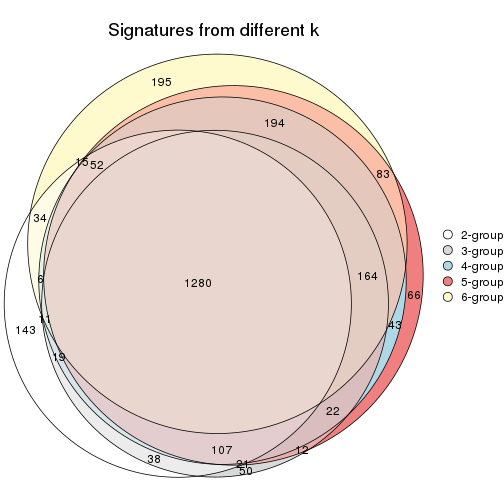

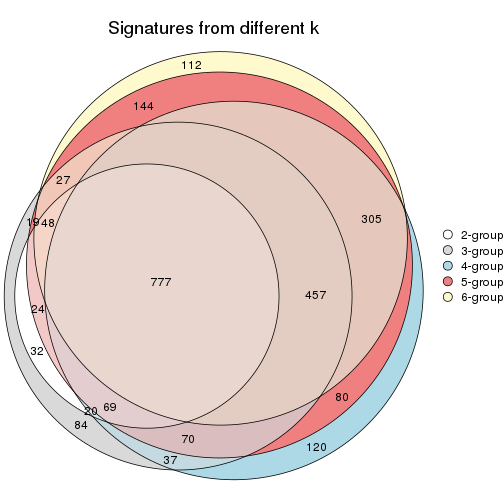

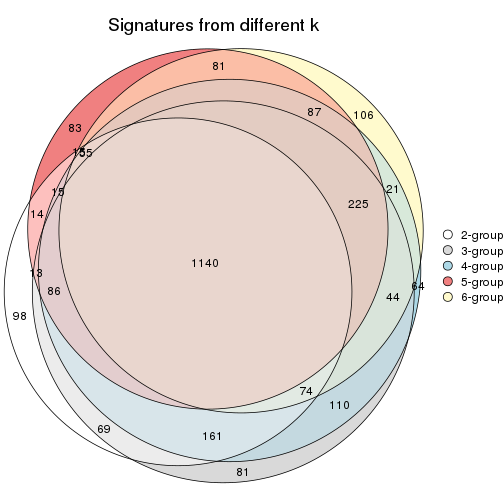

Overlap of top rows from different top-row methods:

top_rows_overlap(res_list, top_n = 412, method = "euler")

top_rows_overlap(res_list, top_n = 824, method = "euler")

top_rows_overlap(res_list, top_n = 1235, method = "euler")

top_rows_overlap(res_list, top_n = 1646, method = "euler")

top_rows_overlap(res_list, top_n = 2058, method = "euler")
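What "overlap of top rows" means can be shown on simulated data with two of the top-value methods named in this report (SD and MAD). The matrix `toy`, the inflated rows and `top_n = 10` are assumptions for the sketch; it does not reproduce the Euler diagrams drawn by `top_rows_overlap()`.

```r
set.seed(42)
toy <- matrix(rnorm(100 * 10), nrow = 100,
              dimnames = list(paste0("row", 1:100), NULL))
# Give the first 10 rows a much larger spread so they tend to rank on top.
toy[1:10, ] <- toy[1:10, ] * 5

# Score every row with two top-value methods.
score_sd  <- apply(toy, 1, sd)
score_mad <- apply(toy, 1, mad)

# Keep the names of the top_n highest-scoring rows for each method.
top_n   <- 10
top_sd  <- names(sort(score_sd,  decreasing = TRUE))[seq_len(top_n)]
top_mad <- names(sort(score_mad, decreasing = TRUE))[seq_len(top_n)]

# Rows selected by both methods.
shared <- intersect(top_sd, top_mad)
print(length(shared))
```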

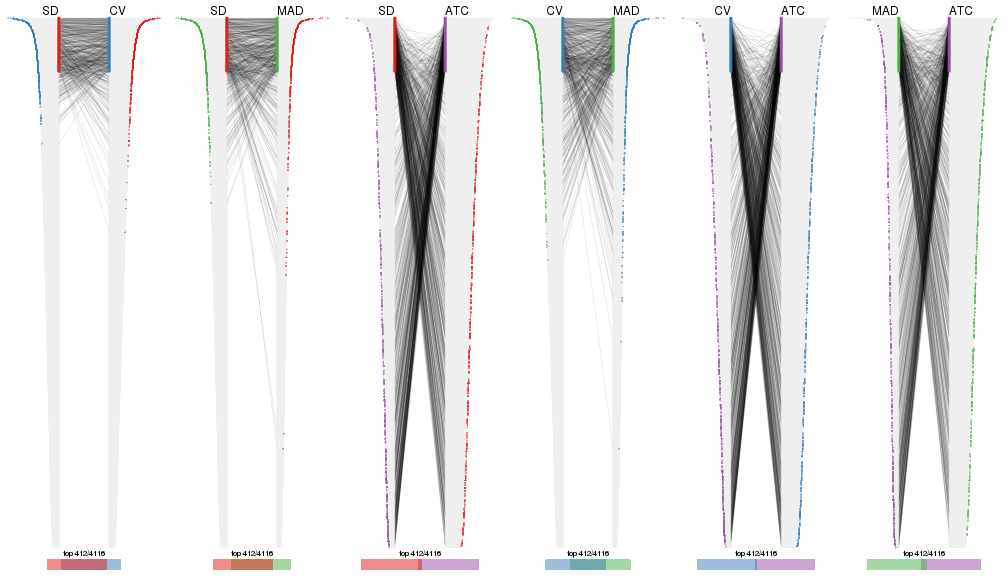

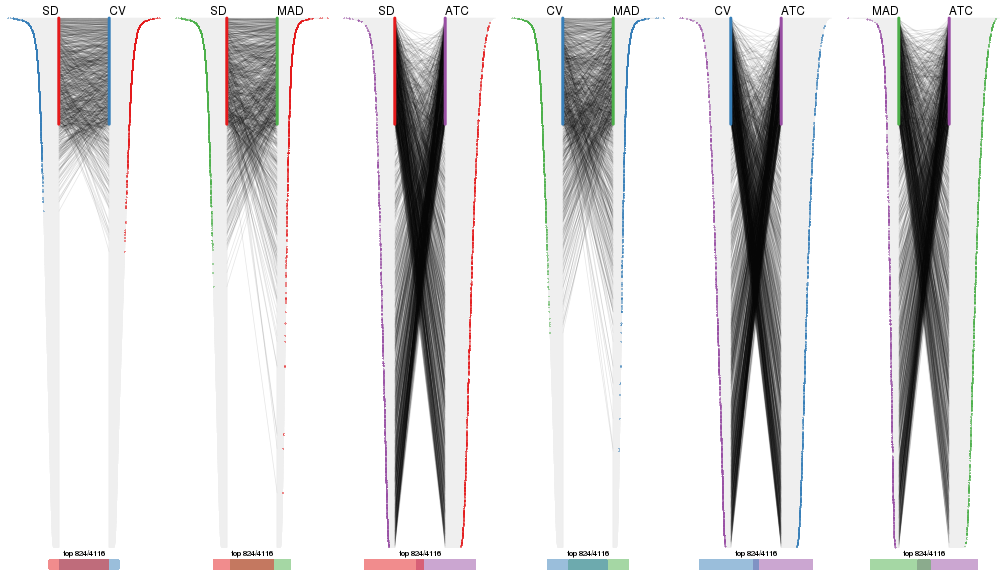

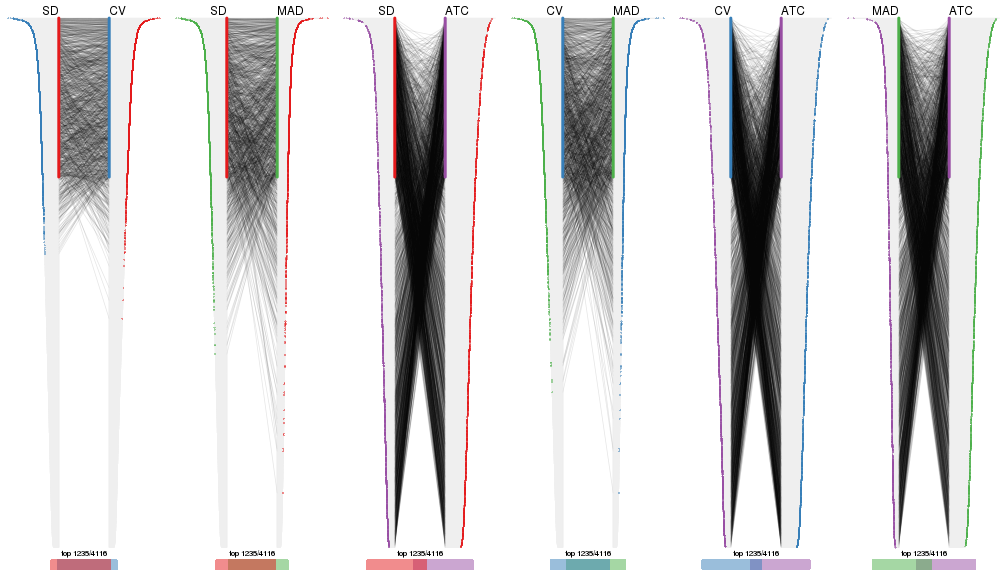

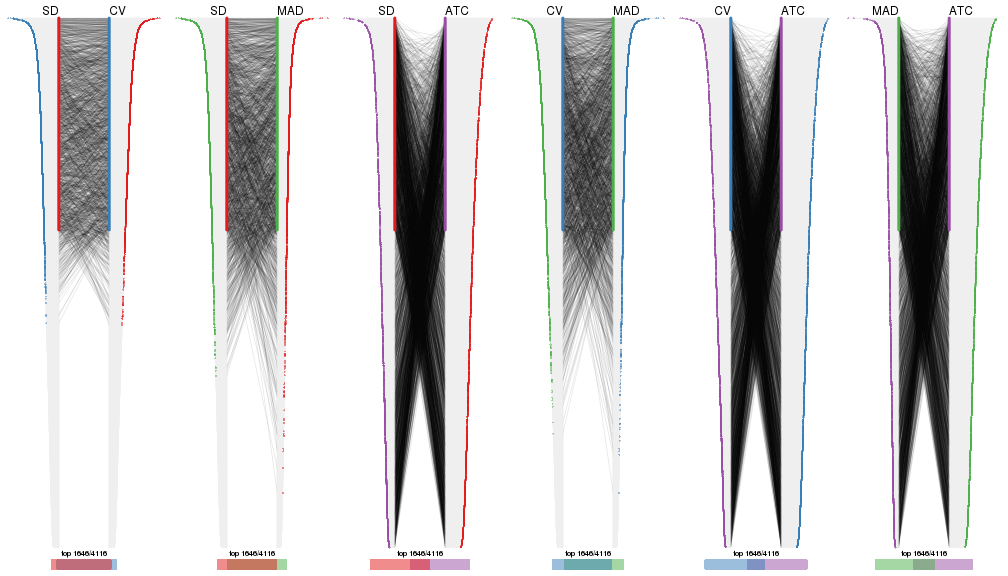

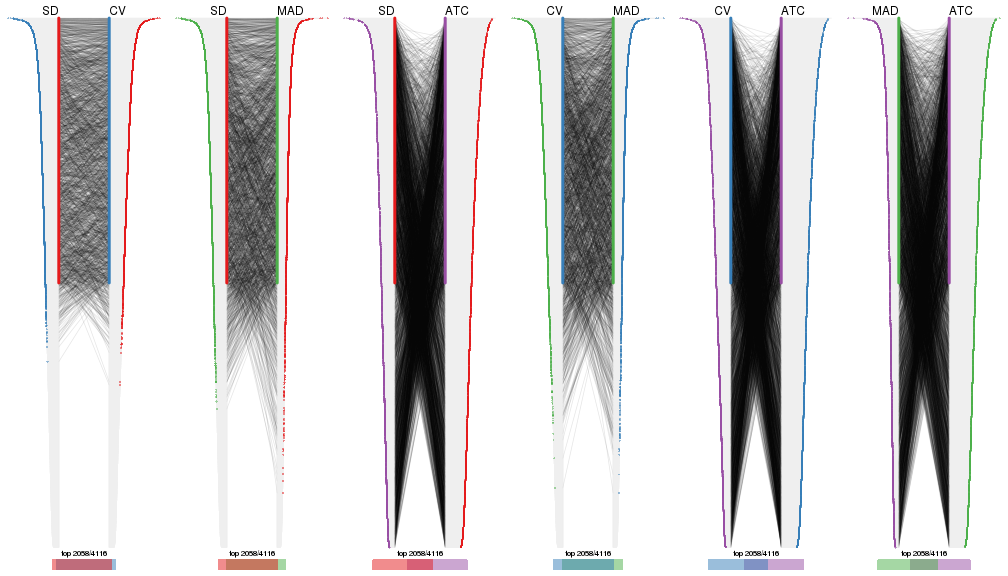

Also visualize the correspondence of rankings between different top-row methods:

top_rows_overlap(res_list, top_n = 412, method = "correspondance")

top_rows_overlap(res_list, top_n = 824, method = "correspondance")

top_rows_overlap(res_list, top_n = 1235, method = "correspondance")

top_rows_overlap(res_list, top_n = 1646, method = "correspondance")

top_rows_overlap(res_list, top_n = 2058, method = "correspondance")

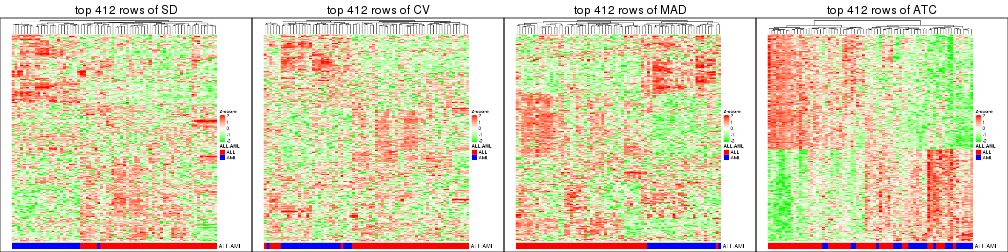

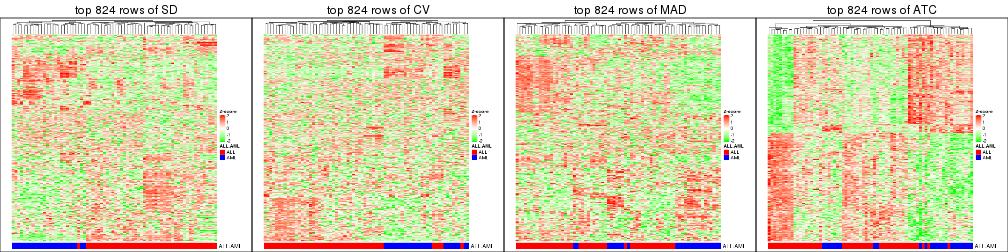

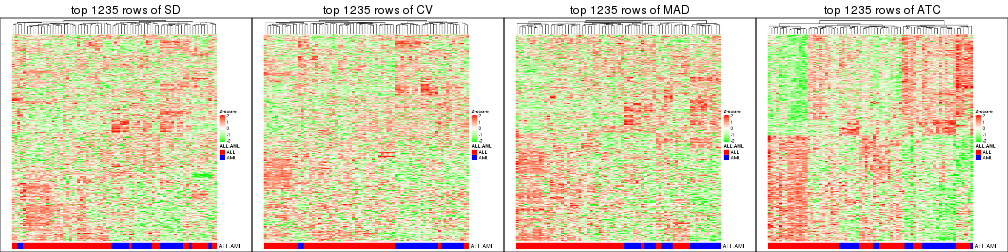

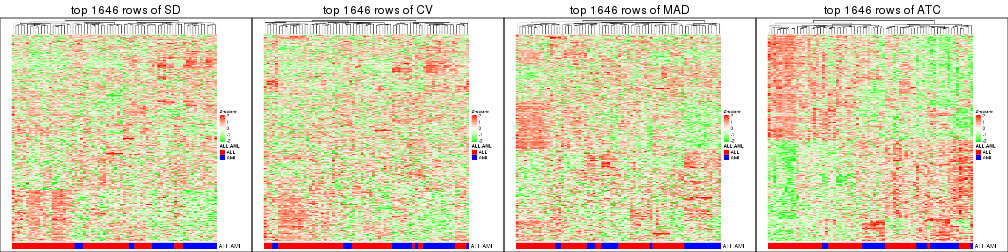

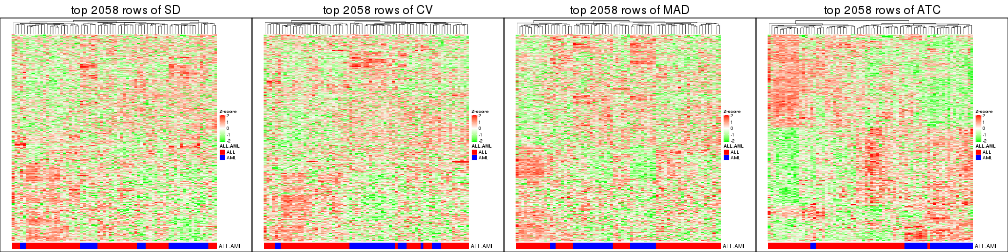

Heatmaps of the top rows:

top_rows_heatmap(res_list, top_n = 412)

top_rows_heatmap(res_list, top_n = 824)

top_rows_heatmap(res_list, top_n = 1235)

top_rows_heatmap(res_list, top_n = 1646)

top_rows_heatmap(res_list, top_n = 2058)

Test the correlation between subgroups and known annotations. If the known annotation is numeric, a one-way ANOVA test is applied; if it is discrete, a chi-squared contingency table test is applied.

test_to_known_factors(res_list, k = 2)

#> n ALL.AML(p) k

#> SD:NMF 66 1.35e-12 2

#> CV:NMF 63 7.82e-13 2

#> MAD:NMF 65 2.13e-12 2

#> ATC:NMF 69 5.41e-05 2

#> SD:skmeans 54 1.01e-11 2

#> CV:skmeans 55 3.77e-11 2

#> MAD:skmeans 67 2.39e-06 2

#> ATC:skmeans 71 5.23e-05 2

#> SD:mclust 70 2.94e-14 2

#> CV:mclust 70 2.94e-14 2

#> MAD:mclust 72 8.75e-14 2

#> ATC:mclust 62 1.26e-12 2

#> SD:kmeans 71 2.08e-14 2

#> CV:kmeans 66 2.15e-13 2

#> MAD:kmeans 65 3.43e-13 2

#> ATC:kmeans 72 1.50e-04 2

#> SD:pam 54 1.01e-11 2

#> CV:pam 65 1.04e-07 2

#> MAD:pam 63 2.89e-07 2

#> ATC:pam 67 1.65e-04 2

#> SD:hclust 70 4.70e-15 2

#> CV:hclust 49 1.25e-10 2

#> MAD:hclust 69 5.21e-14 2

#> ATC:hclust 66 4.16e-05 2

test_to_known_factors(res_list, k = 3)

#> n ALL.AML(p) k

#> SD:NMF 59 7.38e-12 3

#> CV:NMF 61 1.74e-11 3

#> MAD:NMF 67 8.26e-12 3

#> ATC:NMF 65 2.97e-08 3

#> SD:skmeans 65 4.40e-13 3

#> CV:skmeans 67 1.71e-13 3

#> MAD:skmeans 67 1.71e-13 3

#> ATC:skmeans 70 1.85e-12 3

#> SD:mclust 60 6.91e-13 3

#> CV:mclust 62 2.60e-13 3

#> MAD:mclust 61 4.15e-13 3

#> ATC:mclust 46 5.78e-10 3

#> SD:kmeans 66 2.72e-13 3

#> CV:kmeans 68 1.06e-13 3

#> MAD:kmeans 68 1.06e-13 3

#> ATC:kmeans 60 2.70e-11 3

#> SD:pam 58 1.94e-12 3

#> CV:pam 61 4.37e-13 3

#> MAD:pam 24 NA 3

#> ATC:pam 59 3.74e-06 3

#> SD:hclust 61 5.68e-14 3

#> CV:hclust 59 1.23e-12 3

#> MAD:hclust 53 1.00e-10 3

#> ATC:hclust 62 3.04e-03 3

test_to_known_factors(res_list, k = 4)

#> n ALL.AML(p) k

#> SD:NMF 38 5.34e-09 4

#> CV:NMF 48 3.78e-11 4

#> MAD:NMF 48 3.78e-11 4

#> ATC:NMF 62 1.00e-09 4

#> SD:skmeans 64 6.16e-13 4

#> CV:skmeans 60 4.20e-12 4

#> MAD:skmeans 60 4.20e-12 4

#> ATC:skmeans 63 8.36e-12 4

#> SD:mclust 35 2.99e-07 4

#> CV:mclust 62 1.78e-12 4

#> MAD:mclust 63 1.06e-12 4

#> ATC:mclust 46 6.40e-09 4

#> SD:kmeans 63 9.95e-13 4

#> CV:kmeans 57 1.78e-11 4

#> MAD:kmeans 60 4.20e-12 4

#> ATC:kmeans 56 7.62e-09 4

#> SD:pam 63 6.74e-12 4

#> CV:pam 64 4.11e-12 4

#> MAD:pam 58 6.37e-11 4

#> ATC:pam 57 5.47e-07 4

#> SD:hclust 51 3.35e-10 4

#> CV:hclust 54 1.06e-10 4

#> MAD:hclust 48 7.21e-09 4

#> ATC:hclust 30 8.73e-01 4

test_to_known_factors(res_list, k = 5)

#> n ALL.AML(p) k

#> SD:NMF 49 9.13e-09 5

#> CV:NMF 47 4.06e-09 5

#> MAD:NMF 47 2.27e-08 5

#> ATC:NMF 48 4.17e-08 5

#> SD:skmeans 51 4.27e-09 5

#> CV:skmeans 45 9.25e-10 5

#> MAD:skmeans 44 1.51e-09 5

#> ATC:skmeans 60 2.60e-10 5

#> SD:mclust 64 2.68e-12 5

#> CV:mclust 66 9.88e-13 5

#> MAD:mclust 63 4.79e-12 5

#> ATC:mclust 51 1.68e-09 5

#> SD:kmeans 50 7.99e-11 5

#> CV:kmeans 57 7.59e-11 5

#> MAD:kmeans 58 5.31e-11 5

#> ATC:kmeans 56 9.68e-08 5

#> SD:pam 45 1.81e-08 5

#> CV:pam 50 3.20e-09 5

#> MAD:pam 37 4.82e-07 5

#> ATC:pam 46 1.05e-05 5

#> SD:hclust 55 2.43e-10 5

#> CV:hclust 35 4.65e-07 5

#> MAD:hclust 51 1.57e-09 5

#> ATC:hclust 52 8.51e-06 5

test_to_known_factors(res_list, k = 6)

#> n ALL.AML(p) k

#> SD:NMF 33 3.22e-07 6

#> CV:NMF 37 1.80e-07 6

#> MAD:NMF 38 2.02e-07 6

#> ATC:NMF 44 1.87e-07 6

#> SD:skmeans 48 2.14e-08 6

#> CV:skmeans 47 9.43e-09 6

#> MAD:skmeans 56 5.18e-10 6

#> ATC:skmeans 51 1.22e-07 6

#> SD:mclust 58 1.74e-10 6

#> CV:mclust 53 1.78e-09 6

#> MAD:mclust 47 9.40e-09 6

#> ATC:mclust 49 2.22e-09 6

#> SD:kmeans 56 5.63e-10 6

#> CV:kmeans 61 5.20e-11 6

#> MAD:kmeans 60 9.09e-11 6

#> ATC:kmeans 62 8.55e-08 6

#> SD:pam 31 3.96e-01 6

#> CV:pam 38 2.03e-07 6

#> MAD:pam 49 2.89e-08 6

#> ATC:pam 47 1.08e-06 6

#> SD:hclust 48 9.44e-10 6

#> CV:hclust 30 1.38e-06 6

#> MAD:hclust 52 4.99e-09 6

#> ATC:hclust 52 1.57e-06 6
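The two kinds of test described for `test_to_known_factors()` can be tried directly with base R. The sketch below uses invented data: `subgroup`, `type` and `age` are hypothetical variables, and the exact options cola passes to these tests are not shown in this report.

```r
# Assumed toy data: predicted subgroup and two known annotations.
subgroup <- factor(c(1, 1, 1, 1, 2, 2, 2, 2, 1, 2))
type     <- factor(c("A", "A", "A", "A", "B", "B", "B", "B", "B", "A"))  # discrete
age      <- c(30, 35, 28, 33, 60, 58, 65, 62, 31, 59)                    # numeric

# Discrete annotation: chi-squared test on the contingency table.
# (Warnings about small expected counts are silenced for this tiny example.)
p_discrete <- suppressWarnings(chisq.test(table(subgroup, type))$p.value)

# Numeric annotation: classical one-way ANOVA across subgroups.
p_numeric <- oneway.test(age ~ subgroup, var.equal = TRUE)$p.value

print(c(discrete = p_discrete, numeric = p_numeric))
```

A small p-value suggests that the subgroups are associated with the annotation, as is the case for the `ALL.AML(p)` column in most rows above.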

The object with results only for a single top-value method and a single partition method can be extracted as:

res = res_list["SD", "hclust"]

# you can also extract it by

# res = res_list["SD:hclust"]

A summary of res and all the functions that can be applied to it:

res

#> A 'ConsensusPartition' object with k = 2, 3, 4, 5, 6.

#> On a matrix with 4116 rows and 72 columns.

#> Top rows (412, 824, 1235, 1646, 2058) are extracted by 'SD' method.

#> Subgroups are detected by 'hclust' method.

#> Performed in total 1250 partitions by row resampling.

#> Best k for subgroups seems to be 2.

#>

#> Following methods can be applied to this 'ConsensusPartition' object:

#> [1] "cola_report" "collect_classes" "collect_plots"

#> [4] "collect_stats" "colnames" "compare_signatures"

#> [7] "consensus_heatmap" "dimension_reduction" "functional_enrichment"

#> [10] "get_anno_col" "get_anno" "get_classes"

#> [13] "get_consensus" "get_matrix" "get_membership"

#> [16] "get_param" "get_signatures" "get_stats"

#> [19] "is_best_k" "is_stable_k" "membership_heatmap"

#> [22] "ncol" "nrow" "plot_ecdf"

#> [25] "rownames" "select_partition_number" "show"

#> [28] "suggest_best_k" "test_to_known_factors"

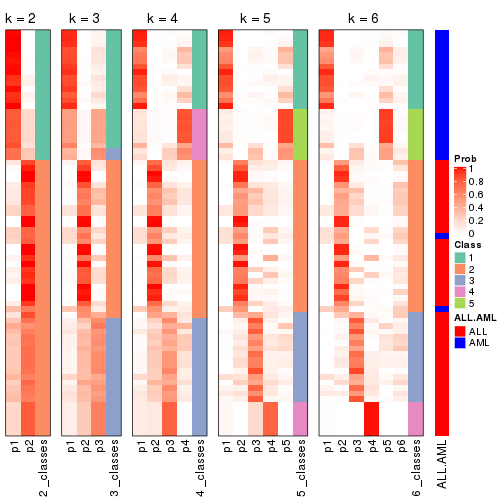

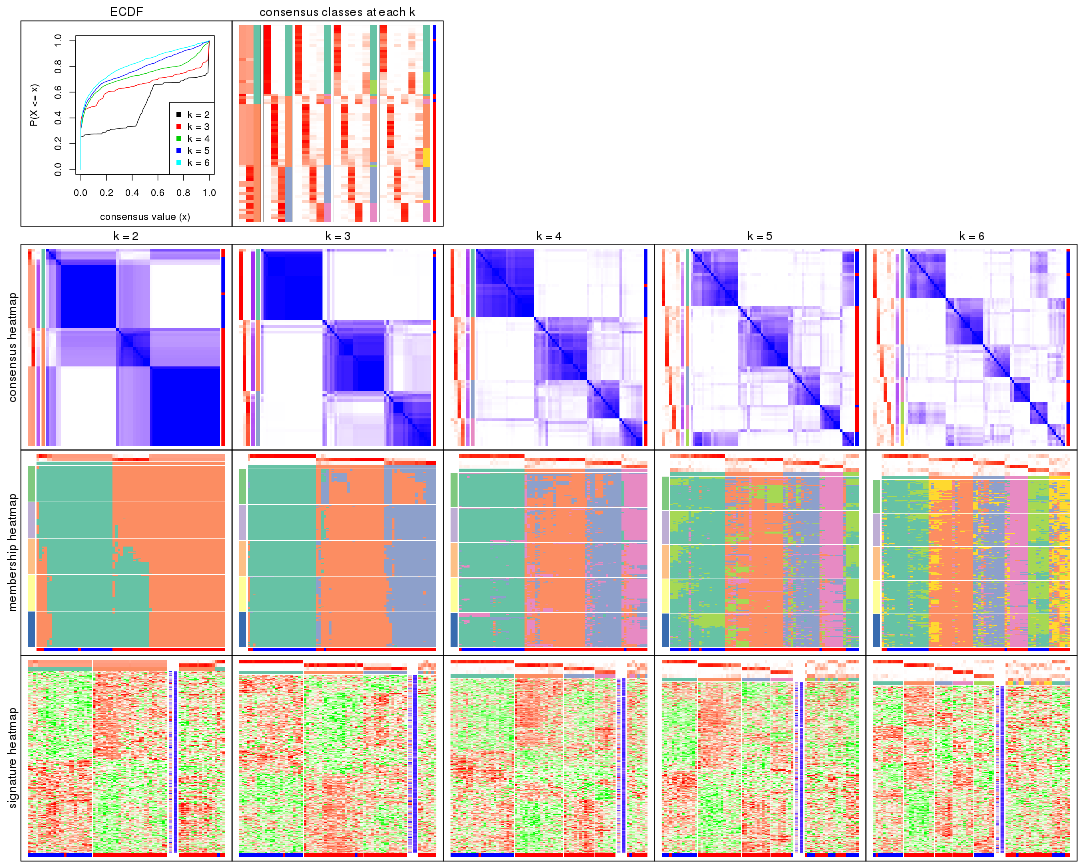

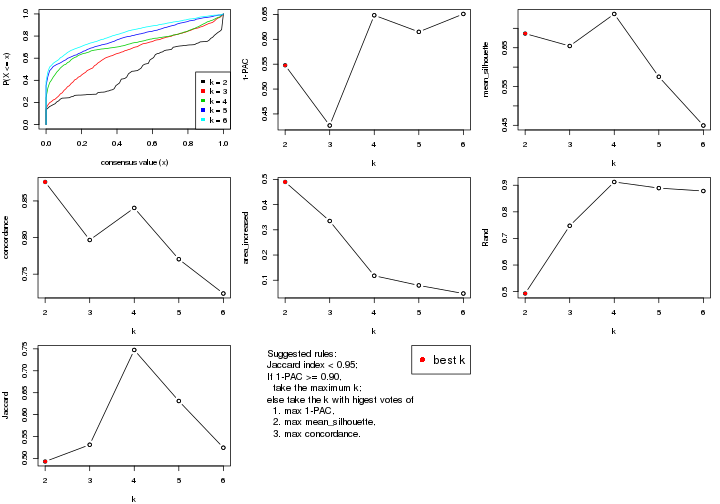

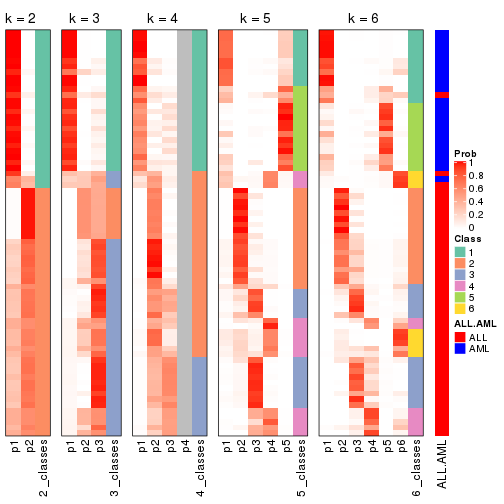

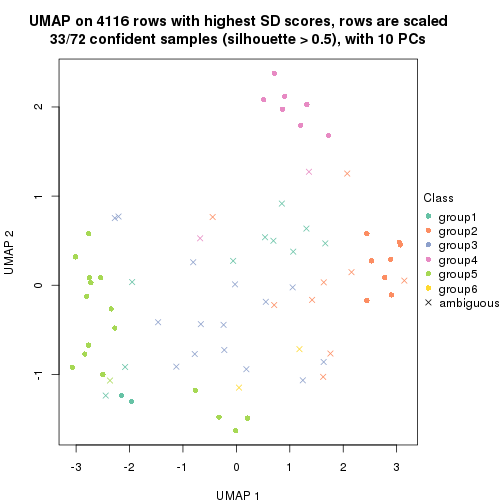

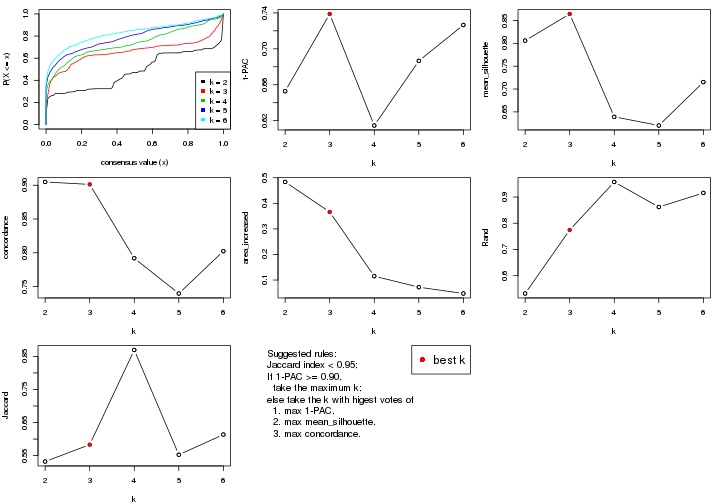

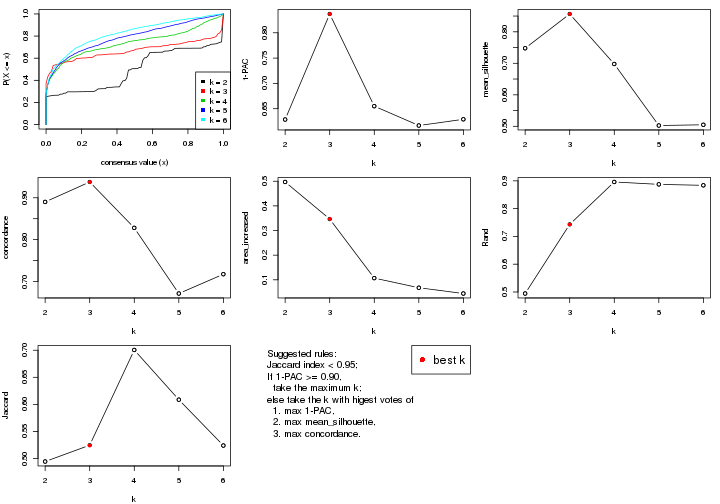

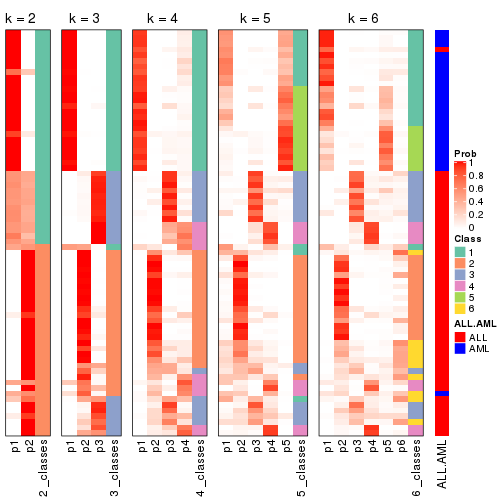

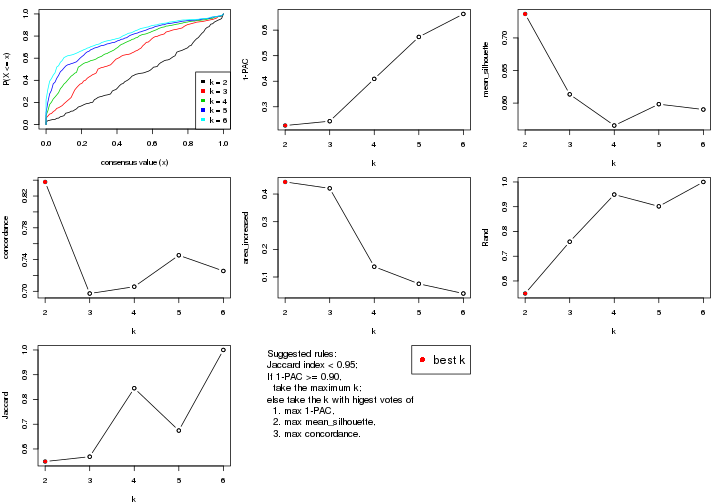

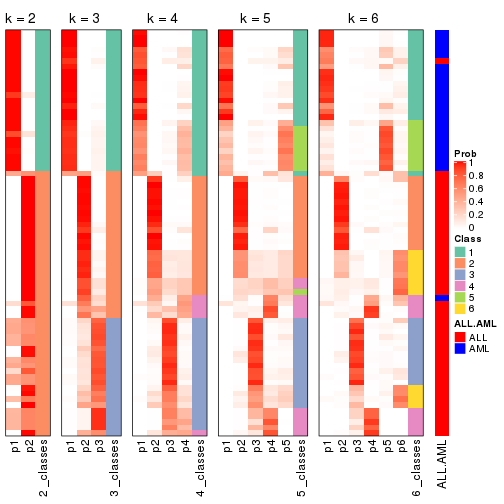

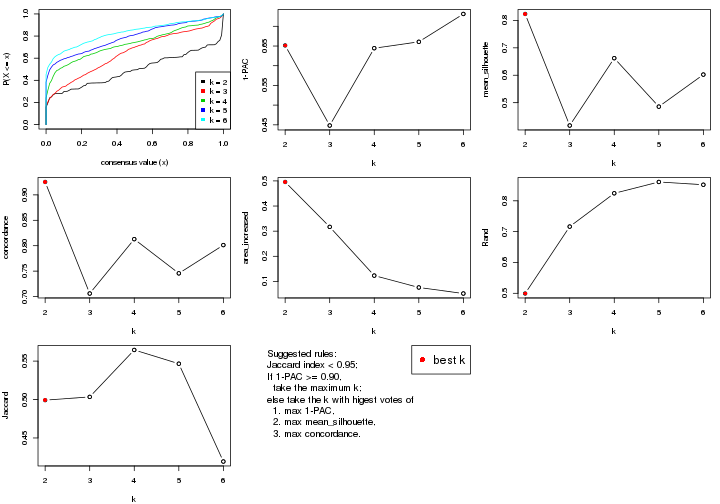

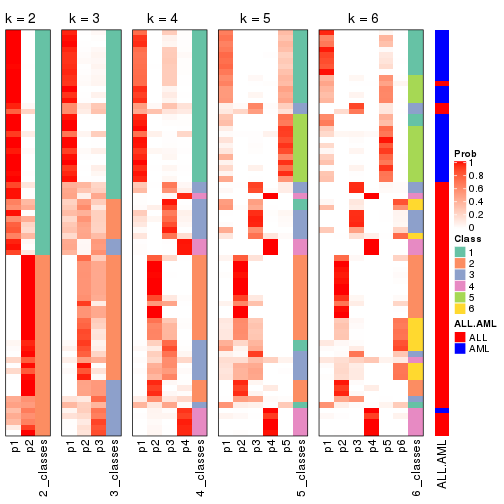

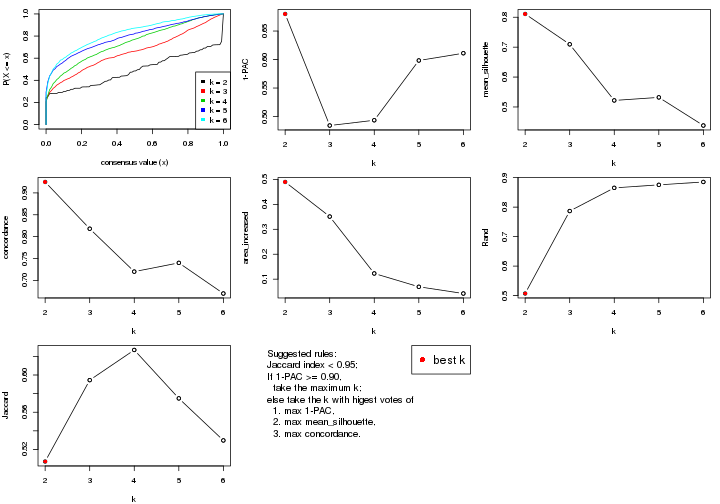

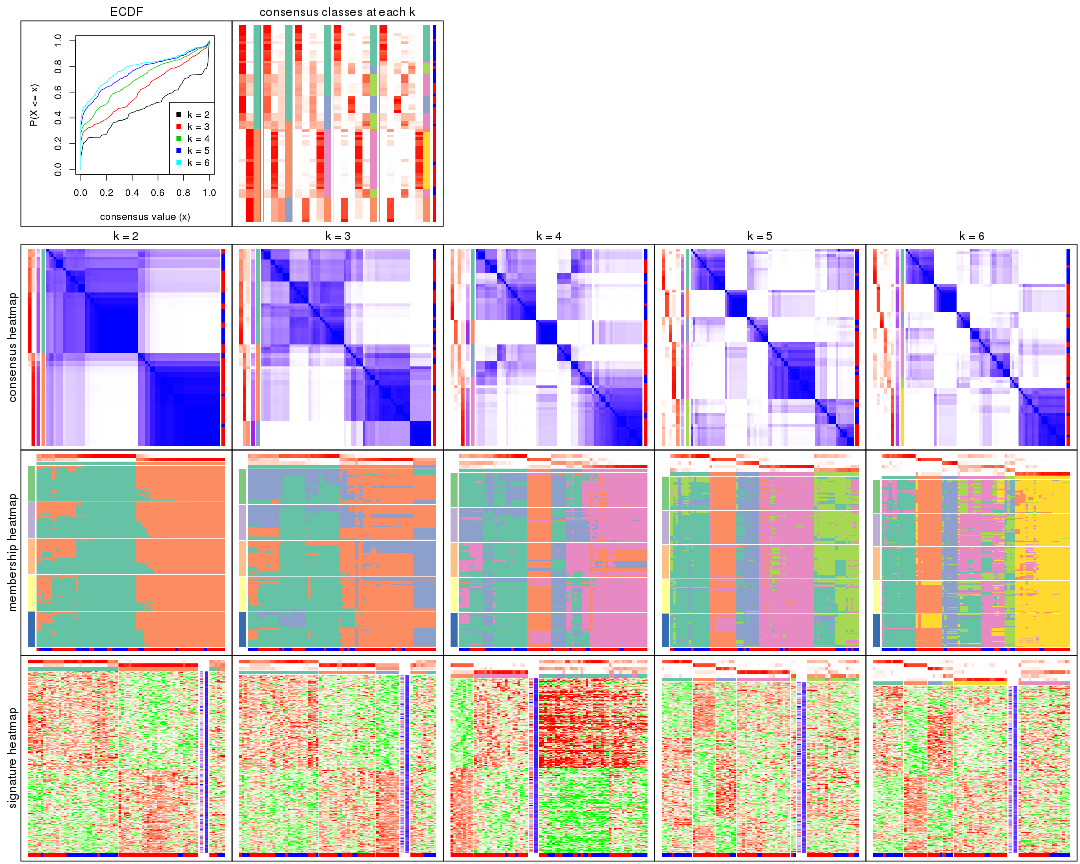

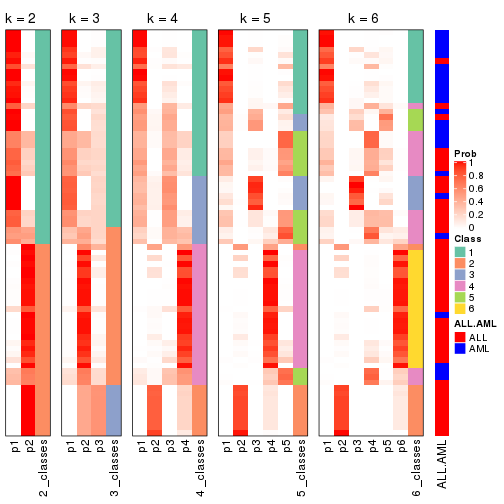

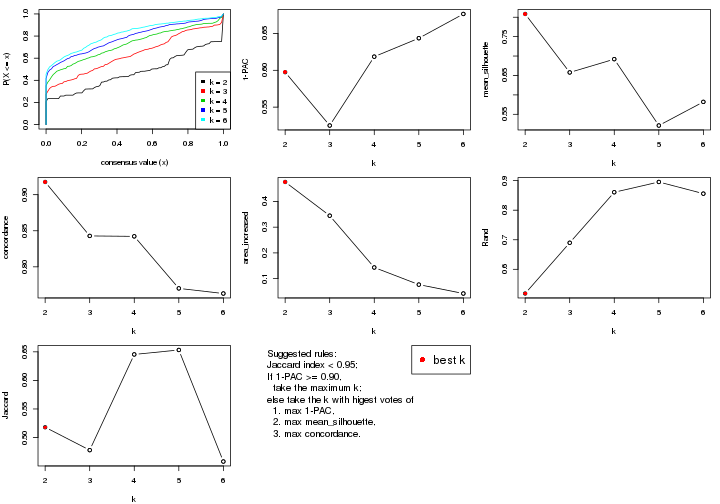

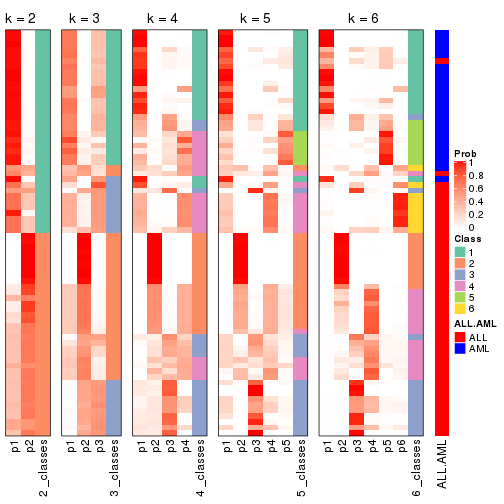

The collect_plots() function collects all the plots made from res for all k (number of partitions) into a single page, providing an easy and fast comparison between different k.

collect_plots(res)

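The plots are:

- The first row: a plot of the ECDF (empirical cumulative distribution function) curves of the consensus matrix for each k and the heatmap of predicted classes for each k.
- The second row: heatmaps of the consensus matrix for each k.
- The third row: heatmaps of the membership matrix for each k.
- The fourth row: heatmaps of the signatures for each k.

All the plots in panels can be made by individual functions and they are plotted later in this section.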

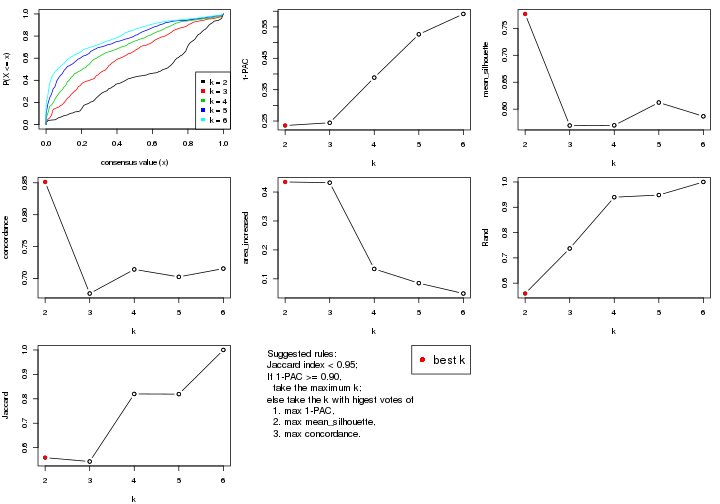

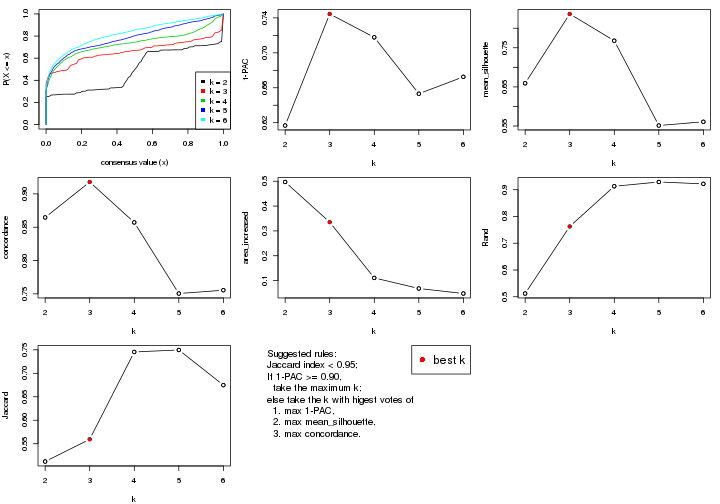

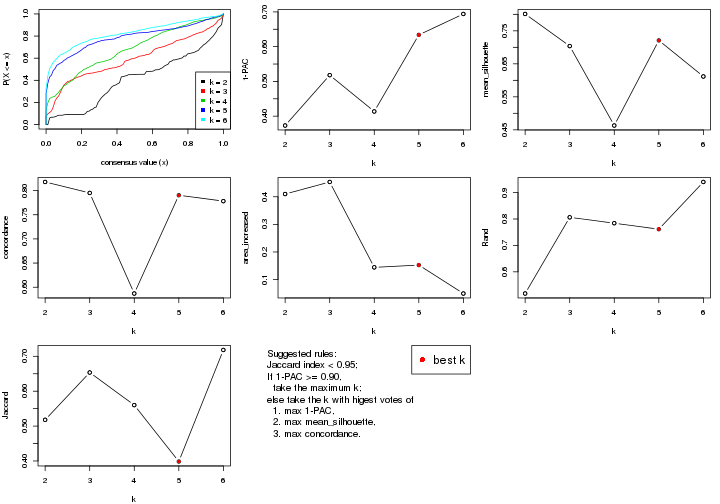

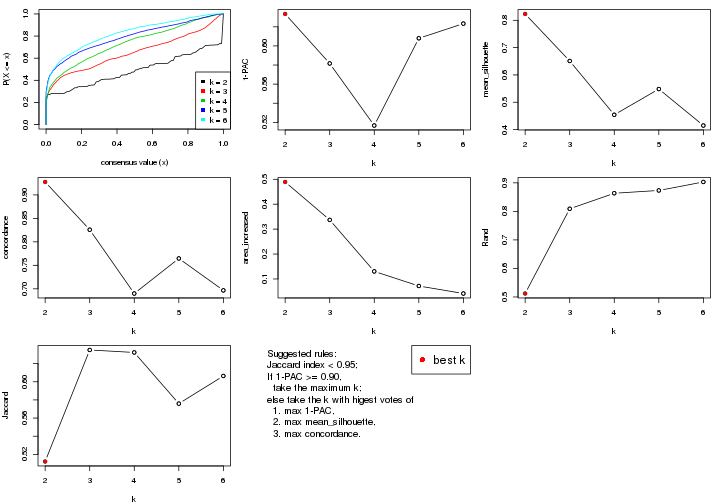

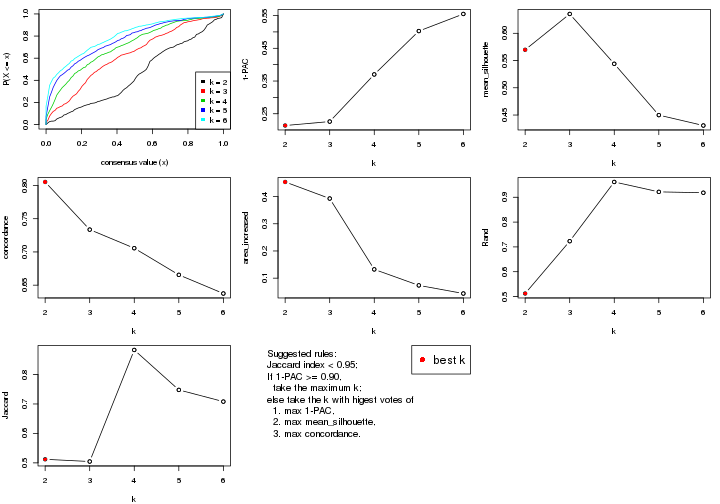

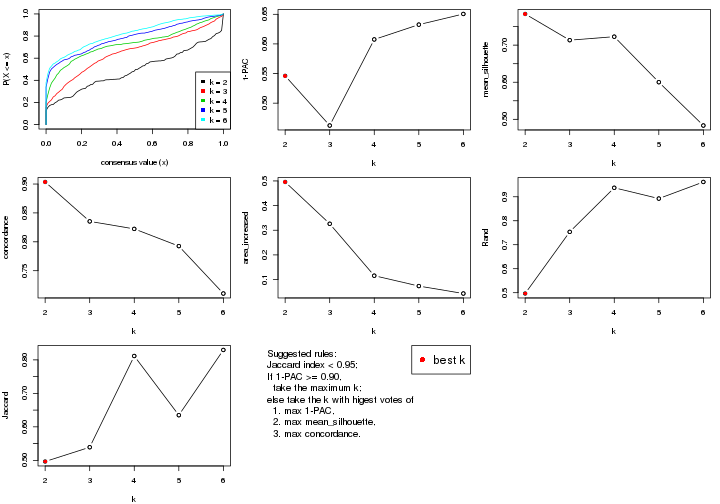

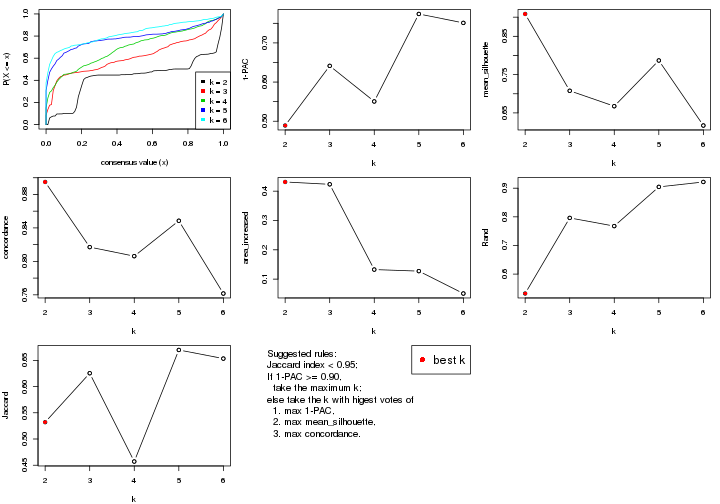

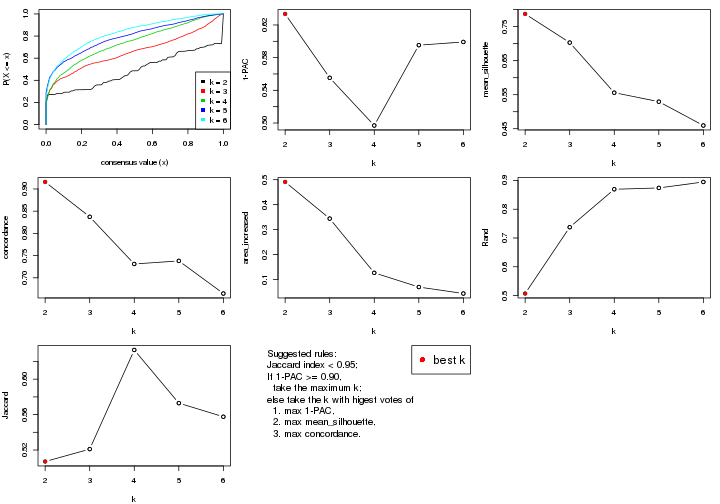

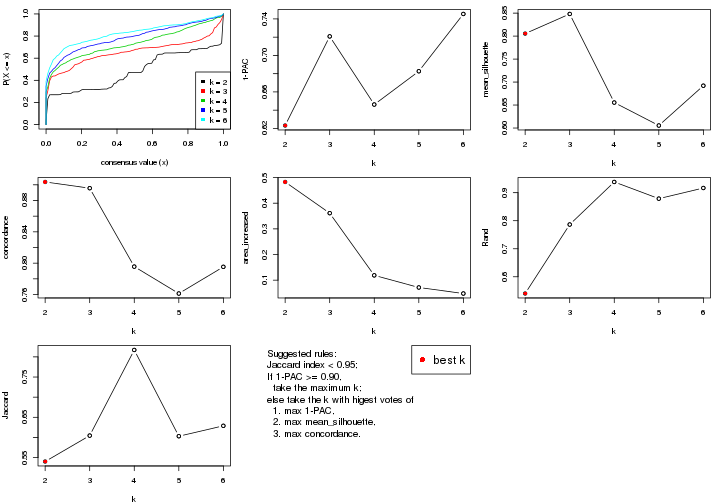

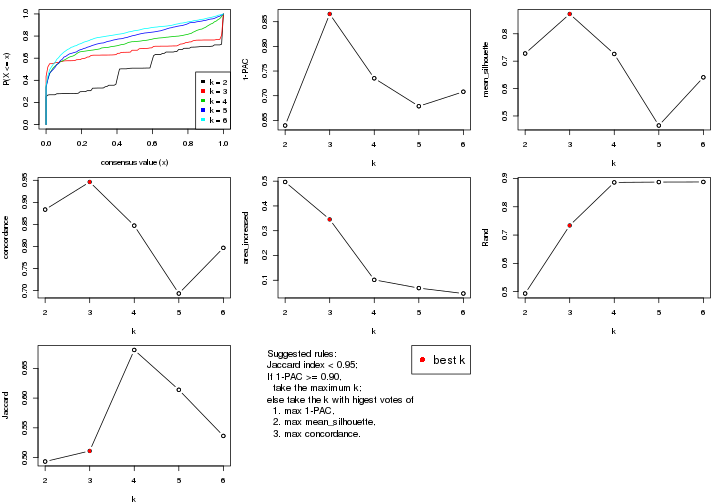

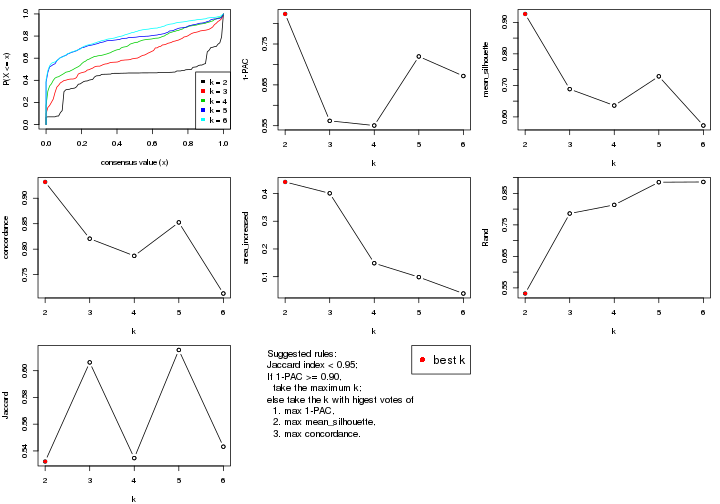

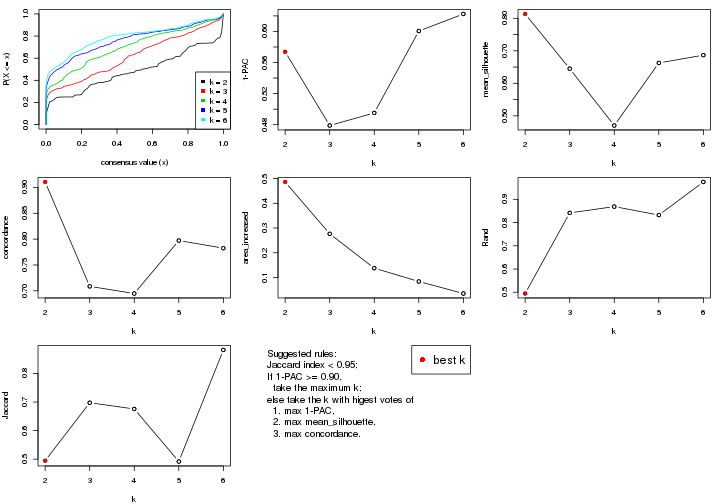

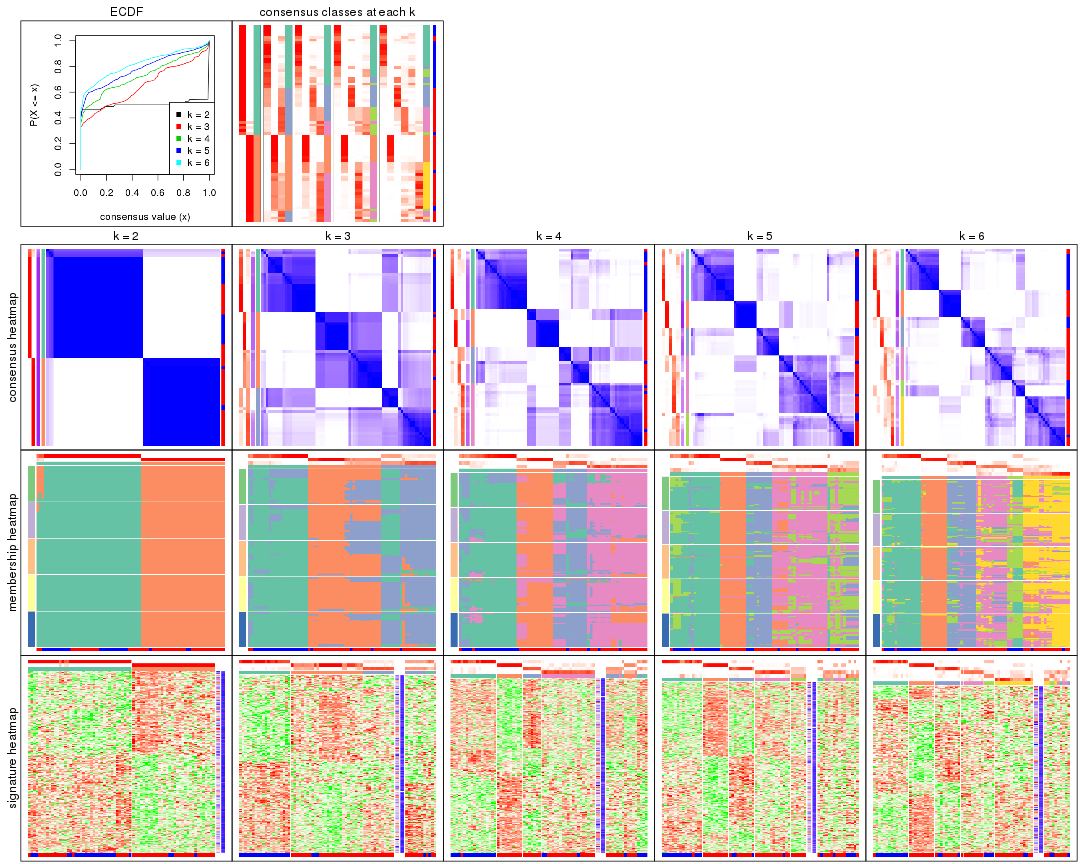

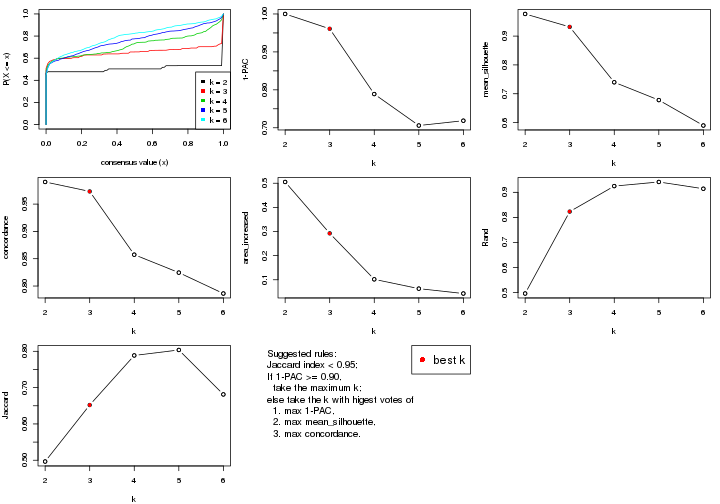

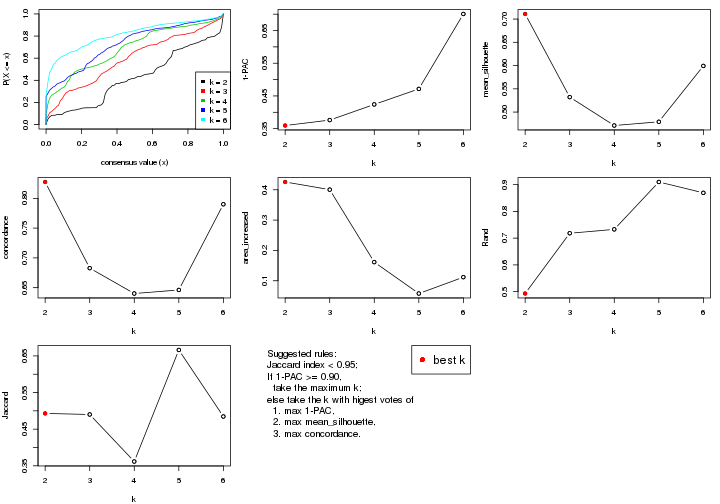

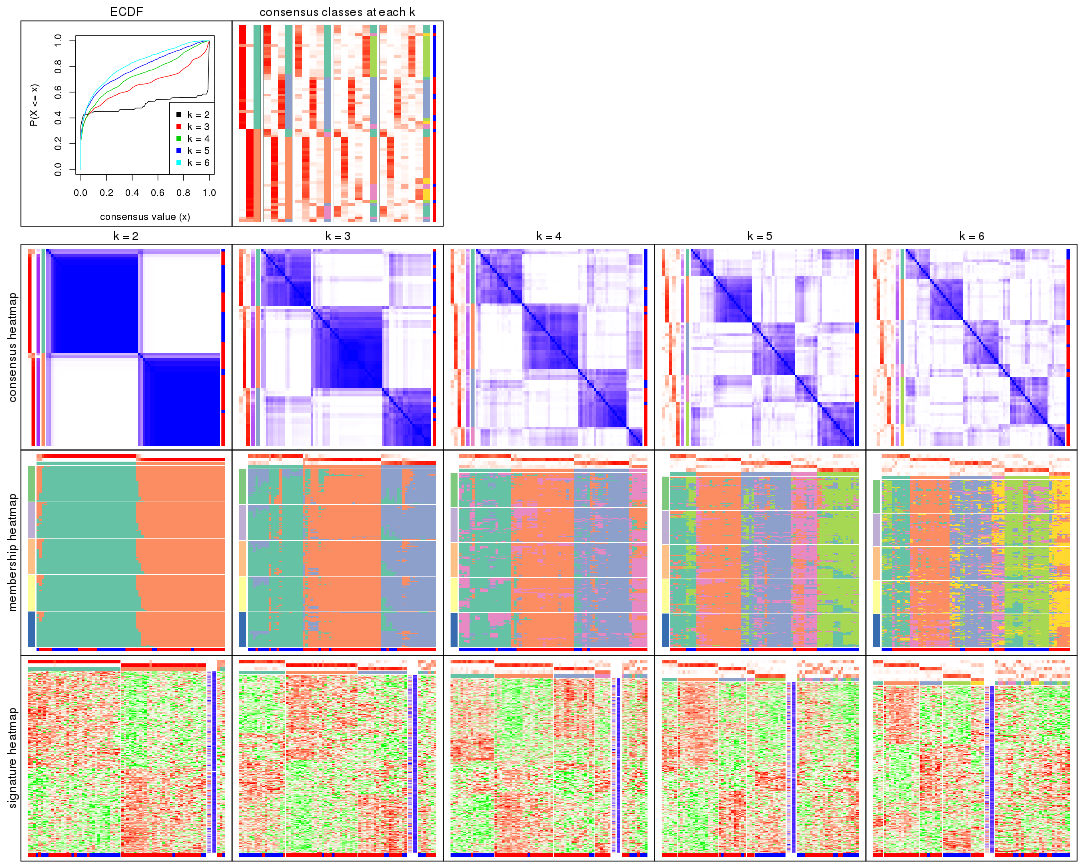

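select_partition_number() produces several plots showing different statistics for choosing an “optimized” k. The statistics are:

- ECDF curves of the consensus matrix for each k;
- 1-PAC, where the PAC score measures the proportion of ambiguous subgrouping;
- mean silhouette score;
- concordance;
- area increased: denoting \(A_k\) as the area under the ECDF curve for the current k, the area increased is defined as \(A_k - A_{k-1}\);
- Rand index;
- Jaccard index.

The detailed explanations of these statistics can be found in the cola vignette.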

Generally speaking, a lower PAC score, a higher mean silhouette score, or a higher concordance corresponds to a better partition. The Rand index and the Jaccard index measure how similar the current partition is to the partition with k-1. If the two are too similar, k is not accepted as better than k-1.

select_partition_number(res)

The numeric values for all these statistics can be obtained by get_stats().

get_stats(res)

#> k 1-PAC mean_silhouette concordance area_increased Rand Jaccard

#> 2 2 0.236 0.777 0.851 0.4348 0.559 0.559

#> 3 3 0.245 0.570 0.676 0.4324 0.737 0.543

#> 4 4 0.388 0.570 0.714 0.1342 0.940 0.820

#> 5 5 0.526 0.612 0.703 0.0851 0.948 0.819

#> 6 6 0.591 0.587 0.716 0.0493 1.000 1.000
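As a rough illustration of what the 1-PAC column measures, the sketch below computes a PAC-style score from a toy consensus matrix in base R. The helper name pac_score, the toy matrix, and the 0.1/0.9 cut-offs are assumptions made for this example; cola's own implementation may differ in detail.

```r
# Toy consensus matrix for 4 samples (hypothetical values): entry [i, j] is
# the fraction of partitions in which samples i and j share a subgroup.
consensus <- matrix(c(
  1.00, 0.95, 0.50, 0.05,
  0.95, 1.00, 0.40, 0.02,
  0.50, 0.40, 1.00, 0.08,
  0.05, 0.02, 0.08, 1.00
), nrow = 4, byrow = TRUE)

# Hypothetical helper: proportion of sample pairs whose consensus value is
# "ambiguous", i.e. strictly between the lower and upper cut-offs.
# The 0.1 / 0.9 cut-offs are an assumption, not taken from this report.
pac_score <- function(cm, lower = 0.1, upper = 0.9) {
  pair_values <- cm[upper.tri(cm)]  # each pair once, diagonal excluded
  mean(pair_values > lower & pair_values < upper)
}

pac <- pac_score(consensus)
one_minus_pac <- 1 - pac  # higher means a less ambiguous partition
print(c(PAC = pac, `1-PAC` = one_minus_pac))
```

In this toy matrix only two of the six sample pairs (0.50 and 0.40) fall in the ambiguous range, so a stable partition would push 1-PAC towards 1.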

suggest_best_k() suggests the best \(k\) based on these statistics. The rules are as follows:

suggest_best_k(res)

#> [1] 2

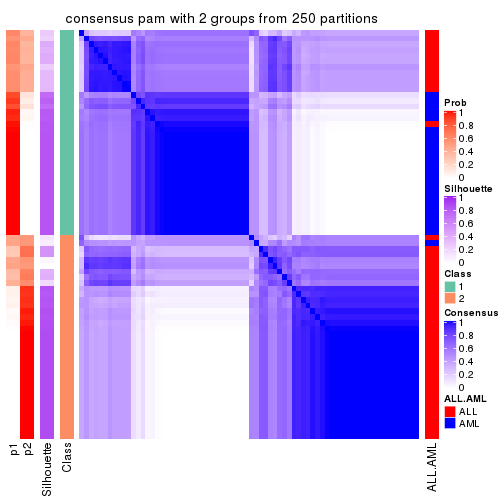

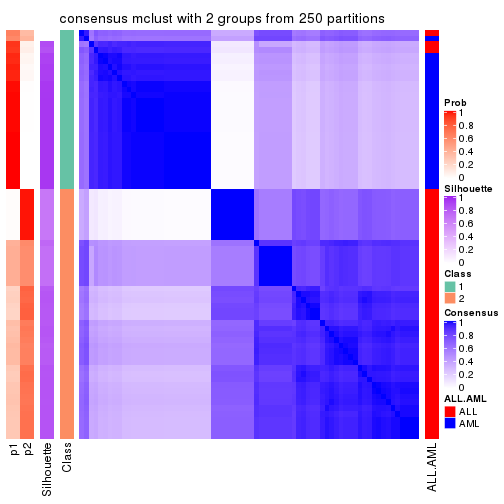

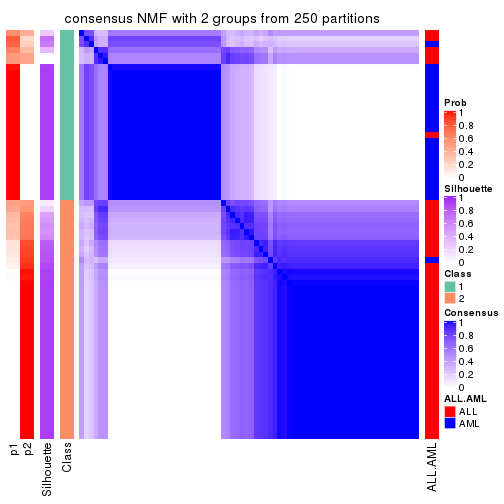

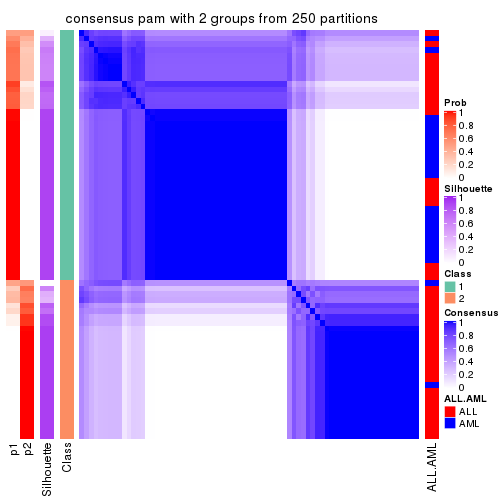

The following table shows the partitions. The membership matrix (columns named p*) is inferred by the clue::cl_consensus() function with the SE method. Basically, each value in the membership matrix represents the probability of belonging to a certain group. The final class label for an item is the group to which it belongs with the highest probability.

In the get_classes() function, the entropy is calculated from the membership matrix and the silhouette score is calculated from the consensus matrix.

cbind(get_classes(res, k = 2), get_membership(res, k = 2))

#> class entropy silhouette p1 p2

#> sample_39 2 0.8763 0.742 0.296 0.704

#> sample_40 2 0.9866 0.489 0.432 0.568

#> sample_42 2 0.5519 0.808 0.128 0.872

#> sample_47 2 0.1843 0.804 0.028 0.972

#> sample_48 2 0.0000 0.793 0.000 1.000

#> sample_49 2 0.9358 0.682 0.352 0.648

#> sample_41 2 0.0000 0.793 0.000 1.000

#> sample_43 2 0.4562 0.810 0.096 0.904

#> sample_44 2 0.5294 0.821 0.120 0.880

#> sample_45 2 0.5294 0.821 0.120 0.880

#> sample_46 2 0.5519 0.820 0.128 0.872

#> sample_70 2 0.6048 0.818 0.148 0.852

#> sample_71 2 0.7602 0.759 0.220 0.780

#> sample_72 2 0.7602 0.759 0.220 0.780

#> sample_68 2 0.0000 0.793 0.000 1.000

#> sample_69 2 0.0000 0.793 0.000 1.000

#> sample_67 2 0.7883 0.702 0.236 0.764

#> sample_55 2 0.8713 0.744 0.292 0.708

#> sample_56 2 0.8861 0.737 0.304 0.696

#> sample_59 2 0.8443 0.766 0.272 0.728

#> sample_52 1 0.7139 0.781 0.804 0.196

#> sample_53 1 0.0376 0.866 0.996 0.004

#> sample_51 1 0.0000 0.865 1.000 0.000

#> sample_50 1 0.0000 0.865 1.000 0.000

#> sample_54 1 0.7219 0.774 0.800 0.200

#> sample_57 1 0.6973 0.789 0.812 0.188

#> sample_58 1 0.0000 0.865 1.000 0.000

#> sample_60 1 0.7219 0.774 0.800 0.200

#> sample_61 1 0.0672 0.868 0.992 0.008

#> sample_65 1 0.1184 0.869 0.984 0.016

#> sample_66 2 0.8016 0.607 0.244 0.756

#> sample_63 1 0.7139 0.781 0.804 0.196

#> sample_64 1 0.8763 0.560 0.704 0.296

#> sample_62 1 0.7139 0.781 0.804 0.196

#> sample_1 2 0.8555 0.753 0.280 0.720

#> sample_2 2 0.7453 0.727 0.212 0.788

#> sample_3 2 0.7299 0.801 0.204 0.796

#> sample_4 2 0.8499 0.757 0.276 0.724

#> sample_5 2 0.0000 0.793 0.000 1.000

#> sample_6 2 0.7299 0.801 0.204 0.796

#> sample_7 2 0.8555 0.754 0.280 0.720

#> sample_8 2 0.8661 0.750 0.288 0.712

#> sample_9 2 0.7299 0.801 0.204 0.796

#> sample_10 2 0.7299 0.801 0.204 0.796

#> sample_11 2 0.7299 0.801 0.204 0.796

#> sample_12 2 0.9522 0.640 0.372 0.628

#> sample_13 2 0.0000 0.793 0.000 1.000

#> sample_14 2 0.3274 0.803 0.060 0.940

#> sample_15 2 0.0000 0.793 0.000 1.000

#> sample_16 2 0.4161 0.819 0.084 0.916

#> sample_17 2 0.2948 0.800 0.052 0.948

#> sample_18 2 0.8081 0.791 0.248 0.752

#> sample_19 2 0.4161 0.819 0.084 0.916

#> sample_20 2 0.0000 0.793 0.000 1.000

#> sample_21 2 0.0000 0.793 0.000 1.000

#> sample_22 2 0.8861 0.737 0.304 0.696

#> sample_23 2 0.7299 0.801 0.204 0.796

#> sample_24 2 0.0000 0.793 0.000 1.000

#> sample_25 2 0.9044 0.725 0.320 0.680

#> sample_26 2 0.4298 0.820 0.088 0.912

#> sample_27 2 0.9358 0.682 0.352 0.648

#> sample_34 1 0.6247 0.814 0.844 0.156

#> sample_35 1 0.8555 0.606 0.720 0.280

#> sample_36 1 0.0672 0.867 0.992 0.008

#> sample_37 1 0.0376 0.867 0.996 0.004

#> sample_38 1 0.3584 0.853 0.932 0.068

#> sample_28 1 0.4022 0.850 0.920 0.080

#> sample_29 2 0.9248 0.365 0.340 0.660

#> sample_30 1 0.0672 0.867 0.992 0.008

#> sample_31 1 0.3584 0.863 0.932 0.068

#> sample_32 1 0.2948 0.867 0.948 0.052

#> sample_33 1 0.0000 0.865 1.000 0.000
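The relation between the membership columns, the class label and the entropy can be sketched in base R using three rows copied from the k = 2 table. The helper row_entropy is hypothetical, and the use of a base-k logarithm is an assumption inferred from the displayed values rather than confirmed from cola's source.

```r
# Membership probabilities for three samples, copied from the k = 2 table.
membership <- rbind(
  sample_39 = c(p1 = 0.296, p2 = 0.704),
  sample_48 = c(p1 = 0.000, p2 = 1.000),
  sample_52 = c(p1 = 0.804, p2 = 0.196)
)

# Class label: index of the group with the highest membership probability.
class_label <- apply(membership, 1, which.max)

# Hypothetical helper: Shannon entropy of one membership row, using a
# logarithm of base k (assumption) and treating 0 * log(0) as 0.
row_entropy <- function(p, k = length(p)) {
  nonzero <- p[p > 0]
  -sum(nonzero * log(nonzero, base = k))
}

entropy <- apply(membership, 1, row_entropy)
print(data.frame(class = class_label, entropy = round(entropy, 4)))
```

With these inputs the entropy for sample_39 comes out close to the 0.8763 shown in the table; small differences are expected because the displayed probabilities are rounded to three digits.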

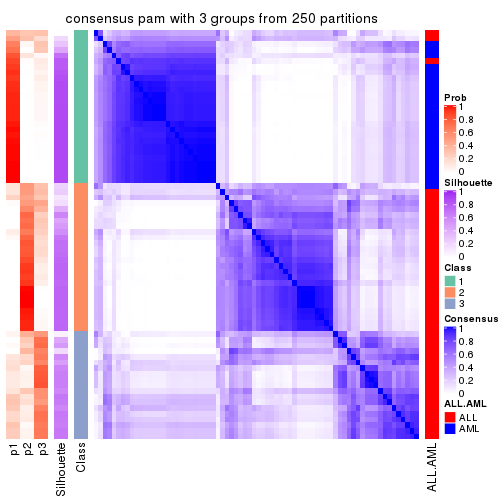

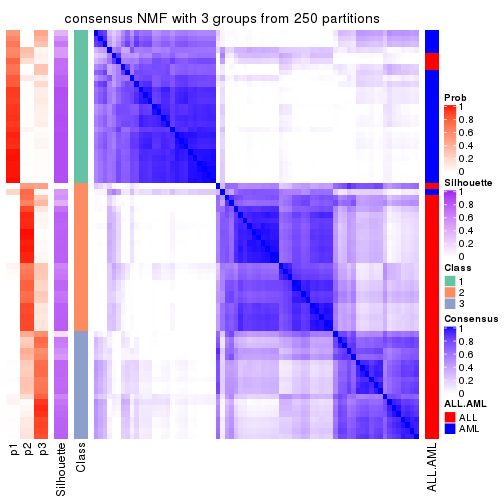

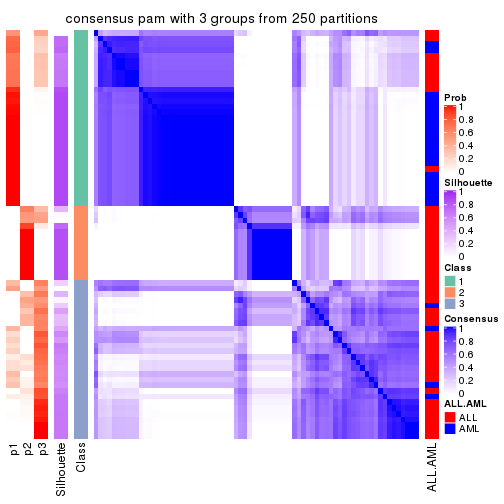

cbind(get_classes(res, k = 3), get_membership(res, k = 3))

#> class entropy silhouette p1 p2 p3

#> sample_39 3 0.7828 0.6469 0.068 0.340 0.592

#> sample_40 3 0.7596 0.5801 0.100 0.228 0.672

#> sample_42 2 0.5757 0.6360 0.056 0.792 0.152

#> sample_47 2 0.3116 0.6799 0.000 0.892 0.108

#> sample_48 2 0.0237 0.7183 0.000 0.996 0.004

#> sample_49 3 0.8875 0.6245 0.136 0.336 0.528

#> sample_41 2 0.0424 0.7185 0.000 0.992 0.008

#> sample_43 2 0.4748 0.6545 0.024 0.832 0.144

#> sample_44 2 0.6126 0.1650 0.004 0.644 0.352

#> sample_45 2 0.5929 0.2492 0.004 0.676 0.320

#> sample_46 2 0.6228 0.0877 0.004 0.624 0.372

#> sample_70 2 0.6587 -0.1208 0.008 0.568 0.424

#> sample_71 2 0.7281 0.5634 0.148 0.712 0.140

#> sample_72 2 0.7281 0.5634 0.148 0.712 0.140

#> sample_68 2 0.0424 0.7185 0.000 0.992 0.008

#> sample_69 2 0.0237 0.7183 0.000 0.996 0.004

#> sample_67 2 0.8685 0.4351 0.192 0.596 0.212

#> sample_55 3 0.8043 0.5980 0.072 0.372 0.556

#> sample_56 3 0.7867 0.6207 0.068 0.348 0.584

#> sample_59 3 0.7990 0.5380 0.064 0.404 0.532

#> sample_52 1 0.7581 0.5308 0.496 0.040 0.464

#> sample_53 1 0.1964 0.7555 0.944 0.000 0.056

#> sample_51 1 0.1753 0.7617 0.952 0.000 0.048

#> sample_50 1 0.2165 0.7650 0.936 0.000 0.064

#> sample_54 1 0.7584 0.5254 0.488 0.040 0.472

#> sample_57 1 0.7484 0.5387 0.504 0.036 0.460

#> sample_58 1 0.3941 0.7552 0.844 0.000 0.156

#> sample_60 1 0.7584 0.5254 0.488 0.040 0.472

#> sample_61 1 0.3918 0.7627 0.856 0.004 0.140

#> sample_65 1 0.3826 0.7662 0.868 0.008 0.124

#> sample_66 2 0.7437 0.4972 0.200 0.692 0.108

#> sample_63 1 0.7581 0.5308 0.496 0.040 0.464

#> sample_64 3 0.7363 -0.2749 0.372 0.040 0.588

#> sample_62 1 0.7581 0.5308 0.496 0.040 0.464

#> sample_1 3 0.7676 0.6440 0.056 0.360 0.584

#> sample_2 2 0.8578 0.4475 0.172 0.604 0.224

#> sample_3 3 0.7697 0.5048 0.084 0.272 0.644

#> sample_4 3 0.7589 0.6384 0.052 0.360 0.588

#> sample_5 2 0.0424 0.7185 0.000 0.992 0.008

#> sample_6 3 0.7697 0.5048 0.084 0.272 0.644

#> sample_7 3 0.7676 0.6401 0.056 0.360 0.584

#> sample_8 3 0.7600 0.6466 0.056 0.344 0.600

#> sample_9 3 0.7697 0.5048 0.084 0.272 0.644

#> sample_10 3 0.7697 0.5048 0.084 0.272 0.644

#> sample_11 3 0.7697 0.5048 0.084 0.272 0.644

#> sample_12 3 0.9633 0.5556 0.216 0.340 0.444

#> sample_13 2 0.0237 0.7183 0.000 0.996 0.004

#> sample_14 2 0.6355 0.5092 0.024 0.696 0.280

#> sample_15 2 0.0424 0.7185 0.000 0.992 0.008

#> sample_16 2 0.4861 0.5837 0.012 0.808 0.180

#> sample_17 2 0.5384 0.6192 0.024 0.788 0.188

#> sample_18 3 0.7839 0.3787 0.052 0.464 0.484

#> sample_19 2 0.4805 0.5880 0.012 0.812 0.176

#> sample_20 2 0.0237 0.7183 0.000 0.996 0.004

#> sample_21 2 0.1031 0.7122 0.000 0.976 0.024

#> sample_22 3 0.8546 0.6397 0.108 0.348 0.544

#> sample_23 3 0.7697 0.5048 0.084 0.272 0.644

#> sample_24 2 0.0592 0.7173 0.000 0.988 0.012

#> sample_25 3 0.9141 0.5769 0.152 0.360 0.488

#> sample_26 2 0.4915 0.5767 0.012 0.804 0.184

#> sample_27 3 0.8875 0.6245 0.136 0.336 0.528

#> sample_34 1 0.6529 0.6185 0.620 0.012 0.368

#> sample_35 3 0.7091 -0.3436 0.416 0.024 0.560

#> sample_36 1 0.3030 0.7715 0.904 0.004 0.092

#> sample_37 1 0.2165 0.7534 0.936 0.000 0.064

#> sample_38 1 0.4099 0.7107 0.852 0.008 0.140

#> sample_28 1 0.4413 0.7209 0.860 0.036 0.104

#> sample_29 2 0.8082 0.3748 0.296 0.608 0.096

#> sample_30 1 0.3030 0.7715 0.904 0.004 0.092

#> sample_31 1 0.5167 0.7522 0.792 0.016 0.192

#> sample_32 1 0.4602 0.7650 0.852 0.040 0.108

#> sample_33 1 0.1860 0.7561 0.948 0.000 0.052

cbind(get_classes(res, k = 4), get_membership(res, k = 4))

#> class entropy silhouette p1 p2 p3 p4

#> sample_39 3 0.7328 0.625 0.040 0.244 0.608 0.108

#> sample_40 3 0.8260 0.378 0.036 0.160 0.416 0.388

#> sample_42 2 0.6202 0.604 0.052 0.728 0.144 0.076

#> sample_47 2 0.3674 0.659 0.000 0.848 0.116 0.036

#> sample_48 2 0.0188 0.695 0.000 0.996 0.000 0.004

#> sample_49 3 0.8442 0.609 0.112 0.244 0.532 0.112

#> sample_41 2 0.0817 0.693 0.000 0.976 0.024 0.000

#> sample_43 2 0.5481 0.618 0.024 0.764 0.140 0.072

#> sample_44 2 0.6869 0.173 0.000 0.564 0.304 0.132

#> sample_45 2 0.6592 0.289 0.000 0.612 0.260 0.128

#> sample_46 2 0.6835 0.147 0.000 0.560 0.316 0.124

#> sample_70 2 0.7192 -0.131 0.000 0.472 0.388 0.140

#> sample_71 2 0.7227 0.544 0.140 0.652 0.152 0.056

#> sample_72 2 0.7227 0.544 0.140 0.652 0.152 0.056

#> sample_68 2 0.0336 0.695 0.000 0.992 0.008 0.000

#> sample_69 2 0.0188 0.695 0.000 0.996 0.000 0.004

#> sample_67 2 0.8327 0.409 0.180 0.520 0.244 0.056

#> sample_55 3 0.8441 0.489 0.024 0.288 0.408 0.280

#> sample_56 3 0.8174 0.533 0.020 0.248 0.464 0.268

#> sample_59 3 0.8327 0.416 0.016 0.312 0.388 0.284

#> sample_52 4 0.3940 0.774 0.152 0.020 0.004 0.824

#> sample_53 1 0.0895 0.762 0.976 0.000 0.020 0.004

#> sample_51 1 0.1109 0.768 0.968 0.000 0.004 0.028

#> sample_50 1 0.1824 0.767 0.936 0.000 0.004 0.060

#> sample_54 4 0.4020 0.772 0.128 0.020 0.016 0.836

#> sample_57 4 0.4178 0.771 0.140 0.020 0.016 0.824

#> sample_58 1 0.5510 0.403 0.600 0.000 0.024 0.376

#> sample_60 4 0.4020 0.772 0.128 0.020 0.016 0.836

#> sample_61 1 0.5497 0.569 0.672 0.000 0.044 0.284

#> sample_65 1 0.5038 0.581 0.684 0.000 0.020 0.296

#> sample_66 2 0.7557 0.516 0.172 0.632 0.104 0.092

#> sample_63 4 0.3988 0.773 0.156 0.020 0.004 0.820

#> sample_64 4 0.6854 0.543 0.136 0.004 0.260 0.600

#> sample_62 4 0.3940 0.774 0.152 0.020 0.004 0.824

#> sample_1 3 0.7960 0.617 0.040 0.272 0.536 0.152

#> sample_2 2 0.8217 0.413 0.164 0.524 0.260 0.052

#> sample_3 3 0.5216 0.497 0.100 0.120 0.772 0.008

#> sample_4 3 0.7920 0.603 0.036 0.272 0.536 0.156

#> sample_5 2 0.0469 0.695 0.000 0.988 0.012 0.000

#> sample_6 3 0.5216 0.497 0.100 0.120 0.772 0.008

#> sample_7 3 0.7955 0.604 0.036 0.272 0.532 0.160

#> sample_8 3 0.7199 0.627 0.040 0.248 0.616 0.096

#> sample_9 3 0.5216 0.497 0.100 0.120 0.772 0.008

#> sample_10 3 0.5216 0.497 0.100 0.120 0.772 0.008

#> sample_11 3 0.5216 0.497 0.100 0.120 0.772 0.008

#> sample_12 3 0.8927 0.524 0.192 0.248 0.468 0.092

#> sample_13 2 0.0188 0.695 0.000 0.996 0.000 0.004

#> sample_14 2 0.6651 0.432 0.016 0.596 0.320 0.068

#> sample_15 2 0.0469 0.695 0.000 0.988 0.012 0.000

#> sample_16 2 0.5350 0.544 0.008 0.744 0.188 0.060

#> sample_17 2 0.5872 0.563 0.012 0.704 0.216 0.068

#> sample_18 3 0.8306 0.316 0.036 0.376 0.420 0.168

#> sample_19 2 0.5310 0.548 0.008 0.748 0.184 0.060

#> sample_20 2 0.0188 0.695 0.000 0.996 0.000 0.004

#> sample_21 2 0.2036 0.677 0.000 0.936 0.032 0.032

#> sample_22 3 0.7636 0.617 0.084 0.256 0.588 0.072

#> sample_23 3 0.5216 0.497 0.100 0.120 0.772 0.008

#> sample_24 2 0.1022 0.692 0.000 0.968 0.032 0.000

#> sample_25 3 0.8509 0.549 0.136 0.264 0.512 0.088

#> sample_26 2 0.5466 0.532 0.008 0.732 0.200 0.060

#> sample_27 3 0.8442 0.609 0.112 0.244 0.532 0.112

#> sample_34 4 0.7337 0.224 0.400 0.004 0.136 0.460

#> sample_35 4 0.7135 0.509 0.200 0.000 0.240 0.560

#> sample_36 1 0.3585 0.730 0.828 0.004 0.004 0.164

#> sample_37 1 0.1109 0.756 0.968 0.000 0.028 0.004

#> sample_38 1 0.3652 0.682 0.856 0.000 0.092 0.052

#> sample_28 1 0.3840 0.698 0.860 0.012 0.076 0.052

#> sample_29 2 0.7923 0.427 0.268 0.560 0.076 0.096

#> sample_30 1 0.3631 0.728 0.824 0.004 0.004 0.168

#> sample_31 1 0.6013 0.414 0.600 0.004 0.044 0.352

#> sample_32 1 0.5364 0.651 0.720 0.016 0.028 0.236

#> sample_33 1 0.0927 0.763 0.976 0.000 0.016 0.008

cbind(get_classes(res, k = 5), get_membership(res, k = 5))

#> class entropy silhouette p1 p2 p3 p4 p5

#> sample_39 3 0.3886 0.6632 0.000 0.084 0.828 0.068 0.020

#> sample_40 3 0.5410 0.4043 0.000 0.040 0.644 0.028 0.288

#> sample_42 2 0.6725 0.5607 0.032 0.644 0.160 0.116 0.048

#> sample_47 2 0.3982 0.6570 0.000 0.816 0.088 0.084 0.012

#> sample_48 2 0.1124 0.6870 0.000 0.960 0.036 0.004 0.000

#> sample_49 3 0.4894 0.6534 0.040 0.084 0.792 0.040 0.044

#> sample_41 2 0.1216 0.6820 0.000 0.960 0.020 0.020 0.000

#> sample_43 2 0.6084 0.5737 0.012 0.684 0.144 0.116 0.044

#> sample_44 2 0.7194 0.0156 0.000 0.440 0.372 0.132 0.056

#> sample_45 2 0.6914 0.2459 0.000 0.544 0.268 0.132 0.056

#> sample_46 2 0.7097 0.0927 0.000 0.492 0.320 0.132 0.056

#> sample_70 3 0.7238 0.2063 0.000 0.360 0.444 0.140 0.056

#> sample_71 2 0.8113 0.4712 0.120 0.520 0.196 0.120 0.044

#> sample_72 2 0.8113 0.4712 0.120 0.520 0.196 0.120 0.044

#> sample_68 2 0.0324 0.6856 0.000 0.992 0.004 0.004 0.000

#> sample_69 2 0.0865 0.6860 0.000 0.972 0.024 0.004 0.000

#> sample_67 2 0.7658 0.4456 0.164 0.496 0.024 0.268 0.048

#> sample_55 3 0.7621 0.6150 0.004 0.144 0.524 0.132 0.196

#> sample_56 3 0.6709 0.6434 0.004 0.088 0.624 0.116 0.168

#> sample_59 3 0.7877 0.5478 0.004 0.168 0.492 0.148 0.188

#> sample_52 5 0.2840 0.7569 0.108 0.012 0.004 0.004 0.872

#> sample_53 1 0.0865 0.7522 0.972 0.000 0.000 0.024 0.004

#> sample_51 1 0.1251 0.7581 0.956 0.000 0.000 0.008 0.036

#> sample_50 1 0.1877 0.7562 0.924 0.000 0.000 0.012 0.064

#> sample_54 5 0.3179 0.7575 0.072 0.012 0.012 0.028 0.876

#> sample_57 5 0.3329 0.7567 0.088 0.012 0.012 0.024 0.864

#> sample_58 1 0.5775 0.3136 0.520 0.000 0.048 0.020 0.412

#> sample_60 5 0.3179 0.7575 0.072 0.012 0.012 0.028 0.876

#> sample_61 1 0.5821 0.5082 0.600 0.000 0.072 0.020 0.308

#> sample_65 1 0.5393 0.5261 0.620 0.000 0.040 0.020 0.320

#> sample_66 2 0.7237 0.5515 0.160 0.604 0.028 0.124 0.084

#> sample_63 5 0.2891 0.7553 0.112 0.012 0.004 0.004 0.868

#> sample_64 5 0.6024 0.4888 0.084 0.000 0.348 0.016 0.552

#> sample_62 5 0.2840 0.7569 0.108 0.012 0.004 0.004 0.872

#> sample_1 3 0.5578 0.6751 0.004 0.128 0.724 0.068 0.076

#> sample_2 2 0.7520 0.4380 0.144 0.496 0.020 0.292 0.048

#> sample_3 4 0.4221 1.0000 0.044 0.000 0.188 0.764 0.004

#> sample_4 3 0.6376 0.6385 0.000 0.192 0.636 0.080 0.092

#> sample_5 2 0.0451 0.6856 0.000 0.988 0.004 0.008 0.000

#> sample_6 4 0.4221 1.0000 0.044 0.000 0.188 0.764 0.004

#> sample_7 3 0.6247 0.6472 0.000 0.176 0.652 0.076 0.096

#> sample_8 3 0.3706 0.6408 0.000 0.064 0.840 0.076 0.020

#> sample_9 4 0.4221 1.0000 0.044 0.000 0.188 0.764 0.004

#> sample_10 4 0.4221 1.0000 0.044 0.000 0.188 0.764 0.004

#> sample_11 4 0.4221 1.0000 0.044 0.000 0.188 0.764 0.004

#> sample_12 3 0.8176 0.5101 0.176 0.144 0.520 0.112 0.048

#> sample_13 2 0.1124 0.6870 0.000 0.960 0.036 0.004 0.000

#> sample_14 2 0.5447 0.3753 0.000 0.532 0.008 0.416 0.044

#> sample_15 2 0.0451 0.6856 0.000 0.988 0.004 0.008 0.000

#> sample_16 2 0.5760 0.5018 0.004 0.644 0.252 0.084 0.016

#> sample_17 2 0.5015 0.5544 0.000 0.652 0.004 0.296 0.048

#> sample_18 3 0.8009 0.4658 0.008 0.256 0.448 0.192 0.096

#> sample_19 2 0.5610 0.5249 0.004 0.668 0.228 0.084 0.016

#> sample_20 2 0.1124 0.6870 0.000 0.960 0.036 0.004 0.000

#> sample_21 2 0.3113 0.6438 0.000 0.872 0.048 0.068 0.012

#> sample_22 3 0.5327 0.5983 0.060 0.068 0.748 0.116 0.008

#> sample_23 4 0.4221 1.0000 0.044 0.000 0.188 0.764 0.004

#> sample_24 2 0.1741 0.6802 0.000 0.936 0.040 0.024 0.000

#> sample_25 3 0.7669 0.5574 0.116 0.156 0.568 0.128 0.032

#> sample_26 2 0.5787 0.5052 0.004 0.648 0.240 0.092 0.016

#> sample_27 3 0.4894 0.6534 0.040 0.084 0.792 0.040 0.044

#> sample_34 5 0.6833 0.2094 0.356 0.004 0.168 0.012 0.460

#> sample_35 5 0.6503 0.4989 0.148 0.000 0.304 0.016 0.532

#> sample_36 1 0.3245 0.7151 0.824 0.004 0.004 0.004 0.164

#> sample_37 1 0.1041 0.7481 0.964 0.000 0.000 0.032 0.004

#> sample_38 1 0.3450 0.6843 0.848 0.000 0.008 0.084 0.060

#> sample_28 1 0.3737 0.6983 0.852 0.008 0.040 0.040 0.060

#> sample_29 2 0.7337 0.4842 0.252 0.544 0.012 0.104 0.088

#> sample_30 1 0.3285 0.7127 0.820 0.004 0.004 0.004 0.168

#> sample_31 1 0.5651 0.4029 0.572 0.004 0.056 0.008 0.360

#> sample_32 1 0.5250 0.6274 0.696 0.016 0.016 0.036 0.236

#> sample_33 1 0.0898 0.7524 0.972 0.000 0.000 0.020 0.008

cbind(get_classes(res, k = 6), get_membership(res, k = 6))

#> class entropy silhouette p1 p2 p3 p4 p5 p6

#> sample_39 3 0.3993 0.6747 0.000 0.040 0.800 0.108 0.004 0.048

#> sample_40 3 0.5866 0.4010 0.000 0.028 0.624 0.028 0.228 0.092

#> sample_42 2 0.6609 0.4571 0.032 0.512 0.104 0.020 0.012 0.320

#> sample_47 2 0.4035 0.6186 0.000 0.788 0.048 0.028 0.004 0.132

#> sample_48 2 0.1257 0.6487 0.000 0.952 0.028 0.000 0.000 0.020

#> sample_49 3 0.3920 0.6497 0.008 0.020 0.824 0.048 0.020 0.080

#> sample_41 2 0.2032 0.6408 0.000 0.920 0.024 0.020 0.000 0.036

#> sample_43 2 0.5993 0.4790 0.008 0.548 0.104 0.012 0.012 0.316

#> sample_44 2 0.6638 0.0334 0.000 0.380 0.340 0.012 0.012 0.256

#> sample_45 2 0.6352 0.2579 0.000 0.488 0.204 0.012 0.012 0.284

#> sample_46 2 0.6609 0.1420 0.000 0.440 0.252 0.012 0.016 0.280

#> sample_70 3 0.6936 0.1542 0.000 0.284 0.404 0.028 0.016 0.268

#> sample_71 2 0.7906 0.3907 0.112 0.428 0.184 0.044 0.004 0.228

#> sample_72 2 0.7906 0.3907 0.112 0.428 0.184 0.044 0.004 0.228

#> sample_68 2 0.1074 0.6454 0.000 0.960 0.012 0.000 0.000 0.028

#> sample_69 2 0.1257 0.6487 0.000 0.952 0.020 0.000 0.000 0.028

#> sample_67 2 0.7454 0.4222 0.156 0.432 0.000 0.184 0.008 0.220

#> sample_55 3 0.6890 0.5711 0.000 0.076 0.524 0.020 0.148 0.232

#> sample_56 3 0.5499 0.5955 0.000 0.020 0.644 0.008 0.128 0.200

#> sample_59 3 0.7004 0.5029 0.000 0.100 0.472 0.008 0.140 0.280

#> sample_52 5 0.2056 0.7356 0.080 0.004 0.000 0.000 0.904 0.012

#> sample_53 1 0.1364 0.7507 0.952 0.000 0.000 0.012 0.020 0.016

#> sample_51 1 0.1152 0.7555 0.952 0.000 0.000 0.004 0.044 0.000

#> sample_50 1 0.1732 0.7535 0.920 0.000 0.000 0.004 0.072 0.004

#> sample_54 5 0.2457 0.7368 0.044 0.004 0.016 0.000 0.900 0.036

#> sample_57 5 0.2697 0.7349 0.064 0.004 0.016 0.000 0.884 0.032

#> sample_58 1 0.5958 0.2821 0.460 0.000 0.036 0.000 0.408 0.096

#> sample_60 5 0.2457 0.7368 0.044 0.004 0.016 0.000 0.900 0.036

#> sample_61 1 0.6061 0.4722 0.536 0.000 0.060 0.000 0.312 0.092

#> sample_65 1 0.5371 0.5108 0.576 0.000 0.024 0.000 0.328 0.072

#> sample_66 2 0.6862 0.4917 0.156 0.500 0.000 0.040 0.032 0.272

#> sample_63 5 0.2110 0.7342 0.084 0.004 0.000 0.000 0.900 0.012

#> sample_64 5 0.6675 0.3969 0.060 0.000 0.312 0.016 0.496 0.116

#> sample_62 5 0.2056 0.7356 0.080 0.004 0.000 0.000 0.904 0.012

#> sample_1 3 0.5354 0.6679 0.000 0.076 0.728 0.080 0.060 0.056

#> sample_2 2 0.7408 0.4152 0.136 0.436 0.000 0.212 0.008 0.208

#> sample_3 4 0.0363 1.0000 0.012 0.000 0.000 0.988 0.000 0.000

#> sample_4 3 0.6288 0.6238 0.000 0.124 0.648 0.084 0.068 0.076

#> sample_5 2 0.1218 0.6454 0.000 0.956 0.012 0.004 0.000 0.028

#> sample_6 4 0.0363 1.0000 0.012 0.000 0.000 0.988 0.000 0.000

#> sample_7 3 0.6001 0.6317 0.000 0.100 0.676 0.084 0.068 0.072

#> sample_8 3 0.2617 0.6580 0.000 0.012 0.872 0.100 0.000 0.016

#> sample_9 4 0.0363 1.0000 0.012 0.000 0.000 0.988 0.000 0.000

#> sample_10 4 0.0363 1.0000 0.012 0.000 0.000 0.988 0.000 0.000

#> sample_11 4 0.0363 1.0000 0.012 0.000 0.000 0.988 0.000 0.000

#> sample_12 3 0.8275 0.5131 0.168 0.096 0.472 0.108 0.028 0.128

#> sample_13 2 0.1257 0.6487 0.000 0.952 0.028 0.000 0.000 0.020

#> sample_14 2 0.5943 0.3436 0.000 0.500 0.000 0.360 0.032 0.108

#> sample_15 2 0.1218 0.6454 0.000 0.956 0.012 0.004 0.000 0.028

#> sample_16 2 0.5732 0.4398 0.000 0.580 0.264 0.016 0.004 0.136

#> sample_17 2 0.5846 0.5099 0.000 0.604 0.008 0.244 0.036 0.108

#> sample_18 3 0.8117 0.3973 0.000 0.196 0.412 0.120 0.076 0.196

#> sample_19 2 0.5461 0.4869 0.000 0.632 0.212 0.016 0.004 0.136

#> sample_20 2 0.1257 0.6487 0.000 0.952 0.028 0.000 0.000 0.020

#> sample_21 2 0.4238 0.5391 0.000 0.724 0.028 0.016 0.004 0.228

#> sample_22 3 0.4878 0.6298 0.060 0.012 0.732 0.152 0.000 0.044

#> sample_23 4 0.0363 1.0000 0.012 0.000 0.000 0.988 0.000 0.000

#> sample_24 2 0.2677 0.6363 0.000 0.884 0.056 0.024 0.000 0.036

#> sample_25 3 0.7730 0.5432 0.112 0.104 0.520 0.120 0.008 0.136

#> sample_26 2 0.5644 0.4706 0.000 0.612 0.224 0.020 0.004 0.140

#> sample_27 3 0.3920 0.6497 0.008 0.020 0.824 0.048 0.020 0.080

#> sample_34 5 0.6890 0.1595 0.328 0.000 0.164 0.016 0.444 0.048

#> sample_35 5 0.6926 0.4207 0.120 0.000 0.300 0.020 0.484 0.076

#> sample_36 1 0.2882 0.7116 0.812 0.000 0.000 0.000 0.180 0.008

#> sample_37 1 0.1369 0.7472 0.952 0.000 0.000 0.016 0.016 0.016

#> sample_38 1 0.3238 0.6795 0.848 0.000 0.000 0.056 0.024 0.072

#> sample_28 1 0.3597 0.6957 0.844 0.004 0.024 0.028 0.028 0.072

#> sample_29 2 0.6881 0.4313 0.248 0.444 0.000 0.016 0.032 0.260

#> sample_30 1 0.2915 0.7093 0.808 0.000 0.000 0.000 0.184 0.008

#> sample_31 1 0.5704 0.4120 0.540 0.000 0.048 0.004 0.356 0.052

#> sample_32 1 0.4934 0.6332 0.672 0.012 0.000 0.008 0.240 0.068

#> sample_33 1 0.1346 0.7510 0.952 0.000 0.000 0.008 0.024 0.016

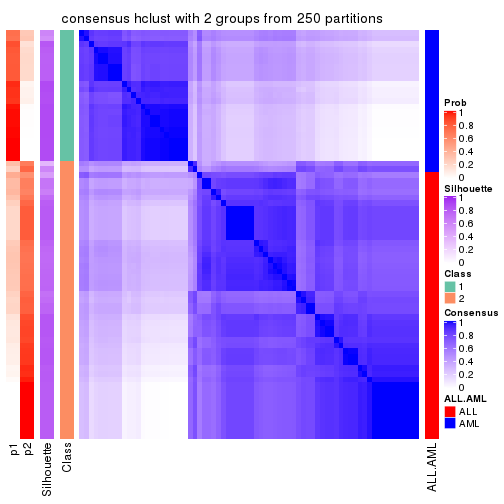

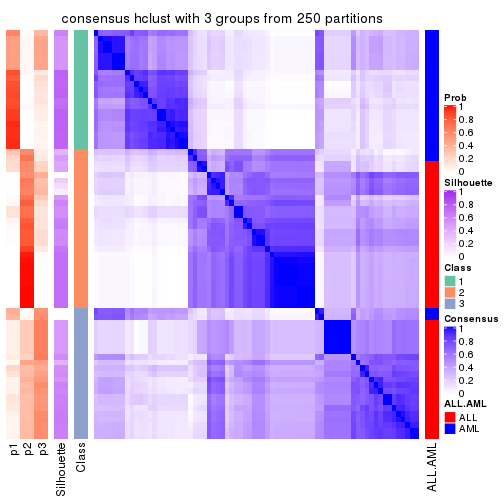

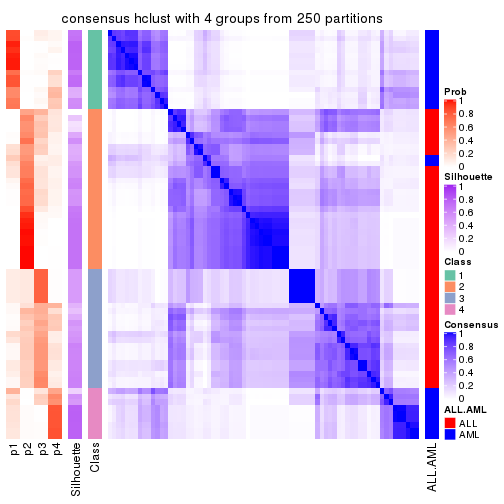

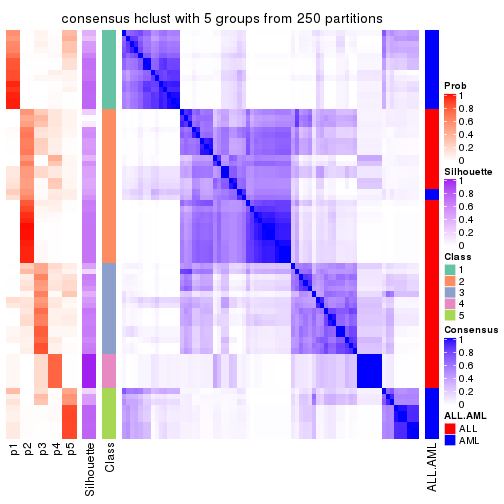

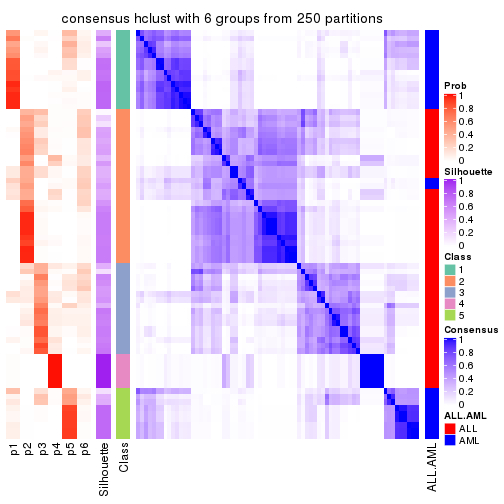

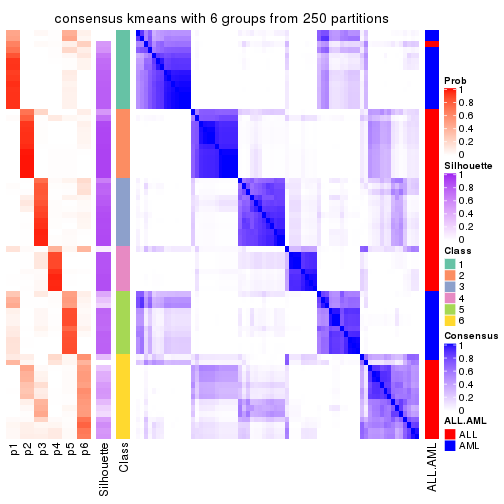

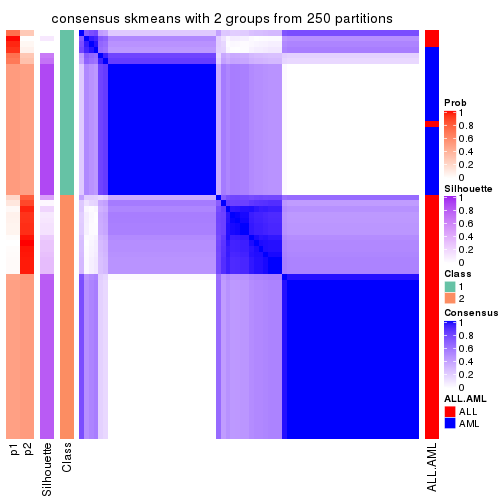

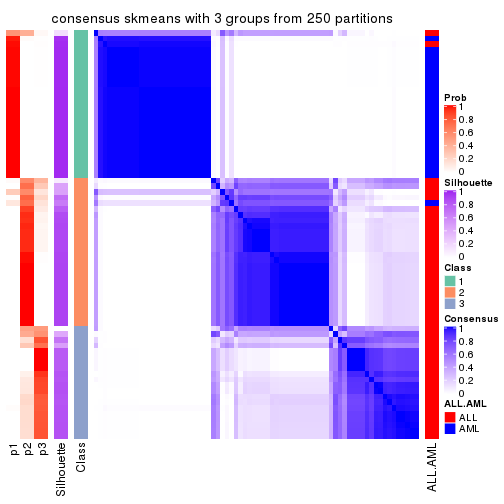

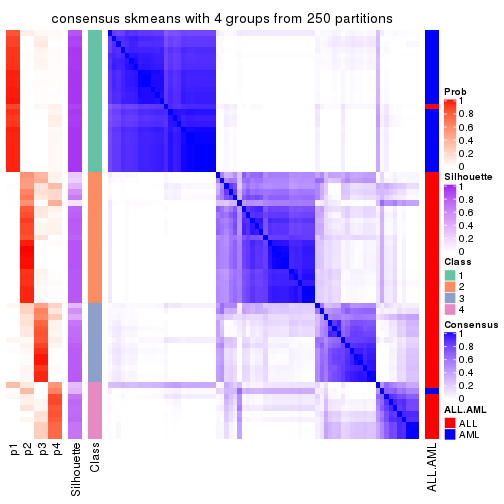

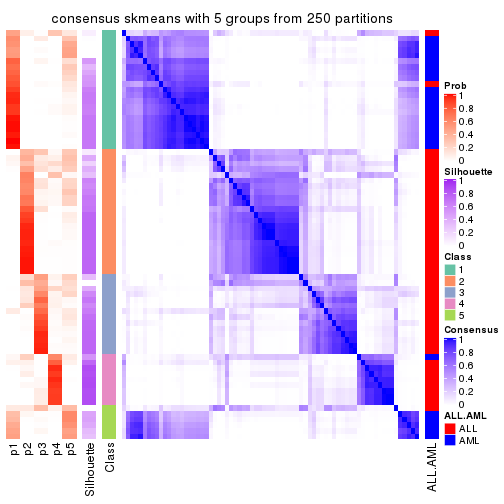

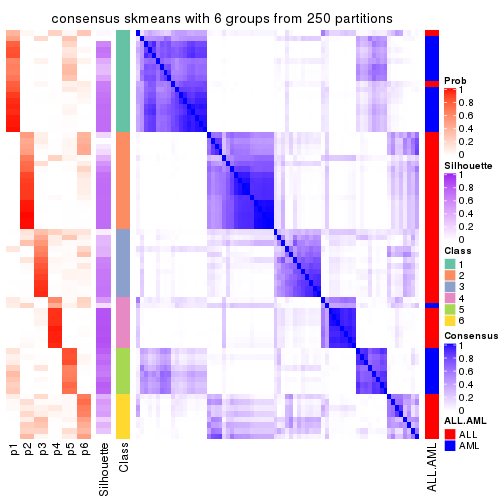

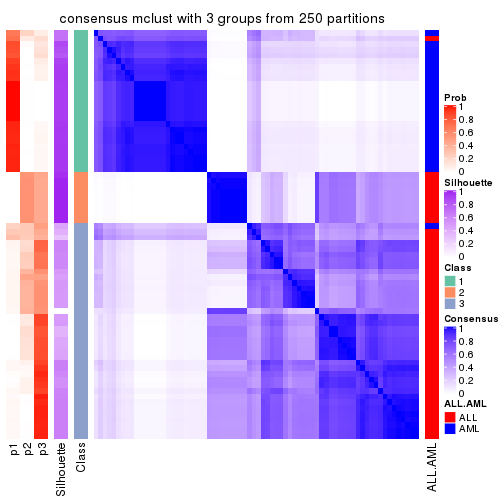

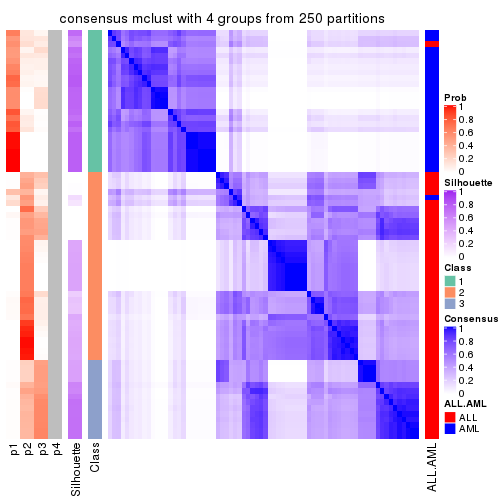

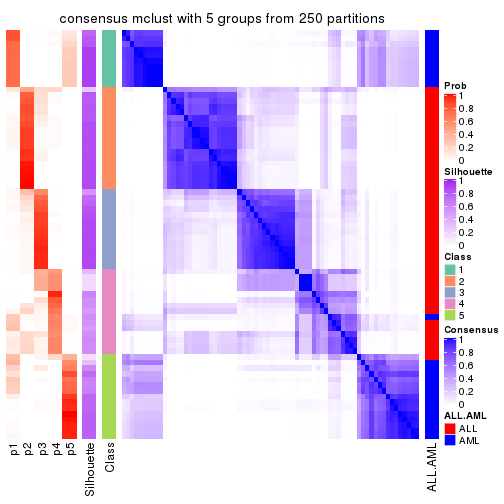

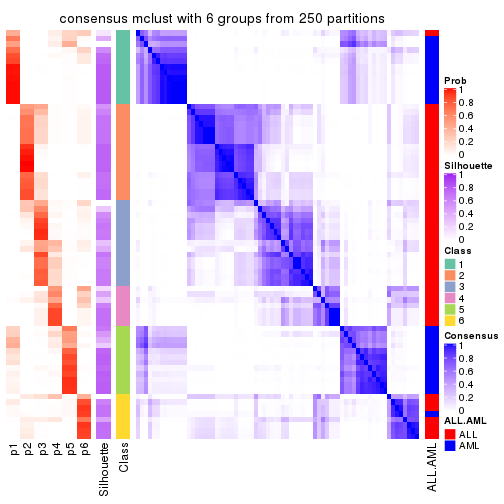

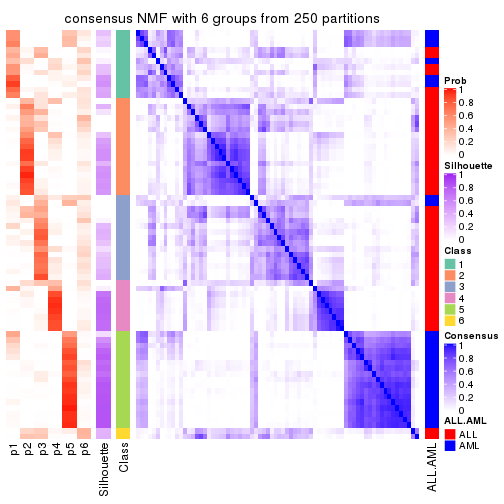

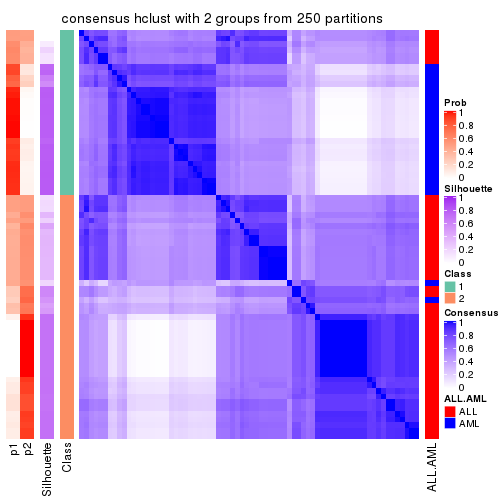

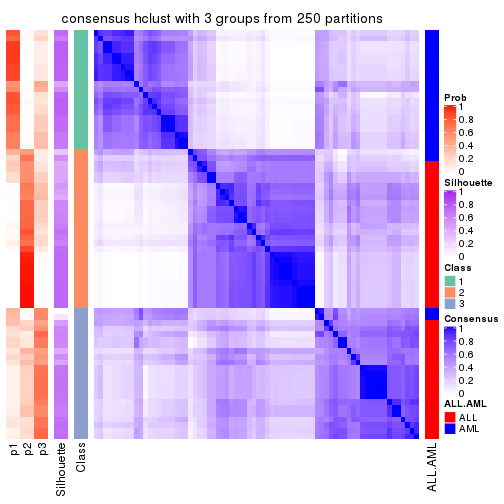

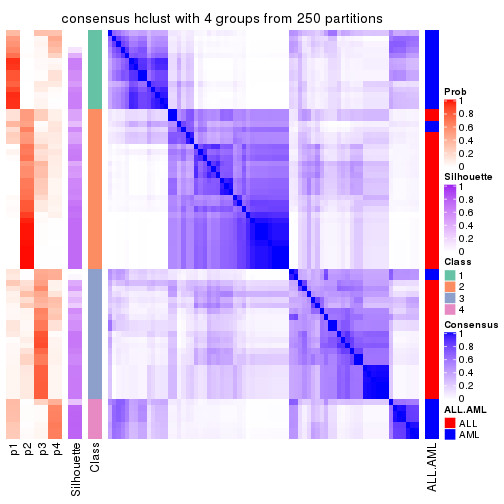

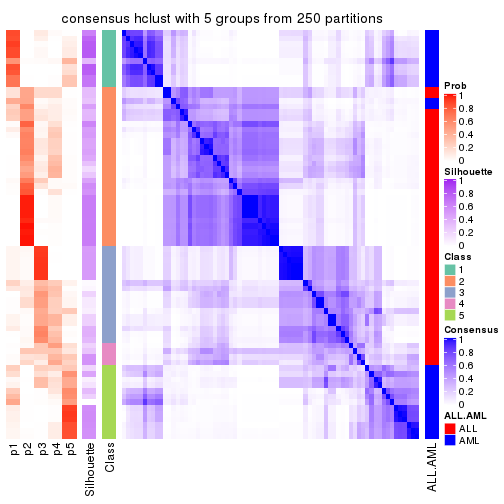

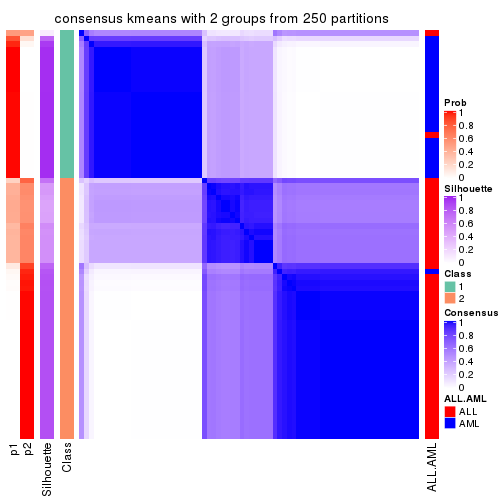

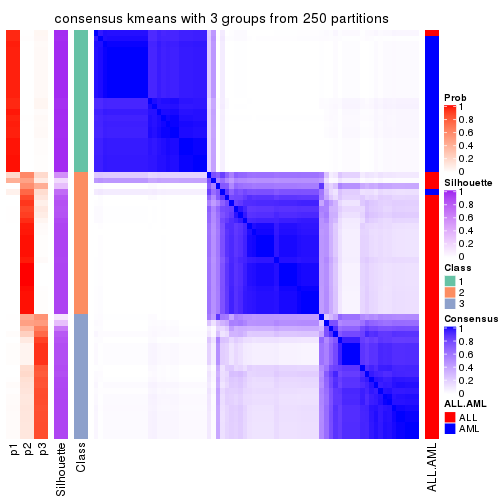

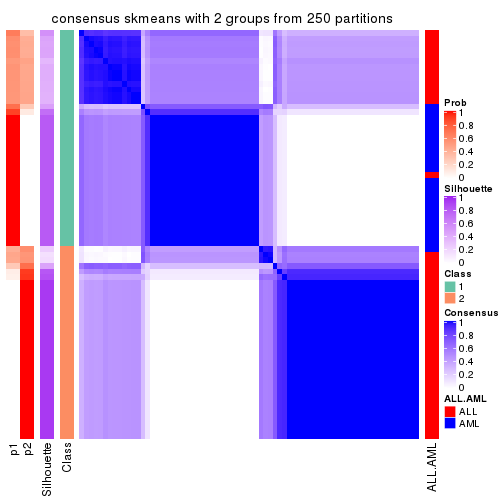

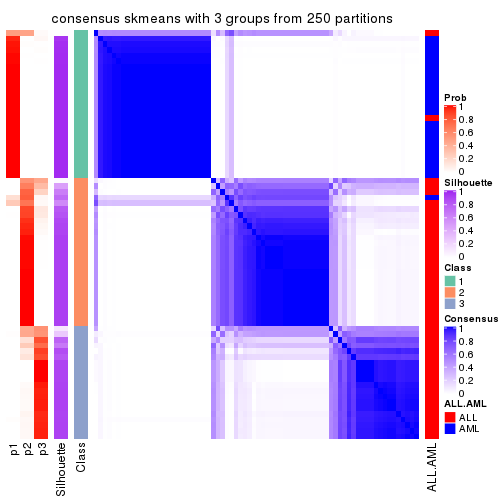

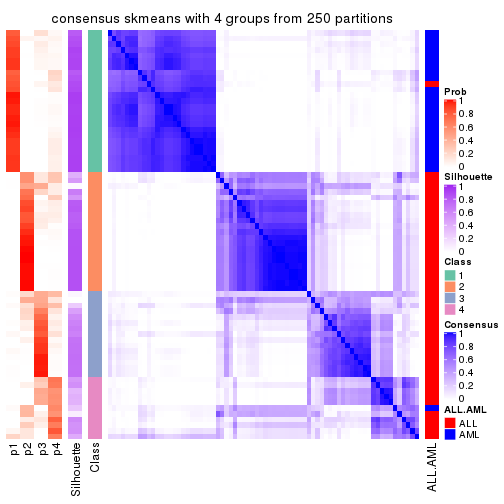

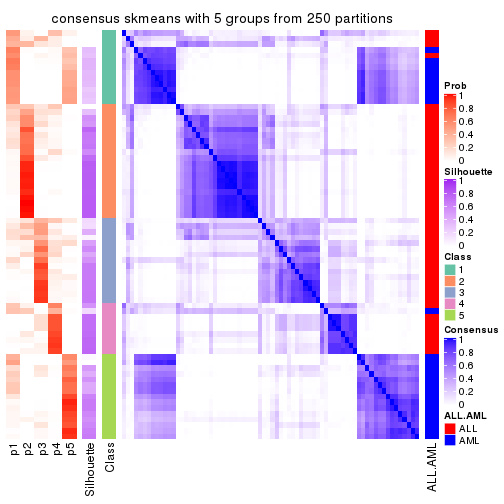

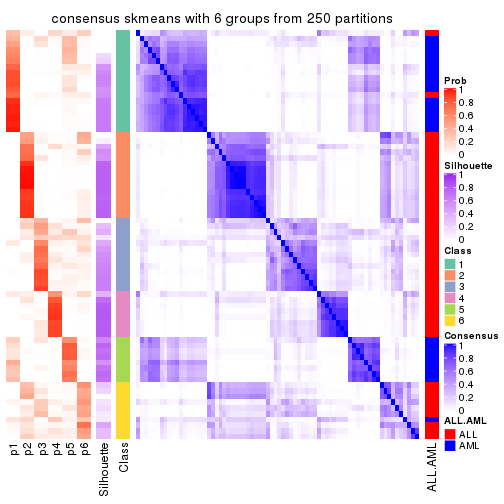

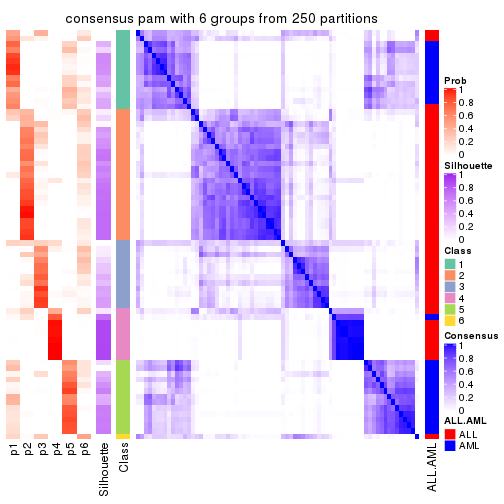

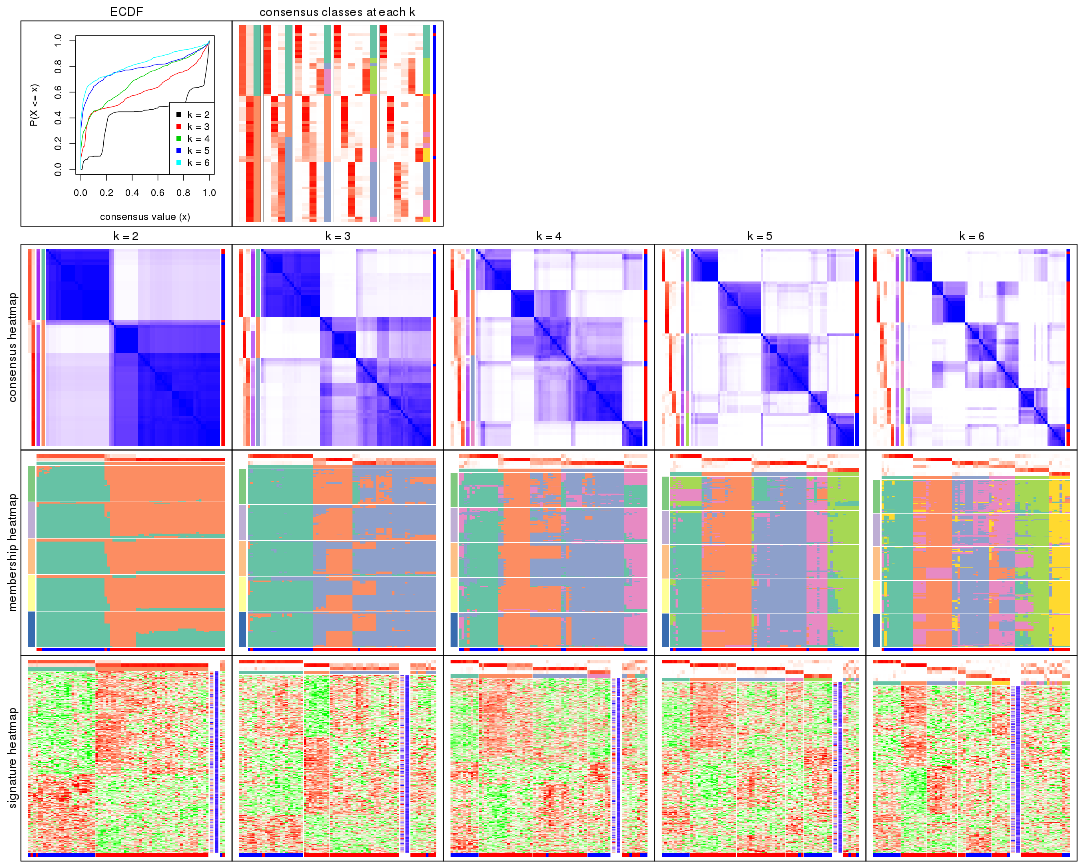

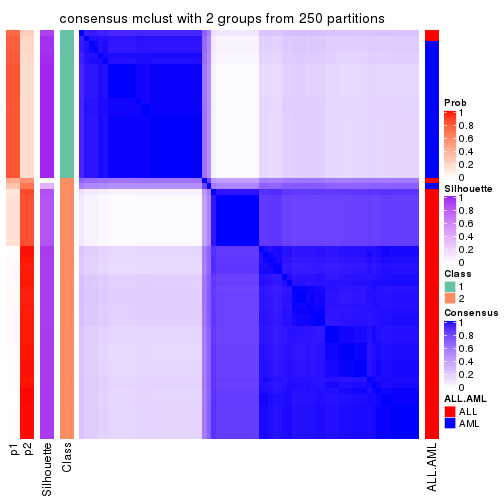

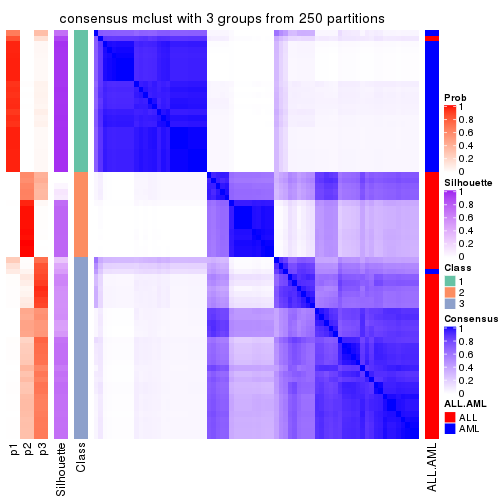

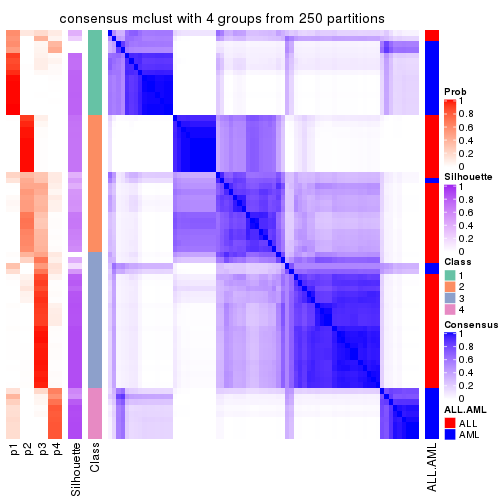

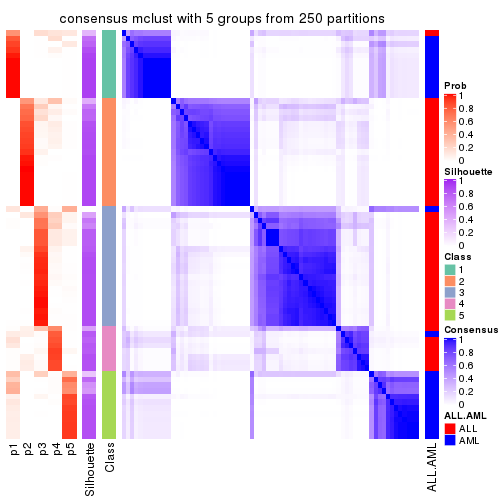

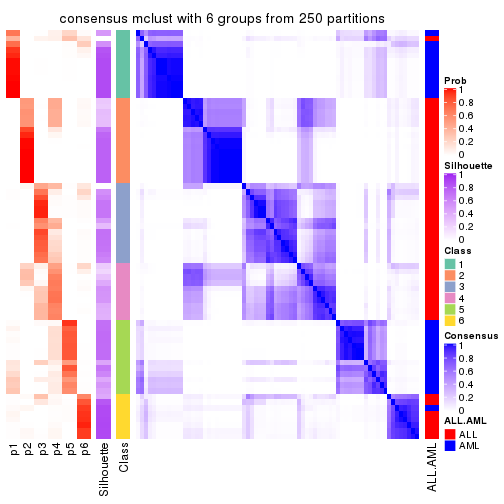

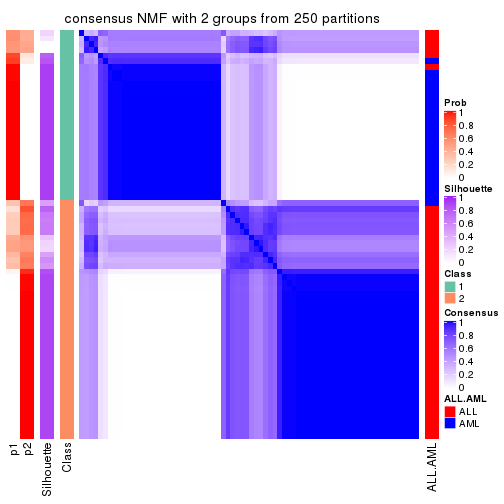

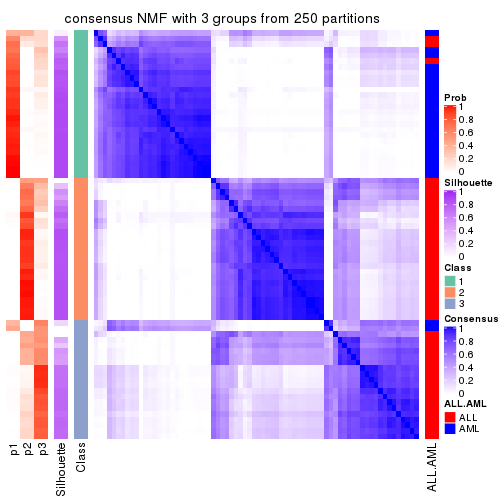

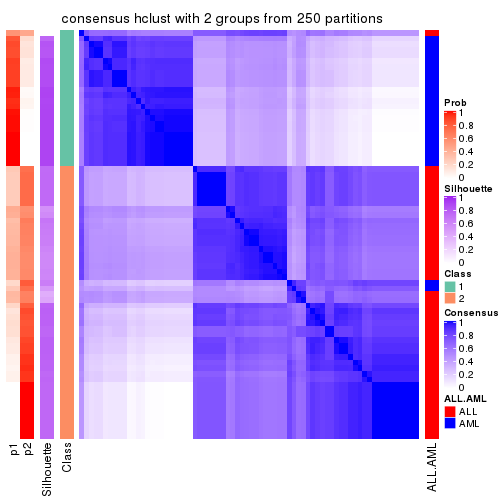

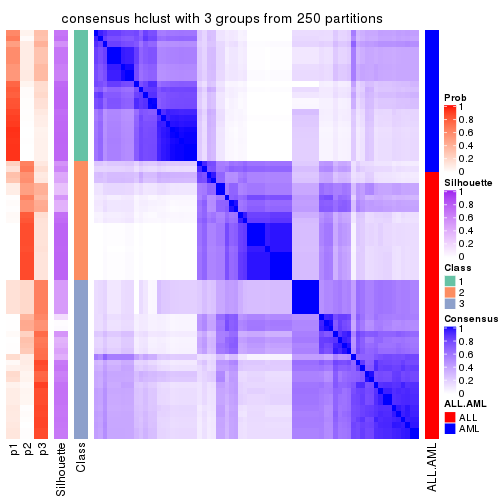

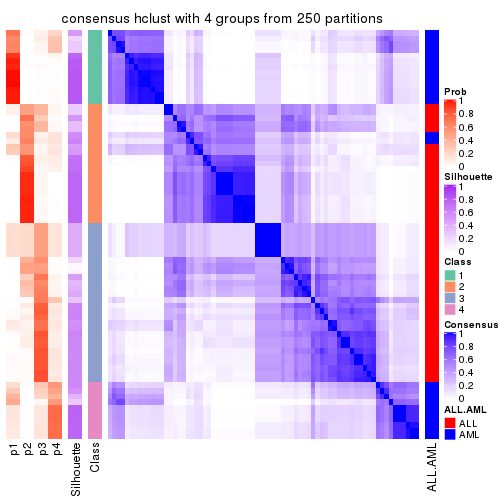

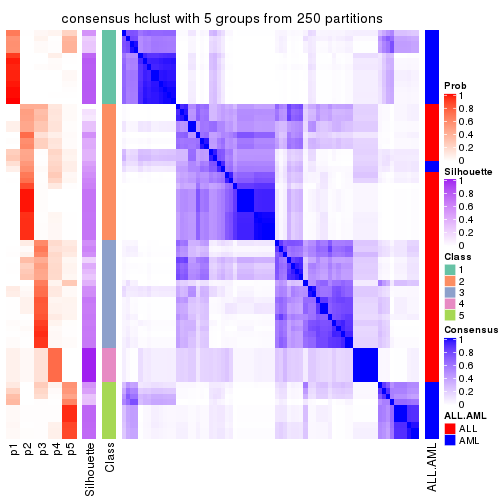

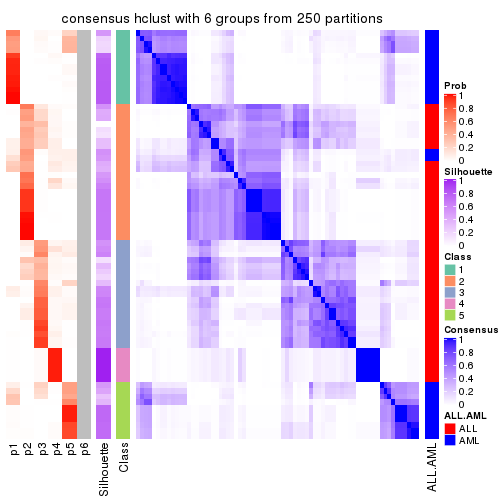

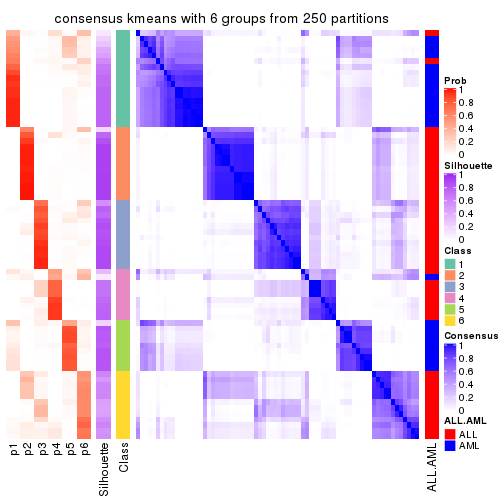

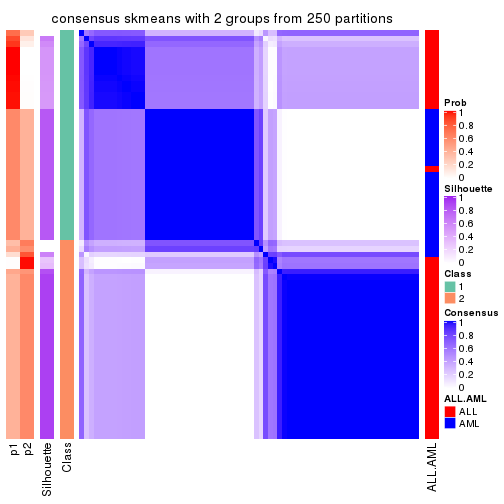

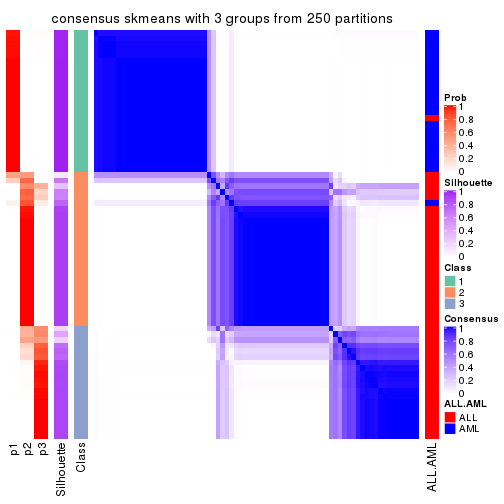

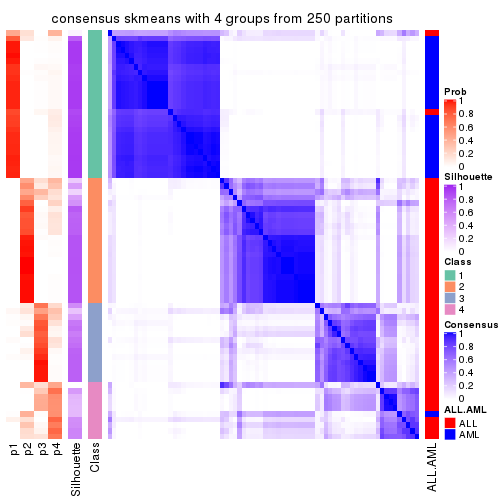

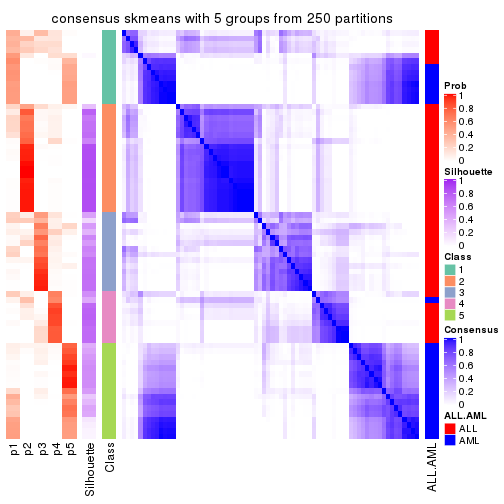

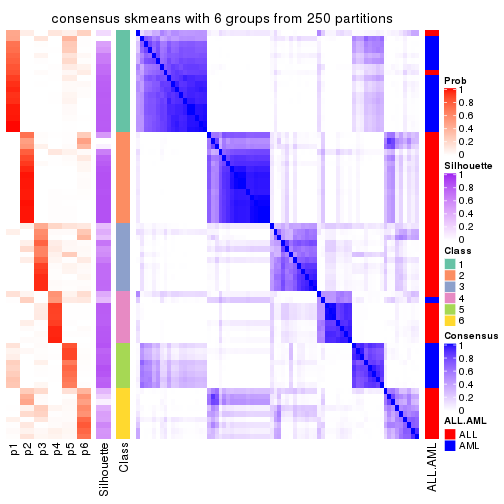

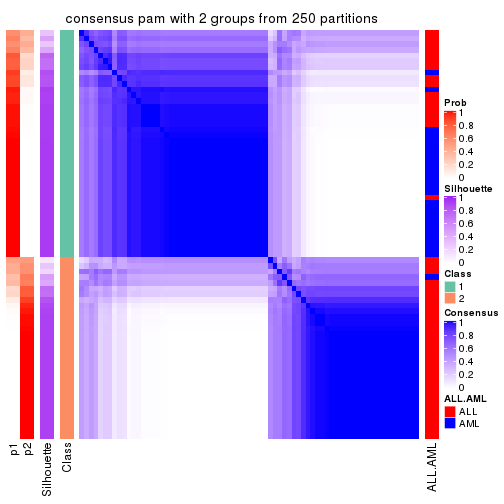

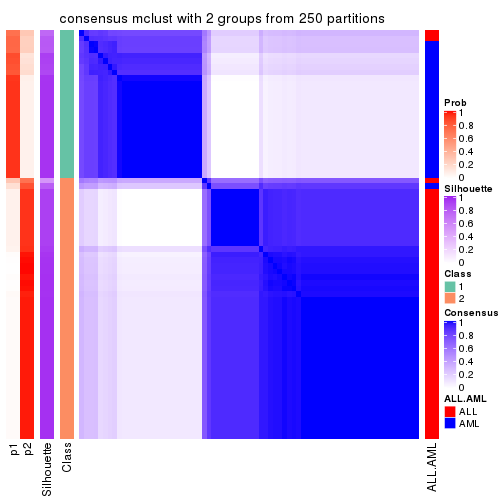

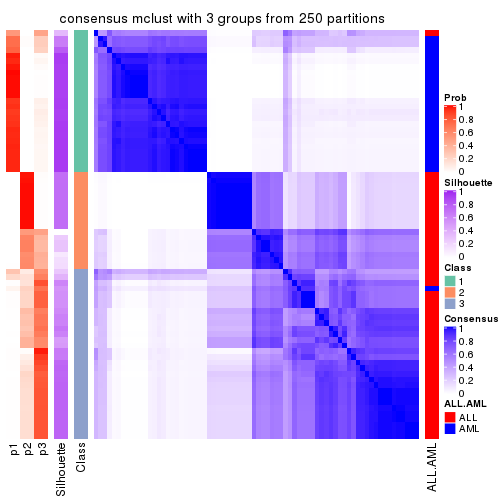

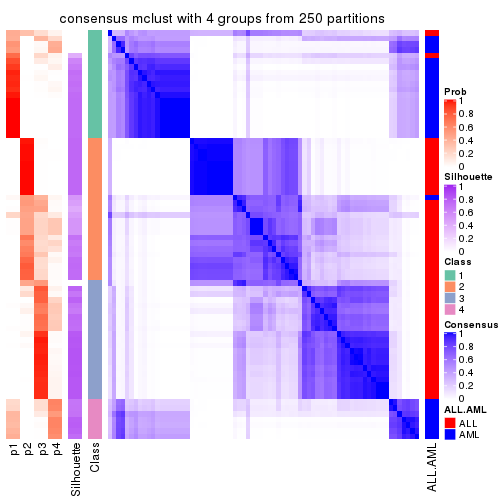

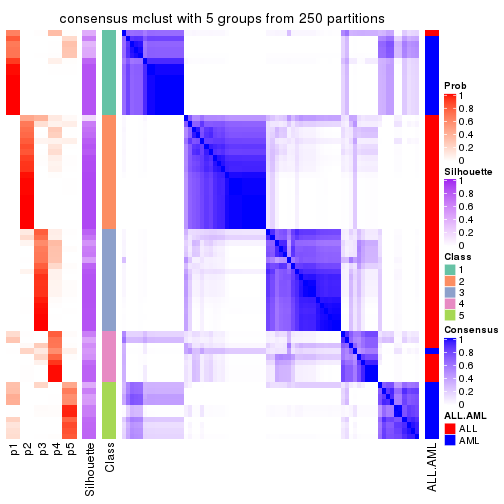

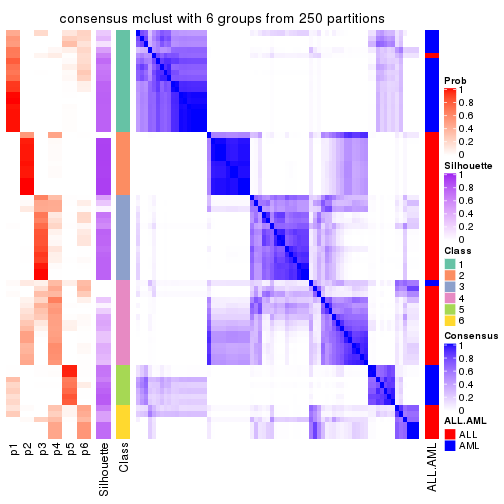

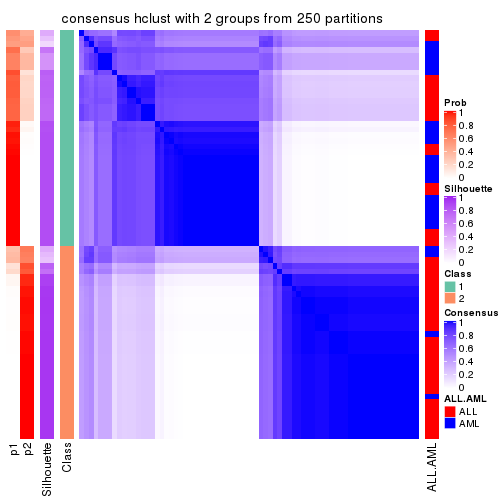

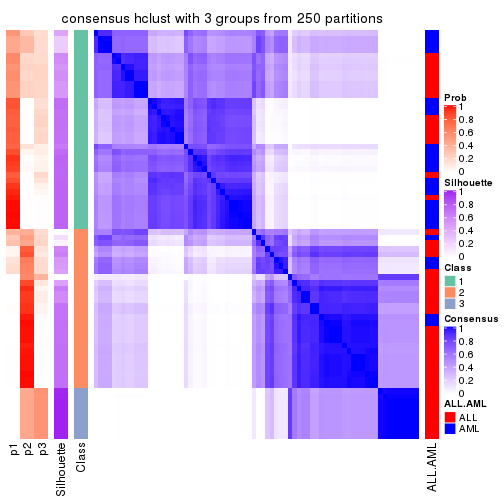

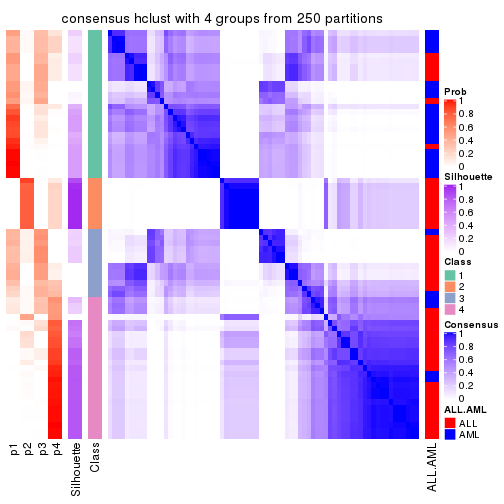

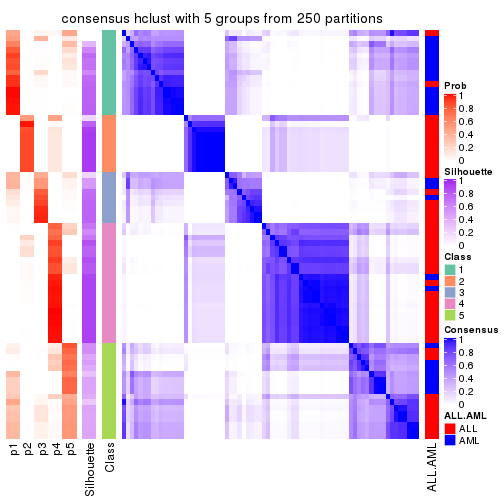

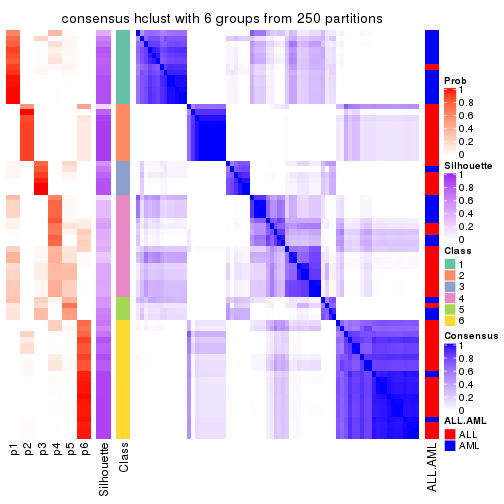

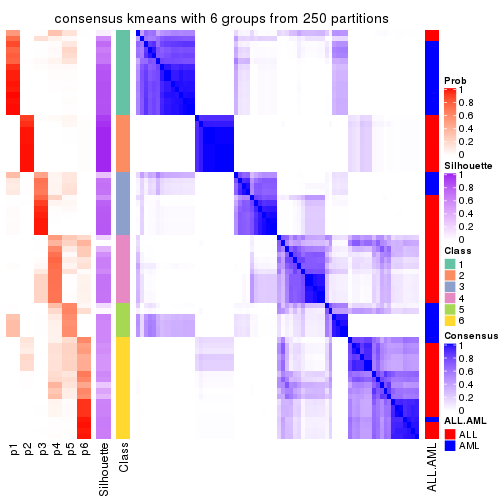

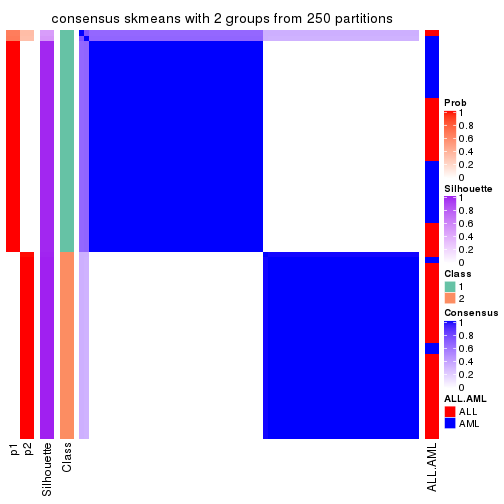

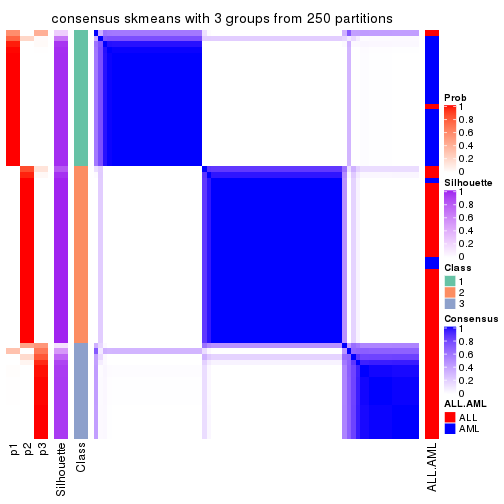

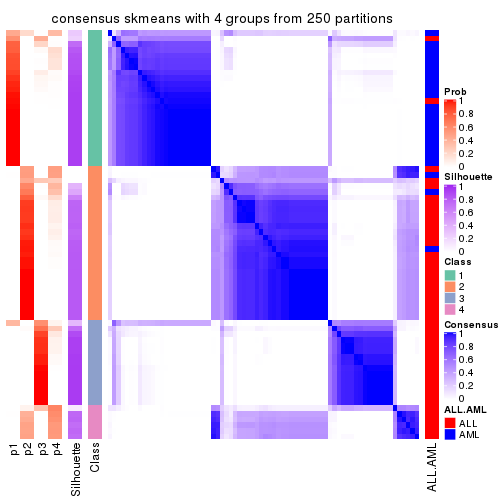

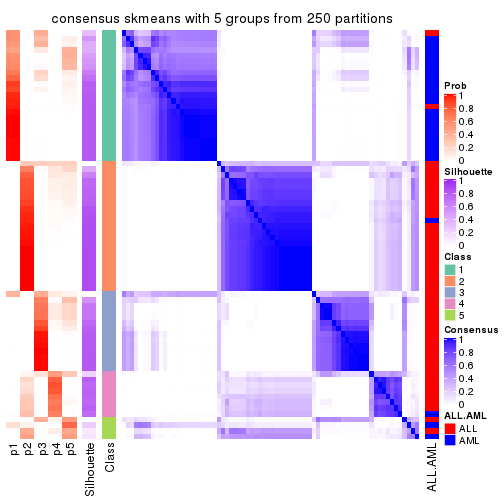

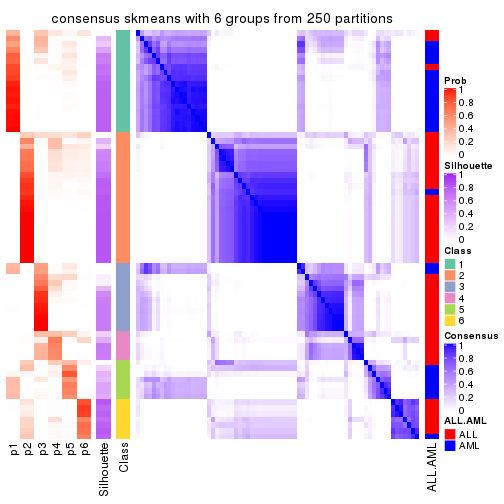

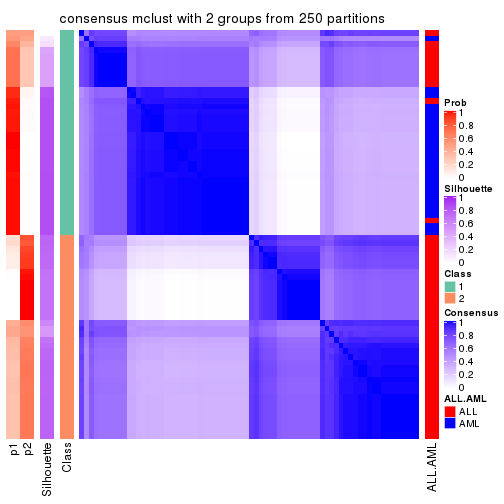

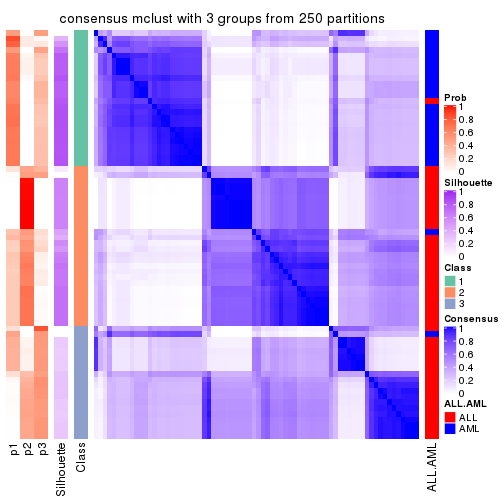

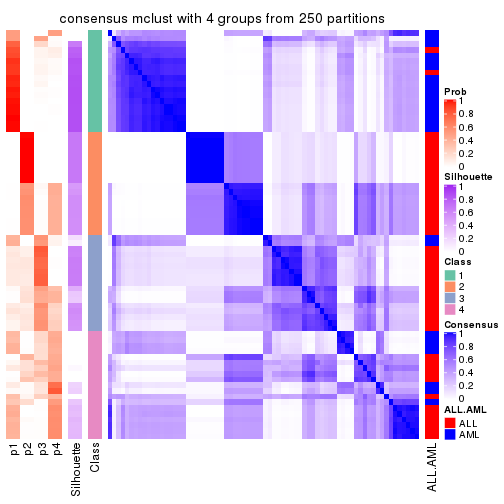

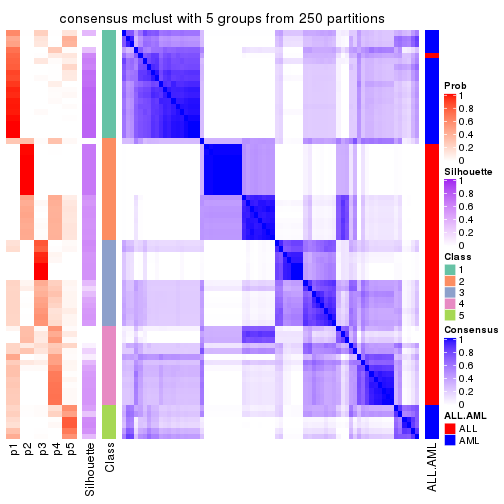

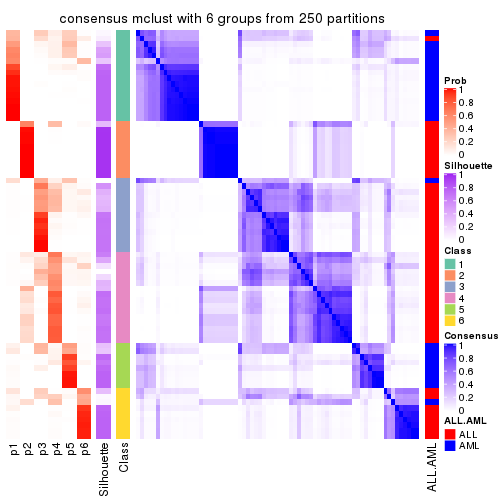

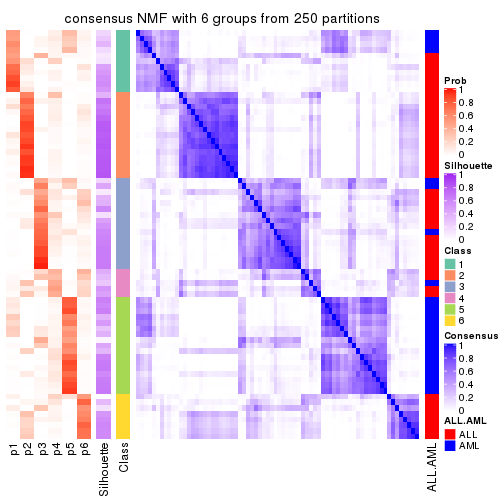

Heatmaps of the consensus matrix, which visualize the probability that two samples are in the same group.

consensus_heatmap(res, k = 2)

consensus_heatmap(res, k = 3)

consensus_heatmap(res, k = 4)

consensus_heatmap(res, k = 5)

consensus_heatmap(res, k = 6)
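To make the idea of a consensus matrix concrete, the following base R sketch builds one from four toy partitions of four samples. The partitions are invented for illustration; in the report they come from the resampling runs, and cola's internal computation is not reproduced here.

```r
# Four toy partitions (rows) of four samples (columns); values are
# subgroup labels. These labels are hypothetical.
partitions <- rbind(
  c(1, 1, 2, 2),
  c(1, 1, 1, 2),
  c(1, 1, 2, 2),
  c(1, 2, 2, 2)
)

# For each partition, a logical matrix marking sample pairs that share a label.
co_membership <- lapply(seq_len(nrow(partitions)), function(i) {
  outer(partitions[i, ], partitions[i, ], "==")
})

# Consensus matrix: fraction of partitions in which each pair is co-clustered.
consensus <- Reduce(`+`, co_membership) / nrow(partitions)
print(consensus)
```

Values near 0 or 1 indicate pairs that are consistently separated or consistently grouped, which is what the heatmaps display as clear blocks.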

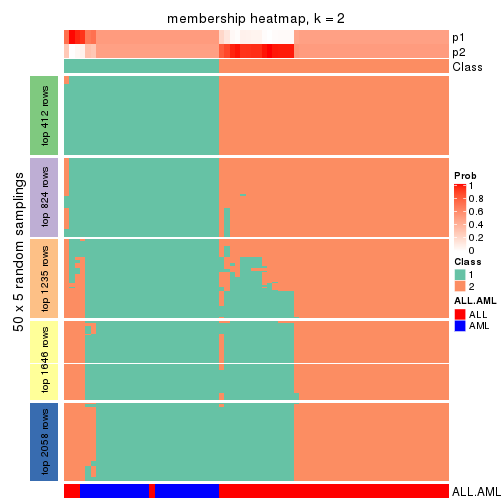

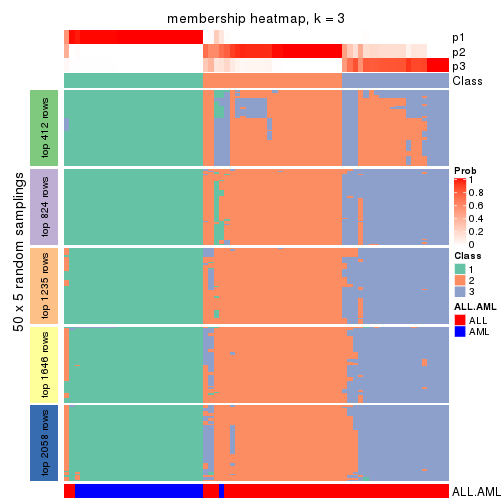

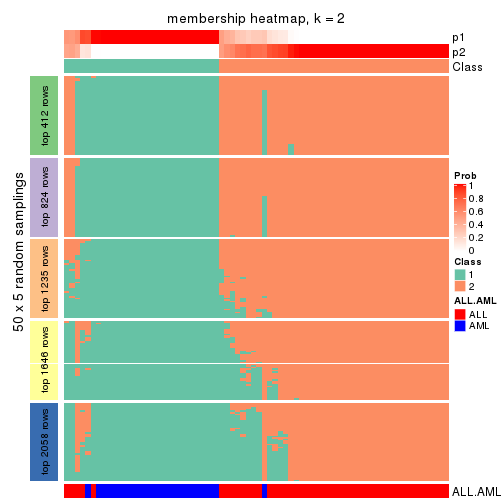

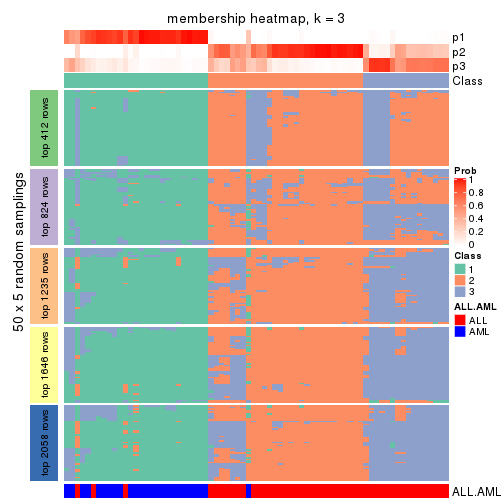

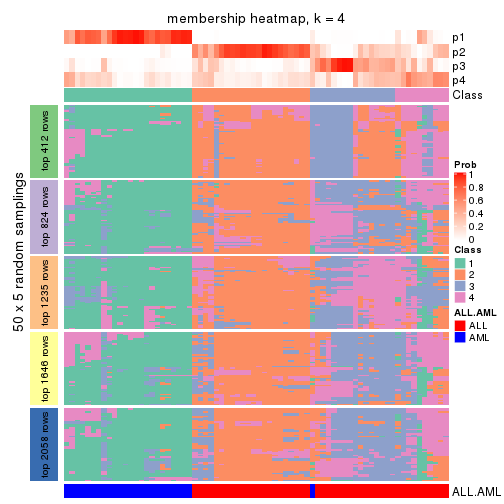

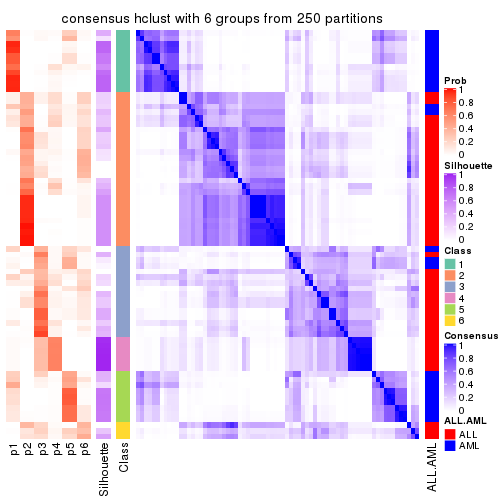

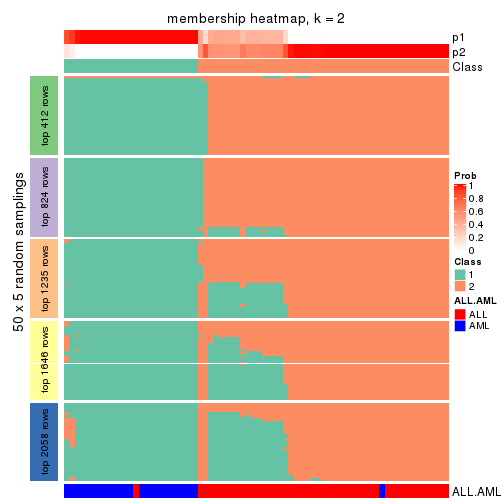

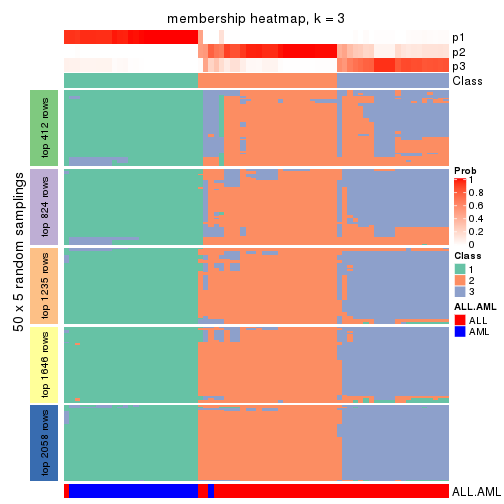

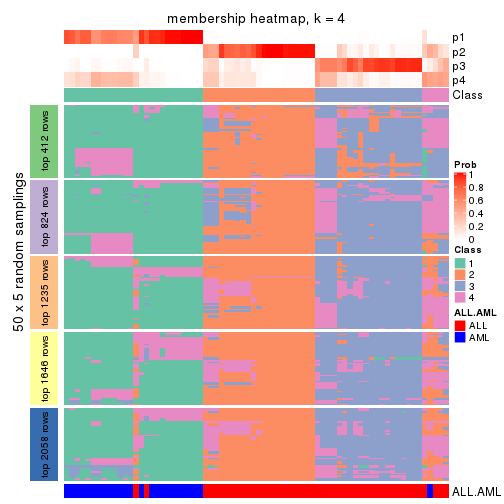

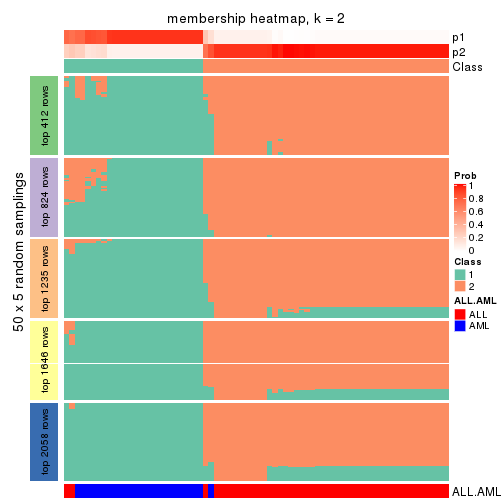

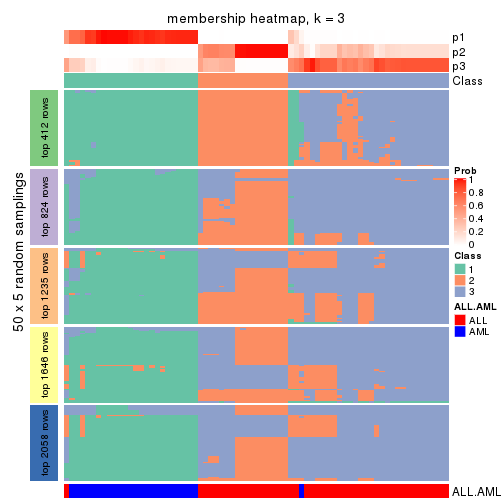

Heatmaps for the membership of samples in all partitions to see how consistent they are:

membership_heatmap(res, k = 2)

membership_heatmap(res, k = 3)

membership_heatmap(res, k = 4)

membership_heatmap(res, k = 5)

membership_heatmap(res, k = 6)

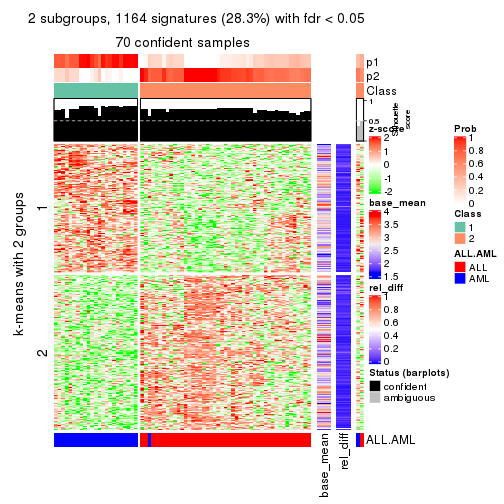

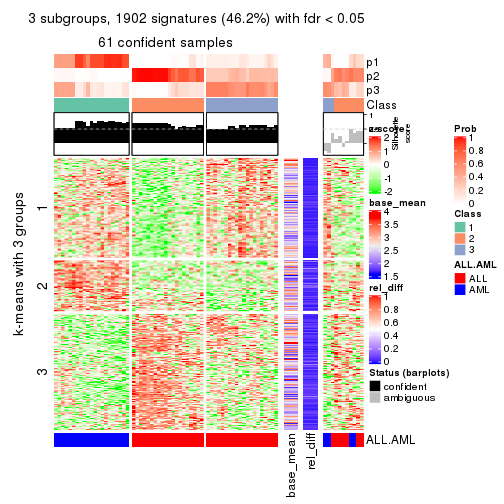

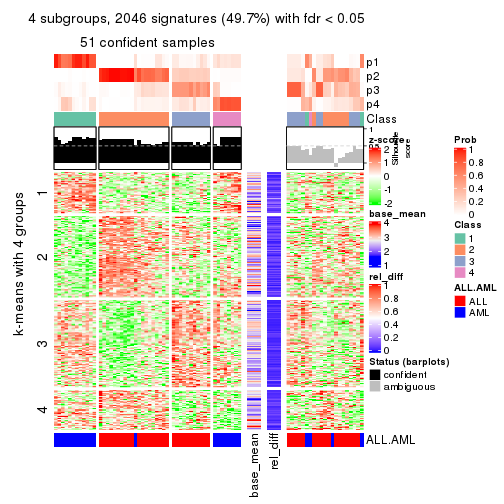

Once the classes for columns are available, we can look for signatures that differ significantly between classes and can serve as candidate markers for certain classes. The following heatmaps show the signatures.

Signature heatmaps where rows are scaled:

get_signatures(res, k = 2)

get_signatures(res, k = 3)

get_signatures(res, k = 4)

get_signatures(res, k = 5)

get_signatures(res, k = 6)

Signature heatmaps where rows are not scaled:

get_signatures(res, k = 2, scale_rows = FALSE)

get_signatures(res, k = 3, scale_rows = FALSE)

get_signatures(res, k = 4, scale_rows = FALSE)

get_signatures(res, k = 5, scale_rows = FALSE)

get_signatures(res, k = 6, scale_rows = FALSE)

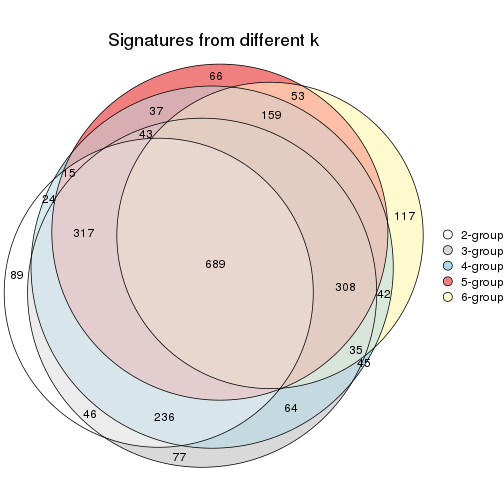

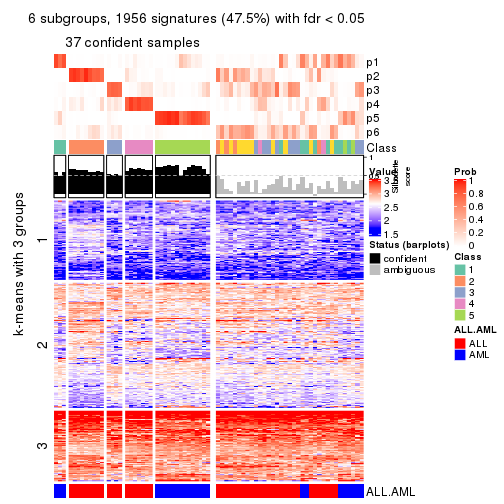

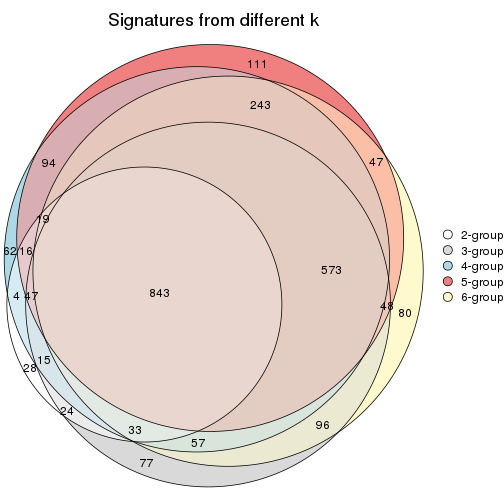

Compare the overlap of signatures from different k:

compare_signatures(res)

get_signatures() returns a data frame invisibly. To get the list of signatures, the function call should be assigned to a variable explicitly. In the following code, if the plot argument is set to FALSE, no heatmap is plotted and only the differential analysis is performed.

# code only for demonstration

tb = get_signatures(res, k = ..., plot = FALSE)

An example of the output of tb is:

#> which_row fdr mean_1 mean_2 scaled_mean_1 scaled_mean_2 km

#> 1 38 0.042760348 8.373488 9.131774 -0.5533452 0.5164555 1

#> 2 40 0.018707592 7.106213 8.469186 -0.6173731 0.5762149 1

#> 3 55 0.019134737 10.221463 11.207825 -0.6159697 0.5749050 1

#> 4 59 0.006059896 5.921854 7.869574 -0.6899429 0.6439467 1

#> 5 60 0.018055526 8.928898 10.211722 -0.6204761 0.5791110 1

#> 6 98 0.009384629 15.714769 14.887706 0.6635654 -0.6193277 2

...
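Once such a table has been assigned to a variable, ordinary data-frame operations apply. The sketch below rebuilds the six example rows shown above as a plain data frame and filters them; the 0.01 FDR cut-off and the higher_in column are assumptions chosen only for illustration.

```r
# The six example rows from the output above, rebuilt as a plain data frame
# (scaled_mean_* columns omitted for brevity).
tb <- data.frame(
  which_row = c(38, 40, 55, 59, 60, 98),
  fdr = c(0.042760348, 0.018707592, 0.019134737,
          0.006059896, 0.018055526, 0.009384629),
  mean_1 = c(8.373488, 7.106213, 10.221463, 5.921854, 8.928898, 15.714769),
  mean_2 = c(9.131774, 8.469186, 11.207825, 7.869574, 10.211722, 14.887706),
  km = c(1, 1, 1, 1, 1, 2)
)

# Keep signatures below a stricter, arbitrary FDR cut-off (assumption).
strong <- tb[tb$fdr < 0.01, ]

# Record which group has the higher mean for each retained row.
strong$higher_in <- ifelse(strong$mean_1 > strong$mean_2, 1, 2)

# Row indices into the input matrix, ordered from most to least significant.
top_rows <- strong$which_row[order(strong$fdr)]
print(strong)
```

The which_row values can then be used to index the input matrix, for example the one returned by get_matrix(res).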

The columns in tb are:

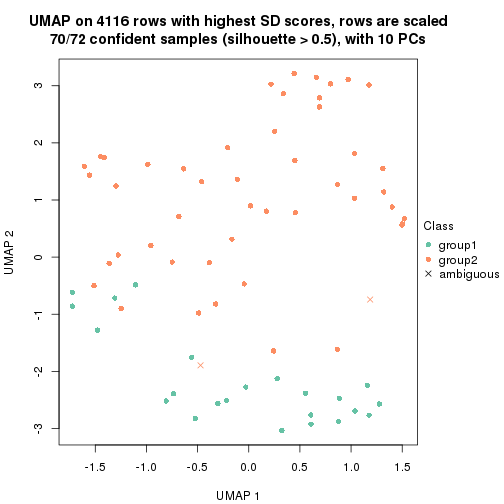

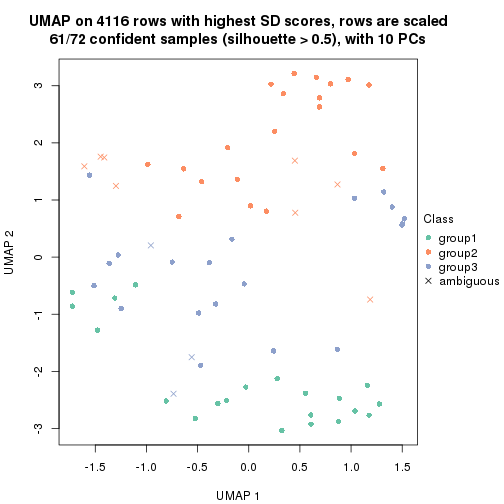

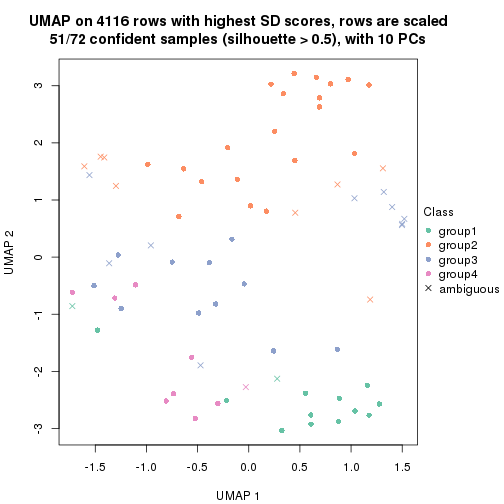

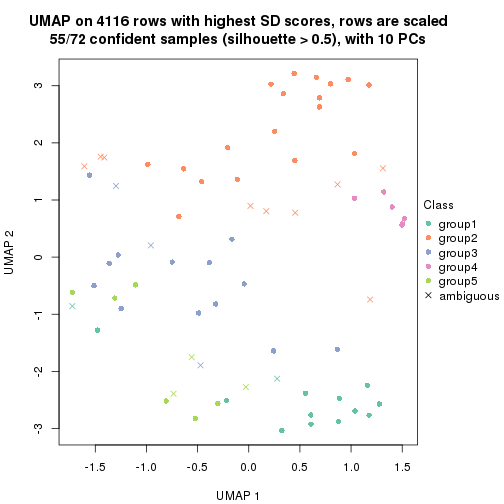

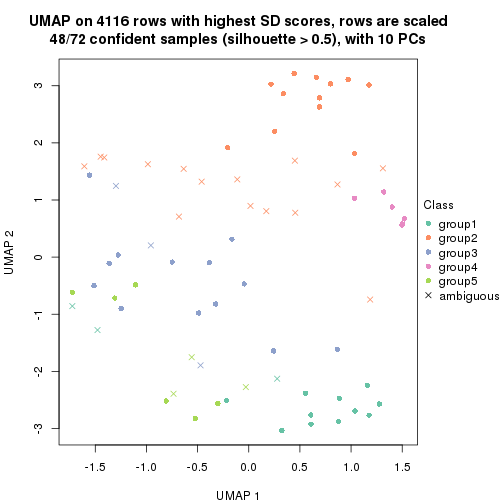

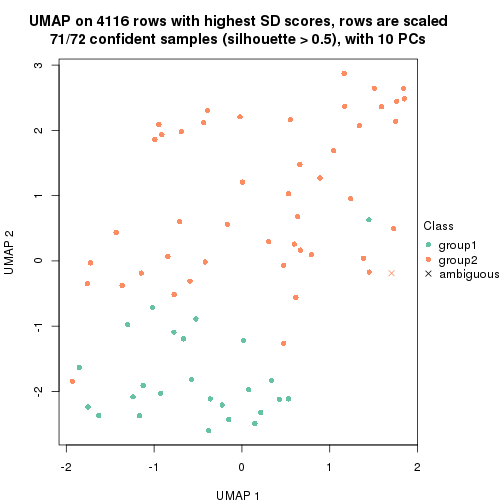

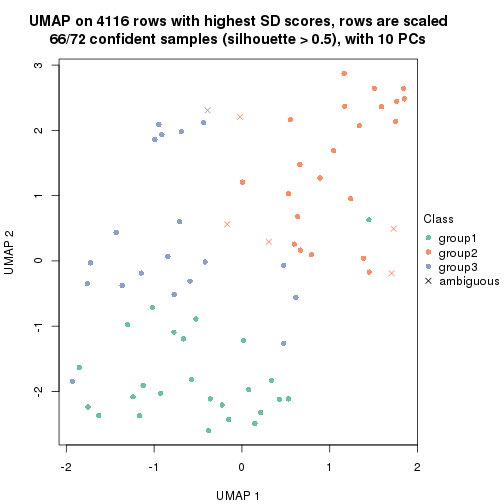

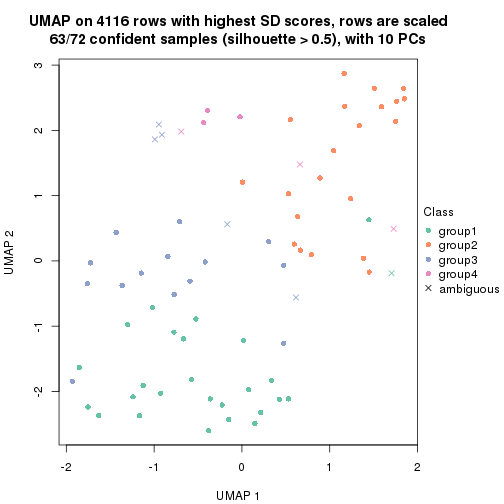

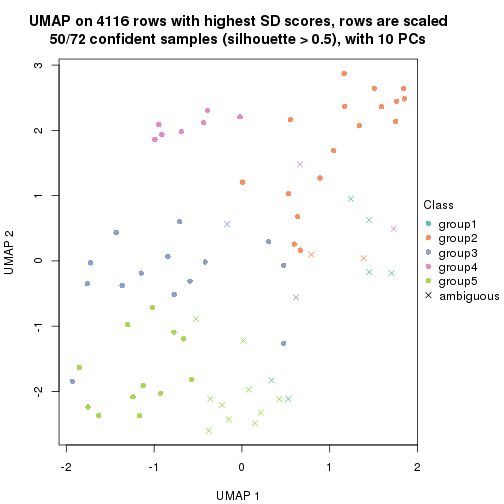

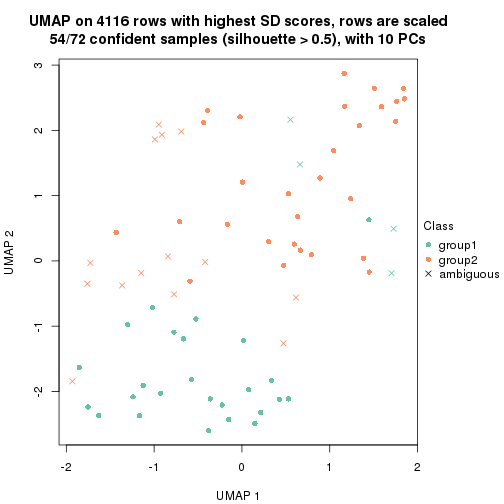

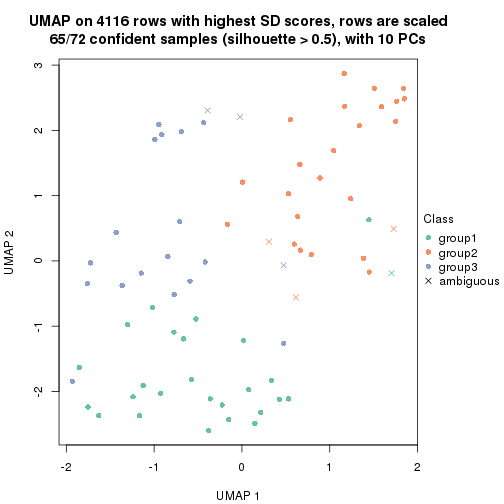

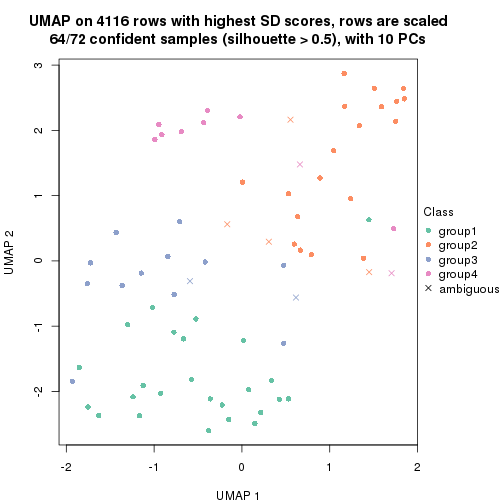

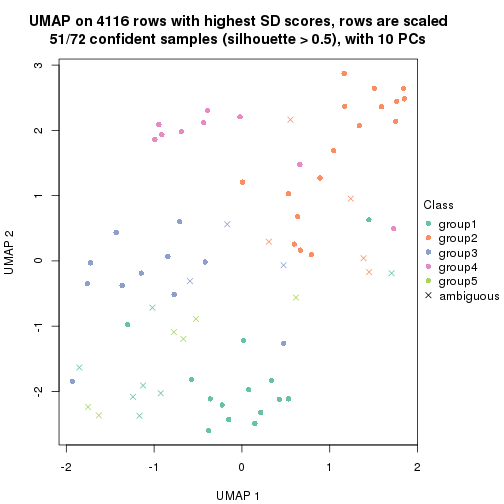

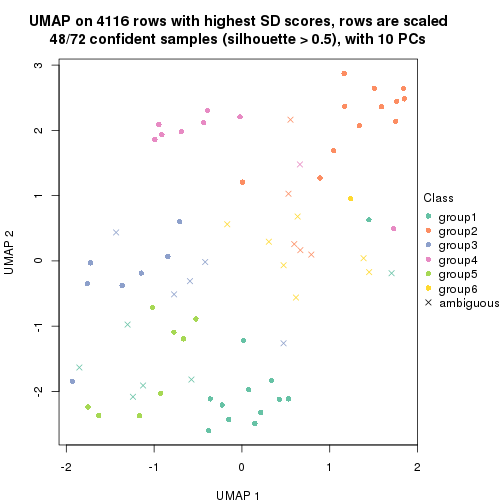

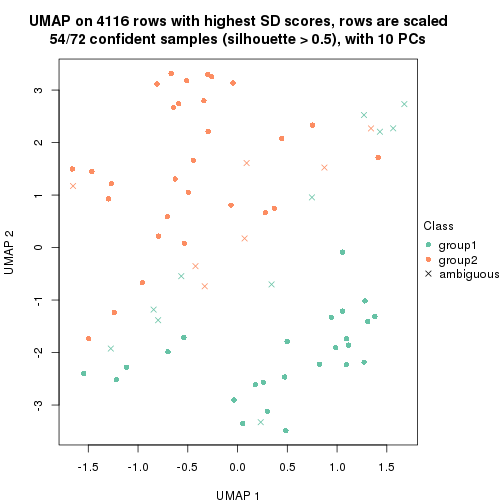

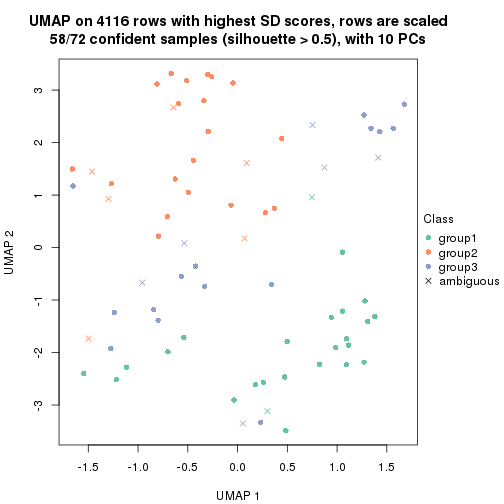

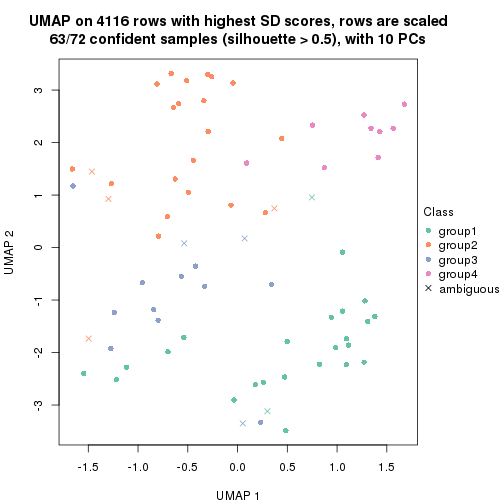

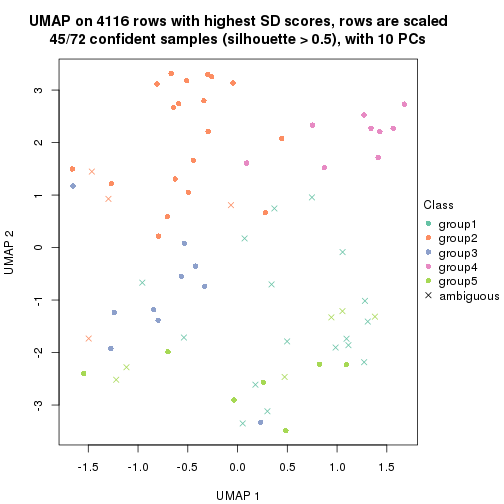

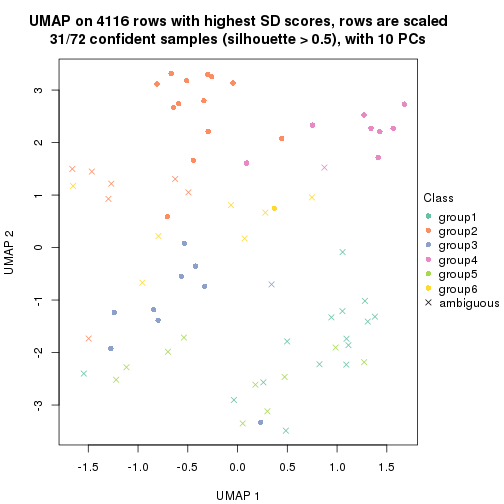

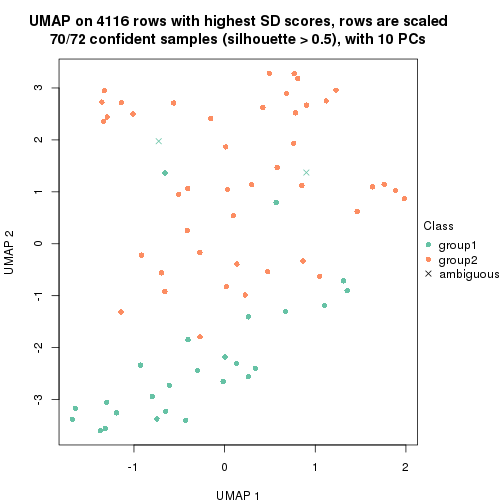

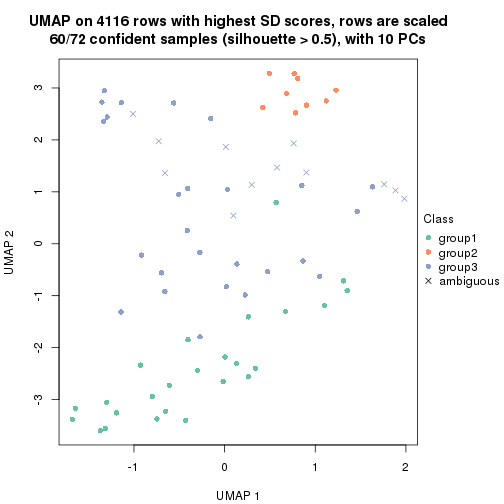

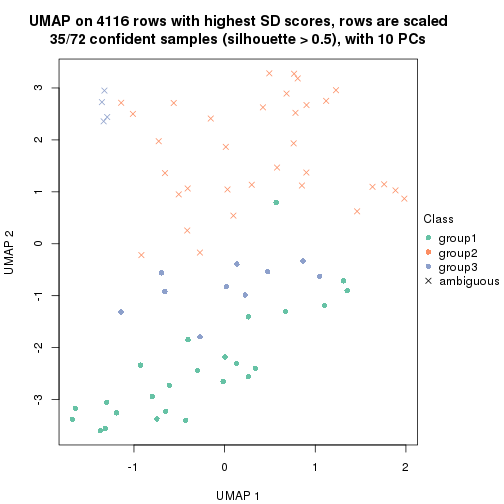

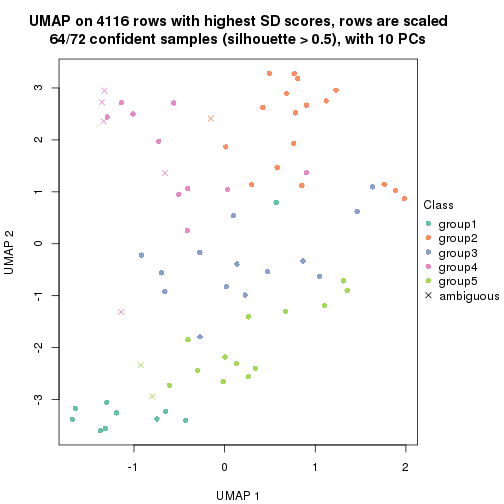

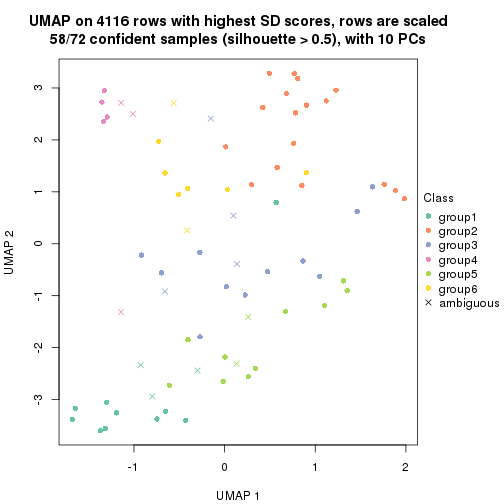

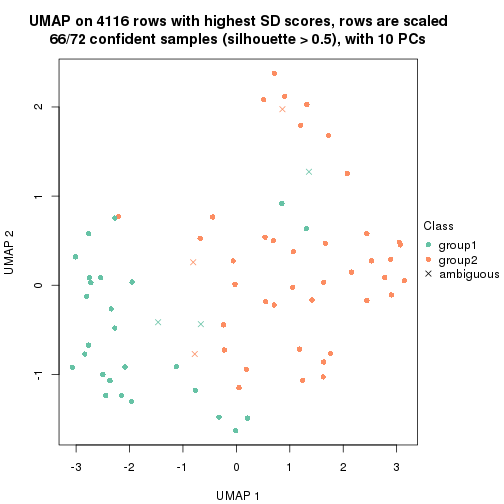

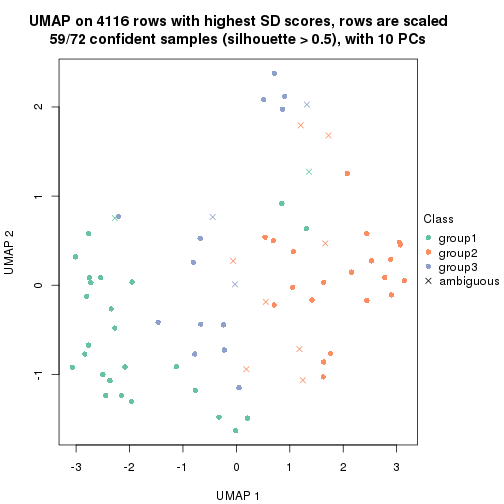

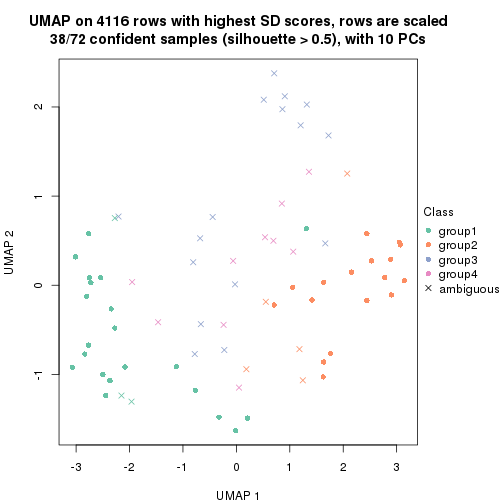

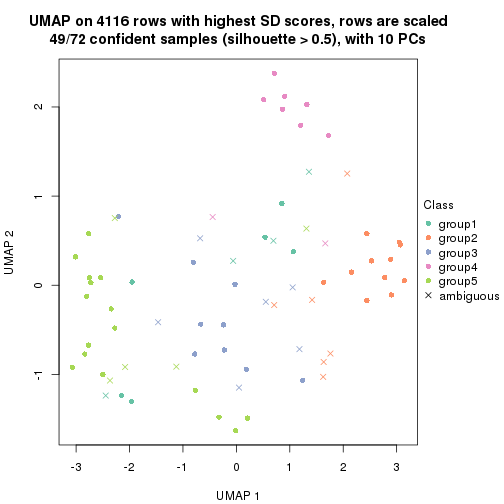

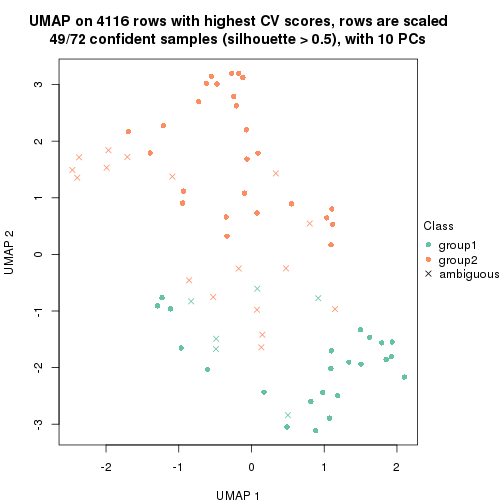

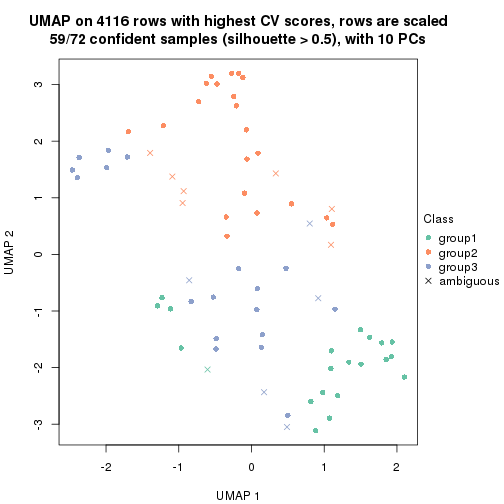

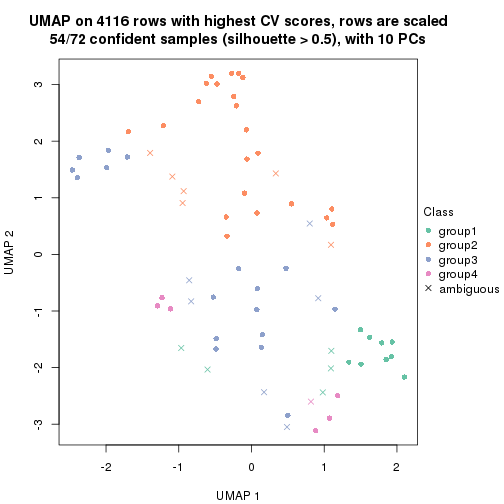

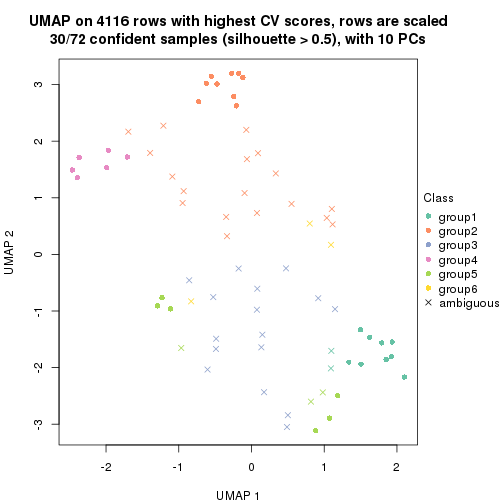

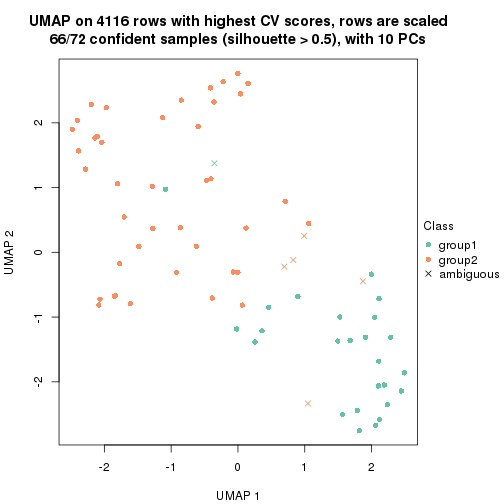

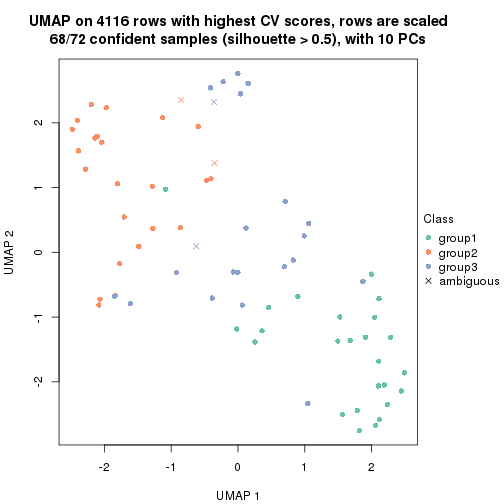

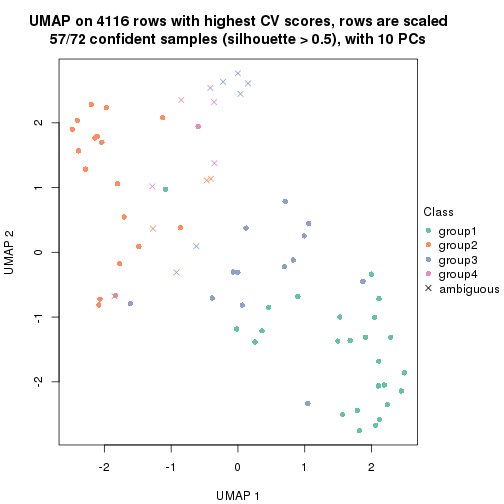

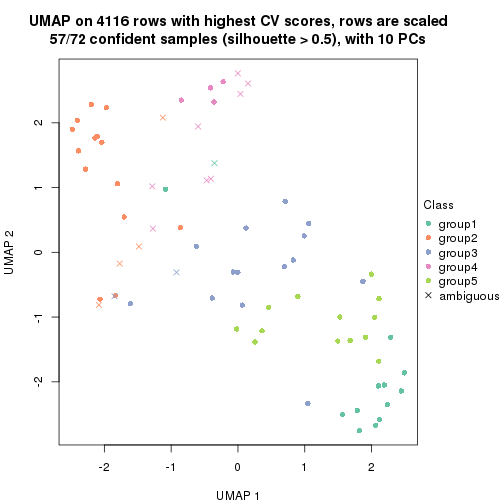

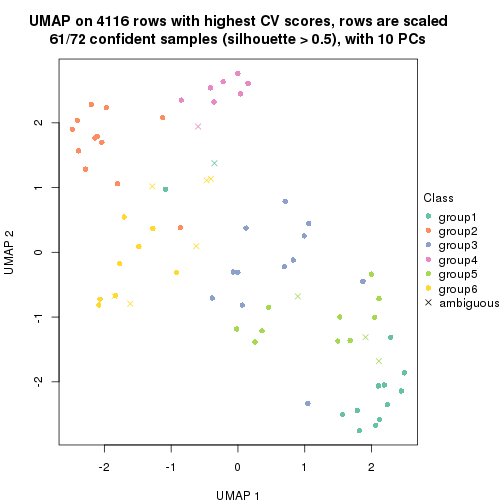

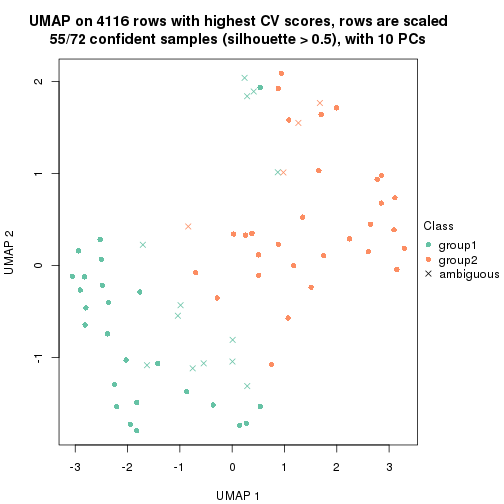

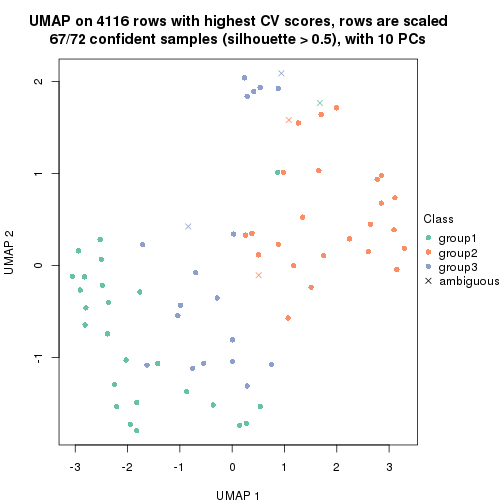

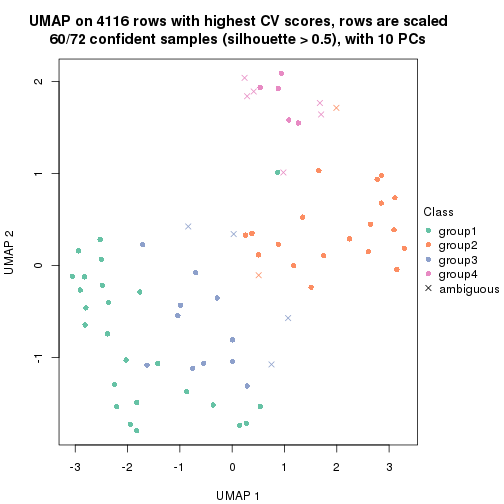

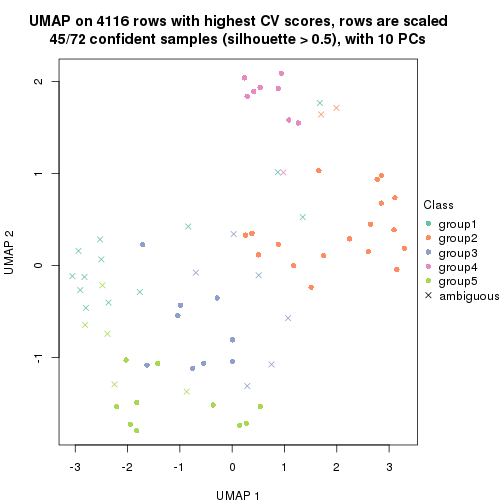

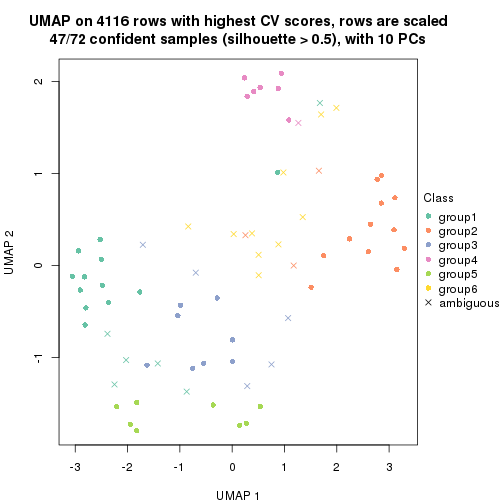

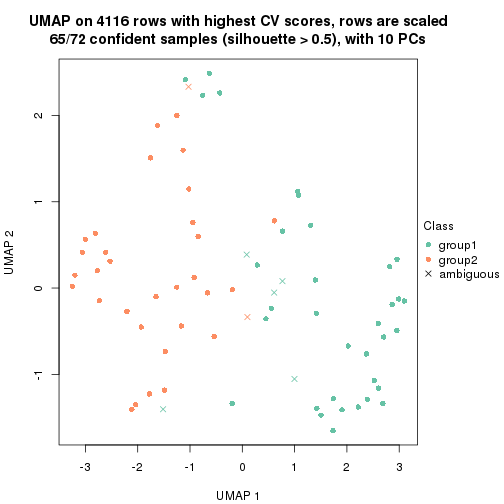

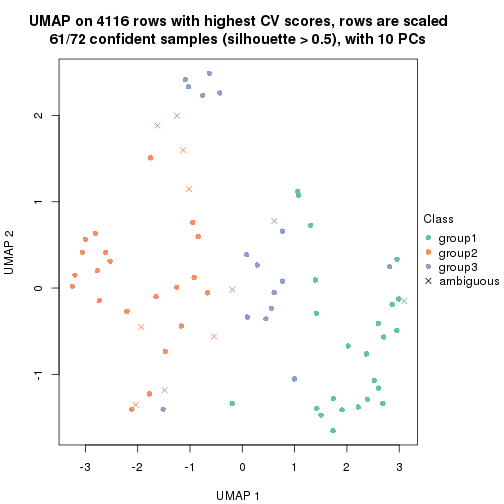

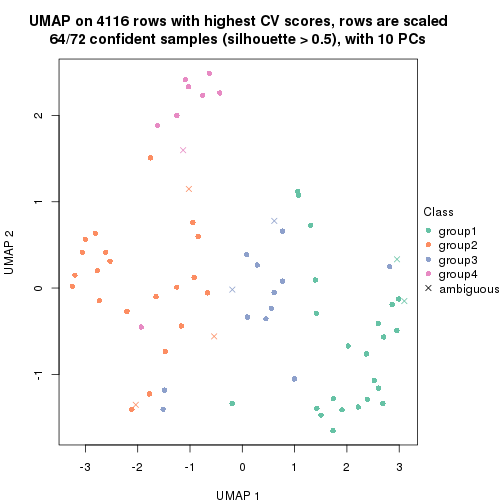

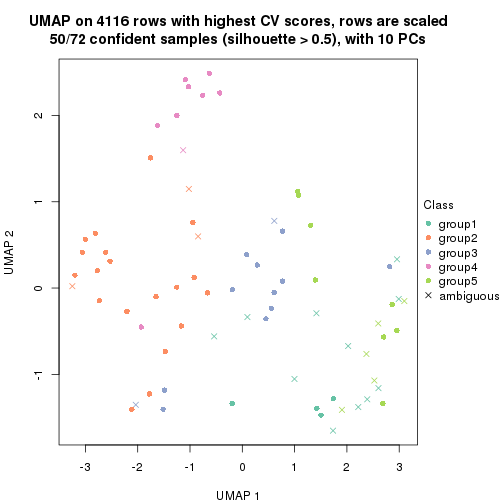

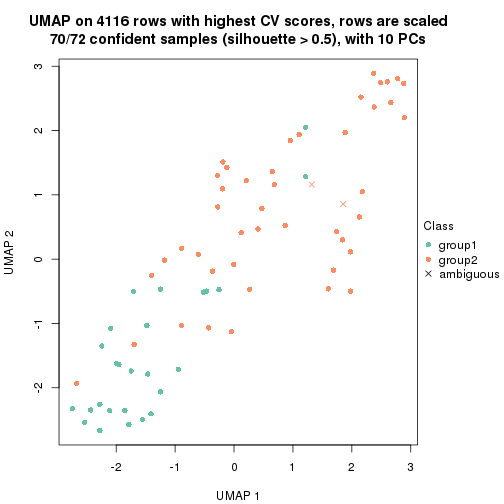

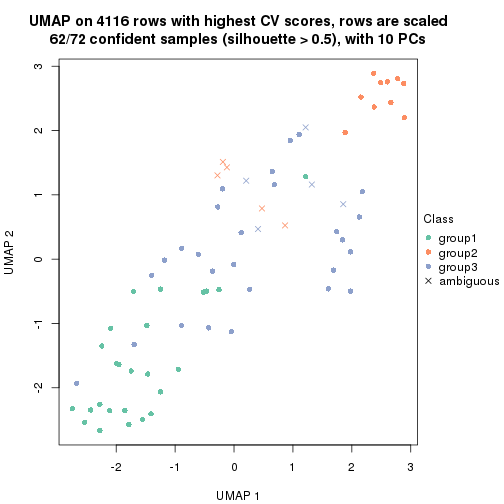

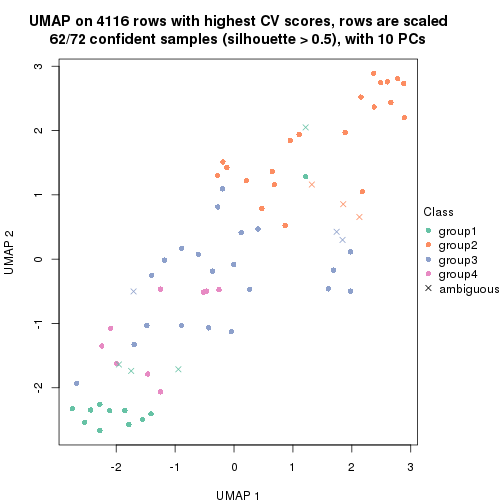

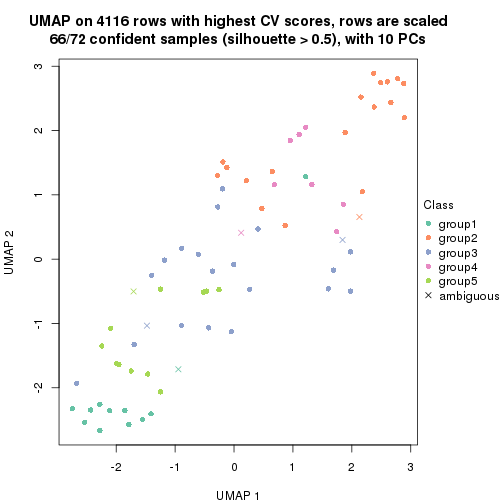

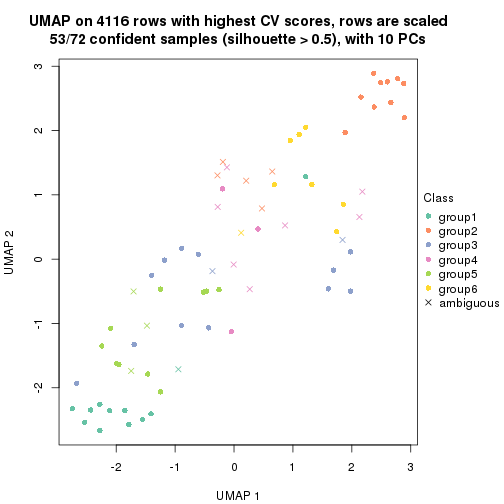

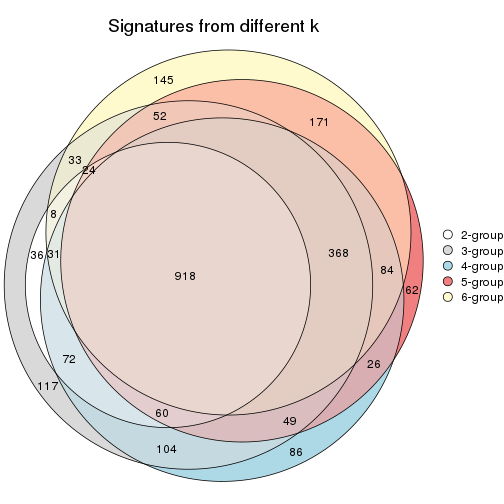

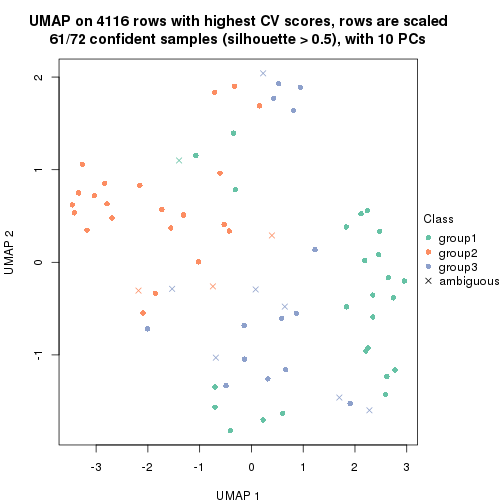

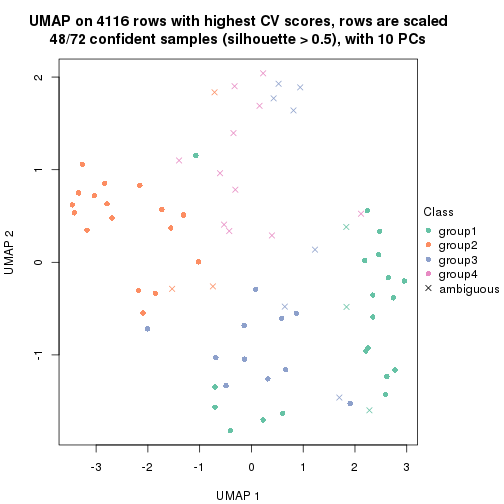

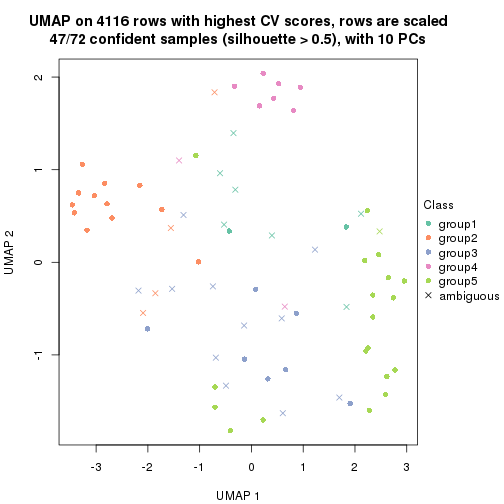

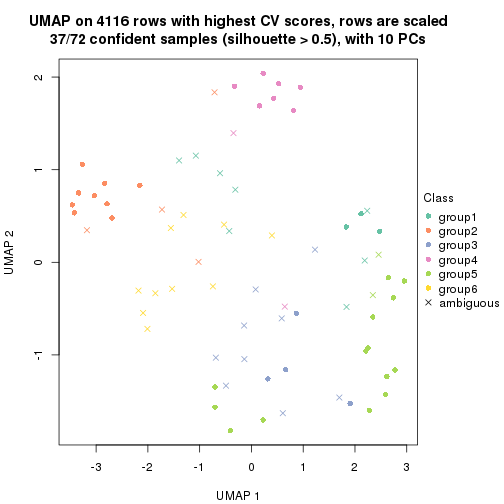

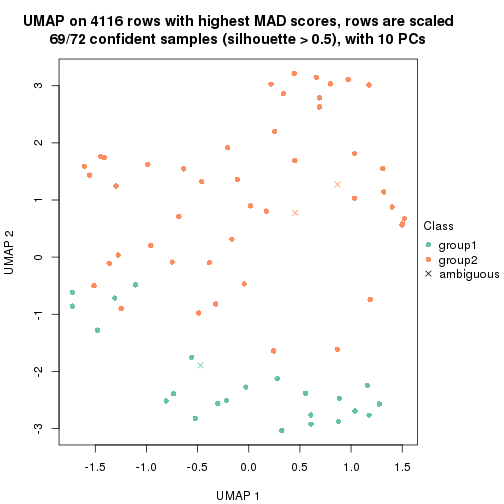

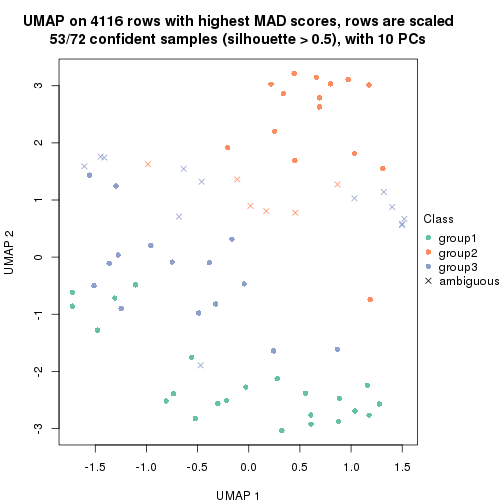

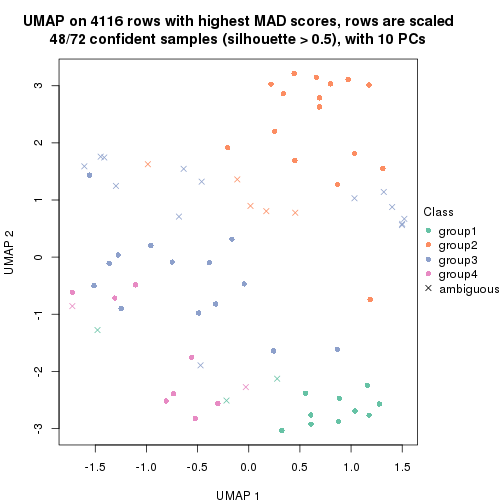

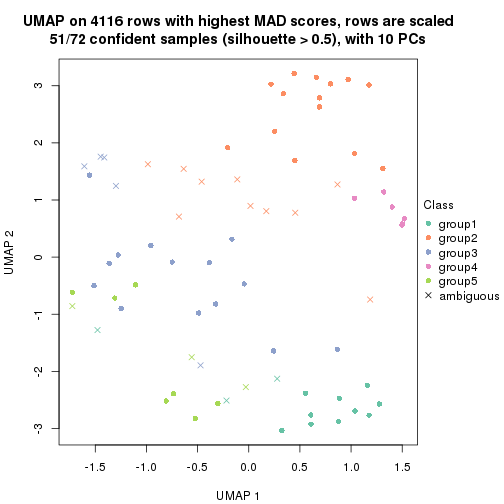

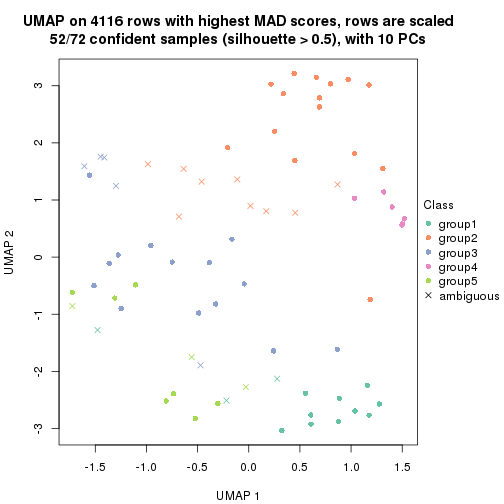

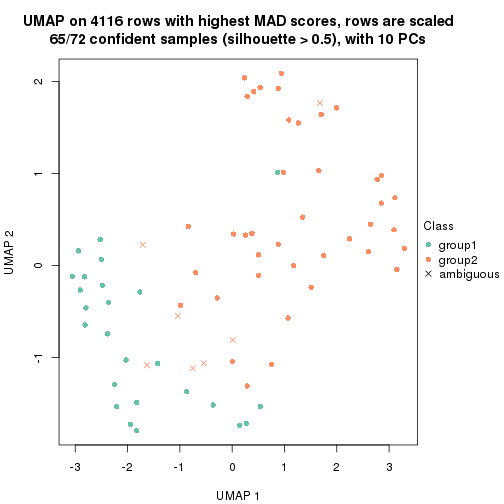

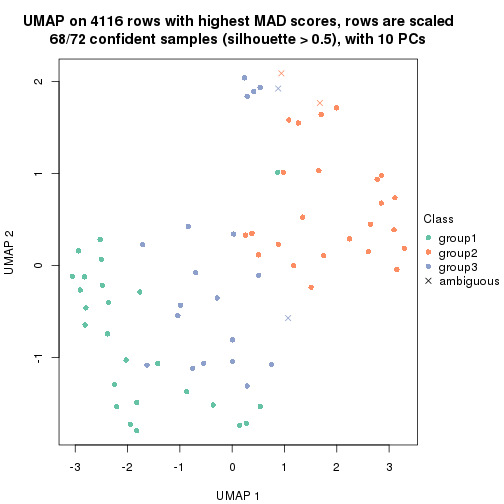

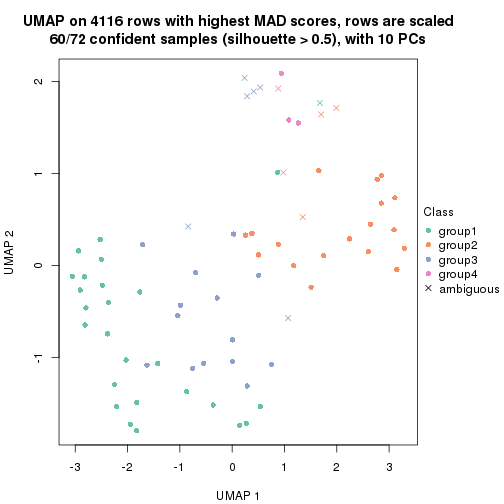

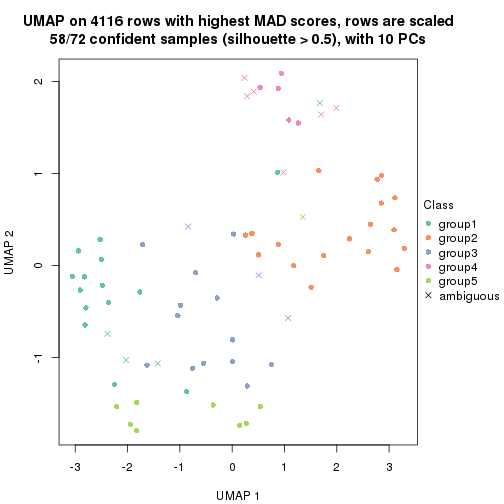

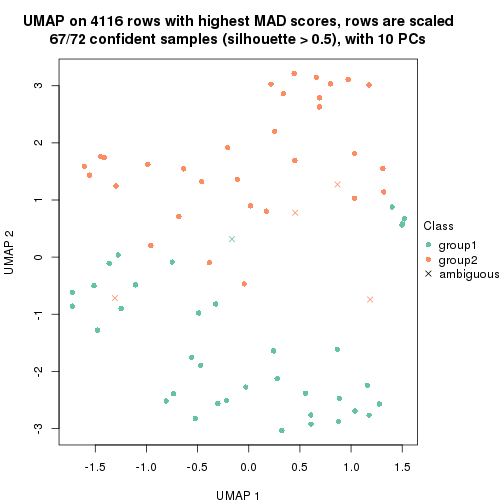

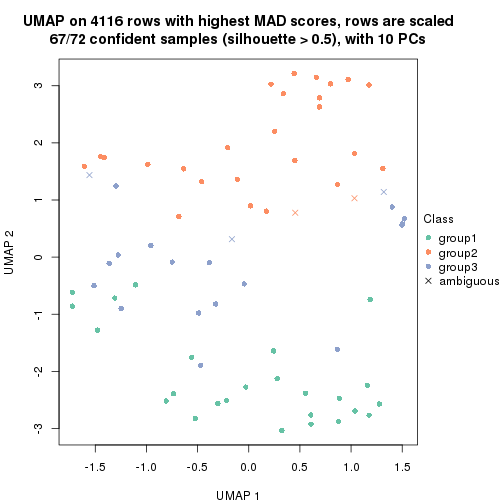

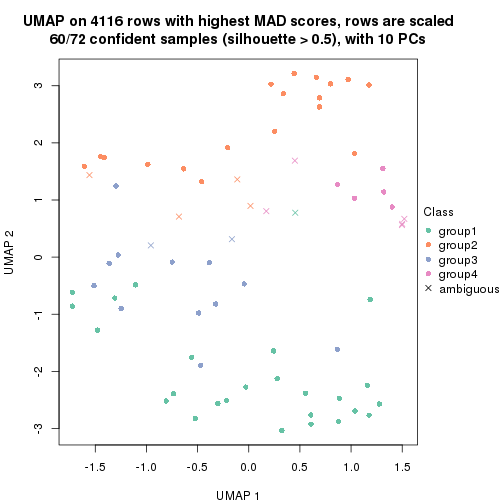

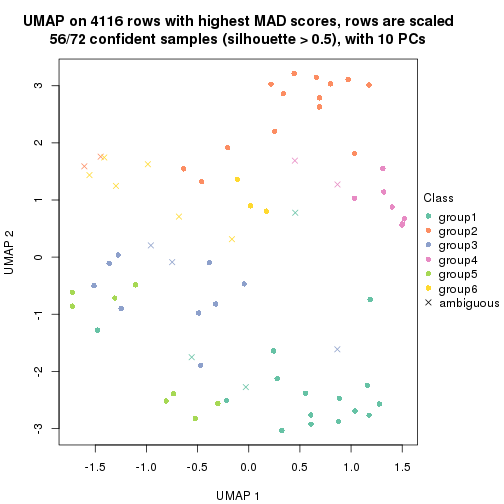

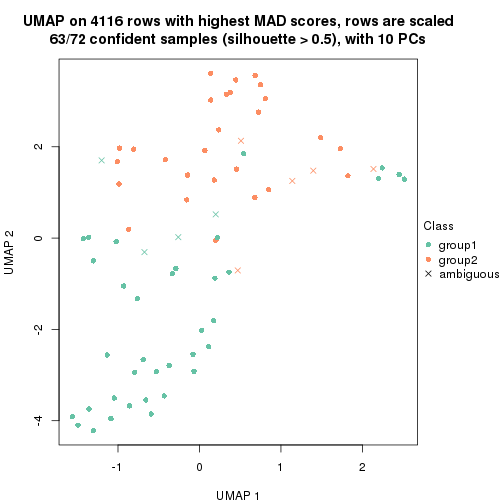

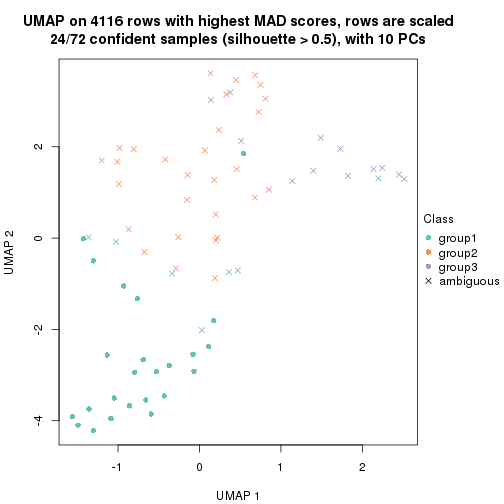

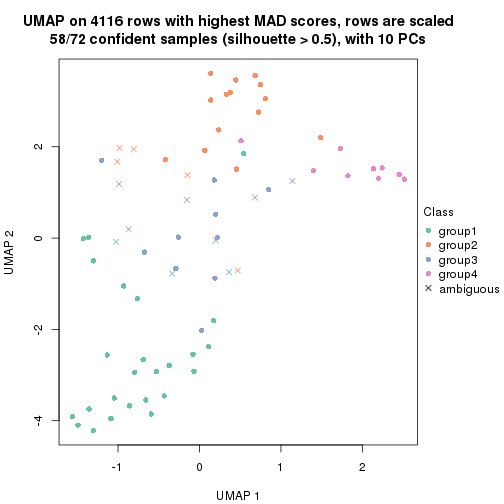

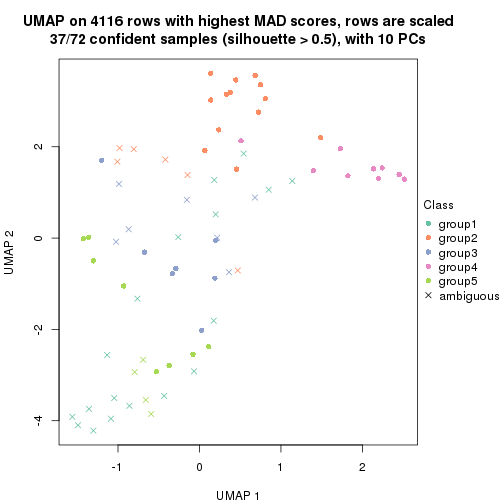

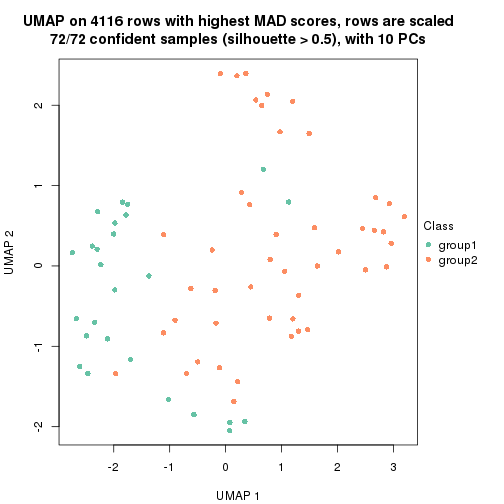

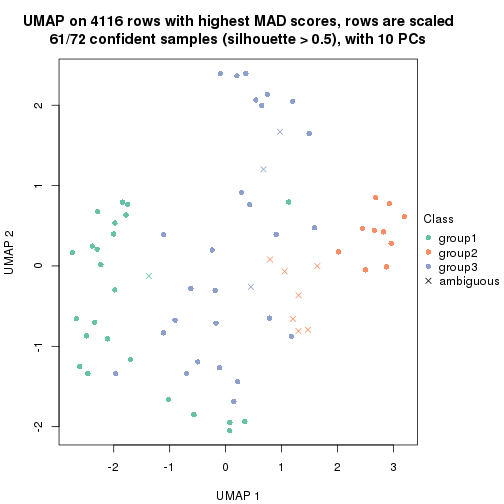

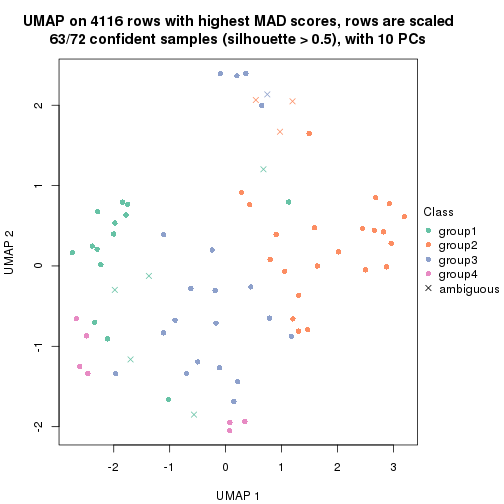

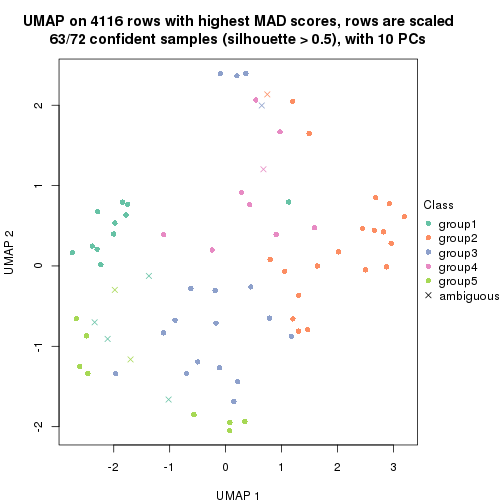

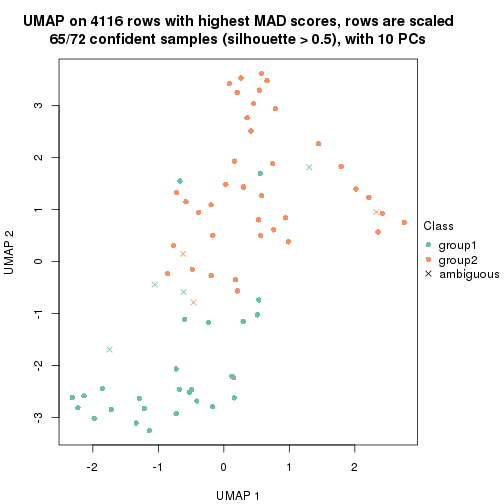

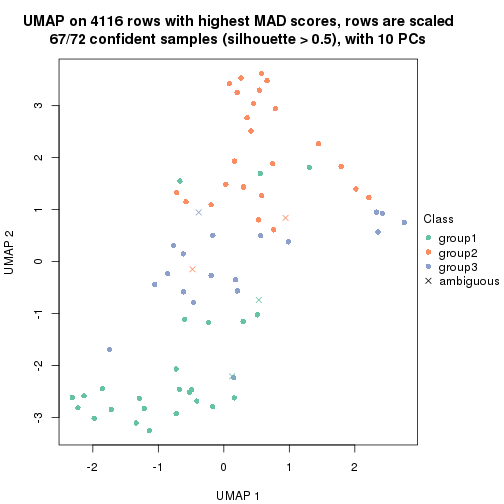

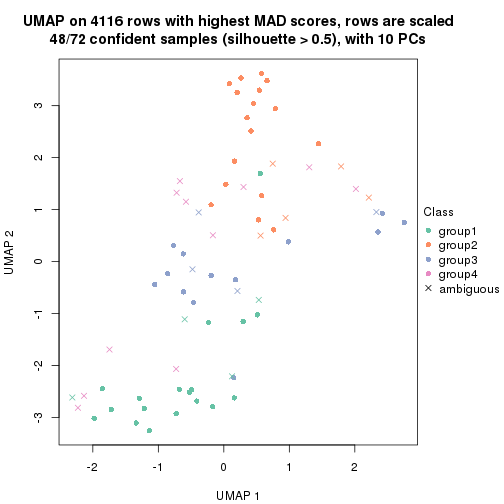

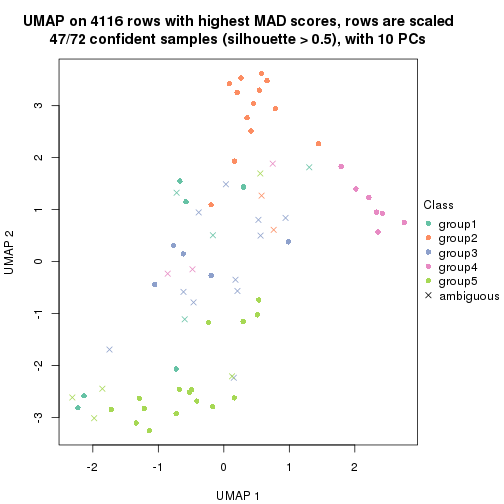

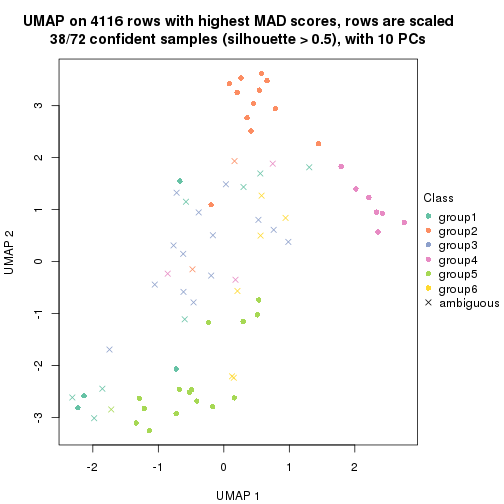

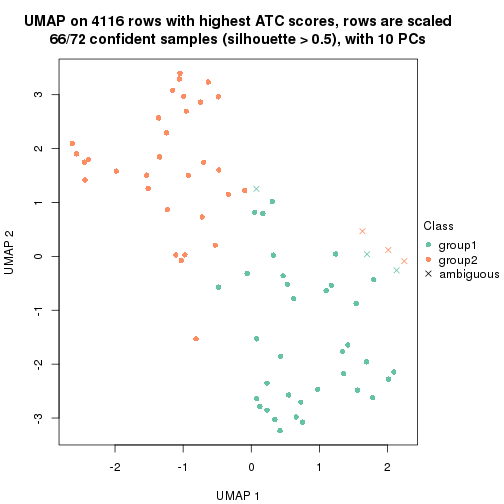

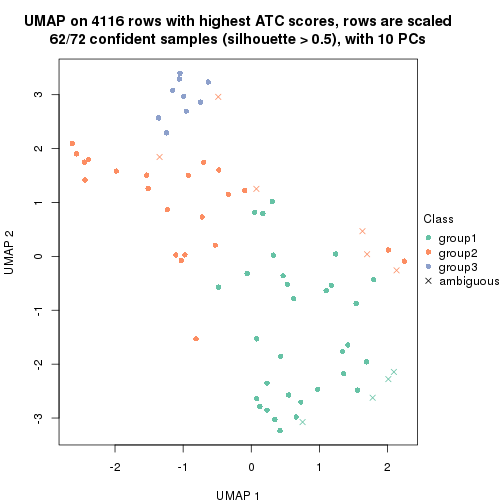

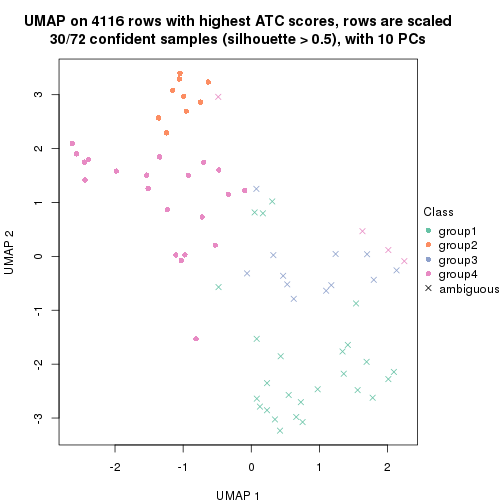

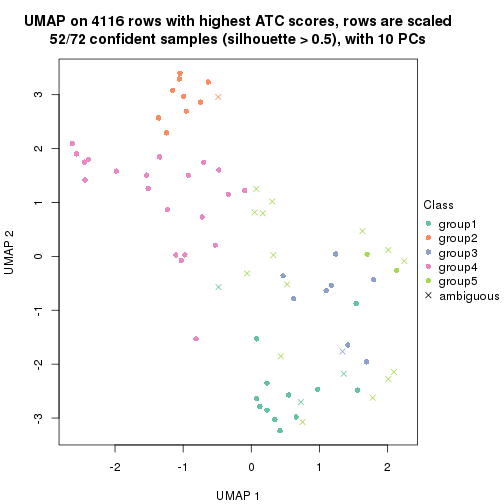

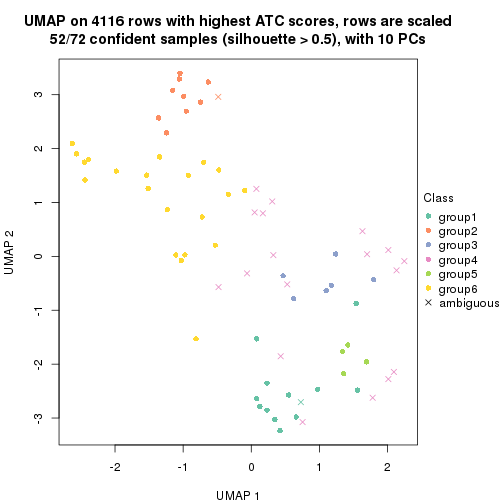

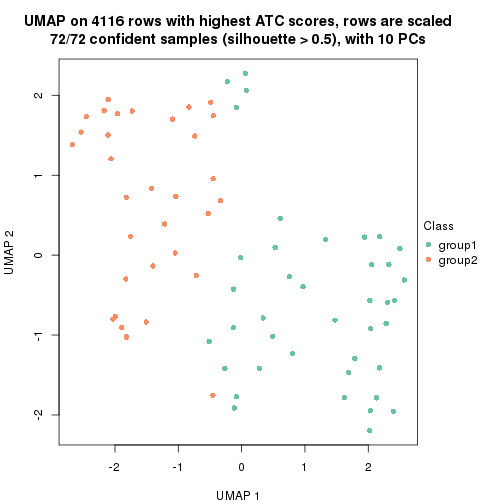

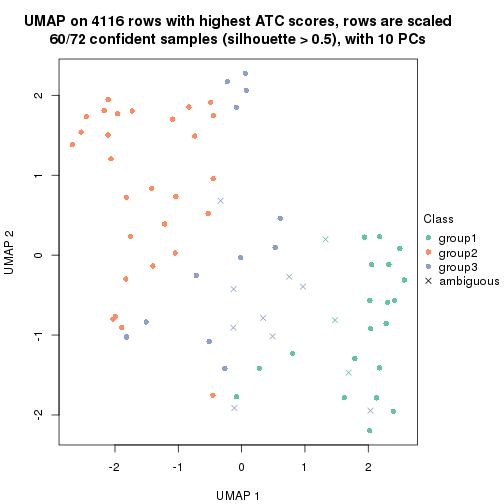

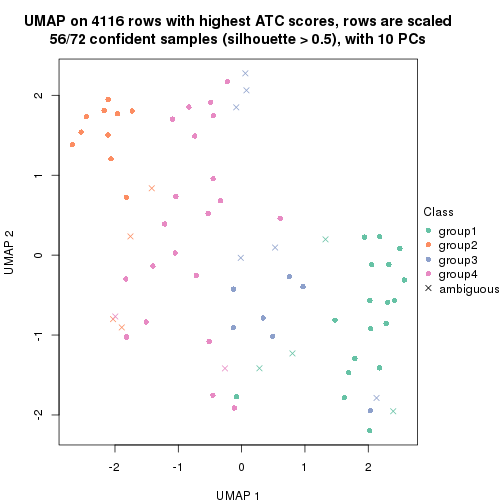

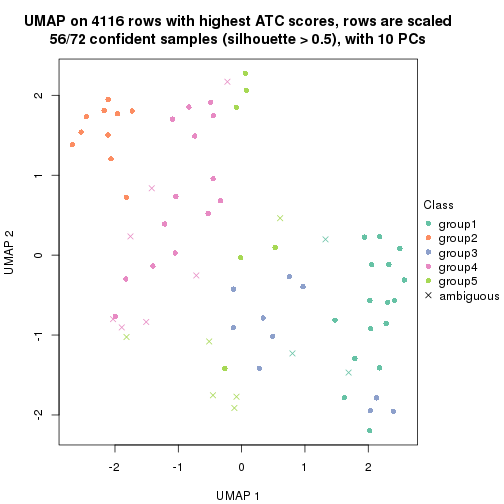

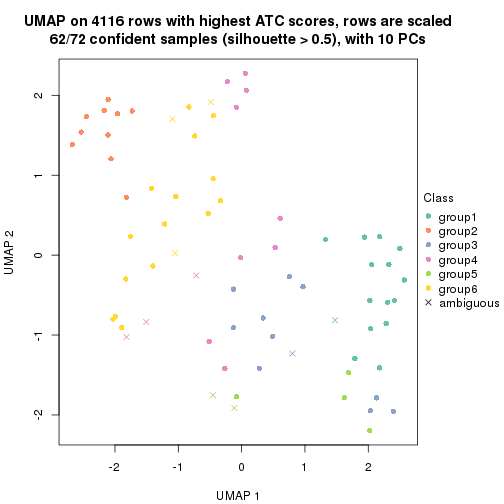

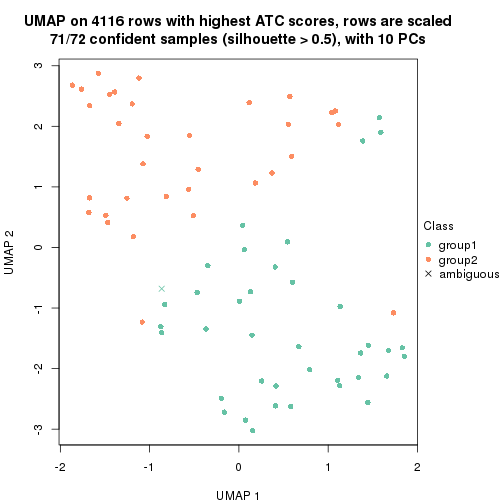

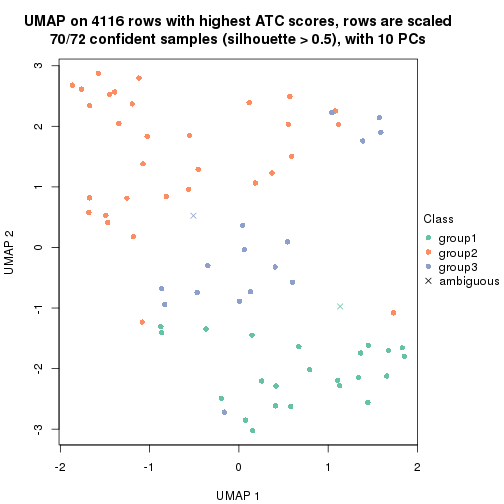

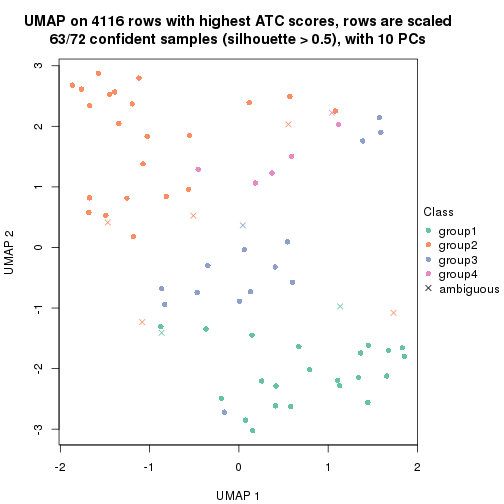

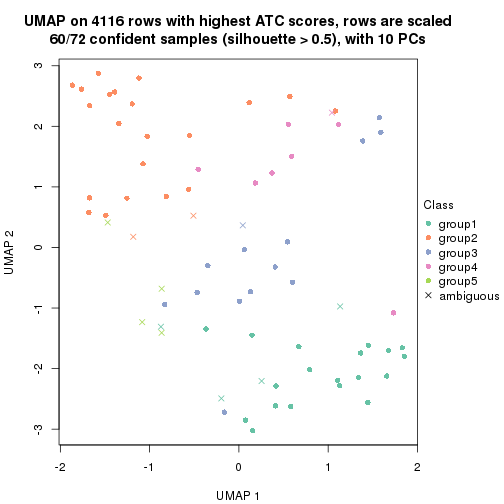

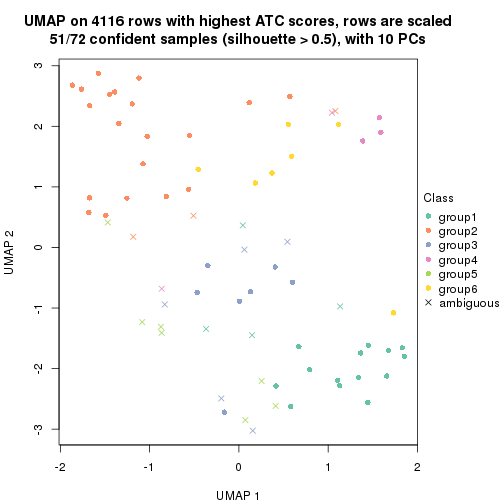

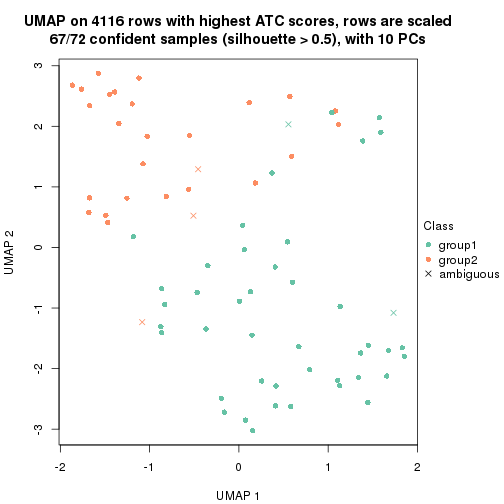

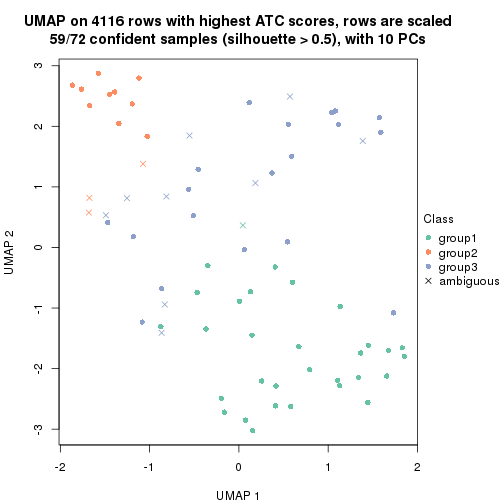

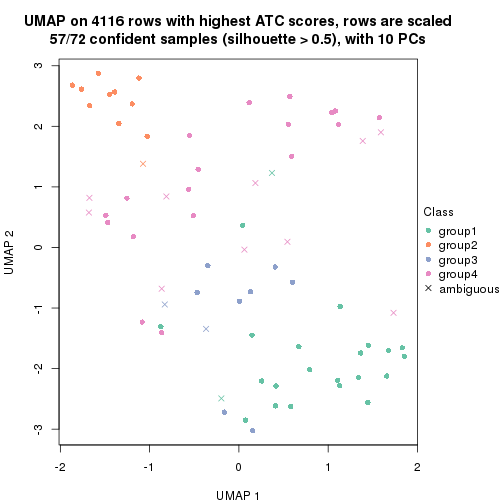

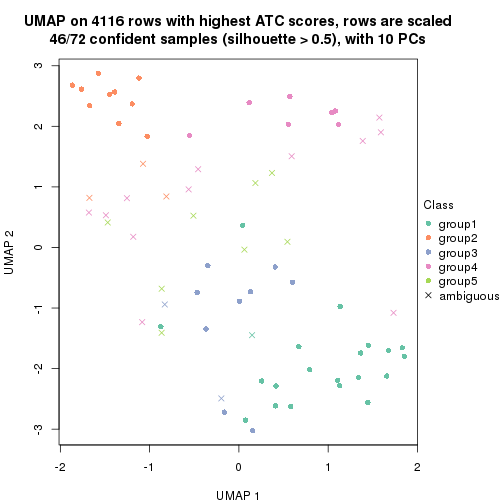

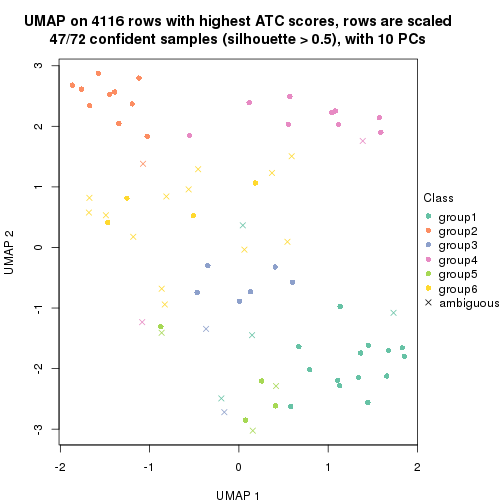

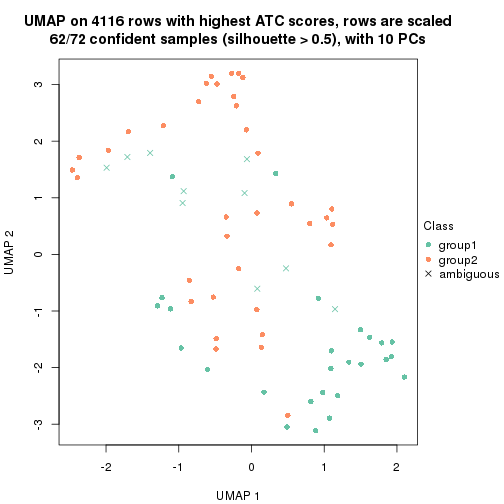

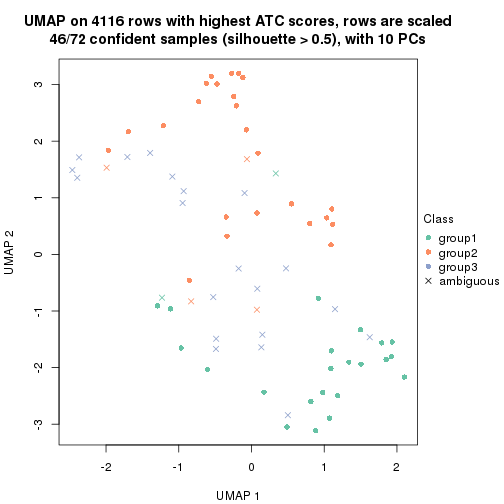

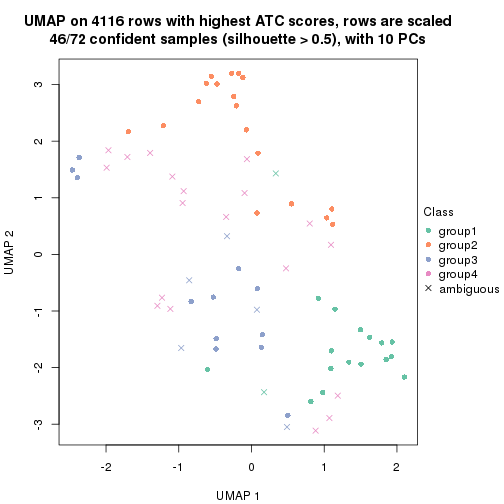

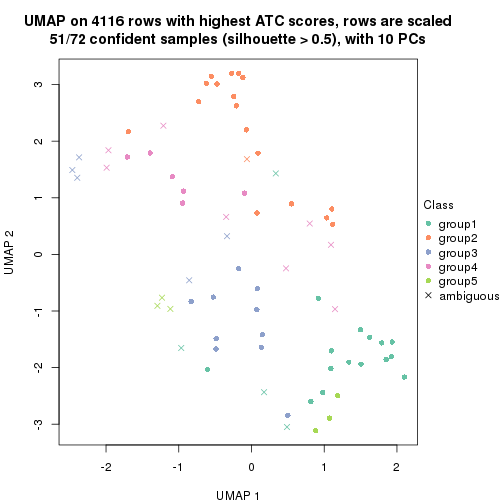

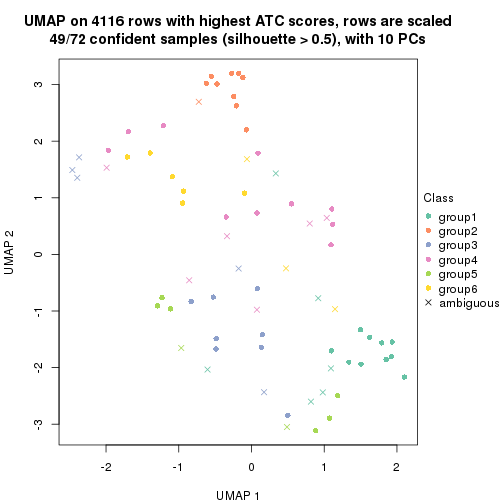

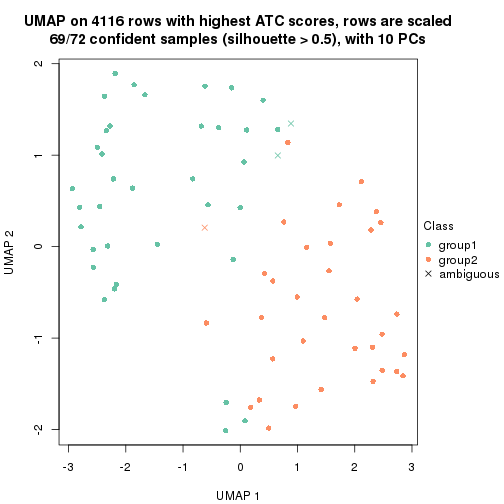

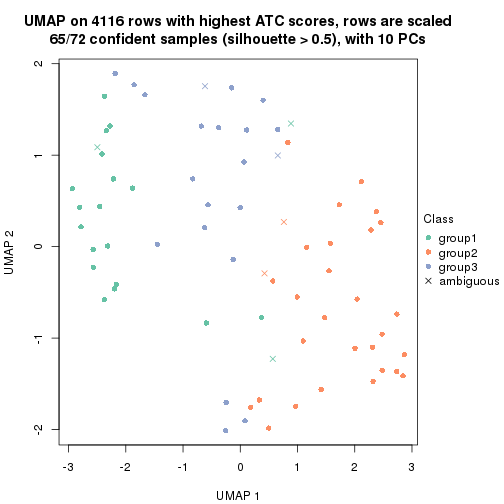

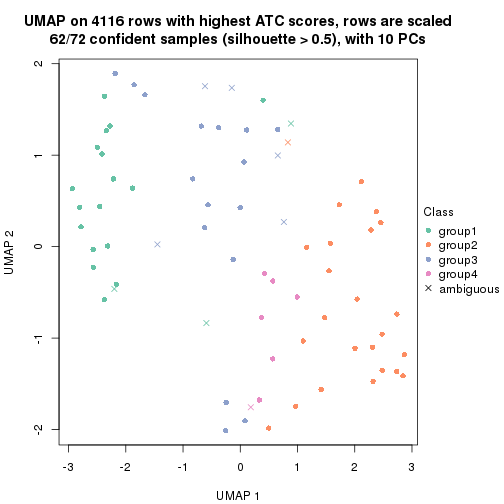

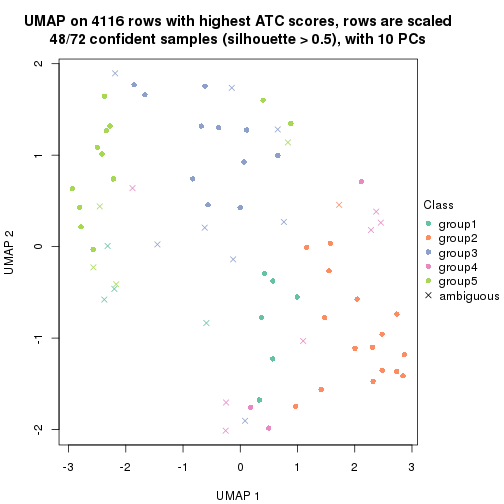

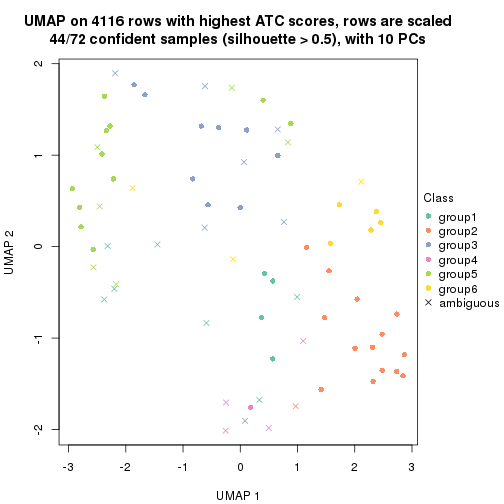

- which_row: row indices corresponding to the input matrix.
- fdr: FDR for the differential test.
- mean_x: the mean value in group x.
- scaled_mean_x: the mean value in group x after rows are scaled.
- km: row groups if k-means clustering is applied to rows.

UMAP plots showing how samples are separated:

dimension_reduction(res, k = 2, method = "UMAP")

dimension_reduction(res, k = 3, method = "UMAP")

dimension_reduction(res, k = 4, method = "UMAP")

dimension_reduction(res, k = 5, method = "UMAP")

dimension_reduction(res, k = 6, method = "UMAP")

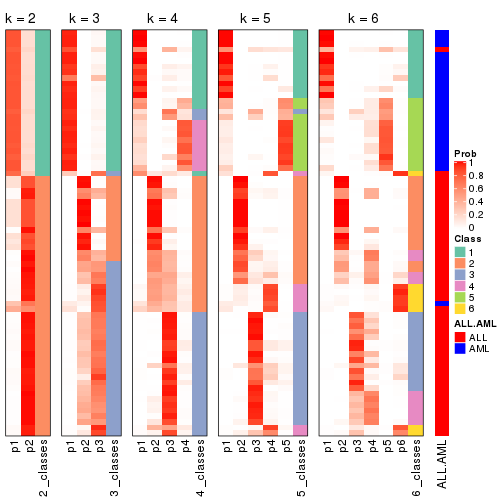

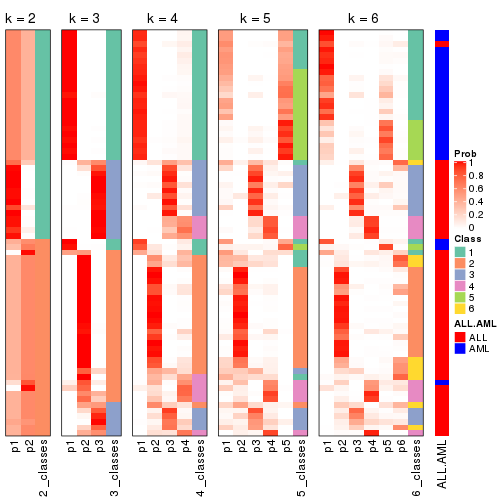

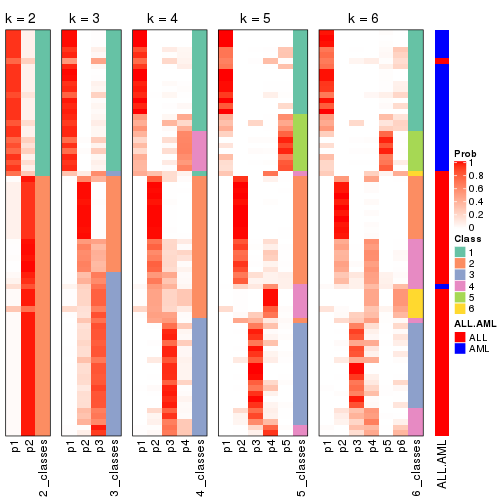

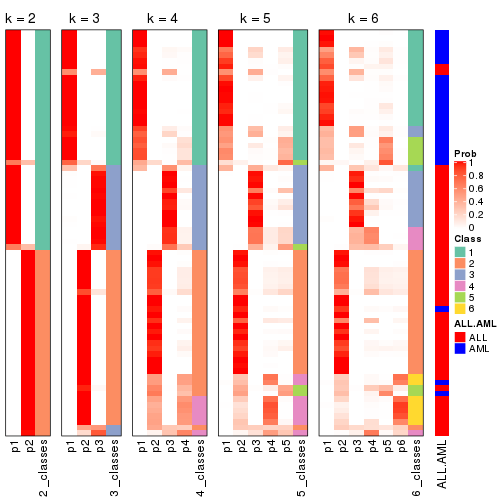

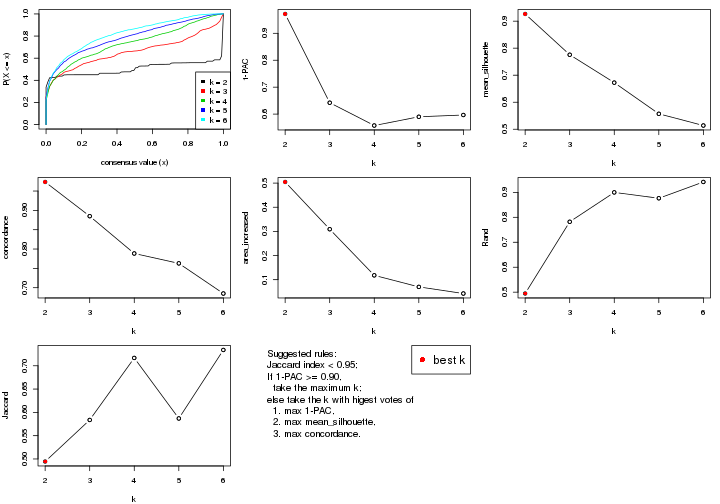

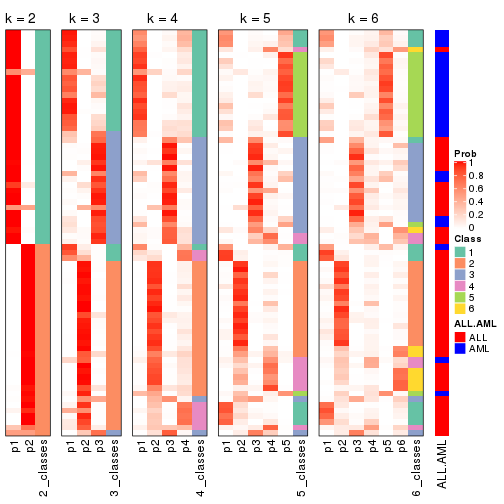

The following heatmap shows how subgroups are split when increasing k:

collect_classes(res)

Test the correlation between subgroups and known annotations. If the known annotation is numeric, a one-way ANOVA test is applied; if it is discrete, a chi-squared contingency table test is applied.

test_to_known_factors(res)

#> n ALL.AML(p) k

#> SD:hclust 70 4.70e-15 2

#> SD:hclust 61 5.68e-14 3

#> SD:hclust 51 3.35e-10 4

#> SD:hclust 55 2.43e-10 5

#> SD:hclust 48 9.44e-10 6
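The two kinds of test named above can be sketched with base R on toy data. This is only an illustration, not the internals of test_to_known_factors(): the vectors subgroup, disease, and age are hypothetical, and the exact test options cola uses (for example, continuity correction) are not confirmed here.

```r
# Hypothetical toy data: 24 samples split into two predicted subgroups.
subgroup <- factor(rep(c(1, 2), each = 12))

# Hypothetical discrete annotation (mostly "ALL" in subgroup 1, "AML" in subgroup 2).
disease <- factor(c(rep("ALL", 10), rep("AML", 2),
                    rep("ALL", 2),  rep("AML", 10)))

# Hypothetical numeric annotation, one value per sample.
age <- c(seq(20, 42, by = 2), seq(40, 62, by = 2))

# Discrete annotation: chi-squared test on the subgroup-by-annotation table.
p_discrete <- chisq.test(table(subgroup, disease))$p.value

# Numeric annotation: one-way ANOVA of the annotation across subgroups.
p_numeric <- oneway.test(age ~ subgroup, var.equal = TRUE)$p.value

c(discrete = p_discrete, numeric = p_numeric)
```

A small p-value in either test suggests that the predicted subgroups are associated with the annotation, which is how the ALL.AML(p) column above can be read.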

If matrix rows can be associated with genes, consider using functional_enrichment(res, ...) to perform functional enrichment on the signature genes. See this vignette for more detailed explanations.

The object with results only for a single top-value method and a single partition method can be extracted as:

res = res_list["SD", "kmeans"]

# you can also extract it by

# res = res_list["SD:kmeans"]

A summary of res and all the functions that can be applied to it:

res

#> A 'ConsensusPartition' object with k = 2, 3, 4, 5, 6.

#> On a matrix with 4116 rows and 72 columns.

#> Top rows (412, 824, 1235, 1646, 2058) are extracted by 'SD' method.

#> Subgroups are detected by 'kmeans' method.

#> Performed in total 1250 partitions by row resampling.

#> Best k for subgroups seems to be 2.

#>

#> Following methods can be applied to this 'ConsensusPartition' object:

#> [1] "cola_report" "collect_classes" "collect_plots"

#> [4] "collect_stats" "colnames" "compare_signatures"

#> [7] "consensus_heatmap" "dimension_reduction" "functional_enrichment"

#> [10] "get_anno_col" "get_anno" "get_classes"

#> [13] "get_consensus" "get_matrix" "get_membership"

#> [16] "get_param" "get_signatures" "get_stats"

#> [19] "is_best_k" "is_stable_k" "membership_heatmap"

#> [22] "ncol" "nrow" "plot_ecdf"

#> [25] "rownames" "select_partition_number" "show"

#> [28] "suggest_best_k" "test_to_known_factors"

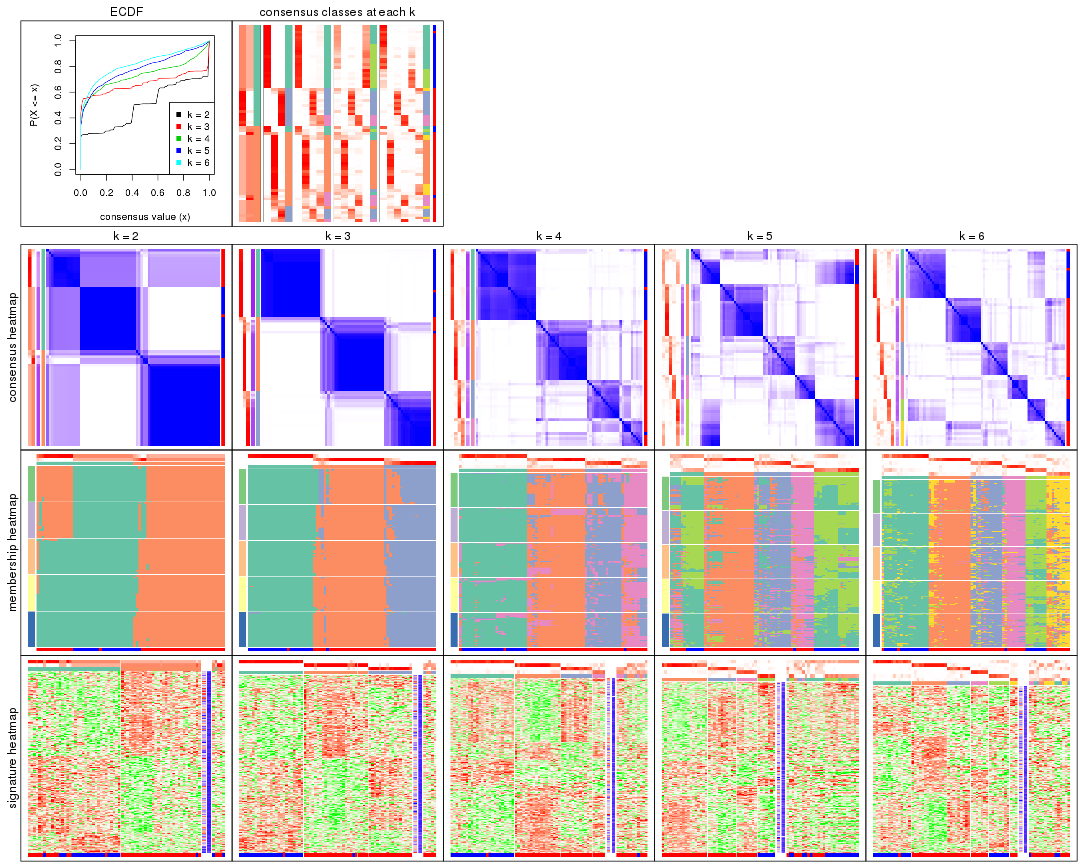

The collect_plots() function collects all the plots made from res for all k (number of partitions) into one single page to provide an easy and fast comparison between different k.

collect_plots(res)

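The plots are:

- The first row: a plot of the ECDF (empirical cumulative distribution function) curves of the consensus matrix for each k and the heatmap of predicted classes for each k.
- The second row: heatmaps of the consensus matrix for each k.
- The third row: heatmaps of the membership matrix for each k.
- The fourth row: heatmaps of the signatures for each k.

All the plots in panels can be made by individual functions and they are plotted later in this section.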

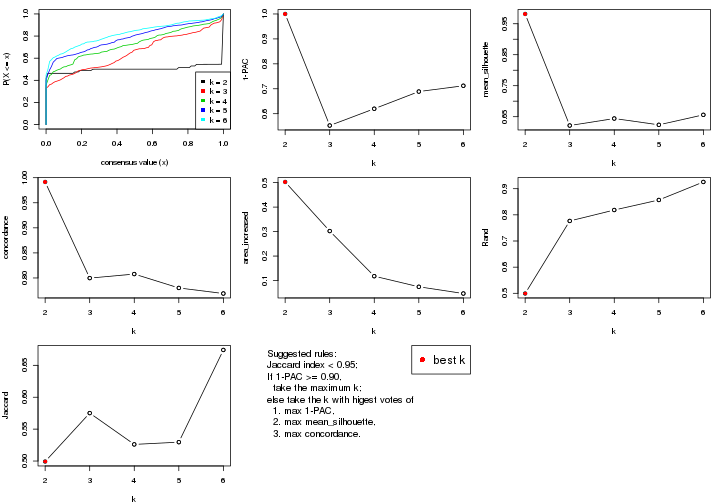

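select_partition_number() produces several plots showing different statistics for choosing the “optimized” k. The statistics are:

- ECDF curves of the consensus matrix for each k;
- 1-PAC;
- mean silhouette score;
- concordance;
- area increased: denoting \(A_k\) as the area under the ECDF curve for the current k, the area increased is defined as \(A_k - A_{k-1}\);
- Rand index;
- Jaccard index.

The detailed explanations of these statistics can be found in the cola vignette.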

Generally speaking, a lower PAC score, a higher mean silhouette score, or a higher concordance corresponds to a better partition. The Rand index and the Jaccard index measure how similar the current partition is to the partition with k-1. If the two partitions are too similar, k is not accepted as better than k-1.

select_partition_number(res)
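To make two of these statistics more concrete, here is a minimal base R sketch on made-up consensus values. It assumes that PAC is the share of sample pairs with an ambiguous consensus value and that \(A_k\) is the area under the ECDF curve of the consensus values. The vectors, the helper names pac() and ecdf_area(), and the bounds 0.1 and 0.9 are all hypothetical, and cola may compute its scores differently.

```r
# Hypothetical consensus values (one per sample pair) for two candidate k.
consensus_k2 <- c(0.02, 0.05, 0.95, 0.98, 1.00, 0.00, 0.50, 0.97)
consensus_k3 <- c(0.00, 0.01, 0.99, 1.00, 1.00, 0.00, 0.03, 0.98)

# PAC sketch: share of pairs whose consensus value lies in (lower, upper].
# The bounds 0.1 and 0.9 are an assumption made for this illustration.
pac <- function(v, lower = 0.1, upper = 0.9) {
    f <- ecdf(v)
    f(upper) - f(lower)
}

# Area under the ECDF step function on [0, 1].
ecdf_area <- function(v) {
    x <- sort(unique(c(0, v, 1)))   # breakpoints of the step function
    f <- ecdf(v)
    sum(diff(x) * f(head(x, -1)))   # the ECDF is constant on each [x_i, x_{i+1})
}

one_minus_pac  <- 1 - c(k2 = pac(consensus_k2), k3 = pac(consensus_k3))
area_increased <- ecdf_area(consensus_k3) - ecdf_area(consensus_k2)
```

In this toy case the k = 3 values sit closer to 0 or 1, so its 1-PAC is higher than that of k = 2, and the area under the ECDF grows slightly.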

The numeric values for all these statistics can be obtained by get_stats().

get_stats(res)

#> k 1-PAC mean_silhouette concordance area_increased Rand Jaccard

#> 2 2 0.662 0.836 0.911 0.4793 0.540 0.540

#> 3 3 0.686 0.822 0.870 0.3544 0.789 0.609

#> 4 4 0.580 0.653 0.780 0.1247 0.900 0.718

#> 5 5 0.656 0.530 0.683 0.0757 0.922 0.732

#> 6 6 0.710 0.652 0.782 0.0496 0.852 0.445

suggest_best_k() suggests the best \(k\) based on these statistics. The rules are as follows:

suggest_best_k(res)

#> [1] 2

The following shows the table of the partitions. The membership matrix (columns named p*) is inferred by the clue::cl_consensus() function with the SE method. Basically, each value in the membership matrix represents the probability of belonging to a certain group. The final class label for an item is the group with the highest probability.

In the get_classes() function, the entropy is calculated from the membership matrix and the silhouette score is calculated from the consensus matrix.
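As a small check on how the class and entropy columns relate to the membership matrix, the sketch below recomputes them for three rows of the k = 2 table in this report. The helper membership_entropy() is hypothetical. The base-k logarithm is an assumption inferred from the printed values, not taken from the cola source.

```r
# Membership probabilities copied from the k = 2 table in this report
# (printed with three decimals, so results match only approximately).
membership <- rbind(
    sample_39 = c(p1 = 0.344, p2 = 0.656),
    sample_52 = c(p1 = 0.992, p2 = 0.008),
    sample_71 = c(p1 = 0.000, p2 = 1.000)
)

# Shannon entropy with logarithm base k (k = number of groups),
# treating 0 * log(0) as 0. The base-k scaling is an assumption.
membership_entropy <- function(p) {
    nz <- p[p > 0]
    -sum(nz * log(nz)) / log(length(p))
}

entropy <- apply(membership, 1, membership_entropy)
class   <- apply(membership, 1, which.max)   # group with the highest probability

round(entropy, 4)
```

For these rows the result agrees with the printed entropy values (about 0.9286, 0.0672, and 0) and with the class labels 2, 1, and 2. Rows with tied rounded probabilities, such as sample_67, cannot be resolved from the printed table alone.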

cbind(get_classes(res, k = 2), get_membership(res, k = 2))

#> class entropy silhouette p1 p2

#> sample_39 2 0.9286 0.614 0.344 0.656

#> sample_40 2 0.9522 0.575 0.372 0.628

#> sample_42 2 0.0376 0.858 0.004 0.996

#> sample_47 2 0.0376 0.858 0.004 0.996

#> sample_48 2 0.0376 0.858 0.004 0.996

#> sample_49 2 0.9552 0.568 0.376 0.624

#> sample_41 2 0.0376 0.858 0.004 0.996

#> sample_43 2 0.0376 0.858 0.004 0.996

#> sample_44 2 0.0376 0.858 0.004 0.996

#> sample_45 2 0.0376 0.858 0.004 0.996

#> sample_46 2 0.0376 0.858 0.004 0.996

#> sample_70 2 0.0672 0.857 0.008 0.992

#> sample_71 2 0.0000 0.856 0.000 1.000

#> sample_72 2 0.0000 0.856 0.000 1.000

#> sample_68 2 0.0376 0.858 0.004 0.996

#> sample_69 2 0.0376 0.858 0.004 0.996

#> sample_67 2 1.0000 -0.047 0.500 0.500

#> sample_55 2 0.9393 0.599 0.356 0.644

#> sample_56 2 0.9393 0.599 0.356 0.644

#> sample_59 2 0.0376 0.858 0.004 0.996

#> sample_52 1 0.0672 0.992 0.992 0.008

#> sample_53 1 0.0938 0.988 0.988 0.012

#> sample_51 1 0.0672 0.992 0.992 0.008

#> sample_50 1 0.0672 0.992 0.992 0.008

#> sample_54 1 0.4690 0.887 0.900 0.100

#> sample_57 1 0.0672 0.992 0.992 0.008

#> sample_58 1 0.0672 0.992 0.992 0.008

#> sample_60 1 0.0672 0.992 0.992 0.008

#> sample_61 1 0.0672 0.992 0.992 0.008

#> sample_65 1 0.0672 0.992 0.992 0.008

#> sample_66 2 0.2423 0.838 0.040 0.960

#> sample_63 1 0.0672 0.992 0.992 0.008

#> sample_64 1 0.0672 0.992 0.992 0.008

#> sample_62 1 0.0672 0.992 0.992 0.008

#> sample_1 2 0.0672 0.857 0.008 0.992

#> sample_2 2 0.6148 0.744 0.152 0.848

#> sample_3 2 0.9323 0.619 0.348 0.652

#> sample_4 2 0.0672 0.857 0.008 0.992

#> sample_5 2 0.0376 0.858 0.004 0.996

#> sample_6 2 0.9323 0.619 0.348 0.652

#> sample_7 2 0.9358 0.604 0.352 0.648

#> sample_8 2 0.9580 0.562 0.380 0.620

#> sample_9 2 0.1633 0.849 0.024 0.976

#> sample_10 2 0.9323 0.619 0.348 0.652

#> sample_11 2 0.1633 0.849 0.024 0.976

#> sample_12 1 0.0672 0.992 0.992 0.008

#> sample_13 2 0.0376 0.858 0.004 0.996

#> sample_14 2 0.1633 0.849 0.024 0.976

#> sample_15 2 0.0376 0.858 0.004 0.996

#> sample_16 2 0.0376 0.858 0.004 0.996

#> sample_17 2 0.1414 0.852 0.020 0.980

#> sample_18 2 0.5842 0.788 0.140 0.860

#> sample_19 2 0.0376 0.858 0.004 0.996

#> sample_20 2 0.0376 0.858 0.004 0.996

#> sample_21 2 0.0000 0.856 0.000 1.000

#> sample_22 2 0.9552 0.562 0.376 0.624

#> sample_23 2 0.9323 0.619 0.348 0.652

#> sample_24 2 0.0376 0.858 0.004 0.996

#> sample_25 2 0.8955 0.650 0.312 0.688

#> sample_26 2 0.0376 0.858 0.004 0.996

#> sample_27 2 0.9580 0.562 0.380 0.620

#> sample_34 1 0.0672 0.992 0.992 0.008

#> sample_35 1 0.0672 0.992 0.992 0.008

#> sample_36 1 0.0672 0.992 0.992 0.008

#> sample_37 1 0.0672 0.992 0.992 0.008

#> sample_38 1 0.0938 0.988 0.988 0.012

#> sample_28 1 0.0672 0.992 0.992 0.008

#> sample_29 1 0.3431 0.928 0.936 0.064

#> sample_30 1 0.0672 0.992 0.992 0.008

#> sample_31 1 0.0672 0.992 0.992 0.008

#> sample_32 1 0.0672 0.992 0.992 0.008

#> sample_33 1 0.0672 0.992 0.992 0.008

cbind(get_classes(res, k = 3), get_membership(res, k = 3))

#> class entropy silhouette p1 p2 p3

#> sample_39 3 0.6287 0.8560 0.024 0.272 0.704

#> sample_40 3 0.6226 0.8634 0.028 0.252 0.720

#> sample_42 2 0.0747 0.8499 0.000 0.984 0.016

#> sample_47 2 0.0000 0.8528 0.000 1.000 0.000

#> sample_48 2 0.0237 0.8529 0.000 0.996 0.004

#> sample_49 3 0.6187 0.8644 0.028 0.248 0.724

#> sample_41 2 0.1529 0.8440 0.000 0.960 0.040

#> sample_43 2 0.0424 0.8515 0.000 0.992 0.008

#> sample_44 2 0.0747 0.8507 0.000 0.984 0.016

#> sample_45 2 0.0592 0.8516 0.000 0.988 0.012

#> sample_46 2 0.0592 0.8516 0.000 0.988 0.012

#> sample_70 3 0.5905 0.7804 0.000 0.352 0.648

#> sample_71 2 0.2261 0.8079 0.000 0.932 0.068

#> sample_72 2 0.2165 0.8120 0.000 0.936 0.064

#> sample_68 2 0.1411 0.8461 0.000 0.964 0.036

#> sample_69 2 0.0000 0.8528 0.000 1.000 0.000

#> sample_67 2 0.7841 0.0517 0.472 0.476 0.052

#> sample_55 3 0.5849 0.8599 0.028 0.216 0.756

#> sample_56 3 0.6226 0.8634 0.028 0.252 0.720

#> sample_59 2 0.6252 -0.3283 0.000 0.556 0.444

#> sample_52 1 0.2625 0.9576 0.916 0.000 0.084

#> sample_53 1 0.0747 0.9588 0.984 0.000 0.016

#> sample_51 1 0.0747 0.9588 0.984 0.000 0.016

#> sample_50 1 0.0592 0.9596 0.988 0.000 0.012

#> sample_54 1 0.3112 0.9525 0.900 0.004 0.096

#> sample_57 1 0.2878 0.9536 0.904 0.000 0.096

#> sample_58 1 0.2796 0.9551 0.908 0.000 0.092

#> sample_60 1 0.2878 0.9536 0.904 0.000 0.096

#> sample_61 1 0.1643 0.9627 0.956 0.000 0.044

#> sample_65 1 0.1643 0.9629 0.956 0.000 0.044

#> sample_66 2 0.5680 0.6667 0.024 0.764 0.212

#> sample_63 1 0.2711 0.9566 0.912 0.000 0.088

#> sample_64 1 0.2796 0.9551 0.908 0.000 0.092

#> sample_62 1 0.2711 0.9566 0.912 0.000 0.088

#> sample_1 3 0.5678 0.8231 0.000 0.316 0.684

#> sample_2 2 0.8616 0.4682 0.148 0.588 0.264

#> sample_3 3 0.3112 0.7825 0.004 0.096 0.900

#> sample_4 3 0.5327 0.8388 0.000 0.272 0.728

#> sample_5 2 0.1411 0.8461 0.000 0.964 0.036

#> sample_6 3 0.3112 0.7825 0.004 0.096 0.900

#> sample_7 3 0.5894 0.8601 0.028 0.220 0.752

#> sample_8 3 0.6264 0.8635 0.028 0.256 0.716

#> sample_9 3 0.4399 0.7249 0.000 0.188 0.812

#> sample_10 3 0.3112 0.7825 0.004 0.096 0.900

#> sample_11 3 0.5988 0.3704 0.000 0.368 0.632

#> sample_12 1 0.1647 0.9529 0.960 0.004 0.036

#> sample_13 2 0.0237 0.8529 0.000 0.996 0.004

#> sample_14 2 0.6244 0.2863 0.000 0.560 0.440

#> sample_15 2 0.1411 0.8461 0.000 0.964 0.036

#> sample_16 2 0.1163 0.8445 0.000 0.972 0.028

#> sample_17 2 0.3752 0.7568 0.000 0.856 0.144

#> sample_18 3 0.5692 0.8554 0.008 0.268 0.724

#> sample_19 2 0.0592 0.8510 0.000 0.988 0.012

#> sample_20 2 0.0000 0.8528 0.000 1.000 0.000

#> sample_21 2 0.1411 0.8476 0.000 0.964 0.036

#> sample_22 3 0.6596 0.8615 0.040 0.256 0.704

#> sample_23 3 0.3112 0.7825 0.004 0.096 0.900

#> sample_24 2 0.1529 0.8440 0.000 0.960 0.040

#> sample_25 3 0.7222 0.7093 0.032 0.388 0.580

#> sample_26 2 0.5138 0.4580 0.000 0.748 0.252

#> sample_27 3 0.6187 0.8644 0.028 0.248 0.724

#> sample_34 1 0.2261 0.9606 0.932 0.000 0.068

#> sample_35 1 0.2796 0.9580 0.908 0.000 0.092

#> sample_36 1 0.0424 0.9612 0.992 0.000 0.008

#> sample_37 1 0.0747 0.9588 0.984 0.000 0.016

#> sample_38 1 0.0983 0.9575 0.980 0.004 0.016

#> sample_28 1 0.0983 0.9575 0.980 0.004 0.016

#> sample_29 1 0.0983 0.9571 0.980 0.004 0.016

#> sample_30 1 0.0237 0.9608 0.996 0.000 0.004

#> sample_31 1 0.2165 0.9612 0.936 0.000 0.064

#> sample_32 1 0.0424 0.9621 0.992 0.000 0.008

#> sample_33 1 0.0747 0.9588 0.984 0.000 0.016

cbind(get_classes(res, k = 4), get_membership(res, k = 4))

#> class entropy silhouette p1 p2 p3 p4

#> sample_39 3 0.4819 0.67129 0.004 0.152 0.784 0.060

#> sample_40 3 0.2926 0.71108 0.004 0.096 0.888 0.012

#> sample_42 2 0.6994 0.56098 0.012 0.604 0.128 0.256

#> sample_47 2 0.2596 0.81643 0.000 0.908 0.024 0.068

#> sample_48 2 0.0336 0.82986 0.000 0.992 0.008 0.000

#> sample_49 3 0.2861 0.71000 0.004 0.092 0.892 0.012

#> sample_41 2 0.1545 0.81601 0.000 0.952 0.040 0.008

#> sample_43 2 0.4419 0.77663 0.000 0.812 0.104 0.084

#> sample_44 2 0.4673 0.76617 0.000 0.792 0.132 0.076

#> sample_45 2 0.3834 0.79510 0.000 0.848 0.076 0.076

#> sample_46 2 0.4100 0.78716 0.000 0.832 0.092 0.076

#> sample_70 3 0.5875 0.60802 0.000 0.204 0.692 0.104

#> sample_71 2 0.7146 0.52216 0.016 0.596 0.132 0.256

#> sample_72 2 0.7013 0.53504 0.012 0.604 0.132 0.252

#> sample_68 2 0.1356 0.81813 0.000 0.960 0.032 0.008

#> sample_69 2 0.0336 0.82955 0.000 0.992 0.008 0.000

#> sample_67 1 0.8033 -0.02386 0.488 0.212 0.020 0.280

#> sample_55 3 0.3619 0.66518 0.004 0.100 0.860 0.036

#> sample_56 3 0.3221 0.71204 0.004 0.100 0.876 0.020

#> sample_59 3 0.6280 0.50968 0.000 0.304 0.612 0.084

#> sample_52 1 0.4836 0.76925 0.672 0.000 0.008 0.320

#> sample_53 1 0.0336 0.79640 0.992 0.000 0.000 0.008

#> sample_51 1 0.0188 0.79765 0.996 0.000 0.000 0.004

#> sample_50 1 0.0188 0.79765 0.996 0.000 0.000 0.004

#> sample_54 1 0.6825 0.70709 0.556 0.008 0.088 0.348

#> sample_57 1 0.6439 0.72143 0.576 0.000 0.084 0.340

#> sample_58 1 0.6135 0.74118 0.608 0.000 0.068 0.324

#> sample_60 1 0.6523 0.71280 0.564 0.000 0.088 0.348

#> sample_61 1 0.4782 0.79838 0.780 0.000 0.068 0.152

#> sample_65 1 0.3808 0.80376 0.812 0.000 0.012 0.176

#> sample_66 4 0.7782 0.00808 0.080 0.408 0.052 0.460

#> sample_63 1 0.5173 0.76532 0.660 0.000 0.020 0.320

#> sample_64 1 0.6338 0.73656 0.600 0.000 0.084 0.316

#> sample_62 1 0.5271 0.76377 0.656 0.000 0.024 0.320

#> sample_1 3 0.3910 0.69878 0.000 0.156 0.820 0.024

#> sample_2 4 0.8174 0.49359 0.188 0.184 0.068 0.560

#> sample_3 3 0.5614 -0.26529 0.008 0.012 0.568 0.412

#> sample_4 3 0.3306 0.65895 0.000 0.156 0.840 0.004

#> sample_5 2 0.1545 0.81601 0.000 0.952 0.040 0.008

#> sample_6 3 0.5614 -0.26529 0.008 0.012 0.568 0.412

#> sample_7 3 0.3172 0.67541 0.004 0.112 0.872 0.012

#> sample_8 3 0.2466 0.70936 0.004 0.096 0.900 0.000

#> sample_9 4 0.6407 0.52600 0.008 0.048 0.424 0.520

#> sample_10 4 0.5577 0.45338 0.008 0.008 0.460 0.524

#> sample_11 4 0.6965 0.61441 0.008 0.100 0.344 0.548

#> sample_12 1 0.3916 0.72171 0.848 0.004 0.092 0.056

#> sample_13 2 0.0336 0.82986 0.000 0.992 0.008 0.000

#> sample_14 4 0.7178 0.60794 0.000 0.156 0.324 0.520

#> sample_15 2 0.1545 0.81601 0.000 0.952 0.040 0.008

#> sample_16 2 0.2408 0.80791 0.000 0.896 0.104 0.000

#> sample_17 2 0.5705 0.52086 0.000 0.712 0.108 0.180

#> sample_18 3 0.4046 0.70455 0.000 0.124 0.828 0.048

#> sample_19 2 0.4458 0.77258 0.000 0.808 0.116 0.076

#> sample_20 2 0.0188 0.82988 0.000 0.996 0.004 0.000

#> sample_21 2 0.1610 0.81865 0.000 0.952 0.032 0.016

#> sample_22 3 0.4821 0.66538 0.024 0.088 0.812 0.076

#> sample_23 3 0.5614 -0.26529 0.008 0.012 0.568 0.412

#> sample_24 2 0.1970 0.79947 0.000 0.932 0.060 0.008

#> sample_25 3 0.7376 0.47043 0.016 0.236 0.580 0.168

#> sample_26 3 0.6752 0.10797 0.000 0.440 0.468 0.092

#> sample_27 3 0.2861 0.71000 0.004 0.092 0.892 0.012

#> sample_34 1 0.4212 0.79874 0.772 0.000 0.012 0.216

#> sample_35 1 0.5783 0.77430 0.692 0.000 0.088 0.220

#> sample_36 1 0.0707 0.80135 0.980 0.000 0.000 0.020

#> sample_37 1 0.0336 0.79640 0.992 0.000 0.000 0.008

#> sample_38 1 0.2124 0.76243 0.924 0.000 0.008 0.068

#> sample_28 1 0.1807 0.77306 0.940 0.000 0.008 0.052

#> sample_29 1 0.2530 0.73173 0.888 0.000 0.000 0.112

#> sample_30 1 0.0469 0.80077 0.988 0.000 0.000 0.012

#> sample_31 1 0.3933 0.80105 0.792 0.000 0.008 0.200

#> sample_32 1 0.0927 0.80175 0.976 0.000 0.008 0.016

#> sample_33 1 0.0336 0.79640 0.992 0.000 0.000 0.008

cbind(get_classes(res, k = 5), get_membership(res, k = 5))

#> class entropy silhouette p1 p2 p3 p4 p5

#> sample_39 3 0.3589 0.76090 0.084 0.032 0.848 0.036 0.000

#> sample_40 3 0.2086 0.78722 0.000 0.028 0.928 0.016 0.028

#> sample_42 1 0.8187 -0.28465 0.388 0.284 0.168 0.160 0.000

#> sample_47 2 0.5495 0.64954 0.220 0.684 0.048 0.048 0.000

#> sample_48 2 0.0912 0.76262 0.012 0.972 0.016 0.000 0.000

#> sample_49 3 0.1729 0.78458 0.004 0.032 0.944 0.012 0.008

#> sample_41 2 0.1095 0.75438 0.012 0.968 0.012 0.008 0.000

#> sample_43 2 0.7095 0.53773 0.264 0.520 0.164 0.052 0.000

#> sample_44 2 0.7328 0.48667 0.228 0.496 0.220 0.056 0.000

#> sample_45 2 0.6027 0.63074 0.228 0.644 0.076 0.052 0.000

#> sample_46 2 0.6513 0.60323 0.228 0.604 0.112 0.056 0.000

#> sample_70 3 0.5802 0.62765 0.232 0.040 0.656 0.072 0.000

#> sample_71 1 0.8380 -0.28127 0.324 0.308 0.164 0.204 0.000

#> sample_72 2 0.8374 0.11846 0.316 0.320 0.168 0.196 0.000

#> sample_68 2 0.0693 0.75718 0.012 0.980 0.000 0.008 0.000

#> sample_69 2 0.1211 0.76119 0.024 0.960 0.016 0.000 0.000

#> sample_67 1 0.8192 0.18424 0.416 0.088 0.012 0.240 0.244

#> sample_55 3 0.3847 0.74394 0.056 0.064 0.844 0.028 0.008

#> sample_56 3 0.1706 0.78787 0.012 0.016 0.948 0.016 0.008

#> sample_59 3 0.6564 0.56616 0.256 0.092 0.588 0.064 0.000

#> sample_52 5 0.3867 0.57094 0.144 0.000 0.004 0.048 0.804

#> sample_53 5 0.4219 0.29396 0.416 0.000 0.000 0.000 0.584

#> sample_51 5 0.4201 0.30913 0.408 0.000 0.000 0.000 0.592

#> sample_50 5 0.4201 0.30913 0.408 0.000 0.000 0.000 0.592

#> sample_54 5 0.5809 0.49726 0.212 0.000 0.052 0.068 0.668

#> sample_57 5 0.5401 0.53295 0.184 0.000 0.044 0.064 0.708

#> sample_58 5 0.4775 0.55573 0.160 0.000 0.032 0.052 0.756

#> sample_60 5 0.5783 0.50833 0.200 0.000 0.056 0.068 0.676

#> sample_61 5 0.3530 0.54640 0.104 0.000 0.028 0.024 0.844

#> sample_65 5 0.1830 0.56053 0.068 0.000 0.000 0.008 0.924

#> sample_66 4 0.7379 0.12455 0.344 0.224 0.016 0.404 0.012

#> sample_63 5 0.3989 0.57051 0.144 0.000 0.008 0.048 0.800

#> sample_64 5 0.4978 0.54686 0.140 0.000 0.068 0.040 0.752

#> sample_62 5 0.4058 0.56953 0.144 0.000 0.008 0.052 0.796

#> sample_1 3 0.1997 0.78867 0.016 0.028 0.932 0.024 0.000

#> sample_2 4 0.6342 0.32452 0.320 0.088 0.012 0.564 0.016

#> sample_3 4 0.3636 0.70975 0.000 0.000 0.272 0.728 0.000

#> sample_4 3 0.3342 0.72663 0.008 0.136 0.836 0.020 0.000

#> sample_5 2 0.0854 0.75620 0.012 0.976 0.004 0.008 0.000

#> sample_6 4 0.3636 0.70975 0.000 0.000 0.272 0.728 0.000

#> sample_7 3 0.2616 0.76792 0.008 0.068 0.900 0.016 0.008

#> sample_8 3 0.1518 0.78845 0.012 0.020 0.952 0.016 0.000

#> sample_9 4 0.3098 0.76669 0.000 0.016 0.148 0.836 0.000

#> sample_10 4 0.2732 0.76298 0.000 0.000 0.160 0.840 0.000

#> sample_11 4 0.3193 0.76182 0.004 0.032 0.112 0.852 0.000

#> sample_12 5 0.6376 -0.00821 0.388 0.000 0.080 0.032 0.500

#> sample_13 2 0.0912 0.76262 0.012 0.972 0.016 0.000 0.000

#> sample_14 4 0.3476 0.74547 0.000 0.076 0.088 0.836 0.000

#> sample_15 2 0.0854 0.75620 0.012 0.976 0.004 0.008 0.000

#> sample_16 2 0.4514 0.62916 0.024 0.736 0.220 0.020 0.000

#> sample_17 2 0.4859 0.53344 0.032 0.740 0.032 0.192 0.004

#> sample_18 3 0.3716 0.76714 0.072 0.036 0.844 0.048 0.000

#> sample_19 2 0.7105 0.52595 0.216 0.536 0.192 0.056 0.000

#> sample_20 2 0.0798 0.76254 0.008 0.976 0.016 0.000 0.000

#> sample_21 2 0.0740 0.75819 0.004 0.980 0.008 0.008 0.000

#> sample_22 3 0.3514 0.74802 0.088 0.016 0.848 0.048 0.000

#> sample_23 4 0.3636 0.70975 0.000 0.000 0.272 0.728 0.000

#> sample_24 2 0.1095 0.75438 0.012 0.968 0.012 0.008 0.000

#> sample_25 3 0.7512 0.38966 0.348 0.092 0.448 0.108 0.004

#> sample_26 3 0.7167 0.47689 0.220 0.148 0.548 0.084 0.000

#> sample_27 3 0.1729 0.78458 0.004 0.032 0.944 0.012 0.008

#> sample_34 5 0.0798 0.57805 0.016 0.000 0.008 0.000 0.976

#> sample_35 5 0.2756 0.55600 0.024 0.000 0.092 0.004 0.880

#> sample_36 5 0.4530 0.34139 0.376 0.000 0.004 0.008 0.612

#> sample_37 5 0.4227 0.28696 0.420 0.000 0.000 0.000 0.580

#> sample_38 1 0.4430 -0.10635 0.540 0.000 0.000 0.004 0.456

#> sample_28 1 0.4448 -0.16001 0.516 0.000 0.000 0.004 0.480

#> sample_29 1 0.4882 -0.12385 0.532 0.000 0.000 0.024 0.444

#> sample_30 5 0.4530 0.33769 0.376 0.000 0.004 0.008 0.612

#> sample_31 5 0.1059 0.57677 0.020 0.000 0.008 0.004 0.968

#> sample_32 5 0.4520 0.37971 0.296 0.000 0.008 0.016 0.680

#> sample_33 5 0.4219 0.29396 0.416 0.000 0.000 0.000 0.584

cbind(get_classes(res, k = 6), get_membership(res, k = 6))

#> class entropy silhouette p1 p2 p3 p4 p5 p6

#> sample_39 3 0.3276 0.7517 0.004 0.008 0.812 0.004 0.008 0.164

#> sample_40 3 0.2650 0.8556 0.000 0.004 0.888 0.016 0.036 0.056

#> sample_42 6 0.4976 0.5731 0.044 0.080 0.044 0.048 0.016 0.768

#> sample_47 6 0.4928 0.2835 0.000 0.464 0.024 0.004 0.016 0.492

#> sample_48 2 0.1500 0.8854 0.000 0.936 0.012 0.000 0.000 0.052

#> sample_49 3 0.1198 0.8705 0.000 0.004 0.960 0.012 0.020 0.004

#> sample_41 2 0.0665 0.8971 0.000 0.980 0.000 0.008 0.008 0.004

#> sample_43 6 0.5687 0.5293 0.004 0.300 0.092 0.004 0.020 0.580

#> sample_44 6 0.6106 0.4981 0.000 0.316 0.172 0.000 0.020 0.492

#> sample_45 6 0.5328 0.4064 0.000 0.404 0.060 0.000 0.020 0.516

#> sample_46 6 0.5410 0.4208 0.000 0.396 0.068 0.000 0.020 0.516

#> sample_70 6 0.4642 0.1774 0.000 0.012 0.452 0.000 0.020 0.516

#> sample_71 6 0.6180 0.5174 0.036 0.080 0.068 0.108 0.028 0.680

#> sample_72 6 0.6467 0.5345 0.036 0.088 0.084 0.108 0.028 0.656

#> sample_68 2 0.0520 0.8989 0.000 0.984 0.000 0.008 0.008 0.000

#> sample_69 2 0.1701 0.8722 0.000 0.920 0.008 0.000 0.000 0.072

#> sample_67 6 0.7288 -0.0366 0.372 0.048 0.000 0.144 0.048 0.388

#> sample_55 3 0.4076 0.7915 0.000 0.084 0.800 0.004 0.064 0.048

#> sample_56 3 0.1036 0.8684 0.000 0.004 0.964 0.000 0.024 0.008

#> sample_59 6 0.5394 0.2297 0.000 0.036 0.412 0.000 0.044 0.508

#> sample_52 5 0.2520 0.7676 0.152 0.004 0.000 0.000 0.844 0.000

#> sample_53 1 0.1387 0.7832 0.932 0.000 0.000 0.000 0.068 0.000

#> sample_51 1 0.1387 0.7832 0.932 0.000 0.000 0.000 0.068 0.000

#> sample_50 1 0.1387 0.7832 0.932 0.000 0.000 0.000 0.068 0.000

#> sample_54 5 0.3296 0.7399 0.056 0.000 0.040 0.008 0.856 0.040

#> sample_57 5 0.3255 0.7557 0.076 0.000 0.040 0.004 0.852 0.028

#> sample_58 5 0.3436 0.7713 0.108 0.000 0.028 0.004 0.832 0.028

#> sample_60 5 0.3296 0.7399 0.056 0.000 0.040 0.008 0.856 0.040

#> sample_61 1 0.6001 -0.1745 0.464 0.000 0.036 0.008 0.416 0.076

#> sample_65 1 0.4983 -0.1871 0.484 0.000 0.000 0.008 0.460 0.048

#> sample_66 6 0.6888 0.3290 0.108 0.088 0.000 0.196 0.044 0.564

#> sample_63 5 0.2340 0.7701 0.148 0.000 0.000 0.000 0.852 0.000

#> sample_64 5 0.4936 0.7289 0.100 0.004 0.060 0.016 0.752 0.068

#> sample_62 5 0.2482 0.7701 0.148 0.004 0.000 0.000 0.848 0.000

#> sample_1 3 0.1624 0.8615 0.000 0.008 0.936 0.012 0.000 0.044

#> sample_2 4 0.7114 0.0846 0.148 0.024 0.004 0.396 0.048 0.380

#> sample_3 4 0.2278 0.8297 0.000 0.004 0.128 0.868 0.000 0.000

#> sample_4 3 0.3368 0.8074 0.000 0.124 0.828 0.016 0.004 0.028

#> sample_5 2 0.0520 0.8989 0.000 0.984 0.000 0.008 0.008 0.000

#> sample_6 4 0.2278 0.8297 0.000 0.004 0.128 0.868 0.000 0.000

#> sample_7 3 0.2979 0.8408 0.000 0.080 0.868 0.016 0.012 0.024

#> sample_8 3 0.1836 0.8644 0.000 0.004 0.928 0.012 0.008 0.048

#> sample_9 4 0.1124 0.8533 0.000 0.008 0.036 0.956 0.000 0.000

#> sample_10 4 0.1299 0.8533 0.004 0.004 0.036 0.952 0.004 0.000

#> sample_11 4 0.1180 0.8483 0.004 0.008 0.024 0.960 0.004 0.000

#> sample_12 1 0.5595 0.5130 0.632 0.000 0.028 0.016 0.080 0.244

#> sample_13 2 0.1500 0.8854 0.000 0.936 0.012 0.000 0.000 0.052

#> sample_14 4 0.1887 0.8348 0.000 0.024 0.020 0.932 0.016 0.008

#> sample_15 2 0.0520 0.8989 0.000 0.984 0.000 0.008 0.008 0.000

#> sample_16 2 0.4449 0.5167 0.000 0.696 0.216 0.000 0.000 0.088

#> sample_17 2 0.4492 0.7035 0.020 0.784 0.012 0.112 0.044 0.028

#> sample_18 3 0.4624 0.6378 0.000 0.004 0.724 0.024 0.060 0.188

#> sample_19 6 0.5552 0.5141 0.000 0.328 0.108 0.004 0.008 0.552

#> sample_20 2 0.1563 0.8825 0.000 0.932 0.012 0.000 0.000 0.056

#> sample_21 2 0.1371 0.8927 0.000 0.948 0.004 0.004 0.004 0.040

#> sample_22 3 0.3612 0.7648 0.016 0.000 0.804 0.012 0.016 0.152

#> sample_23 4 0.2420 0.8294 0.000 0.004 0.128 0.864 0.004 0.000

#> sample_24 2 0.0520 0.8989 0.000 0.984 0.000 0.008 0.008 0.000

#> sample_25 6 0.5010 0.4766 0.024 0.020 0.224 0.024 0.012 0.696

#> sample_26 6 0.5408 0.3514 0.000 0.080 0.384 0.004 0.008 0.524

#> sample_27 3 0.1198 0.8705 0.000 0.004 0.960 0.012 0.020 0.004

#> sample_34 5 0.5471 0.3041 0.408 0.004 0.004 0.016 0.512 0.056

#> sample_35 5 0.6976 0.4355 0.292 0.004 0.112 0.016 0.492 0.084

#> sample_36 1 0.1814 0.7656 0.900 0.000 0.000 0.000 0.100 0.000

#> sample_37 1 0.1267 0.7819 0.940 0.000 0.000 0.000 0.060 0.000

#> sample_38 1 0.1769 0.7206 0.924 0.000 0.000 0.004 0.012 0.060

#> sample_28 1 0.1625 0.7338 0.928 0.000 0.000 0.000 0.012 0.060

#> sample_29 1 0.3251 0.6699 0.828 0.000 0.000 0.008 0.040 0.124

#> sample_30 1 0.1663 0.7734 0.912 0.000 0.000 0.000 0.088 0.000

#> sample_31 5 0.5214 0.2145 0.444 0.004 0.004 0.012 0.496 0.040

#> sample_32 1 0.4613 0.5409 0.700 0.000 0.000 0.008 0.204 0.088

#> sample_33 1 0.1444 0.7830 0.928 0.000 0.000 0.000 0.072 0.000

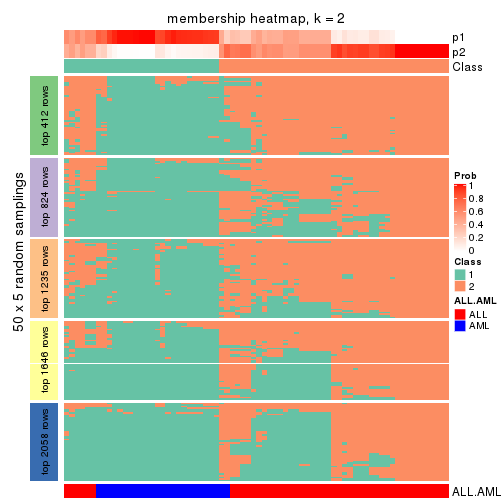

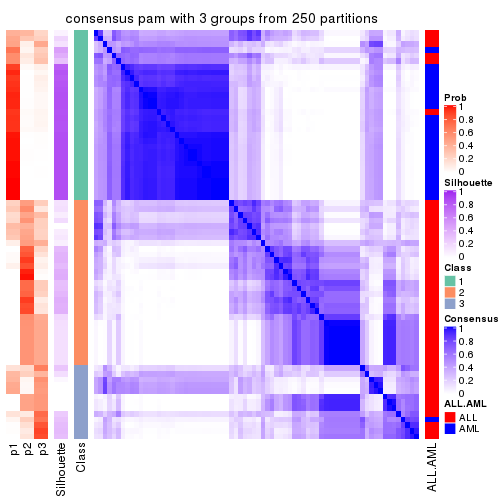

Heatmaps of the consensus matrix, which visualizes the probability that two samples are in the same group:

consensus_heatmap(res, k = 2)

consensus_heatmap(res, k = 3)

consensus_heatmap(res, k = 4)

consensus_heatmap(res, k = 5)

consensus_heatmap(res, k = 6)
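As a rough illustration of what a consensus matrix holds, the sketch below averages co-membership indicators over a handful of made-up partitions. The partitions matrix and the sample names are hypothetical, and this is a simplified view rather than the computation cola performs internally.

```r
# Hypothetical toy input: 4 resampled partitions (rows) of 5 samples (columns).
partitions <- rbind(
    c(1, 1, 2, 2, 2),
    c(1, 1, 1, 2, 2),
    c(2, 2, 1, 1, 1),   # same grouping as the first row with labels swapped
    c(1, 1, 2, 2, 1)
)
colnames(partitions) <- paste0("s", 1:5)

# For each partition, build a 0/1 matrix marking pairs that share a label.
co_membership <- lapply(seq_len(nrow(partitions)), function(i) {
    outer(partitions[i, ], partitions[i, ], "==") * 1
})

# The consensus value of a pair is the fraction of partitions that group it together.
consensus <- Reduce(`+`, co_membership) / length(co_membership)
consensus
```

Because only co-membership is counted, swapping the label names within a partition does not change the result. Values near 0 or 1 indicate pairs that are grouped consistently across partitions.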

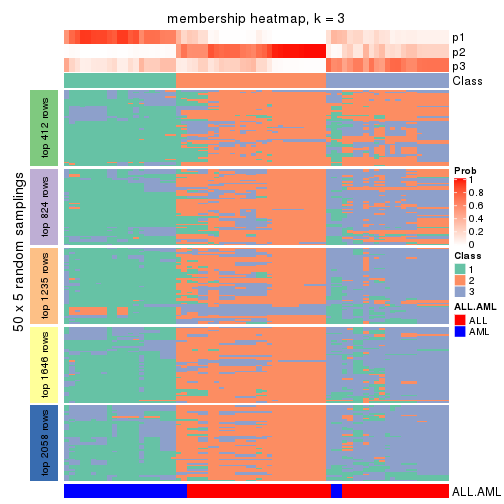

Heatmaps for the membership of samples in all partitions to see how consistent they are:

membership_heatmap(res, k = 2)

membership_heatmap(res, k = 3)

membership_heatmap(res, k = 4)

membership_heatmap(res, k = 5)

membership_heatmap(res, k = 6)

Once the classes for columns are available, we can look for signatures that differ significantly between classes and can serve as candidate markers for certain classes. The following are the heatmaps for signatures.

Signature heatmaps where rows are scaled:

get_signatures(res, k = 2)

get_signatures(res, k = 3)

get_signatures(res, k = 4)

get_signatures(res, k = 5)

get_signatures(res, k = 6)

Signature heatmaps where rows are not scaled:

get_signatures(res, k = 2, scale_rows = FALSE)

get_signatures(res, k = 3, scale_rows = FALSE)

get_signatures(res, k = 4, scale_rows = FALSE)

get_signatures(res, k = 5, scale_rows = FALSE)

get_signatures(res, k = 6, scale_rows = FALSE)

Compare the overlap of signatures from different k:

compare_signatures(res)

get_signatures() returns a data frame invisibly. To get the list of signatures, the function call should be assigned to a variable explicitly. In the following code, if the plot argument is set to FALSE, no heatmap is plotted and only the differential analysis is performed.

# code only for demonstration

tb = get_signatures(res, k = ..., plot = FALSE)

An example of the output of tb is:

#> which_row fdr mean_1 mean_2 scaled_mean_1 scaled_mean_2 km

#> 1 38 0.042760348 8.373488 9.131774 -0.5533452 0.5164555 1

#> 2 40 0.018707592 7.106213 8.469186 -0.6173731 0.5762149 1

#> 3 55 0.019134737 10.221463 11.207825 -0.6159697 0.5749050 1

#> 4 59 0.006059896 5.921854 7.869574 -0.6899429 0.6439467 1

#> 5 60 0.018055526 8.928898 10.211722 -0.6204761 0.5791110 1

#> 6 98 0.009384629 15.714769 14.887706 0.6635654 -0.6193277 2

...
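Since tb is an ordinary data frame, it can be filtered with base R. The sketch below rebuilds the six printed rows by hand so that it runs without cola. The FDR cutoff of 0.01 and the variable names strict, rows_by_km, and higher_in_2 are hypothetical choices for illustration.

```r
# Rows copied from the example output above (values as printed).
tb <- data.frame(
    which_row     = c(38, 40, 55, 59, 60, 98),
    fdr           = c(0.042760348, 0.018707592, 0.019134737,
                      0.006059896, 0.018055526, 0.009384629),
    mean_1        = c(8.373488, 7.106213, 10.221463, 5.921854, 8.928898, 15.714769),
    mean_2        = c(9.131774, 8.469186, 11.207825, 7.869574, 10.211722, 14.887706),
    scaled_mean_1 = c(-0.5533452, -0.6173731, -0.6159697, -0.6899429, -0.6204761, 0.6635654),
    scaled_mean_2 = c(0.5164555, 0.5762149, 0.5749050, 0.6439467, 0.5791110, -0.6193277),
    km            = c(1, 1, 1, 1, 1, 2)
)

# Keep signatures below a stricter, hypothetical FDR cutoff of 0.01.
strict <- tb[tb$fdr < 0.01, ]

# Split the matrix row indices by k-means row group.
rows_by_km <- split(tb$which_row, tb$km)

# Rows whose mean is higher in group 2 than in group 1.
higher_in_2 <- tb$which_row[tb$mean_2 > tb$mean_1]
```

The values in which_row index rows of the input matrix, so they can be used to subset the matrix returned by get_matrix(res).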

The columns in tb are:

- which_row: row indices corresponding to the input matrix.
- fdr: FDR for the differential test.
- mean_x: The mean value in group x.
- scaled_mean_x: The mean value in group x after rows are scaled.
- km: Row groups if k-means clustering is applied to rows.

UMAP plot which shows how samples are separated.

dimension_reduction(res, k = 2, method = "UMAP")

dimension_reduction(res, k = 3, method = "UMAP")

dimension_reduction(res, k = 4, method = "UMAP")

dimension_reduction(res, k = 5, method = "UMAP")

dimension_reduction(res, k = 6, method = "UMAP")

The following heatmap shows how subgroups are split as k increases:

collect_classes(res)

Test the correlation between subgroups and known annotations. If the known annotation is numeric, a one-way ANOVA test is applied; if it is discrete, a chi-squared contingency table test is applied.

test_to_known_factors(res)

#> n ALL.AML(p) k

#> SD:kmeans 71 2.08e-14 2

#> SD:kmeans 66 2.72e-13 3

#> SD:kmeans 63 9.95e-13 4

#> SD:kmeans 50 7.99e-11 5

#> SD:kmeans 56 5.63e-10 6

If matrix rows can be associated with genes, consider using functional_enrichment(res, ...) to perform functional enrichment on the signature genes. See this vignette for more detailed explanations.

The object with results only for a single top-value method and a single partition method can be extracted as:

res = res_list["SD", "skmeans"]

# you can also extract it by

# res = res_list["SD:skmeans"]

A summary of res and all the functions that can be applied to it:

res

#> A 'ConsensusPartition' object with k = 2, 3, 4, 5, 6.

#> On a matrix with 4116 rows and 72 columns.

#> Top rows (412, 824, 1235, 1646, 2058) are extracted by 'SD' method.

#> Subgroups are detected by 'skmeans' method.

#> Performed in total 1250 partitions by row resampling.

#> Best k for subgroups seems to be 3.

#>

#> Following methods can be applied to this 'ConsensusPartition' object:

#> [1] "cola_report" "collect_classes" "collect_plots"

#> [4] "collect_stats" "colnames" "compare_signatures"

#> [7] "consensus_heatmap" "dimension_reduction" "functional_enrichment"

#> [10] "get_anno_col" "get_anno" "get_classes"

#> [13] "get_consensus" "get_matrix" "get_membership"

#> [16] "get_param" "get_signatures" "get_stats"

#> [19] "is_best_k" "is_stable_k" "membership_heatmap"

#> [22] "ncol" "nrow" "plot_ecdf"

#> [25] "rownames" "select_partition_number" "show"

#> [28] "suggest_best_k" "test_to_known_factors"

The collect_plots() function collects all the plots made from res for all k (number of partitions) into one single page to provide an easy and fast comparison between different k.

collect_plots(res)

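The plots are:

- The first row: a plot of the ECDF (empirical cumulative distribution function) curves of the consensus matrix for each k and the heatmap of predicted classes for each k.
- The second row: heatmaps of the consensus matrix for each k.
- The third row: heatmaps of the membership matrix for each k.
- The fourth row: heatmaps of the signatures for each k.

All the plots in panels can be made by individual functions and they are plotted later in this section.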

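select_partition_number() produces several plots showing different statistics for choosing the “optimized” k. The statistics are:

- ECDF curves of the consensus matrix for each k;
- 1-PAC;
- mean silhouette score;
- concordance;
- area increased: denoting \(A_k\) as the area under the ECDF curve for the current k, the area increased is defined as \(A_k - A_{k-1}\);
- Rand index;
- Jaccard index.

The detailed explanations of these statistics can be found in the cola vignette.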

Generally speaking, a lower PAC score, a higher mean silhouette score, or a higher concordance corresponds to a better partition. The Rand index and the Jaccard index measure how similar the current partition is to the partition with k-1. If the two partitions are too similar, k is not accepted as better than k-1.

select_partition_number(res)

The numeric values for all these statistics can be obtained by get_stats().

get_stats(res)

#> k 1-PAC mean_silhouette concordance area_increased Rand Jaccard

#> 2 2 0.617 0.659 0.865 0.4970 0.512 0.512

#> 3 3 0.745 0.836 0.918 0.3352 0.763 0.560